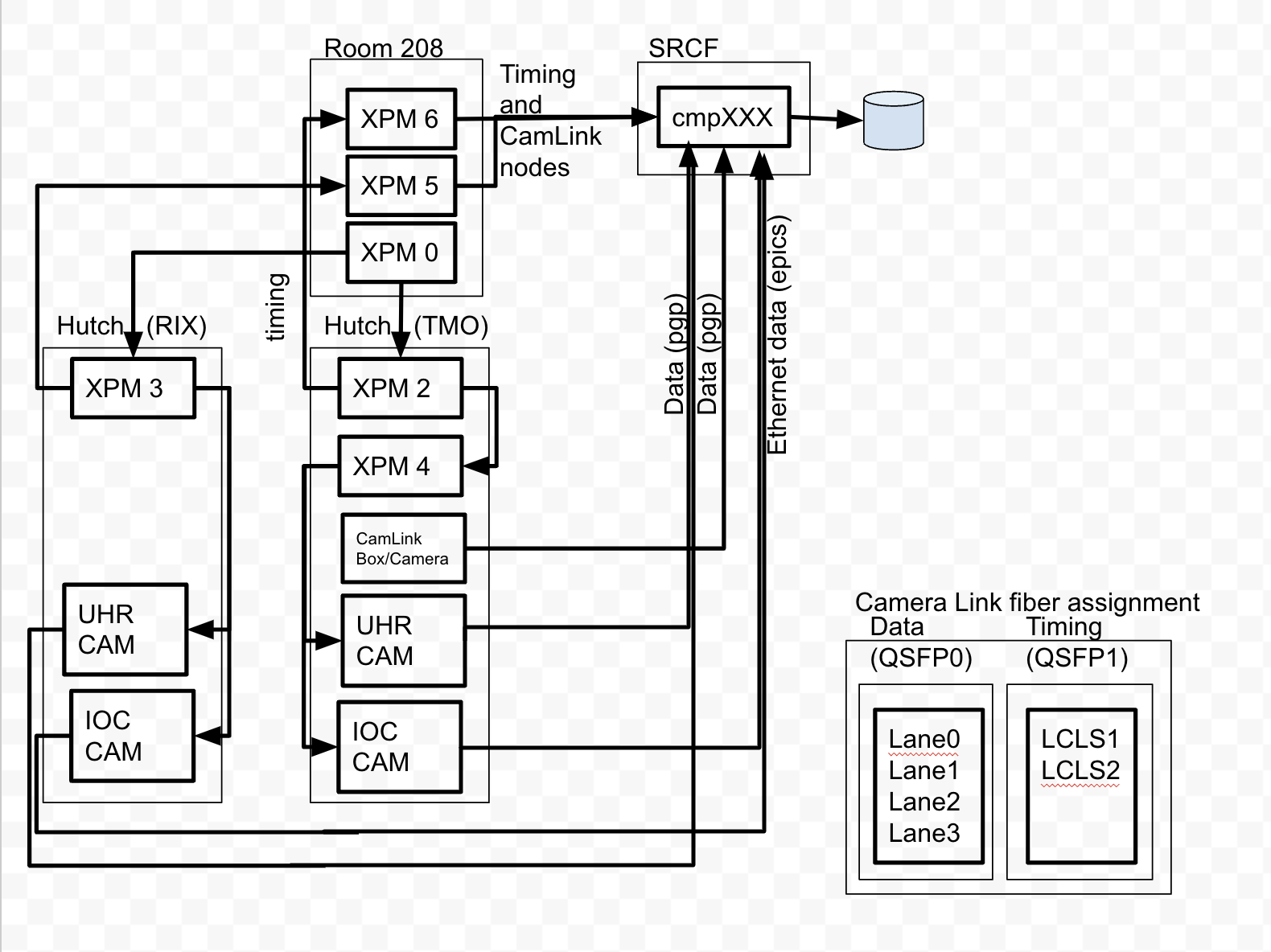

Timing and Data Connection Diagram

Running Multiple DAQ Instances In a Hutch Simultaneously

- Every cnf needs a different platform (for collection code)

- since platform corresponds to the primary readout group, this ensures that the primary readout group is different for different users, but you also have to make sure that other lower-rate readout groups are not used by different people at the same time

- All cnf files should use the same hutch supervisor xpm (e.g. xpm2 for TMO)

- Timing node can be shared, but each cnf should use a different fiber lane

- Different cnf files should not start up the drp executables that others want to use: this can create errors if file descriptors are in use by another user's executables

- Be careful with configdb setting conflicts: there is a configuration database per hutch/user defined in the cnf file (e.g. tmo/tmoopr) and there is also a prod and a dev configdb.

daqPipes and daqStats

Useful commands from Ric for looking at the history of DAQ Prometheus. Note that daqStats shows raw counts, while daqPipes shows percentages and also highlights (in red) detectors that are backed up (full buffer). daqPipes is more useful for understanding where bottlenecks are.

daqStats -p 0 --inst tmo --start '2020-11-11 13:50:00'

daqPipes -p 0 --inst tmo --start '2020-11-11 13:50:00'

If you leave off --start, you get the live/current data.

Once either of these apps is running, hitting 'h' or '?' will give you help with the allowed keystrokes and what the columns mean.

Commissioning New DRP Nodes

See Riccardo's notes here: https://drive.google.com/file/d/19x52MNf_UyTjzZN1f7NkAQkqHTFE6mZy/view?usp=share_link

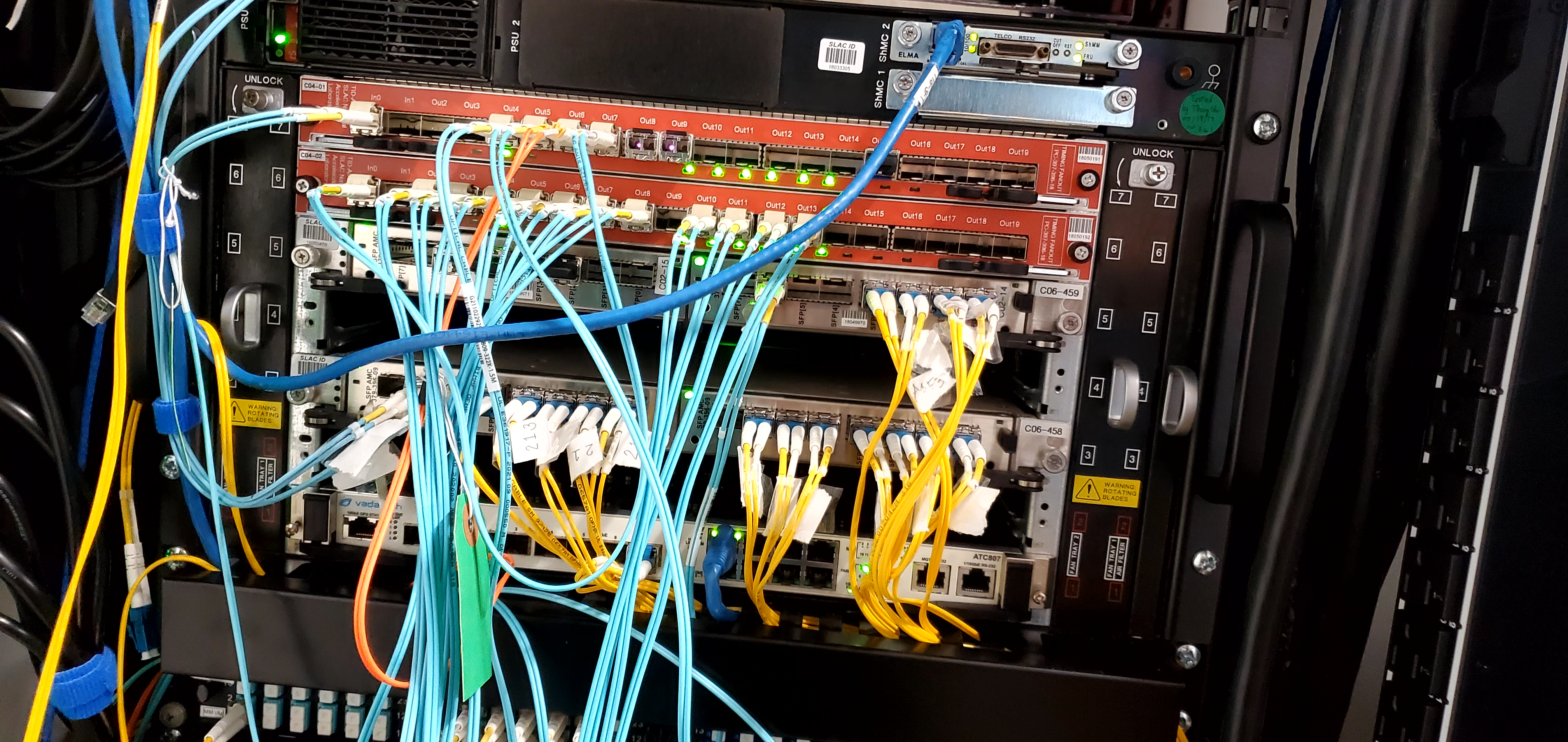

Hutch Patch Panels

TMO: https://drive.google.com/file/d/1hbbaltB7rknWg3xtvNtaaMDYXDoRfjgj/view

Data Flow and Monitoring

A useful diagram of the pieces of a DRP node that should be monitored via prometheus is here: https://docs.google.com/presentation/d/1LvvUsV4A1F-7cao4t6mzr8z2qFFZEIwLKR4pXazU3HE/edit?usp=sharing

TPR

Utilities

From Matt, in https://github.com/slaclab/evr-card-g2.

tprtest -d a -2 -n (for lcls2 timing, device a) (see help)

tprtest -d a -1 -n (for lcls1 timing)

tprtrig -h (generates triggers)

tprdump (dumps registers)

Updating Firmware

See: SLAC EVR and TPR

sudo /reg/g/pcds/package/slac_evr_upgrade/UPGRADE.sh /dev/tpra /reg/g/pcds/package/slaclab/evr-card-g2/images/latest-tpr

Generic

- "clearreadout" and "clear"

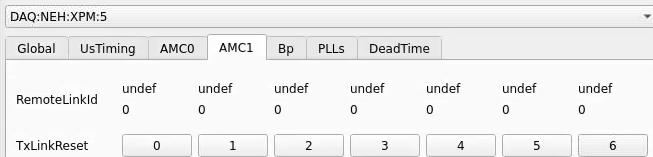

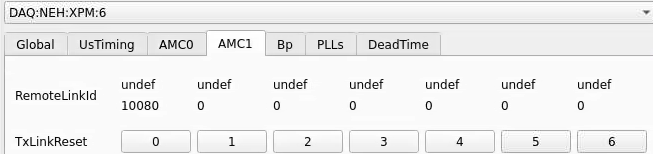

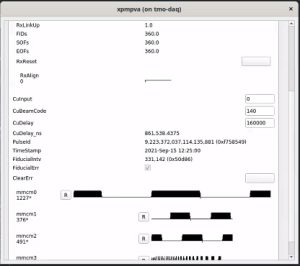

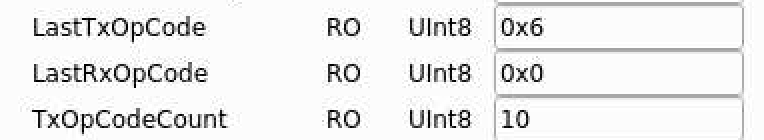

- need to reset the Rx and Tx links (in that order!) for the XPM front-panel. The order is important: we have learned that an RxLink reset can cause link CRC errors (see below), and a TxLink reset is needed afterwards to fix them. The TxLink reset causes the link to retrain using K characters

- look for deadtime

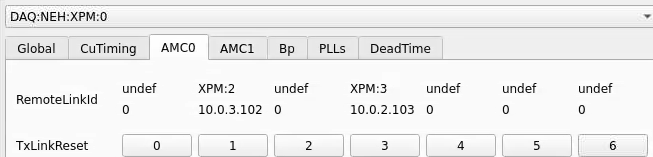

- check that the "partition" window (with the trigger-enable checkbox) is talking to the right XPM: look in the XPM window label, which is something like DAQ:LAB2:XPM:N, where N is the XPM number. A symptom of this number being incorrect is that the L0InpRate/L0AccRate remain at zeros when triggers are enabled. This number is a unique identifier within a hierarchy of XPMs.

- XPM is not configured to forward triggers ("LinkEnable" for that link on the XPM GUI)

- L0Delay set to 99

- DST Select (in PART window) set to "DontCare" (could be Dontcare/Internal)

- check processes in lab3-base.cnf are running

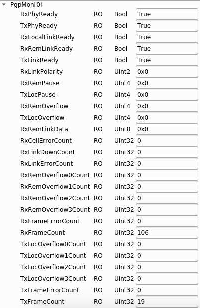

- run psdaq/build/psdaq/pgp/kcu1500/app/kcuStatus and kcuDmaStatus. In kcuDmaStatus, "blockspause" and "blocksfree" determine whether or not deadtime is set: if blocksfree drops below blockspause then deadtime is asserted. In the hsd window, "pgp last rx opcode" 0 means no backpressure and 1 means backpressure. Watch for a non-zero locPause, which causes deadtime.

- check for multiple drp executables

- clearReadout broadcasts a message to receiving kcu's telling them to reset timing-header FIFOs.

- if running "drp" executable, check that lane mask is correct

- if events are showing up "sporadically" look for CRC errors from "kcuSim -s -d /dev/datadev_0". We have seen this caused by doing an XPM RxLink reset without a later TxLink reset.

- for the pgp driver this parameter needs to be increased in /etc/sysctl.conf:

[root@drp-neh-cmp005 cpo]# grep vm /etc/sysctl.conf

vm.max_map_count=1000000

[root@drp-neh-cmp005 cpo]#

Connect Timeout Issues

On May 20th 2022 we found that the RIX connect timeout was near the edge and it was caused by the PVA "andor vls" detector. Ric wrote about this:

Thanks for looking into this, Chris. The only thing I’ve been able to come up with on a quick look is that the time is maybe going into setting up the memory region for the DRP to share the event with the MEBs. I think this is mostly libfabric manipulating the MMU for this already allocated space (the pebble). Is the event buffer size maybe particularly large compared to the other DRPs? I’m guessing that since it’s an Andor, maybe 8 MB? Not sure what ‘vls’ does to it. If the trigger rate for this DRP isn’t high (10 Hz?), we could maybe speed this step up by lowering the number of DMA buffers so that fewer MMU entries are needed.

DAQ Misconfiguration Issues

Default plan: high-rate detectors get L0Delay 100, low-rate detectors get L0Delay 0. Lower L0Delay numbers increase pressure on buffering which can result in deadtime and dropped Disable-phase2 transitions.

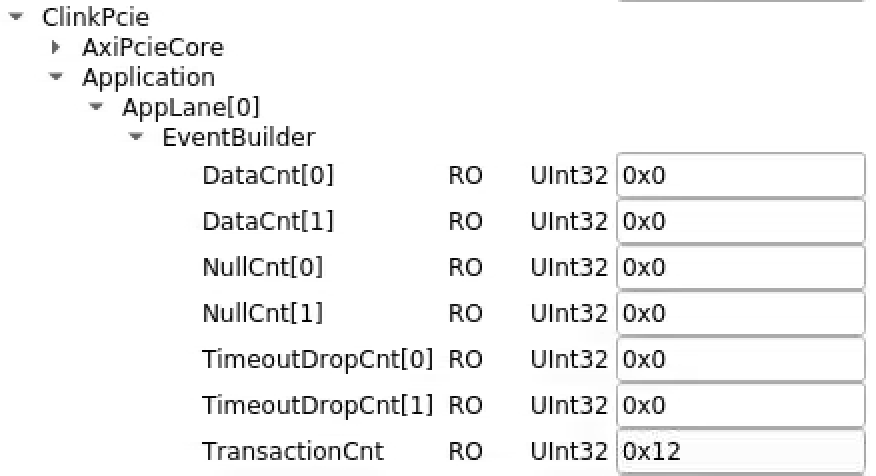

Jumping L1Count

In general, this error message is a sign that the back pressure mechanism is not working correctly and buffers are being overwritten. We have also seen it when the buffer size allocated in tdetsim.service is smaller than the event data coming in the fiber. L0Delay being set too small is another possible cause, but other firmware "high water mark" buffer parameters listed in the next section can be another. See TriggerDelays for a discussion of L0Delay.

If you also see messages like *** corrupt EvtBatcherOutput: 4056 17039628, it may be due to the DMA buffer size being too small for the data coming from the detector. In this case, try increasing the cfgSize value found in the corresponding *.service file. A reduction in the cfgRxCount value may be needed to allow the entire block of DMA buffers to fit in memory.

Buffer Management Registers (a.k.a. "high water mark")

Every detector should have XpmPauseThresh: it was put in to protect a buffer of xpm messages. It gets OR'd together with the data pause threshold, so the two can be difficult to disentangle in general.

A small complication to keep in mind: the tmo hsd’s in xpm4 have an extra trigger delay that means they need a different start_ns. The same delay effect happens for the deadtime signal in the reverse direction. In some ideal world those hsd’s would need a different high-water-mark, or we simplify and set the high-water-mark for all hsd’s to the required xpm4 values.

Two methods to test dead time behavior of a new detector:

- stop/resume a drp process with SIGSTOP/SIGCONT

- put in a sleep in the L1Accept portion of the PGPReader loop (not the batch portion)

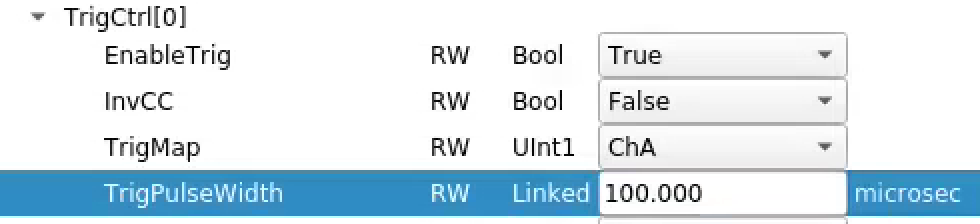

xpm

- L0delay

- there is no corresponding CuDelay for superconducting beam (because the Cu system has 1ms of "early notice", but superconducting has 100us of "early notice")

timing system

- matt thinks it is "pauseThr" visible in kcuSim (currently set to 10)

camlink gateway

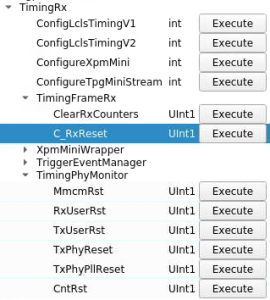

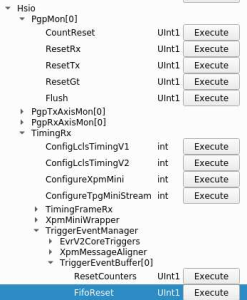

- need to protect timing system buffers in kcu1500 with ClinkPcie->Application->AppLane[n]->XpmPauseThresh

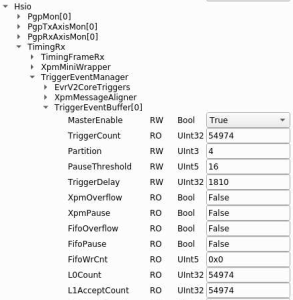

- need to protect data buffers with ClinkPcie->Hsio->TimingRx->TriggerEventManager->TriggerEventBuffer[n]->PauseThreshold

wave8

We think the full signal to the XPM is the logical-OR of the following fifo high-water-mark settings:

- xpm PauseThreshold in the TriggerEventManager (currently 16) needs to be set (think this is the fifo for the xpm messages)

- waveform FifoPauseThreshold (currently 255). seemed to need to lower this to 127?

- For "integrators":

- ProcFifoPauseThrHld

- IntFifoPauseThrHld

hsd

- in hsdpva "full_event" (currently 8) and "full_size" (currently 2048): these are "remaining" buffers, so when either of these ("or") drops below this value then deadtime is asserted. need two parameters because the FEX is variable length.
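The two-threshold rule described above can be written as a small predicate. This is only a sketch of the logic as stated in these notes, not the firmware implementation: the function and argument names are hypothetical, and the defaults are simply the "currently" values quoted above.

```python
def hsd_asserts_deadtime(free_events, free_size, full_event=8, full_size=2048):
    """Return True if the hsd would assert deadtime under the rule above.

    free_events / free_size are the *remaining* buffer counts (hypothetical
    argument names). Deadtime is asserted when either one drops below its
    threshold; two thresholds are needed because the FEX output is
    variable length.
    """
    return free_events < full_event or free_size < full_size


# Plenty of free events, but almost no free space: still dead.
print(hsd_asserts_deadtime(free_events=100, free_size=2047))
```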

epix100

- Matt writes: The timing fifo is protected by TriggerEventBuffer[0].PauseThreshold. The data fifo is protected by a watermark of half of the DRAM depth on the KCU card, Matt believes it can be configured but the rogue register is currently unclear.

- Note that XpmOverflow (timing) and FifoOverflow (data) should never happen if the xpm-timing and data buffers are properly protected by dead time. These "latch" and are reset by the ClearReadout transition.

- XpmPause (timing) and FifoPause (data) should become true once the high-water-mark is reached and false whenever we are below the high-water-mark (i.e. not latched). The logical-OR of these two is the dead time signal to the xpm.

epixhr

- to be determined

high rate encoder

- timing buffers protected by the usual TriggerEventBuffer PauseThreshold. For the data buffer Matt says: The high-rate-encoder pauses at 4 event buffers (remaining or possibly 124 remaining). It's not configurable. https://github.com/slaclab/high-rate-encoder-dev/blob/f8da4f5dbda9db166e94447320e86057fab78ec5/firmware/shared/rtl/App.vhd#L219C1-L228C1

Study Of Other Misconfigurations

May 19, 2023: in a working meeting, monarin, melchior, valmar, and cpo introduced deliberate misconfigurations in the DAQ to understand its behavior.

gate_ns

- for hsd Matt says that the firmware supports overlapping waveforms, so if gate_ns is longer than the time between triggers it should behave correctly. We tried to make gate_ns large enough to do that by running at 1MHz (1000ns between triggers) and setting both raw/fex gate_ns to 3000ns. Both appeared to behave correctly, until we set the fex threshold low.

- for piranha, if we made gate_ns longer than the time between triggers we got deadtime from the correct detector, and disable timed out only for that detector. My guess is that we dropped triggers and so would be off-by-one, but we don't know that for sure

Slow DRP FEX

Introduced sleep(10000) in the piranha _event() method. This gave chaotic deadtime, not from the piranha(!) but from epicsarch. daqPipes showed a backup in the pre-fex-worker piranha buffers, but we think the clearest indication of the source of the problem is a low "%BatchesInFlight" to the TEB. This perhaps makes sense, because the TEB is "eager" to receive the batches from the bottleneck detector.

Also see TEB crashes where it is unclear who is the bottleneck.

Bad HSD FEX Params

Did this by setting low thresholds in tmo hsd_3. When there was too much data we saw a low "%BatchesInFlight" to the TEB, which is perhaps the clearest indication of the problem. Can also get TEB crashes (where it is unclear who is responsible), and for hsd_5 with a low threshold we saw DMA buffer-overflow crashes (where it is clear who is responsible).

L0Delay

To be done

Timing System kcu1500 (or "sim")

- Matt's timing system firmware is available in GitHub here: https://github.com/slaclab/l2si-drp/releases/tag/v4.0.2

- When only one datadev device is found in /dev or /proc when two are expected, the BIOS PCIe bifurcation parameter may need to be changed from "auto" or "x16" to "x8x8" for the NUMA node (slot) containing the PCIe bus holding the KCU card

- If the BIOS PCIe bifurcation parameter seems to be missing (was the case for the SRCF DRP machines), the BIOS version may be out of date and need updating. See Updating the BIOS.

- kcuSim -t (resets timing counters)

- In some cases has fixed link-unlocked issues: kcuSim -T (reset timing PLL)

- kcuSim -s (dumps stats)

- kcuSim -c (setup clock synthesizers)

- Watch for these errors: RxDecErrs 0 RxDspErrs 0

- reload the driver with "systemctl restart tdetsim"

- currently driver is in /usr/local/sbin/datadev.ko, should be in /lib/modules/<kernel>/extra/

- reloading the driver does a soft-reset of the KCU (resetting buffer pointers etc.).

- if the soft-reset doesn't work, power-cycle is the hard-reset.

- program with this command: "python software/scripts/updatePcieFpga.py --path ~weaver/mcs/drp --dev /dev/datadev_1" (datadev_1 if we're overwriting a TDET kcu, or could be a datadev_0 if we're overwriting another firmware image)

Using TDet with pgpread

Need to enable DrpTDet detectors with "kcuSim -C 2" or "kcuSim -C 2,0,0". The first argument is the readout group, the second argument is the sim-length (0 for real data) and the third argument is the linkmask (can be in hex). Use a readout group >7 to disable, e.g. "kcuSim -C 8,0,0xff" to disable all.

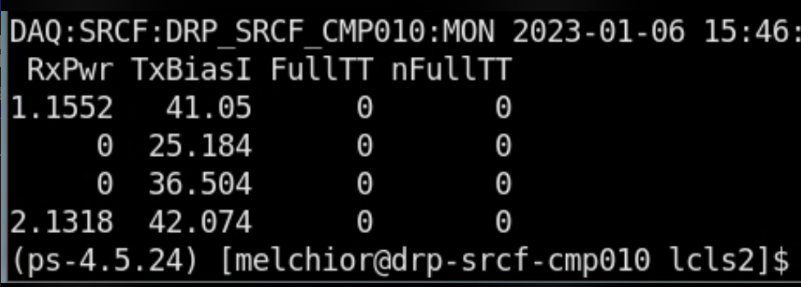

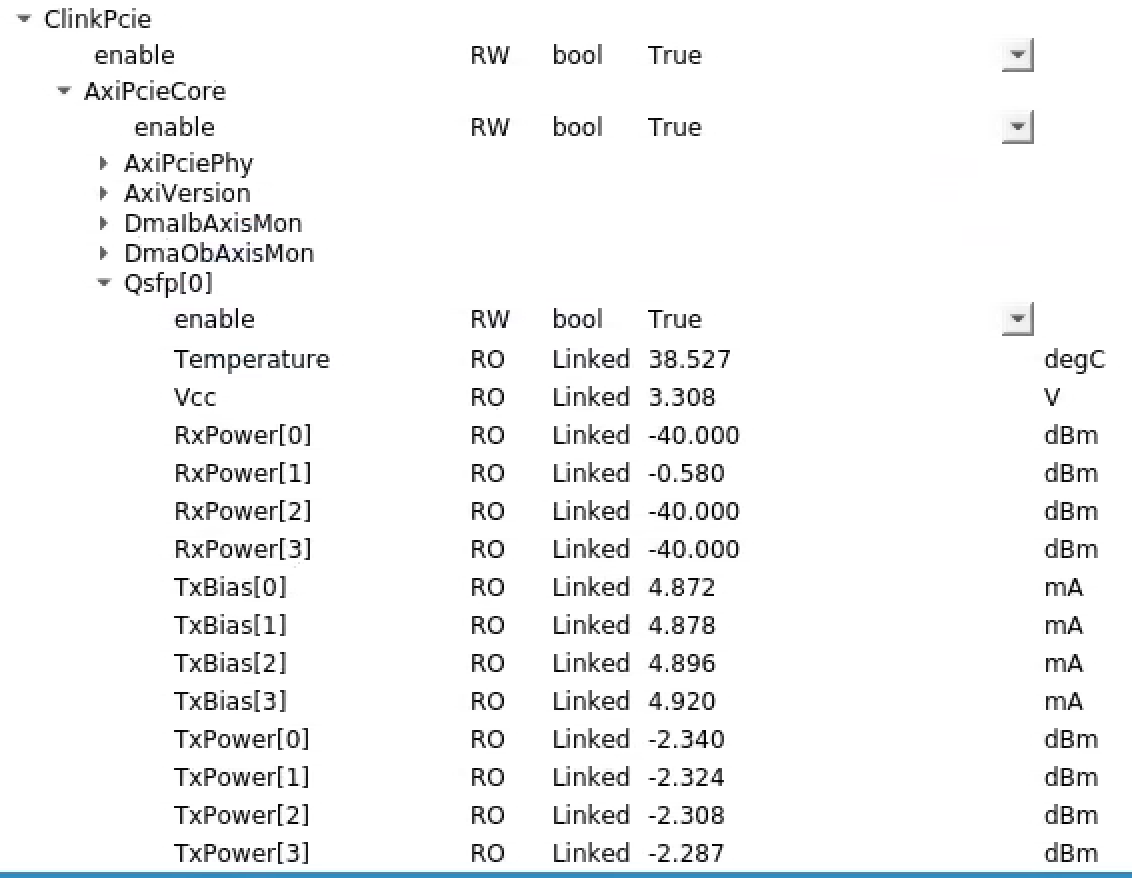

Fiber Optic Powers

To see the optical powers Matt says we should run the software in psdaq/psdaq/pykcu (the second argument is a base name for exported epics vars). Same commands work for hsd kcu1500. NOTE: cpo thinks that we may have to use datadev_1 here. I tried with datadev_0 but received an epics timeout from pvget. NOTE: Fiber power readout only works with QSFP's not the "SFP converter" modules we typically use for tdetsim kcu's.

(ps-4.5.11) claus@drp-srcf-cmp005:srcf$ pykcu -d /dev/datadev_1 -P DAQ:SRCF &

Start: Started zmqServer on ports 9103-9105

ClkRates: (0.0, 0.0)

RTT: ((0, 0), (0, 0), (0, 0), (0, 0))

2022-03-16T18:24:31.976 Using dynamically assigned TCP port 56828.

Then run pvget to see optical powers:

(ps-4.5.11) claus@drp-srcf-cmp005:srcf$ pvget DAQ:SRCF:DRP_SRCF_CMP005:MON

DAQ:SRCF:DRP_SRCF_CMP005:MON 2022-03-16 18:24:31.983

RxPwr TxBiasI FullTT nFullTT

1.017 37.312 0 0

0.424 37.376 0 0

0.3964 38.272 0 0

0.3208 36.992 0 0

For a timing system node we would have only expected fiber power on the first lane, but Riccardo saw power on the first and fourth. Matt writes about this: "I agree with your observation and the conversion formula. In general, I'm finding the RxPwr number on lane 0 is inconsistent or suspicious.". (The conversion formula is the one we use for xpm: dBm = 10*log10(val/1mW).)
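The conversion formula quoted above can be checked with a few lines of Python. This is a minimal sketch that assumes the RxPwr values reported by pvget are in mW (as the "val/1mW" in the formula suggests); the function name is hypothetical.

```python
import math


def mw_to_dbm(power_mw):
    """Convert an optical power in mW to dBm: 10*log10(P / 1 mW)."""
    if power_mw <= 0:
        # No light (or a bad reading): log10 is undefined, report -inf.
        return float("-inf")
    return 10.0 * math.log10(power_mw)


# RxPwr column from the pvget output above (assumed to be in mW).
for rx in (1.017, 0.424, 0.3964, 0.3208):
    print(f"{rx:7.4f} mW -> {mw_to_dbm(rx):6.2f} dBm")
```

By construction 1 mW corresponds to 0 dBm, so readings just above 1 come out slightly positive and smaller readings come out negative.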

this is the correct firmware to program into the kcu:

drp-neh-cmp001:~$ ls -rtl ~weaver/mcs/*TDet*2020*

-rw-r--r-- 1 weaver ec 31345008 Jun 12 2020 /cds/home/w/weaver/mcs/DrpTDet-20200612_primary.mcs

-rw-r--r-- 1 weaver ec 31345008 Jun 12 2020 /cds/home/w/weaver/mcs/DrpTDet-20200612_secondary.mcs

drp-neh-cmp001:~$

Rogue

A register-reading devGui can be run at the same time the daq is running. You may need to click "read all" to update the register values, and also update the port number with what your instance of the rogue software in the daq prints out:

python -m pyrogue gui --server='localhost:9103'

A useful introduction to rogue: https://docs.google.com/presentation/d/1dyNqnSorvWl0j6kYO3NItaAnzBfNsMmWjGOJtaxrFaI/edit#slide=id.g172bb84825_0_48

KCU Firmware

NOTE: Some KCU firmware (notably the hsd image named DrpPgpIlv) is reprogrammed over /dev/datadev_1. If an attempt to update the firmware fails with updatePcieFpga.py throwing 'ValueError: Invalid promType', try again with '--dev /dev/datadev_1' added to the arguments. Also, some images don't automatically detect the prom type. A general KCU firmware reprogramming command would be:

python software/scripts/updatePcieFpga.py --path ~weaver/mcs --dev /dev/datadev_1

As of Jan. 20, 2021, Matt has production DrpTDet and DrpPgpIlv firmware here:

- https://github.com/slaclab/l2si-drp

- ~weaver/mcs/DrpTDet-20200612*.mcs (tdetsim)

- /afs/slac/u/ec/weaver/projects/cameralink-gateway/firmware/targets/ClinkKcu1500Pgp2b/images/ClinkKcu1500Pgp2b-0x04090000-20201125220148-weaver-dirty*.mcs (timetool opal, but compatible with normal opal ... he will merge into Larry's repo).

Riccardo's notes:

one can find the KCU firmware in https://github.com/slaclab/pgp-pcie-apps/releases

in this case provide with --path the folder where the firmware is stored

in case of promType error one can typically fix it by switching to the other datadev_N interface, otherwise can add "--type SPIx8"

Whenever updating the firmware via JTAG, one should follow the same instructions as per this link

High Speed Digitizer (HSD) Firmware Programming

Programming HSD Firmware in LCLS Hutches

and use: MT25QU512 x2_x4_x8

datadev Driver

- use "systemctl list-unit-files" to see if tdetsim.service or kcu.service is enabled

- associated .service files are in /usr/lib/systemd/system/

- to see if events are flowing from the hardware to the software: "cat /proc/datadev_0" and watch "Tot Buffer Use" counter under "Read Buffers"

- if you see the error "rogue.GeneralError: AxiStreamDma::AxiStreamDma: General Error: Failed to map dma buffers. Increase vm map limit: sysctl -w vm.max_map_count=262144": this can be caused by that parameter being too low, but it can also be caused by loading the driver with CfgMode=1 in tdetsim.service (we use CfgMode=2). This CfgMode parameter has to do with the way memory gets cached.

- make sure the tdetsim.service (or kcu.service) is the same as another working node

- make sure that the appropriate service is enabled (see first point)

- check that the driver in /usr/local/sbin/datadev.ko is the same as a working node

- check that /etc/sysctl.conf has the correct value of vm.max_map_count (NOTE: after changing sysctl.conf parameter they need to be reloaded with "sudo sysctl -p". Values can be checked with, e.g., sysctl vm.max_map_count)

- It seems that vm.max_map_count must be at least 4K (the page size = the MMU granularity, perhaps?) larger than the datadev service's cfgRxCount value, and in some cases much larger (doubled or more) than the cfgRxCount value if Rogue is also used in the executable

- we have also seen this error when the datadev.ko buffer sizes and counts were configured incorrectly in tdetsim.service.

- have also seen this error running opal updateFebFpga.py because it loops over 4 lanes which increases the usage of vm.max_map_count by 4. devGui and DAQ worked fine because they only used one lane. worked around it by hacking the number of lanes in the script to 1. Ryan Herbst is thinking about a better fix.

- if you see the error "Failed to map dma buffers: Invalid argument"

- we think we see this if the buffer sizes in kcu.service and tdetsim.service are not multiples of 4kB (a page-size, perhaps?).

- For high rate running, many DMA buffers (cfgRxCount parameter) are needed to avoid deadtime. The number of DMA buffers is also used to size the DRPs' pebble, so slow but large DRPs like PvaDetector and EpicsArch need a comparatively small cfgRxCount value. Neglecting to take this into account can result in attempting to allocate more memory than the machine has, resulting in the DRP throwing std::bad_alloc.

- The number of buffers returned by dmaMapDma() is the sum of the cfgTxCount and the cfgRxCount values. The DRP code rounds this up to the next power of 2 (if it's not already a power of 2) and uses the result to allocate the number of pebble buffers. To avoid wasting up to half the allocation, set the sum of cfgTxCount and cfgRxCount to a power of 2.

- DMA buffers can be small (cfgSize parameter) for most (all?) tdetsim applications. 16 KB is usually sufficient.
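The power-of-2 sizing rule from the list above can be illustrated with a short Python sketch. It only mirrors the arithmetic described in these notes (round cfgTxCount + cfgRxCount up to the next power of 2); it is not the DRP code itself, and the function names are hypothetical.

```python
def next_power_of_two(n):
    """Smallest power of 2 that is >= n, for n >= 1."""
    return 1 << (n - 1).bit_length()


def pebble_buffers(cfg_tx_count, cfg_rx_count):
    """Number of pebble buffers allocated for the given driver settings,
    following the rounding rule described in the notes above."""
    return next_power_of_two(cfg_tx_count + cfg_rx_count)


def rx_count_for(exponent, cfg_tx_count):
    """cfgRxCount that makes cfgTxCount + cfgRxCount exactly 2**exponent."""
    return 2 ** exponent - cfg_tx_count


# Well-chosen values: the sum is already a power of 2, nothing is wasted.
print(pebble_buffers(4, rx_count_for(20, 4)))

# Poorly chosen values: the sum is just over 2**20, so the allocation doubles.
print(pebble_buffers(4, 2 ** 20))
```

With cfgTxCount=4, choosing cfgRxCount=2**N - 4 keeps the pebble allocation at exactly 2**N buffers.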

An (updated) example kcu.service for HSDs:

drp-srcf-cmp005:~$ cat /lib/systemd/system/kcu.service

[Unit]

Description=KCU1500 Device Manager

Requires=multi-user.target

After=multi-user.target

[Service]

Type=forking

ExecStartPre=-/sbin/rmmod datadev.ko

ExecStart=/sbin/insmod /usr/local/sbin/datadev.ko cfgTxCount=4 cfgRxCount=1048572 cfgSize=8192 cfgMode=0x2

ExecStartPost=/usr/local/sbin/kcuStatus -I -d /dev/datadev_1

# To do: The irqbalance service will defeat the following unless it is disabled or the IRQs are banned in /etc/sysconfig/irqbalance

ExecStartPost=-/usr/bin/sh -c "/usr/bin/echo 4 > /proc/irq/`grep datadev_0 /proc/interrupts | /usr/bin/cut -d : -f 1 | /usr/bin/tr -cd [:digit:]`/smp_affinity_list"

ExecStartPost=-/usr/bin/sh -c "/usr/bin/echo 5 > /proc/irq/`grep datadev_1 /proc/interrupts | /usr/bin/cut -d : -f 1 | /usr/bin/tr -cd [:digit:]`/smp_affinity_list"

KillMode=none

IgnoreSIGPIPE=no

StandardOutput=syslog

StandardError=inherit

[Install]

WantedBy=multi-user.target

Since 6/8/22, the DAQ code was updated to eliminate a separate buffer allocation mechanism (including the associated wait condition when the pool is empty) for the small input/result data buffers for/from the TEB. These buffers are now allocated using the same buffer index with which the pebble is allocated. Since this index is now shared with the TEB, this change has put constraints on its range (set by the cfgRxCount parameter in the tdetsim/kcu service file) across DRPs: a DRP in the common readout group must have the largest range and DRPs in subsidiary/slower readout groups must have smaller or the same range.

Roughly, if a DRP chain is stalled for some reason, the DMA buffers will be consumed at the trigger rate. So in the above example, the HSDs will start back pressuring into firmware after roughly 1 second given a trigger rate of 1 MHz. For a given trigger rate, there is no clear benefit to having one DRP have more or fewer DMA buffers than another. The first one to run out of buffers will cause backpressure and ultimately inhibit triggers, leaving additional buffers on other DRPs inaccessible. Thus, I suggest making the number of DMA buffers (cfgRxCount + cfgTxCount) the same for each DRP in a given readout group and roughly keeping the cfgRxCounts in the same ratio as the trigger rates of the groups (while still following the 2**N - cfgTxCount rule above).
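The "roughly 1 second" estimate is just the buffer count divided by the trigger rate. A minimal sketch, assuming one DMA buffer is consumed per trigger while the chain is stalled (the function name is hypothetical):

```python
def seconds_until_backpressure(cfg_rx_count, trigger_rate_hz):
    """Approximate time for a stalled DRP to use up its DMA buffers,
    assuming one buffer is consumed per trigger."""
    return cfg_rx_count / trigger_rate_hz


# Values from the example kcu.service above at a 1 MHz trigger rate.
print(f"{seconds_until_backpressure(1048572, 1e6):.2f} s")
```

The same function shows why a slower readout group can get by with proportionally fewer buffers for the same stall tolerance.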

taskset

Ric does "taskset -c 4-63" for daq executables to avoid the cores where weka processes are running

Interrupt Coalescing

We think this can help with errors like:

May 25 07:23:59 XXXXXXX kernel: [13445315.881356] BUG: soft lockup - CPU#16 stuck for 23s! [yyyyyyy:81602]

From Matt:

The datadev driver parameter for interrupt coalescing is "cfgIrqHold". It is the number of 200 MHz clocks that an interrupt may be delayed to allow others to be serviced together. You can see its current setting with cat /proc/datadev_0. It looks like the current setting limits interrupts (per pcie device) to ~20 kHz.

[weaver@drp-srcf-cmp005 ~]$ cat /proc/datadev_0

-------------- Axi Version ----------------

Firmware Version : 0x6

ScratchPad : 0x0

Up Time Count : 71501

Device ID : 0x1

Git Hash : 0000000000000000000000000000000000000000

DNA Value : 0x00000000000000000000000000000000

Build String : DrpPgpIlv: Vivado v2019.1, rdsrv302 (x86_64), Built Sun 14 Jun 2020 05:50:49 PM PDT by weaver

-------------- General HW -----------------

Int Req Count : 0

Hw Dma Wr Index : 3634

Sw Dma Wr Index : 3634

Hw Dma Rd Index : 0

Sw Dma Rd Index : 0

Missed Wr Requests : 3530076280

Missed IRQ Count : 5846742

Continue Count : 0

Address Count : 4096

Hw Write Buff Count : 4095

Hw Read Buff Count : 0

Cache Config : 0x0

Desc 128 En : 1

Enable Ver : 0x2010101

Driver Load Count : 1

IRQ Hold : 10000

It appears that IRQ Hold / cfgIrqHold is a 16 bit value.
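The ~20 kHz figure follows from the 200 MHz clock quoted by Matt. The sketch below assumes the hold time acts as a simple minimum spacing between interrupts, which is an approximation (the measured rates reported in these notes differ somewhat from this estimate); the names are hypothetical.

```python
CLOCK_HZ = 200e6        # cfgIrqHold is counted in 200 MHz clocks (see above)
MAX_IRQ_HOLD = 0xFFFF   # the setting appears to be a 16-bit value


def max_irq_rate_hz(cfg_irq_hold):
    """Approximate upper limit on the interrupt rate for a cfgIrqHold value."""
    if not 0 < cfg_irq_hold <= MAX_IRQ_HOLD:
        raise ValueError("cfgIrqHold must be in 1..65535")
    return CLOCK_HZ / cfg_irq_hold


print(max_irq_rate_hz(10000))         # the setting shown in /proc/datadev_0 above
print(max_irq_rate_hz(MAX_IRQ_HOLD))  # the longest hold a 16-bit value allows
```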

dmesg gives the IRQ number being used for a datadev device:

[Thu Feb 10 17:35:36 2022] datadev 0000:41:00.0: Init: IRQ 369

dstat can show the IRQ rate (interrupts/sec), here for an idle system with 2 datadev devices:

claus@drp-srcf-cmp005:~$ dstat -tiy -I 369,370 10

----system---- -interrupts ---system--

time | 369 370 | int csw

10-02 13:23:45| 101 101 |3699 5985

10-02 13:23:55| 100 100 |3807 6088

With IRQ Hold / cfgIrqHold set to 10000, the IRQ rate is seen to be around 22.4 kHz at a trigger rate of 71 kHz.

Note that the datadev driver can handle multiple buffers (O(1000)) per interrupt, so the IRQ rate tends to fall well below the rate set by the IRQ HOLD / cfgIrqHold value at high trigger rates. At 1 MHz trigger rate, the IRQ rate is a few hundred Hz.

Setting interrupt affinity to avoid 'BUG: Soft lockup' notifications

If there is significant deadtime coming from a node hosting multiple DRPs at high trigger rate and kernel:NMI watchdog: BUG: soft lockup - CPU#0 stuck for 23s! [swapper/0:0] messages appear in dmesg, etc., it may be that the interrupts are being handled for both datadev devices by one core, usually CPU0. To avoid this, the interrupt handling can be moved to two different cores, e.g., CPU4 and 5 (to avoid the Weka cores in SRCF).

This can be done automatically in the tdetsim/kcu.service files with the lines:

ExecStartPost=-/usr/bin/sh -c "/usr/bin/echo 4 > /proc/irq/`grep datadev_0 /proc/interrupts | /usr/bin/cut -d : -f 1 | /usr/bin/tr -cd [:digit:]`/smp_affinity_list"

ExecStartPost=-/usr/bin/sh -c "/usr/bin/echo 5 > /proc/irq/`grep datadev_1 /proc/interrupts | /usr/bin/cut -d : -f 1 | /usr/bin/tr -cd [:digit:]`/smp_affinity_list"

The irqbalance service will override the above unless the service is disabled or it is told to avoid the datadev devices' IRQs. Unfortunately I haven't found a way to do that automatically, so for now we modify /etc/sysconfig/irqbalance:

IRQBALANCE_ARGS=--banirq=368 --banirq=369 --banirq=370

All 3 IRQs we typically see in SRCF are listed so that the irqbalance file can be used on all SRCF machines without modification. Note that if another device is added to the PCI bus, the IRQ values may be different.
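Since the IRQ numbers can change when the PCI bus layout changes, it may help to look them up rather than hard-code them. The sketch below mirrors the grep/cut/tr pipeline used in the service file and builds the matching irqbalance line. The function names and the sample text are made up for illustration; on a real node the text would come from reading /proc/interrupts.

```python
import re


def find_irq(interrupts_text, device_name):
    """Return the IRQ number of the first line that names the device.

    Mirrors `grep <dev> /proc/interrupts | cut -d : -f 1 | tr -cd [:digit:]`.
    """
    for line in interrupts_text.splitlines():
        if device_name in line:
            irq_field = line.split(":", 1)[0]        # text before the first ':'
            digits = re.sub(r"\D", "", irq_field)   # keep digits only
            if digits:
                return int(digits)
    return None


def irqbalance_args(irqs):
    """Build the IRQBALANCE_ARGS line that bans the given IRQs."""
    return "IRQBALANCE_ARGS=" + " ".join(f"--banirq={n}" for n in irqs)


# Made-up sample in the general shape of /proc/interrupts (not real output).
sample = (
    " 369:      0   12345   PCI-MSI 1-edge   datadev_0\n"
    " 370:      0   67890   PCI-MSI 2-edge   datadev_1\n"
)
irqs = [find_irq(sample, dev) for dev in ("datadev_0", "datadev_1")]
print(irqbalance_args(irqs))
```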

Timeout Waiting for Buffer

Error messages may arise from rogue that look similar to:

1711665935.215109:pyrogue.axi.AxiStreamDma: AxiStreamDma::acceptReq: Timeout waiting for outbound buffer after 1.0 seconds! May be caused by outbound back pressure.

These arise from a select which times out if no buffer is available. (Code is available here - as of 2024/04/23: https://github.com/slaclab/rogue/blob/de67a57927285d6df9f1a688a8187800ea80fcd8/src/rogue/hardware/axi/AxiStreamDma.cpp#L296C4-L297C4). There are (at least) a couple of scenarios where this may occur.

- Per Larry - "This can happen is the software is not able to keep up with the DMA rate. This message means the DMA is out of buffers because software receiving buffers is not able to keep up"

- Write buffers are stuck in hardware

Write buffers in hardware

This led to the above error when trying to run the high-rate encoder (hrencoder). The Root rogue object could be instantiated but as soon as a register access was attempted (read or write) the software would enter a loop with continual timeout. The DAQ software would continue to run, printing the error every second.

Running cat /proc/datadev_0 showed that most Read buffers were in HW (desired situation); however, the write buffers were stuck in hardware (problematic). This was the case even with no process running. As a result, it was not possible to access registers.

-------------- Write Buffers ---------------

Buffer Count : 4

Buffer Size : 8192

Buffer Mode : 2

Buffers In User : 0

Buffers In Hw : 4 # <-- THESE

Buffers In Pre-Hw Q : 0

Buffers In Sw Queue : 0 # <-- SHOULD BE HERE

Missing Buffers : 0

Min Buffer Use : 150982

Max Buffer Use : 150983

Avg Buffer Use : 150982

Tot Buffer Use : 603929

The solution was to restart the kcu.service service (sudo systemctl restart kcu.service ). Alternatively the kernel modules can be removed and reloaded directly.

sudo rmmod /usr/local/sbin/datadev.ko

sudo insmod /usr/local/sbin/datadev.ko <Parameters...>

Potential (Less Disruptive) Solution from Larry Ruckman: Sent via Slack (2024/04/22 17:32:22)

Can you increase increasing the write buffer count? I don’t think I have ever tested with anything less than 16

cpo will try increasing buffer count to see if this minimizes recurrences of the problem.

HSD

Location in Chassis and Repair Instructions

Matt's document showing the location of each hsd in the tmo chassis: https://docs.google.com/document/d/1SzPwrJsoJR0brlQG-mCNILPFh8njXYHGrQz7tl39Thw/edit?usp=sharing.

Supermicro manual for hsd chassis: https://www.supermicro.com/manuals/superserver/4U/MNL-2107.pdf

Supermicro document discussing pcie root complexes for some different systems: https://www.supermicro.com/products/system/4U/4029/PCIe-Root-Architecture.cfm

General Debugging

- look at configured parameters using (for example) "hsdpva DAQ:LAB2:HSD:DEV06_3D:A"

- for kcu firmware that is built to use both QSFP links, the naming of the qsfp's is swapped. i.e. the qsfp that is normally called /dev/datadev_0 is now called /dev/datadev_1

- HSD is not configured to do anything (Check the HSD config tab for no channels enabled)

- if hsd timing frames are not being received at 929kHz (status here), click TxLink Reset in XPM window. Typically when this is an issue the receiving rate is ~20kHz.

- The HSD readoutGroup number does not match platform number in .cnf file (Check the HSD "Config" tab)

- also check that HEADERCNTL0 is incrementing in "Timing" tab of HSD cfg window.

- in hsd Timing tab timpausecnt is number of clocks we are dead (156.25MHz clock ticks). dead-time fraction is timpausecnt/156.25e6

- in hsd expert window "full threshold(events)" sets threshold for hsd deadtime

- in hsd Buffer tab "fex free events" and "raw free events" are the current free events.

- in hsd status window "write fifo count" is number of timing headers waiting for HSD data to associate.

- "readcntsum" on hsd timing tab goes up when we send a transition OR L1Accepts. "trigcntsum" counts L1Accepts only.

- "txcntsum" on PGP tab goes up when we send a transition or l1accepts.

- check kcuStatus for "locPause" non-zero (a low level pgp FIFO being full). If this happens then: configure hsd, clear readout, reboot drp node with KCU

- if links aren't locking in hsdpva use "kcuStatus" to check that the tx/rx clock frequencies are 156MHz. If not (we have seen lower rates like 135MHz) a node power cycle (to reload the KCU FPGA) can fix this. Matt writes: "kcuStatus should have an option to reset the clock to its factory input before attempting to program it to the standard input." It looks like there is a "kcuStatus -R" which "kcuStatus -h" says should reset the clock to 156MHz, but cpo tried this twice and it seems to be stuck at 131MHz still.

- If the drp doesn't complete rollcall and the log file shows messages about PADDR_U being zero, restarting the corresponding hsdioc process may help.

- verify that hsdioc are running

- verify the drp address in the mcs file (srcf, neh,...)
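The dead-time fraction mentioned for timpausecnt in the list above is a one-line calculation. This sketch assumes timpausecnt is the number of paused clock ticks accumulated over a one-second interval, which is what the formula in the list implies; the function name is hypothetical.

```python
HSD_CLOCK_HZ = 156.25e6  # clock rate quoted for timpausecnt ticks


def dead_time_fraction(timpausecnt):
    """Fraction of time the hsd was dead, assuming timpausecnt counts
    paused clock ticks over one second."""
    return timpausecnt / HSD_CLOCK_HZ


# Example: 15,625,000 paused ticks in one second is about 10% dead time.
print(f"{dead_time_fraction(15_625_000):.1%}")
```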

update on variables in hsdpva gui from Matt (06/05/2020):

- timing tab

- timpausecnt (clock ticks of dead time)

- trigcnt: triggers over last second

- trigcntsum: total l1accept

- readcntsum: number of events readout total

- msgdelayset: (units: mhz clock ticks) should be 98 or 99 (there is an "off by one" in the epics variable, and what the hsd sees). if too short, trigger decision is made too early, and there isn't enough buffering in hsd (breaks the buffer model)

- headercntof: should always be zero (ben's module). non-zero indicates that too many l1accepts have been sent and we've overwritten the available buffering

- headercntl0: sum of number of headers received by ben's module.

- headerfifor: the watermark for when ben's stuff asserts dead time

- fulltotrig/nfulltotrig: counters to determine the round trip time from sending an event to getting back the full signal (depends on fiber lengths, for example). nfulltotrig is same thing but with opposite logic.

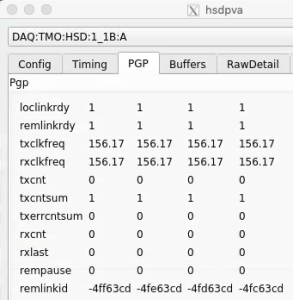

- pgp tab

- loclinkrdy/remlinkrdy should be ready

- tx/rx clk frequencies should be 156.

- txcnt: counts per second of things being sent, but only 16 bits so doesn't really display the right value

- txcntsum is transitions and l1accepts (only 16 bits so rolls over frequently) (useful for debugging dropped configure-phase-2)

- buffers tab

- freesz: units of rows of adc readout (40samples*2bytes).

- freeevt: number of free events. if they go below thresholds set in config tab: dead time.

- flow tab

- fmask: bit mask of streams remaining to contribute to event

- fcurr: current stream being read

- frdy: bit mask of streams ready to be read

- srdy: downstream slave (byte packer) is ready

- mrdy: b0 is master valid assert, b1 is PGP transmit queue (downstream of byte packer) ready

- rdaddr: current stream cache read pointer

- npend: next buffer to write

- ntrig: next buffer to trigger

- nread: next buffer to read

- pkoflow: overflow count in byte packer

- oflow: current stream cache write pointer
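The 16-bit counters in the pgp tab (txcnt, txcntsum) roll over frequently, so two successive readings have to be differenced modulo 2^16. A minimal sketch of that arithmetic follows. The function name is hypothetical, and the result is only meaningful if fewer than 65536 counts occurred between the two readings.

```python
COUNTER_BITS = 16  # width of txcnt/txcntsum as described in the pgp tab notes


def counter_delta(previous, current, bits=COUNTER_BITS):
    """Counts accumulated between two readings of a wrapping counter.

    Assumes the counter wrapped at most once between the readings.
    """
    return (current - previous) % (1 << bits)


# A reading that wrapped past 65535 still gives the right increment.
print(counter_delta(65530, 4))
print(counter_delta(100, 250))
```

When debugging a dropped configure-phase-2, a delta of zero across the transition is the interesting case, since it suggests nothing was sent.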

On Nov. 18, 2020 saw a failure mode where hsd_4 did not respond on configure phase 2. Matt tracked this down to the fact that the kcu→hsd links weren't locked (visible with "kcuStatus" and "hsdpva"). Note that kcuStatus is currently not an installed binary: has to be run from the build area. This was caused by the fact that we were only running one-half of a pair of hsd's, and the other half is responsible for setting the clock frequencies on the kcu, which is required for link-lock. We set the clock frequencies by hand running "kcuStatus -I" (I for Initialize, I guess) on the drp node. Matt is thinking about a more robust solution.

On May 24, 2023 saw a failure where 3 out of 4 hsd's in rix failed configure phase2. The failing channels did not increment txcntsum in the pgp tab in hsdpva. The timing links looked OK, showing the usual 919kHz (should be 929?) of timing frames. Restarting hsdioc's didn't help. hsdpva timrxrst, timpllrst, reset didn't help either. xpmpva TxLinkReset didn't help. Eventually recovered by restarting hsdioc and waiting longer, so I think I didn't wait long enough in previous hsdioc restart attempts? May also be important to have the daq shutdown when restarting hsdioc. Also saw many errors like this in the hsdioc logs when it was broken:

DAQ:RIX:HSD:1_1A:A:MONTIMING putDone Error: Disconnect

DAQ:RIX:HSD:1_1A:A:MONTIMING putDone Error: Disconnect

DAQ:RIX:HSD:1_1A:A:MONTIMING putDone Error: Disconnect

When we attempted to test the DAQ in SRCF with a couple of HSDs, we initially had trouble getting phase 2 of transitions through. The two "sides" 1_DA:A and B behaved differently with pgpread. Sometimes some events came through one side but not the other, but with significant delay from when the groupca Events tab Run box was checked and not when the transition buttons were clicked. Also some of the entries in hsdpva's tabs were odd (Buffers:raw:freesz reset to 65535 for one, 4094 for the other). Some work had been done on the PV gateway. hsdioc on daq-tmo-hsd-01 had been restarted and got into a bad state. Restarting it again cleared up the problem.

Changing the number of DMA buffers (cfgRxCount) in kcu.service can sometimes lead to the node hanging. In one case, after recovery from the hang using IPMI power cycling, the tdetsim service was started instead of the kcu service. After fixing that and starting the kcu service, the KCU was still unhappy. kcuStatus showed links unlocked and rx/txClkFreq values at 131.394 instead of the required 156.171. After power cycling again, kcuStatus reported normal values. We then found the hsdioc on daq-tmo-hsd-01 had become unresponsive. After restarting it, the HSD DAQ ran normally.

DeadTime vs. DeadFrac

Matt measures both deadtime and deadfrac. The first is the amount of time the system is unable to accept triggers, while the second is the fraction of events lost because of dead time. Deadfrac is, ultimately, more important. The HSDs can produce dead time while deadfrac stays small if all of the dead time falls between triggers. Matt writes about seeing 28% dead time at 10kHz with full waveforms while deadfrac was still zero: "I calculate 25% deadtime given the raw waveform size, trigger rate, and readout bandwidth. One raw waveform is 10us x 12 GB/s = 120 kB. That's enough to cross the threshold on the available buffer size. So, we are dead until that event is readout. The readout rate is 148M * 32B = 4.7 GB/s. So, it takes 120kB / 4.7 GB/s = 25 us to readout. That's 25% of the time between 10kHz triggers".
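Matt's estimate can be reproduced with a few lines of arithmetic. The sketch below uses only the numbers from his quote; the function and constant names are made up for illustration, and the formula assumes the readout of one waveform finishes before the next trigger arrives.

```python
# Numbers taken from Matt's estimate quoted above.
WAVEFORM_DURATION_S = 10e-6   # one raw waveform spans 10 us
SAMPLE_BANDWIDTH_BPS = 12e9   # raw ADC data rate, 12 GB/s
READOUT_WORD_RATE_HZ = 148e6  # "148M" readout words per second
READOUT_WORD_BYTES = 32       # 32 B per readout word
TRIGGER_RATE_HZ = 10e3        # 10 kHz triggers


def deadtime_fraction(duration_s, bandwidth_bps, word_rate_hz, word_bytes, trigger_hz):
    """Fraction of each trigger period spent reading out one raw waveform."""
    waveform_bytes = duration_s * bandwidth_bps   # 120 kB
    readout_bps = word_rate_hz * word_bytes       # about 4.7 GB/s
    readout_time_s = waveform_bytes / readout_bps  # about 25 us
    return readout_time_s * trigger_hz


frac = deadtime_fraction(
    WAVEFORM_DURATION_S,
    SAMPLE_BANDWIDTH_BPS,
    READOUT_WORD_RATE_HZ,
    READOUT_WORD_BYTES,
    TRIGGER_RATE_HZ,
)
print(f"estimated dead time: {frac:.1%}")  # roughly 25%, as in the quote
```

Because the dead interval sits right after each trigger and ends well before the next one 100 us later, no triggers are lost, which is why deadfrac stays at zero.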

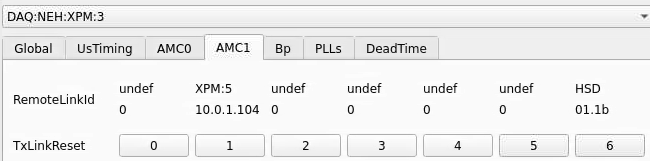

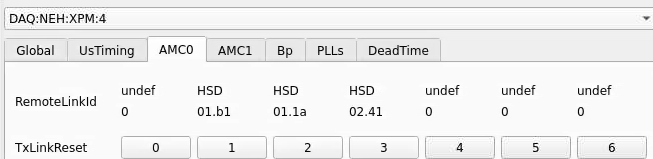

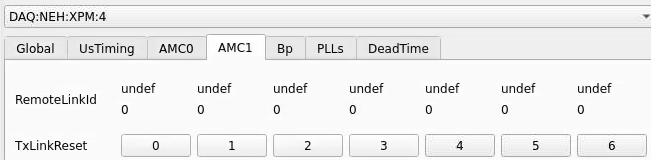

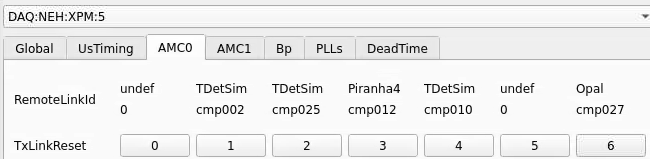

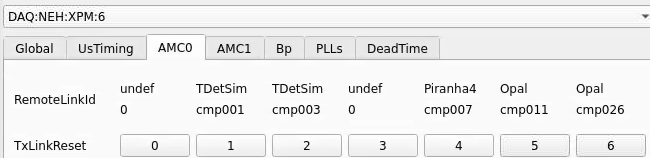

Cable Swaps

hsd cables can be plugged into the wrong place (e.g. "pairs" can be swapped). They must match the mapping documentation Matt has placed at the bottom of hsd.cnf. That mapping is reflected in the lines in hsd.cnf that start up processes, but keeping the two consistent is a manual process. Matt has the usual "remote link id" pattern that can be used to check this, by using "kcuStatus" on the KCU end and "hsdpva" on the other end, e.g.

psdev01:~$ ssh drp-neh-cmp020 ~/git/lcls2/psdaq/build/psdaq/pgp/kcu1500/app/kcuStatus | grep txLinkId

txLinkId : fb009c33 fb019c33 fb029c33 fb039c33 fb049c33 fb059c33 fb069c33 fb079c33

psdev01:~$

NOTE: datadev_1 shows up first! You can see this by providing a -d argument to kcuStatus:

(ps-4.5.10) drp-neh-cmp024:lcls2$ ./psdaq/build/psdaq/pgp/kcu1500/app/kcuStatus -d 1 | grep -i linkid

rxLinkId : fc000000 fc010000 fc020000 fc030000

txLinkId : fb009c3b fb019c3b fb029c3b fb039c3b

(ps-4.5.10) drp-neh-cmp024:lcls2$

The lane number (0-7) is encoded in the 0xff0000 bits. The corresponding hsdpva is:

Unfortunately, the remlinkid is currently displayed as a signed integer, so to see the real value one has to calculate, for example, 0x100000000-0x4ff63cd=0xfb009c33 (see output of kcuStatus txLinkId above)
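The signed-to-unsigned conversion and the lane decoding can be done in a couple of lines. This is a small sketch with hypothetical helper names; the only inputs are the values shown above (the signed remlinkid equivalent to -0x4ff63cd and the kcuStatus txLinkId words).

```python
def to_unsigned32(value):
    """Reinterpret a signed 32-bit integer as its unsigned bit pattern."""
    return value & 0xFFFFFFFF


def lane_number(link_id):
    """Extract the lane number (0-7) stored in the 0xff0000 bits."""
    return (link_id >> 16) & 0xFF


# remlinkid as displayed (signed) by hsdpva in the example above
signed_remlinkid = -0x4FF63CD
link_id = to_unsigned32(signed_remlinkid)
print(hex(link_id), "lane", lane_number(link_id))

# txLinkId words as printed by kcuStatus
for word in (0xFB009C33, 0xFB019C33, 0xFB079C33):
    print(hex(word), "lane", lane_number(word))
```

Comparing the converted remlinkid against the txLinkId list from kcuStatus shows which KCU lane a given hsd link is actually plugged into.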

Missing Configuration of KCU Clock Speeds (KCU links not locking)

The clock speed is programmed via datadev_1, so if only a datadev_0 is included then the pgp link between KCU and HSD will not lock. It currently requires the corresponding datadev_1 to be in the cnf file.

Zombie HSD Causing Deadtime

Multi-opal can have this problem too. One can get dead time from an hsd that is not included in the partition (or has been removed from the cnf). In this case, the "zombie" has a stuck "enable" bit in the hsdpva config tab. Options:

- segment level should "disable" on restart

- will still have problems when the zombie hsd is removed from the cnf

- better: can also disable on control-C signal (handles case where hsd is removed from cnf)

- another possibility: the collection mechanism reports all participating hsd's/opal's and the "supervisor" drp process (determined by the named-semaphore) could disable the unused ones

Setting Up New HSD Nodes

Remember to stop hsd processes before installing firmware:

Connect to tmo-daq as tmoopr and use the "procmgr stopall hsd.cnf" command

Also, remember to stopall hsd.cnf before making any changes in the hsd.cnf file itself since port numbering is incremental and order will change if one introduces new processes.

Firmware Programming

Github repo for hsd card firmware: https://github.com/slaclab/l2si-hsd

Some useful instructions from Matt:

High Speed Digitizer (HSD) Firmware Programming

Programming HSD Firmware in LCLS Hutches

Note that as of April 2024 firmware can also be programmed over pcie, and Matt has moved to the standard datadev driver (although one needs to pick up the latest version from git to get support for the use of multiple hsd's in a crate).

Matt writes about programming hsd's over pcie: I think your software is slightly out-of-date. It should identify the PROM as something like AxiMicronMt28ew, I think. Also, you forgot the command line option --type BPI. Expect that it will be very slow still. About 1/2 hour for the full reprogramming. You can cd to ~weaver/ppa/software and try my checkout of the script. I usually execute source setup_l2si.sh from there and then the script.

Here is a successful session:

drp-neh-cmp024:scripts$ python updatePcieFpga.py --path ~weaver/mcs/hsd --dev /dev/datadev_0 --type BPI

Rogue/pyrogue version v6.1.1. https://github.com/slaclab/rogue

Basedir = /cds/home/w/weaver/ppa/firmware/submodules/axi-pcie-core/scripts

#########################################

Current Firmware Loaded on the PCIe card:

#########################################

Path = PcieTop.AxiPcieCore.AxiVersion

FwVersion = 0x5000100

UpTime = 0:45:31

GitHash = dirty (uncommitted code)

XilinxDnaId = 0x4002000101295e062c1123c5

FwTarget = hsd_6400m

BuildEnv = Vivado v2023.1

BuildServer = rdsrv310 (Ubuntu 20.04.6 LTS)

BuildDate = Tue 13 Aug 2024 10:01:40 AM PDT

Builder = jumdz

#########################################

0 : /cds/home/w/weaver/mcs/hsd/hsd_6400m

1 : /cds/home/w/weaver/mcs/hsd/hsd_6400m_115-0x05000100-20240315145241-weaver-be302c2

2 : /cds/home/w/weaver/mcs/hsd/hsd_6400m_115-0x05000100-20240315080158-weaver-1034960

3 : /cds/home/w/weaver/mcs/hsd/hsd_6400m-0x04010000-20240116112956-weaver-af814fd

4 : /cds/home/w/weaver/mcs/hsd/hsd_6400m_dma_nc-0000000E-20240206070353-weaver-8a73a71

5 : /cds/home/w/weaver/mcs/hsd/hsd_6400m-0000000C-20210524162321-weaver-d7470d1

6 : /cds/home/w/weaver/mcs/hsd/hsd_6400m-0x04010100-20240124220119-weaver-c48e101

7 : /cds/home/w/weaver/mcs/hsd/hsd_6400m-0x05000100-20240424152429-weaver-b701acb

8 : /cds/home/w/weaver/mcs/hsd/hsd_6400m-0x05010000-20240801151506-weaver-8256217

9 : /cds/home/w/weaver/mcs/hsd/hsd_dualv3-000000001-20210319203152-weaver-3e4f06f

10 : Exit

Enter image to program into the PCIe card's PROM: 8

PcieTop.AxiPcieCore.AxiMicronMt28ew.LoadMcsFile: /cds/home/w/weaver/mcs/hsd/hsd_6400m-0x05010000-20240801151506-weaver-8256217.mcs

Reading .MCS: [####################################] 100%

Erasing PROM: [############

Additional Setup

Matt writes to Riccardo:

Riccardo: Hello Matt, I have some confusion regarding how to use the new firmware for the hsd.

The hsd.cnf file still uses /dev/datadev_3e (for example), but in the fee in cmp024 we only have datadev_0.

What do you need to change to switch to the datadev.ko?

Do you use a different kcu.service?

Thanks

Matt: You can either use the old driver with the device name datadev_0, or you can install the updated driver and load it with the argument cfgDevName=1 to get the bus ID tagged onto the device name. I'd recommend just staying with the old driver and changing the expected name to datadev_0.

See the pcie device numbers with lspci:

daq-tmo-hsd-01:~$ lspci | grep -i slac

1a:00.0 Memory controller: SLAC National Accelerator Lab TID-AIR Device 2100

1b:00.0 Memory controller: SLAC National Accelerator Lab TID-AIR Device 2100

3d:00.0 Memory controller: SLAC National Accelerator Lab TID-AIR Device 2100

3e:00.0 Memory controller: SLAC National Accelerator Lab TID-AIR Device 2100

5e:00.0 Memory controller: SLAC National Accelerator Lab TID-AIR Device 2100

88:00.0 Memory controller: SLAC National Accelerator Lab TID-AIR Device 2100

89:00.0 Memory controller: SLAC National Accelerator Lab TID-AIR Device 2100

b1:00.0 Memory controller: SLAC National Accelerator Lab TID-AIR Device 2100

b2:00.0 Memory controller: SLAC National Accelerator Lab TID-AIR Device 2100

da:00.0 Memory controller: SLAC National Accelerator Lab TID-AIR Device 2100

2 hsd cards can talk to 1 data card, and each data card has 4 LR4 transceivers (2 per hsd-card). hsd card has a "mezzanine" component with analog input connectors, and a JESD connection through the usual xilinx MGT (serial connection) to a pcie carrier card. There is a cable (small coax cables that Matt says have failed in the past) that connects the hsd card(s) (up to two) to the data card. The FPGA is on the hsd pcie carrier card.

Then startup pv servers for both A&B (and potentially 2 cards hooked up to 1 data card) with: "hsdpvs -P DAQ:TMO:HSD:2_41:A" (currently these go on ct002).

Startup the hsdioc process like this: "hsd134PVs -P DAQ:TMO:HSD:2_41 -d /dev/pcie_adc_41". This also programs the right clock frequencies for the timing link to the XPM, so you can check XPM link lock with xpmpva at this point.

One can run kcuStatus on the drp node to set clock frequencies and look at link lock on that side.

Now we can bring up hsdpva like "hsdpva DAQ:TMO:HSD:2_41:A DAQ:TMO:HSD:2_41:B".

NOTE: check for A and B cable swaps as described above using the remote link id's shown in hsdpva and kcuStatus.

Firmware upgrade from JTAG to PCIE

Install firmware newer than "hsd_6400m-0x05000100-20240424152429-weaver-b701acb.mcs".

Install datadev.ko:

- Log in to daq-tmo-hsd-01 or 02. On hsd-02 one needs to re-mount the filesystem as rw to make any modifications:

> sudo mount -o remount,rw /

> git clone git@github.com:slaclab/aes-stream-drivers (we were at tag 6.0.1 the last time we installed)

> cd aes-stream-drivers/data_dev/driver

> make

> sudo cp datadev.ko /usr/local/sbin/

- Create a /lib/systemd/system/kcu.service

- > sudo systemctl enable kcu.service

- > sudo systemctl start kcu.service

Modify hsd.cnf:

procmgr_config.append({host:peppex_node, id:'hsdioc_tmo_{:}'.format(peppex_hsd), port:'%d'%iport, flags:'s', env:hsd_epics_env, cmd:'hsd134PVs -P {:}_{:} -d /dev/datadev_{:}'.format(peppex_epics,peppex_hsd.upper(),peppex_hsd)})

iport += 1

So that it points to /dev/datadev/

Run :

> procmgr stopall hsd.cnf

and restart it all

> procmgr start hsd.cnf

These last 2 steps may need to be repeated a couple of times.

Start the DAQ and send a configure. This step may also need to be repeated a couple of times.

Fiber Optic Powers

You can see optical powers on the kcu1500 with the pykcu command (and pvget), although the examples of problems below give me the impression this isn't reliable. See the timing-system section for an example of how to run pykcu. On the hsd's themselves it's not possible because the FPGA (on the hsd pcie carrier card) doesn't have access to the i2c bus (on the data card). Matt says that in principle the hsd card can see the optical power from the timing system, but that may require firmware changes.

Note: On June 4, 2024 Matt says this is working now (see later in this section for example from Matt).

Note: on the kcu1500 running "pykcu -d /dev/datadev_1 -P DAQ:CPO" this problem happens when I unplug the fiber farthest from the mini-usb connector:

(ps-4.5.10) drp-neh-cmp024:lcls2$ pvget DAQ:CPO:DRP_NEH_CMP024:MON

DAQ:CPO:DRP_NEH_CMP024:MON 2022-03-21 16:41:49.139

RxPwr TxBiasI FullTT nFullTT

2.0993 41.806 0 0

0.0001 41.114 0 0

0.0001 41.008 0 0

0.0001 42.074 0 0

And the first number fluctuates dramatically:

(ps-4.5.10) drp-neh-cmp024:lcls2$ pvget DAQ:CPO:DRP_NEH_CMP024:MON

DAQ:CPO:DRP_NEH_CMP024:MON 2022-03-21 16:41:29.127

RxPwr TxBiasI FullTT nFullTT

3.3025 41.946 0 0

0.0001 41.198 0 0

0.0001 41.014 0 0

0.0001 42.21 0 0

(ps-4.5.10) drp-neh-cmp024:lcls2$ pvget DAQ:CPO:DRP_NEH_CMP024:MON

DAQ:CPO:DRP_NEH_CMP024:MON 2022-03-21 16:41:39.129

RxPwr TxBiasI FullTT nFullTT

0.0001 41.872 0 0

0.0001 40.932 0 0

0.0001 40.968 0 0

0.0001 42.148 0 0

When I plug the fiber back in I see significant changes but the first number continues to fluctuate dramatically:

(ps-4.5.10) drp-neh-cmp024:lcls2$ pvget DAQ:CPO:DRP_NEH_CMP024:MON

DAQ:CPO:DRP_NEH_CMP024:MON 2022-03-21 16:45:19.343

RxPwr TxBiasI FullTT nFullTT

1.1735 41.918 0 0

0.744 41.17 0 0

0.4544 41.008 0 0

0.6471 42.032 0 0

(ps-4.5.10) drp-neh-cmp024:lcls2$ pvget DAQ:CPO:DRP_NEH_CMP024:MON

DAQ:CPO:DRP_NEH_CMP024:MON 2022-03-21 16:45:39.363

RxPwr TxBiasI FullTT nFullTT

0.4084 41.972 0 0

0.7434 41.126 0 0

0.4526 41.014 0 0

0.6434 42.014 0 0

When running pykcu with datadev_0, all powers read back as zero, unfortunately:

(ps-4.5.10) drp-neh-cmp024:lcls2$ pvget DAQ:CPO:DRP_NEH_CMP024:MON

DAQ:CPO:DRP_NEH_CMP024:MON 2022-03-21 16:47:40.762

RxPwr TxBiasI FullTT nFullTT

0 0 0 0

0 0 0 0

0 0 0 0

0 0 0 0

Update from Matt on June 4, 2024 that this appears to work now for the hsd kcu's. He supplies this example:

(ps-4.6.3) bash-4.2$ pykcu -d /dev/datadev_0 -P DAQ:TSTQ &

[1] 1041

(ps-4.6.3) bash-4.2$ Rogue/pyrogue version v6.1.1. https://github.com/slaclab/rogue

ClkRates: (0.0, 0.0)

RTT: ((0, 0), (0, 0), (0, 0), (0, 0))

(ps-4.6.3) bash-4.2$

(ps-4.6.3) bash-4.2$ pvget DAQ:TSTQ:DRP_SRCF_CMP005:MON

DAQ:TSTQ:DRP_SRCF_CMP005:MON 2024-06-03 14:24:10.300

RxPwr TxBiasI FullTT nFullTT

4.9386 36.16 0 0

0.0233 37.504 0 0

0.0236 38.016 0 0

0.0233 36.224 0 0

and

(ps-4.6.3) bash-4.2$ pykcu -d /dev/datadev_1 -P DAQ:TSTQ &

[1] 1736

(ps-4.6.3) bash-4.2$ Rogue/pyrogue version v6.1.1. https://github.com/slaclab/rogue

ClkRates: (0.0, 0.0)

RTT: ((0, 0), (0, 0), (0, 0), (0, 0))

(ps-4.6.3) bash-4.2$ pvget DAQ:TSTQ:DRP_SRCF_CMP005:MON

DAQ:TSTQ:DRP_SRCF_CMP005:MON 2024-06-03 14:27:53.234

RxPwr TxBiasI FullTT nFullTT

4.1193 36.16 0 0

0.0233 37.44 0 0

0.0235 38.016 0 0

0.0233 36.096 0 0

No Phase 2

There have been several instances of not being able to get phase 2 of Configure through an HSD DRP. At least some of these were traced to kcuStatus showing 131 MHz instead of the required 156 MHz. This can happen if the tdetsim service starts instead of kcu.service. Sometimes, no amount of kcuStatus -I or kcuStatus -R restores proper operation. In this case the KCU FPGA may be in a messed up state, so power cycle the node to get the FPGA to reload.

PvaDetector

- If you're seeing std::bad_alloc, see note above in 'datadev Driver' about configuring tdetsim.service

- use "-vvv" to get printout of timestamp matching process

- options to executable: "-1" is fuzzy timestamping, "-0" is no timestamp matching, and no argument is precise timestamp matching.

- the IP where the PV is served might not be the same as the IP returned by a ping. For example: ping ctl-kfe-cam-02 returns 172.21.92.80, but the PV is served at 172.21.156.96

- netconfig can be used to determine the IP where the PV is served. For example:

/reg/common/tools/bin/netconfig search ctl-kfe-cam-02-drp

ctl-kfe-cam-02-drp:

subnet: daq-drp-neh.pcdsn

Ethernet Address: b4:2e:99:ab:14:4f

IP: 172.21.156.96

Contact: uid=velmir,ou=People,dc=reg,o=slac

PC Number: 00000

Location: Same as ctl-kfe-cam-02

Description: DRP interface for ctl-kfe-cam-02

Puppet Classes:

Found 1 entries that match ctl-kfe-cam-02-drp.

Fake Camera

- use this to check state of the pgp link, and the readout group, size and link mask (instead of kcuStatus): kcuSim -s -d /dev/datadev_0

- use this to configure readout group, size, link mask: kcuSimValid -d /dev/datadev_0 -c 1 -C 2,320,0xf

- I think this hangs because it's trying to validate a number of events (specified with the -c argument?)

Ansible Management of drps in srcf

Using Ansible, we have created a suite of scripts for managing the drp nodes.

We have divided the nodes into 5 categories:

- timing high

- timing slow

- HSD

- camera_link

- wave8

Every category is then standardized to have the same configuration, use the same firmware, and use the same drivers.

To retrieve the scripts: gh repo clone slac-lcls/daqnodeconfig

The file structure is:

ansible # main ansible folder

ansible.cfg # local configuration file for ansible; without a local file ansible will try to use a system file

hosts # file defining labels for drp nodes; this file defines which category each drp node belongs to

HSD #Ansible Scripts oriented for HSD only

HSD_driver_update.yml # installs the driver by using the .service files

HSD_file_update.yml # copies all needed files to the proper folders; a file that is already identical is not copied

HSD_pcie_firmware_update.yml # upgrades the PCIE firmware in HSD-01 for all the HSD cards

HSD_firmware_update.yml # upgrades the KCU firmware in the srcf nodes

nodePcieFpga #Ansible uses pgp-pcie-apps to update firmware. This file is a modified version of updatePcieFpga.py and allows to pass the name of the firmware as a variable, bypassing the firmware selection. This file must be installed in pgp-pcie-apps/firmware/submodules/axi-pcie-core/scripts/nodePcieFpga and a link must be created in pgp-pcie-apps/software/scripts

Timing #Ansible Scripts oriented for Timing nodes only

#Content is similar to HSD

Camera #Ansible Scripts oriented for Camera-link nodes only

#Content is similar to HSD

Wave8 #Ansible Scripts oriented for Wave8 nodes only

#Content is similar to HSD

README.md #README file as per github rules

setup_env.sh # modified setup_env to load ansible environment

shared_files # folder with all the files needed by the system

status # useful clush scripts to investigate node status

find_hsd.sh # sends out a clush request to drp-neh-ctl002 to gather information about datadev_1 if the Build String contains the HSD firmware

md5sum_datadev.sh # sends out a clush request to drp-neh-ctl002 to gather the md5 of datadev

check_weka_status.sh # sends out a clush request to drp-neh-ctl002 to check the presence of /cds/drpsrcf/ (using ls)

hosts

In the hosts file we define which drp is associated with which category:

[timing_high]

drp-srcf-cmp038

[wave8_drp]

drp-srcf-cmp040

[camlink_drp]

drp-srcf-cmp041

[hsd_drp]

drp-srcf-cmp005

drp-srcf-cmp017

drp-srcf-cmp018

drp-srcf-cmp019

drp-srcf-cmp020

drp-srcf-cmp021

drp-srcf-cmp022

drp-srcf-cmp023

drp-srcf-cmp024

drp-srcf-cmp042

drp-srcf-cmp046

drp-srcf-cmp048

drp-srcf-cmp049

drp-srcf-cmp050

[tmo_hsd]

daq-tmo-hsd-01

HSD(Timing/Camera/Wave8)_file_update.yml

---

- hosts: "hsd_drp" #DO NOT USE "all", otherwise every computer in the network will be used

  become: true #in order to run as root use the "-K" option in the command line

  gather_facts: false

  strategy: free #Allows to run in parallel on several nodes

  ignore_errors: true #needs to be true, otherwise the program stops when an error occurs

  check_mode: true #modify to false, this is a failsafe

  vars:

    hsd_files:

      - {src: '../../shared_files/datadev.ko_srcf', dest: '/usr/local/sbin/datadev.ko'}

      - {src: '../../shared_files/kcuSim', dest: '/usr/local/sbin/kcuSim'}

      - {src: '../../shared_files/kcuStatus', dest: '/usr/local/sbin/kcuStatus'}

      - {src: '../../shared_files/kcu_hsd.service', dest: '/usr/lib/systemd/system/kcu.service'} #timing will have tdetSim.service, others kcu.service

      - {src: '../../shared_files/sysctl.conf', dest: '/etc/sysctl.conf'}

  tasks:

    - name: "copy files"

      ansible.builtin.copy:

        src: "{{ item.src }}"

        dest: "{{ item.dest }}"

        backup: true

        mode: a+x

      with_items: "{{ hsd_files }}" #this is a loop over all the elements in the list hsd_files

To run the script, set check_mode to false and run:

source setup_env.sh

ansible-playbook HSD/HSD_file_update.yml -K

and insert the sudo password.

HSD(Timing/Camera/Wave8)_driver_update.yml

---

- hosts: "hsd_drp" #DO NOT USE "all", otherwise every computer in the network will be used

  become: true #in order to run as root use the "-K" option in the command line

  gather_facts: false

  strategy: free

  ignore_errors: true #needs to be true, otherwise the program stops when an error occurs

  check_mode: true #modify to false, this is a failsafe

  tasks:

    - name: "install driver"

      ansible.builtin.shell: |

        rmmod datadev

        systemctl disable irqbalance.service

        systemctl daemon-reload

        systemctl start kcu.service #timing will have tdetSim.service

        systemctl enable kcu.service

To run the script, set check_mode to false and run:

source setup_env.sh

ansible-playbook HSD/HSD_driver_update.yml -K

and insert the sudo password.

HSD(Timing/Camera/Wave8)_firmware_update.yml

---

- hosts: "hsd_drp" # timing_high, wave8_drp,camlink_drp,hsd_drp

  become: false #do not use root

  gather_facts: false

  ignore_errors: true

  check_mode: true #failsafe, set to false if you want to run it

  serial: 1

  vars:

    filepath: "~weaver/mcs/drp/"

    firmw: "DrpPgpIlv-0x05000000-20240814110544-weaver-88fa79c_primary.mcs"

    setup_source: "source ~tmoopr/daq/setup_env.sh"

    cd_cameralink: "cd ~melchior/git/pgp-pcie-apps/software/"

  tasks:

    - name: "update firmware"

      shell:

      args:

        cmd: nohup xterm -hold -T {{ inventory_hostname }} -e "bash -c '{{ setup_source }} ; {{ cd_cameralink }} ; python ./scripts/nodePcieFpga.py --dev /dev/datadev_1 --filename {{ filepath }}{{ firmw }} '" </dev/null >/dev/null 2>&1 &

        executable: /bin/bash

To run the script, set check_mode to false and run:

source setup_env.sh

ansible-playbook HSD/HSD_firmware_update.yml

configdb Utility

From Chris Ford. See also ConfigDB and DAQ configdb CLI Notes. Supports ls/cat/cp. NOTE: when copying across hutches it's important to specify the user for the destination hutch. For example:

configdb cp --user rixopr --password <usual> --write tmo/BEAM/tmo_atmopal_0 rix/BEAM/atmopal_0

Storing (and Updating) Database Records with <det>_config_store.py

Detector records can be defined and then stored in the database using the <det>_config_store.py scripts. For a minimal model see the hrencoder_config_store.py which has just a couple additional entries beyond the defaults (which should be stored for every detector).

Once the record has been defined in the script, the script can be run with a few command-line arguments to store it in the database.

The following call should be appropriate for most use cases.

python <det>_config_store.py --name <unique_detector_name> [--segm 0] --inst <hutch> --user <usropr> --password <usual> [--prod] --alias BEAM

Verify the following:

- User and hutch (--inst) are as defined in the cnf file for the specific configuration. Include the user as --user <usr>. This is used for HTTP authentication. E.g., tmoopr, tstopr

- Password is the standard password used for HTTP authentication.

- Include --prod if using the production database. This will need to match the entry in your cnf file as well, defined as cdb. https://pswww.slac.stanford.edu/ws-auth/configdb/ws is the production database.

- Do not include the segment number in the detector name. If you are updating an entry for a segment other than 0, pass --segm $SEGM in addition to --name $DETNAME

python <det>_config_store.py --help is available and will display all arguments.

There are similar scripts that can be used to update entries. E.g.:

hsd_config_update.py

Making Schema Updates in configdb

i.e. changing the structure of an object while keeping existing values the same. An example from Ric: "Look at the __main__ part of epixm320_config_store.py. Then run it with --update. You don’t need the bit regarding args.dir. That’s for uploading .yml files into the configdb."

Here's an example from Matt: python /cds/home/opr/rixopr/git/lcls2_041224/psdaq/psdaq/configdb/hsd_config_update.py --prod --inst tmo --alias BEAM --name hsd --segm 0 --user tmoopr --password XXXX

Configdb GUI for multi detector modification

By running "configdb_GUI" in a terminal after sourcing the setup_env, a GUI appears.

- Radio buttons select between the Prod and Dev databases. Do not change database while the progress bar is increasing.

- The progress bar shows that data is being loaded (this is needed to populate 4). When starting, wait for the progress bar to reach 100.

- After the progress bar reaches 100, the first column fills up with the devices present in the database.

- The dropdown selects a specific detector type to show in the second column.

- Clicking on a device shows the detectors associated with it in the second column; multiple detectors can be selected.

- The third column shows the data for the first detector selected.

- Selecting a variable in the third column shows its value for every detector in the fourth column.

- When a value is selected in the third column, the Modify button becomes available. In the text field, one can provide the new value to be written to every selected detector for the selected variable.

- Using "delta:30", it is possible to add to (or, with a negative number, subtract from) the variable in all the selected detectors.

- The value is cast to the type of the variable in the database; if a value of a different type is provided (e.g. a string instead of a number), the field is not updated.
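The Modify semantics described in the last three bullets can be sketched as follows. This is not the GUI's actual code: the function name is hypothetical, and the sketch only covers numeric variables, which is the case the "delta:" syntax is meant for.

```python
DELTA_PREFIX = "delta:"


def apply_modification(current, text):
    """Return the new value for one detector, or `current` if `text` cannot be cast.

    `current` is the value stored in the database; its type decides the cast.
    `text` is what the user typed, either a plain value or "delta:<number>".
    """
    target_type = type(current)
    is_delta = text.startswith(DELTA_PREFIX)
    raw = text[len(DELTA_PREFIX):] if is_delta else text
    try:
        operand = target_type(raw)
    except (TypeError, ValueError):
        return current  # wrong type provided: leave the field untouched
    return current + operand if is_delta else operand


# The same edit applied to several selected detectors (values are made up).
values = {"hsd_0": 100, "hsd_1": 120}
print({name: apply_modification(v, "delta:-30") for name, v in values.items()})
print({name: apply_modification(v, "not-a-number") for name, v in values.items()})
```

Casting with the stored value's own type is what makes a string typed into an integer field a no-op rather than an error.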

MCC Epics Archiver Access

Matt gave us a video tutorial on how to access the MCC epics archiver.

TEB/MEB

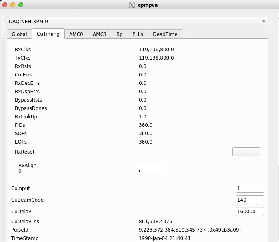

(conversation with Ric on 06/16/20 on TEB grafana page)

BypCt: number bypassing the TEB

BtWtg: boolean saying whether we're waiting to allocate a batch

TxPdg (MEB, TEB, DRP): boolean. libfabric saying try again to send to the designated destination (meb, teb, drp)

RxPdg (MEB, TEB, DRP): same as above but for Rx.

(T(eb)M(eb))CtbOInFlt: incremented on a send, decremented on a receive (hence "in flight")

In tables at the bottom: ToEvtCnt is number of events timed out by teb

WrtCnt MonCnt PsclCnt: the trigger decisions

TOEvtCnt TIEvtCnt: O is outbound from drp to teb, I is inbound from teb to drp

Look in teb log file for timeout messages. To get contributor id look for messages like this in drp:

/reg/neh/home/cpo/2020/06/16_18:19:24_drp-tst-dev010:tmohsd_0.log:Parameters of Contributor ID 8:

Conversation from Ric and Valerio on the opal file-writing problem (11/30/2020):

I was poking around with daqPipes just to familiarize myself with it and I was looking at the crash this morning at around 8.30. I noticed that at 8.25.00 the opal queue is at 100% and teb0 is starting to give bad signs (again at ID0, from the bit mask). However, if I make steps of 1 second, I see that it seems to recover, with the queue occupancy dropping to 98, 73 then 0. However, a few seconds later the drp batch pool for all the hsd lists are blocked. I would like to ask you (answer when you have time, it is just for me to understand): is this the usual Opal problem that we see? Why does it seem to recover before the batch pool blocks? I see that the first batch pool to be exhausted is the opal one. Is this somehow related?

- I’ve still been trying to understand that one myself, but keep getting interrupted to work on something else, so here is my perhaps half baked thought: Whatever the issue is that blocks the Opal from writing, eventually goes away and so it can drain. The problem is that that is so late that the TEB has started timing out a (many?) partially built event(s). Events for which there is no contributor don’t produce a result for the missing contributor, so if that contributor (sorry, DRP) tried to produce a contribution, it never gets an answer, which is needed to release the input batch and PGP DMA buffer. Then when the system unblocks, a SlowUpdate (perhaps, could be an L1A, too, I think) comes along with a timestamp so far in the future that it wraps around the batch pool, a ring buffer. This blocks because there might already be an older contribution there that is waiting to be released. It scrambles my brain to think about, so apologies if it isn’t clear. I’m trying to think of a more robust way to do it, but haven’t gotten very far yet.

- One possibility might be for the contributor/DRP to time out the input buffer in EbReceiver, so that if a result matching that input never arrives, the input buffer and PGP buffer are released. This could produce some really complicated failure modes that are hard to debug, because the system wouldn’t stop. Chris discouraged me from going down that path for fear of making things more complicated, rightly so, I think.

If a contribution is missing, the *EBs time it out (4 seconds, IIRR), then mark the event with DroppedContribution damage. The Result dgram (TEB only, and it contains the trigger decision) receives this damage and is sent to all contributors that the TEB heard from for that event. Sending it to contributors it didn’t hear from might cause problems because they might have crashed. Thus, if there’s damage raised by the TEB, it appears in all contributions that the DRPs write to disk and send to the monitoring. This is the way you can tell in the TEB Performance grafana plots whether the DRP or the TEB is raising the damage.

Ok thank you. But when you say: "If that contributor (sorry, DRP) tried to produce a contribution, it never gets an answer, which is needed to release the input batch and PGP DMA buffer". I guess you mean that the DRP tries to collect a contribution for a contributor that maybe is not there. But why would the DRP try to do that? It should know about the damage from the TEB's trigger decision, right? (Do not feel compelled to answer immediately, when you have time)

The TEB continues to receive contributions even when there’s an incomplete event in its buffers. Thus, if an event or series of events is incomplete, but a subsequent event does show up as complete, all those earlier incomplete events are marked with DroppedContribution and flushed, with the assumption they will never complete. This happens before the timeout time expires. If the missing contributions then show up anyway (the assumption was wrong), they’re out of time order, and thus dropped on the floor (rather than starting a new event which will have the originally found contributors in it missing (Aaah, my fingers have form knots!), causing a split event (don’t know if that’s universal terminology)). A split event is often indicative of the timeout being too short.

- The problem is that the DRP’s Result thread, what we call EbReceiver, if that thread gets stuck, like in the Opal case, for long enough, it will backpressure into the TEB so that it hangs in trying to send some Result to the DRPs. (I think the half-bakedness of my idea is starting to show…) Meanwhile, the DRPs have continued to produce Input Dgrams and sent them to the TEB, until they ran out of batch buffer pool. That then backs up in the KCU to the point that the Deadtime signal is asserted. Given the different contribution sizes, some DRPs send more Inputs than others, I think. After long enough, the TEB’s timeout timer goes off, but because it’s paralyzed by being stuck in the Result send(), nothing happens (the TEB is single threaded) and the system comes to a halt. At long last, the Opal is released, which allows the TEB to complete sending its ancient Result, but then continues on to deal with all those timed out events. All those timed out events actually had Inputs which now start to flow, but because the Results for those events have already been sent to the contributors that produced their Inputs in time, the DRPs that produced their Inputs late never get to release their Input buffers.

A thought from Ric on how shmem buffer sizes are calculated:

- If the buffer for the Configure dgram (in /usr/lib/systemd/system/tdetsim.service) is specified to be larger, it wins. Otherwise it’s the Pebble buffer size. The Pebble buffer size is derived from the tdetsim.service cfgSize, unless it’s overridden by the pebbleBufSize kwarg to the drp executable
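Read literally, Ric's rule amounts to taking the larger of two sizes. The sketch below restates it in Python; the function and argument names are hypothetical, and the size "derived from cfgSize" is passed in as a ready-made number because the derivation itself is not given here.

```python
def pebble_buf_size(size_from_cfg, pebble_buf_size_kwarg=None):
    """Pebble buffer size: the pebbleBufSize kwarg wins if it was given."""
    if pebble_buf_size_kwarg is not None:
        return pebble_buf_size_kwarg
    return size_from_cfg


def shmem_buf_size(configure_buf_size, size_from_cfg, pebble_buf_size_kwarg=None):
    """Shmem buffer size: the Configure dgram buffer wins only if it is larger."""
    return max(configure_buf_size,
               pebble_buf_size(size_from_cfg, pebble_buf_size_kwarg))
```

For example, `shmem_buf_size(4096, 1024)` gives 4096 because the Configure buffer is larger, while `shmem_buf_size(4096, 1024, pebble_buf_size_kwarg=8192)` gives 8192 because the kwarg overrides the derived size.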

On 4/1/22 there was an unusual crash of the DAQ in SRCF. The system seemed to start up and run normally for a short while (according to grafana plots), but the AMI sources panel didn't fill in. Then many (all?) DRPs crashed (ProcStat Status window) due to not receiving a buffer in which to deposit their SlowUpdate contributions (log files). The ami-node_0 log file shows exceptions '*** Corrupt xtc: namesid 0x501 not found in NamesLookup' and similar messages. The issue was traced to there being 2 MEBs running on the same node. Both used the same 'tag' for the shared memory (the instrument name, 'tst'), which probably led to some internal confusion. Moving one of the MEBs to another node resolved the problem. Giving one of the MEBs a non-default tag (-t option) also solves the problem and allows both MEBs to run on the same node.

Pebble Buffer Count Error

Summary: if you see "One or more DRPs have pebble buffer count > the common RoG's" in the teb log file, it means that the common readout group's pebble buffer count needs to be made at least as large as that of every other readout group, either by modifying the .service file or by setting the pebbleBufCount kwarg. Note that only one of the detectors in the common RoG needs to have as many buffers as the largest detector in the non-common RoGs (see example that follows).

More detailed example/explanation from Ric:

case A works (detector with number of pebble buffers):

group0: timing (8192) piran (1M)

group3: bld (1M)

case B breaks:

group0: timing (8192)

group3: bld (1M) piran (1M)

The teb needs buffers to put its answers in, both locally AND on the drp. The teb sends back an index to all drp's, and that index is identical for all of them. So even timing allocates space for 1M teb answers (it learns through collection that piranha has 1M and allocates the same).

The workaround was to increase the dma buffers in tdetsim.service, but this potentially wastes them. Only pebble buffers are really needed, and these can be set individually on the command line of the drp executable with a pebbleBufCount kwarg.

note that the teb compares the SUM of tx/rx buffers, which is what the pebble buf count gets set to by default, unless overridden with pebbleBufCount kwarg.
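The condition behind the error can be written as a small check over the two cases above. This is a sketch, not the teb's actual code: the function name and dictionary layout are hypothetical, and "1M" is assumed here to mean 1048576.

```python
M = 1 << 20  # assumption: "1M" in the example means 1048576 buffers


def common_rog_is_largest(pebble_counts, common_group=0):
    """pebble_counts maps readout group -> {detector: pebble buffer count}.

    Returns True when no detector outside the common readout group has
    more pebble buffers than the largest detector inside it.
    """
    common_max = max(pebble_counts[common_group].values())
    return all(
        count <= common_max
        for group, detectors in pebble_counts.items()
        if group != common_group
        for count in detectors.values()
    )


case_a = {0: {"timing": 8192, "piran": M}, 3: {"bld": M}}
case_b = {0: {"timing": 8192}, 3: {"bld": M, "piran": M}}
```

With these inputs `common_rog_is_largest(case_a)` is True and `common_rog_is_largest(case_b)` is False, matching "case A works" and "case B breaks".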

BOS

See Matt's information: Calient S320 ("The BOS")

- Create cross-connections:

curl --cookie PHPSESSID=ue5gks2db6optrkputhhov6ae1 -X POST --header "Content-Type: application/json" --header "Accept: application/json" -d "{\"in\": \"1.1.1\",\"out\": \"6.1.1\",\"dir\": \"bi\",\"band\": \"O\"}" --user admin:pxc*** "http://osw-daq-calients320.pcdsn/rest/crossconnects/?id=add"

- Activate cross-connections:

curl --cookie PHPSESSID=ue5gks2db6optrkputhhov6ae1 -X POST --header "Content-Type: application/json" --header "Accept: application/json" --user admin:pxc*** "http://osw-daq-calients320.pcdsn/rest/crossconnects/?id=activate&conn=1.1.1-6.1.1&group=SYSTEM&name=1.1.1-6.1.1"

  - This doesn't seem to work: Reports '411 - Length Required'. Use the web GUI for now

- List cross-connections (easier to read in the web GUI):

curl --cookie PHPSESSID=ue5gks2db6optrkputhhov6ae1 -X GET --user admin:pxc*** 'http://osw-daq-calients320.pcdsn/rest/crossconnects/?id=list'

- Save cross-connections to a file:

  - Go to http://osw-daq-calients320.pcdsn/

  - Navigate to Ports→Summary

  - Click on 'Export CSV' in the upper left of the Port Summary table

  - Check in the resulting file as lcls2/psdaq/psdaq/cnf/BOS-PortSummary.csv
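The JSON body and URLs used in the curl commands for this switch can be generated rather than typed by hand. The sketch below only builds strings and sends nothing; the helper names are hypothetical, and the field values ("bi", "O", "SYSTEM") are copied from the commands in this section rather than from any Calient documentation. HTTP status 411 means the server wants a Content-Length header, so one untested guess is that the activate POST fails because it carries no body; that has not been confirmed on the BOS.

```python
import json

# Base URL copied from the curl commands in this section.
BOS_URL = "http://osw-daq-calients320.pcdsn/rest/crossconnects/"


def add_payload(in_port, out_port):
    """JSON body for the 'add' request, with the same fields as the curl -d argument."""
    return json.dumps({"in": in_port, "out": out_port, "dir": "bi", "band": "O"})


def add_url():
    return BOS_URL + "?id=add"


def activate_url(in_port, out_port):
    """URL for the 'activate' request; the connection name is '<in>-<out>'."""
    conn = f"{in_port}-{out_port}"
    return f"{BOS_URL}?id=activate&conn={conn}&group=SYSTEM&name={conn}"


print(add_url())
print(add_payload("1.1.1", "6.1.1"))
print(activate_url("1.1.1", "6.1.1"))
```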

BOS Connection CLI

Courtesy of Ric Claus. NOTE the dashes in the "delete" since you are deleting a connection name. In the "add" the two ports are joined together with a dash to create the connection name, so order of the ports matters.

bos delete --deactivate 1.1.7-5.1.2

bos delete --deactivate 1.3.6-5.4.8

bos add --activate 1.3.6 5.1.2

bos add --activate 1.1.7 5.4.8
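The naming rule (ports joined with a dash, order significant) and the swap shown above can be sketched as follows. The helper names are hypothetical; only the `bos delete --deactivate` and `bos add --activate` forms that appear in this section are used.

```python
def connection_name(port_a, port_b):
    """A connection is named '<first port>-<second port>', so order matters."""
    return f"{port_a}-{port_b}"


def swap_commands(conn1, conn2):
    """Commands that delete two existing connections and cross their far ends.

    conn1 and conn2 are (port_a, port_b) tuples in the order they were added.
    """
    (a1, b1), (a2, b2) = conn1, conn2
    return [
        f"bos delete --deactivate {connection_name(a1, b1)}",
        f"bos delete --deactivate {connection_name(a2, b2)}",
        f"bos add --activate {a2} {b1}",
        f"bos add --activate {a1} {b2}",
    ]


for cmd in swap_commands(("1.1.7", "5.1.2"), ("1.3.6", "5.4.8")):
    print(cmd)
```

Note that `connection_name("1.1.7", "5.1.2")` and `connection_name("5.1.2", "1.1.7")` give different names, which is why the delete must use the ports in the same order as the original add.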

XPM

Link Quality

(from Julian) Looking at the eye diagram or bathtub curves gives a really good picture of the quality of the high-speed links on the Rx side. The pyxpm_eyescan.py tool provides a way to generate these plots for the SFPs while the XPM is receiving real data.

How to use (after loading conda env.)

python pyxpm_eyescan.py --ip {XPM_IP_ADDR} --link {AMC_LINK[0:13]} [--hseq {VALUE}] [--eye] [--bathtub] [--gui] [--forceLoopback] [--target {VALUE}]

Where:

- hseq VALUE: set the high speed equalizer value (see: High-speed repeater configuration below)

- eye: ask for eye diagram generation

- bathtub: ask for the bathtub curve generation

- gui: open debugging view

- forceLoopback: set the link in loopback during the qualification run

High-speed repeater configuration ("equalizer" settings)

The high-speed repeaters, located on the AMC cards, repeat (clean) the link from/to the SFP/FPGA and have to be configured. The default equalizer value does not provide a safe operating margin, and a link might not lock with this configuration.

How to use (after loading conda env.). Note that XPM_ID is the usual DAQ ID number (e.g. zero is the supervisor xpm in room 208). This allows Julian's software to talk to the right epics registers:

python pyxpm_hsrepeater.py --ip {XPM_IP_ADDR} --xpmid {XPM_ID} --link {AMC_LINK[0:13]}

What it does:

- Runs an equalizer scan and reports the link status for each value

- Set a value where no errors have been detected, in the middle of the working window (the tool does not automatically set the best value)

See results here: Link quality and non-locking issue

An example for XPM 10, AMC 1, Link 3:

(ps-4.6.2) claus@drp-neh-ctl002:neh$ python ~/lclsii/daq/lcls2/psdaq/psdaq/pyxpm/pyxpm_hsrepeater.py --ip 10.0.5.102 --xpmid 10 --link 10

Rogue/pyrogue version v5.18.4. https://github.com/slaclab/rogue

Start: Started zmqServer on ports 9103-9105

To start a gui: python -m pyrogue gui --server='localhost:9103'

To use a virtual client: client = pyrogue.interfaces.VirtualClient(addr='localhost', port=9103)

Scan equalizer settings for link 10 / XPM 10

Link[10] status (eq=00): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=01): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=02): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=03): Ready (Rec: 20635201 - Err: 0)

Link[10] status (eq=07): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=15): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=0B): Ready (Rec: 20635201 - Err: 0)

Link[10] status (eq=0F): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=55): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=1F): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=2F): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=3F): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=AA): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=7F): Ready (Rec: 20635200 - Err: 0)

Link[10] status (eq=BF): Ready (Rec: 3155 - Err: 5702)

Link[10] status (eq=FF): Not ready (Rec: 0 - Err: 9139)

[Configured] Set eq = 0x00

^CException ignored in: <module 'threading' from '/cds/sw/ds/ana/conda2/inst/envs/ps-4.6.2/lib/python3.9/threading.py'>

Traceback (most recent call last):

File "/cds/sw/ds/ana/conda2/inst/envs/ps-4.6.2/lib/python3.9/threading.py", line 1477, in _shutdown

lock.acquire()

KeyboardInterrupt:

Crate ID is 5 according to the table in Shelf Managers below, and slot is 2 according to the crate photos below. Ctrl-C must be given to terminate the program.
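The example header says "AMC 1, Link 3" while the command uses --link 10. That is consistent with a link index running over both AMCs with 7 ports each, which is an inference from the 0-13 range and the 0-6 port numbering rather than something stated by the tool. A minimal sketch with a hypothetical helper name:

```python
PORTS_PER_AMC = 7  # assumption: AMC_LINK[0:13] covers two AMCs with ports 0-6 each


def amc_link_index(amc, port):
    """Map (AMC number, port on that AMC) to the value passed as --link."""
    if amc not in (0, 1):
        raise ValueError("amc must be 0 or 1")
    if not 0 <= port < PORTS_PER_AMC:
        raise ValueError("port must be in 0..6")
    return amc * PORTS_PER_AMC + port


print(amc_link_index(1, 3))  # AMC 1, link 3
```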

NEH Topology

Before May 2021:

https://docs.google.com/drawings/d/1IX8qFco1tY_HJFdK-UTUKaaP_ZYb3hr3EPJJQS65Ffk/edit?usp=sharing

Now (May 2021):

https://docs.google.com/drawings/d/1clqPlpWZiohWoOaAN9_qr4o06H0OlCHBqAirVo9CXxo/edit?usp=sharing

Eventually:

https://docs.google.com/drawings/d/1alpt4nDkSdIxZRQdHMLxyvG5HsCrc7kImiwGUYAPHSE/edit?usp=sharing

XPM topology after recabling for SRCF (May 10, 2023):

globalTiming -> newxpm0 -> 2 (tmo) -> 4 (tmo)

-> 6 (208) -> BOS

-> 3 (rix) -> 5 (208) -> BOS

-> 1 (rix)

XPM0 (208) -> 10 (fee) -> 11(fee)

NEH Connections

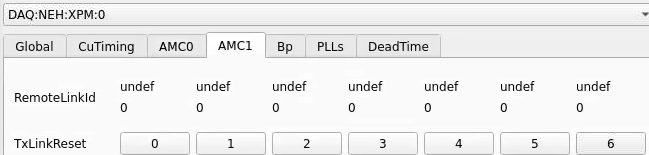

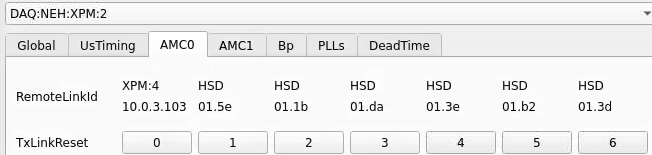

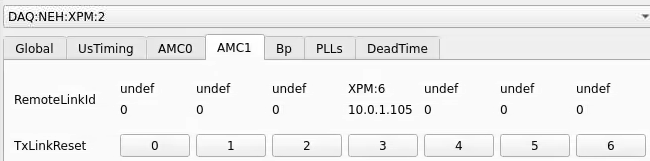

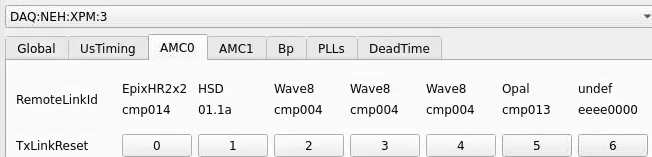

From xpmpva on Oct. 31, 2022.

Numbering

amc1 on left, amc0 on right

ports count 0-6 from right to left, matching labelling on xpm, but opposite the order in xpmpva which goes left to right

LEFTMOST PORT IS UNUSED (labelled 7 on panel)

in fee, xpm0 was lowest slot (above switch), xpm1 one up

pyxpm 2 is fee (currently the master), 4 is hutch, 3 unused

epics numbering of xpm 0 2 1

IP Addresses

From Matt: The ATCA crate in the FEE has a crate number that must differ from the other crates on the network. That crate number comes from the shelf manager. So, when we get a shelf manager, we'll need to set its crate ID. That determines the third byte for all the IP addresses in the crate. 10.0.<crate>.<slot+100>. See LCLS-II: Generic IPMC Commands#HowtosetupATCAcrateID for setting the ATCA crate ID.

An example session from Matt:

[weaver@psdev02 ~]$ ssh root@shm-neh-daq02

key_load_public: invalid format

Warning: Permanently added 'shm-neh-daq02,172.21.156.127' (RSA) to the list of known hosts.

root@shm-neh-daq02's password:

sh: /usr/bin/X11/xauth: not found

# clia shelfaddress

Pigeon Point Shelf Manager Command Line Interpreter

Shelf Address Info: "L2SI_0002"
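The addressing rule quoted from Matt above, 10.0.<crate>.<slot+100>, is simple enough to check in a couple of lines. The function name is hypothetical; the `ipaddress` call is only there to reject out-of-range values.

```python
import ipaddress


def atca_ip(crate, slot):
    """IP address of a board from its ATCA crate ID and slot: 10.0.<crate>.<slot+100>."""
    addr = f"10.0.{crate}.{slot + 100}"
    ipaddress.IPv4Address(addr)  # raises ValueError if an octet is out of range
    return addr


print(atca_ip(5, 2))  # crate 5, slot 2
```

Crate 5, slot 2 gives 10.0.5.102, the address used in the pyxpm_hsrepeater.py example for XPM 10.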

Timing Link Glitches

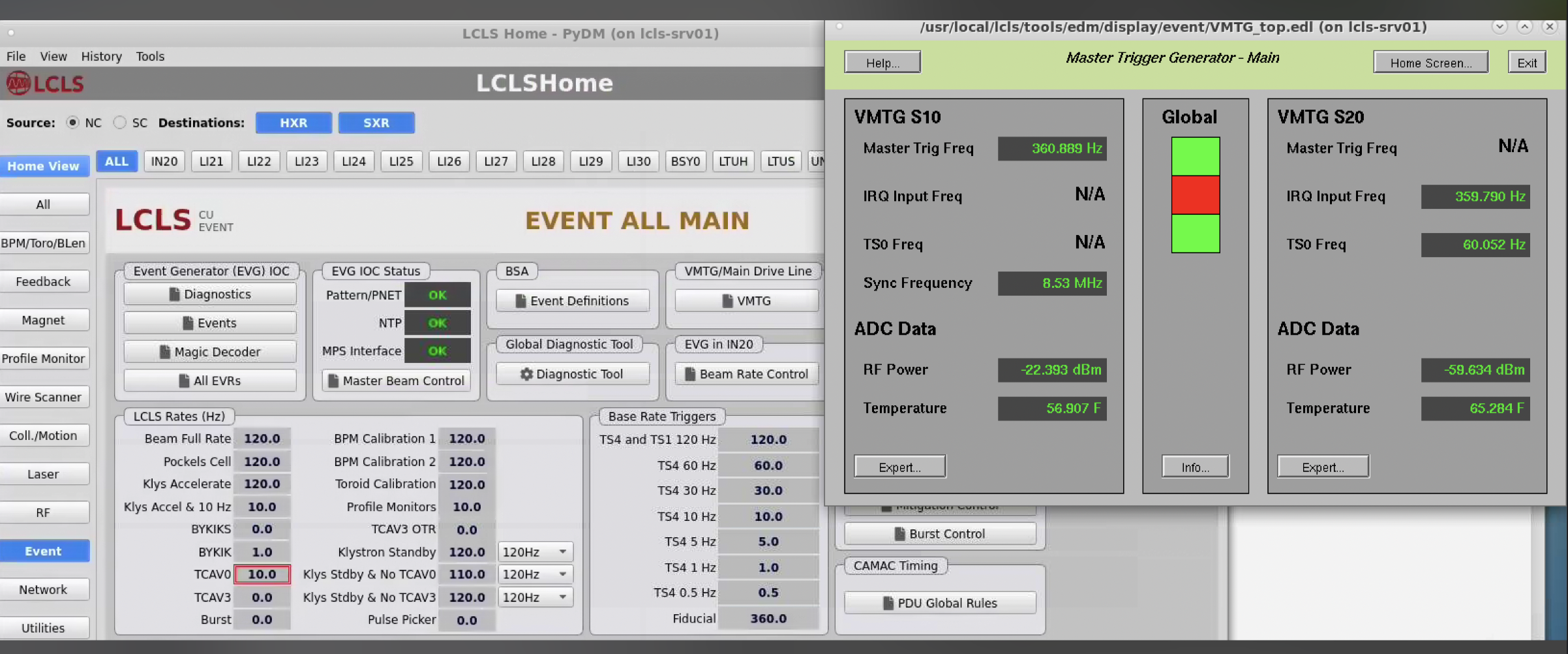

LCLS1

Timing Missing

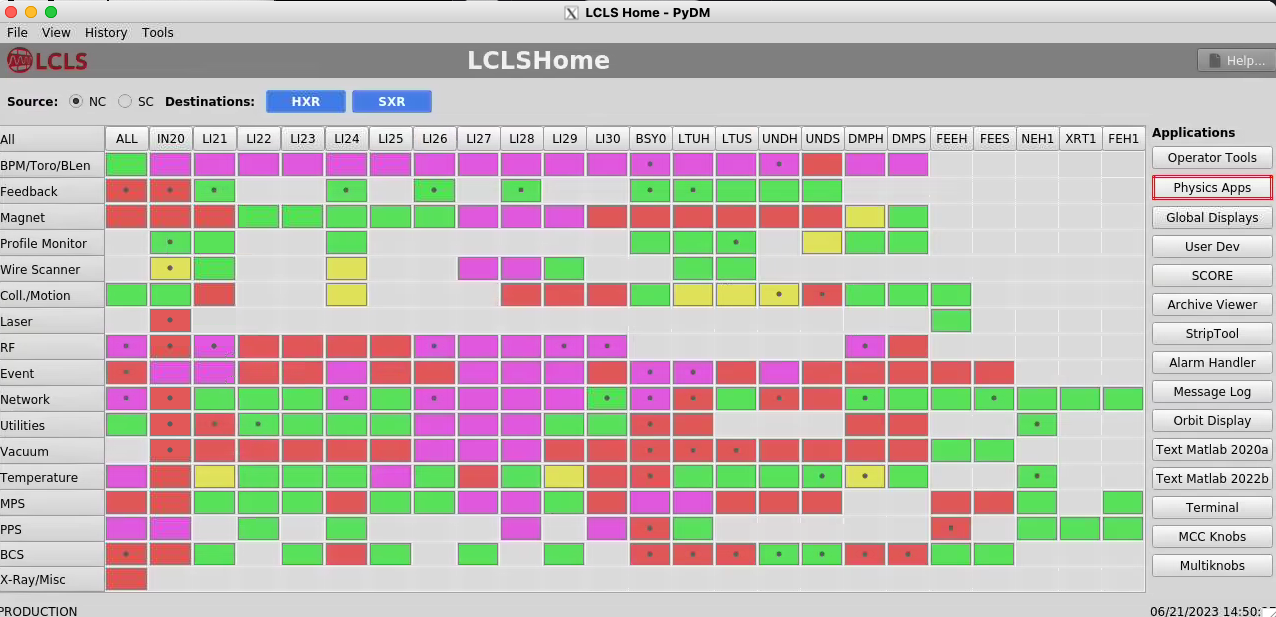

Matt writes that he logs in to lcls-srv01 (see below) and launches "lclshome" to see that the LCLS1 timing is down. If you see an "xcb" error it means X-forwarding is broken, perhaps because of a bad key in .ssh/known_hosts.

Matt says to look at the "Event" row:

Timing Not Down-Sampled Correctly

Debugging with Matt on March 12, 2024

Matt says you can see this with epics on lcls-srv01:

[softegr@lcls-srv01 ~ ]$ caget IOC:GBL0:EV02:SyncFRQMHZ

IOC:GBL0:EV02:SyncFRQMHZ 8.44444

Here's a screen shot from LCLS home that shows it. I'm just looking at it for the first time. Where it says 8.53 MHz should be 71 kHz.

My first clue was in xpmpva at the very bottom of this tab where it says Fiducial Err

That's a check to make sure that the LCLS1 fiducials are arriving on boundaries of 71kHz (any multiple of 1666 clocks at 119 MHz).
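The 71 kHz figure follows from dividing the 119 MHz clock by 1666. The sketch below just restates that arithmetic using the nominal 119 MHz value; the names are hypothetical and this is not the firmware's check.

```python
CLOCK_HZ = 119e6            # nominal LCLS1 clock quoted above
CLOCKS_PER_BOUNDARY = 1666  # boundary spacing in clocks, from the text


def boundary_rate_hz():
    """Rate of the boundaries on which fiducials are expected to arrive."""
    return CLOCK_HZ / CLOCKS_PER_BOUNDARY


def on_boundary(clocks_since_reference):
    """True if a fiducial lands on a multiple of 1666 clocks."""
    return clocks_since_reference % CLOCKS_PER_BOUNDARY == 0


print(f"{boundary_rate_hz() / 1e3:.2f} kHz")
```

The printed rate is about 71.43 kHz, which is the "71 kHz" referred to above.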

LCLS2

Upstream Timing Issues

This does not apply to LCLS1 timing, I believe.

- Look on main hutch grafana page at upstream-link-status graph

- ssh mcclogin (Ken Brobeck should be contacted to get access, in particular may need access to softegr account).

- ssh softegr@lcls-srv01 (Matt chooses "user 0")

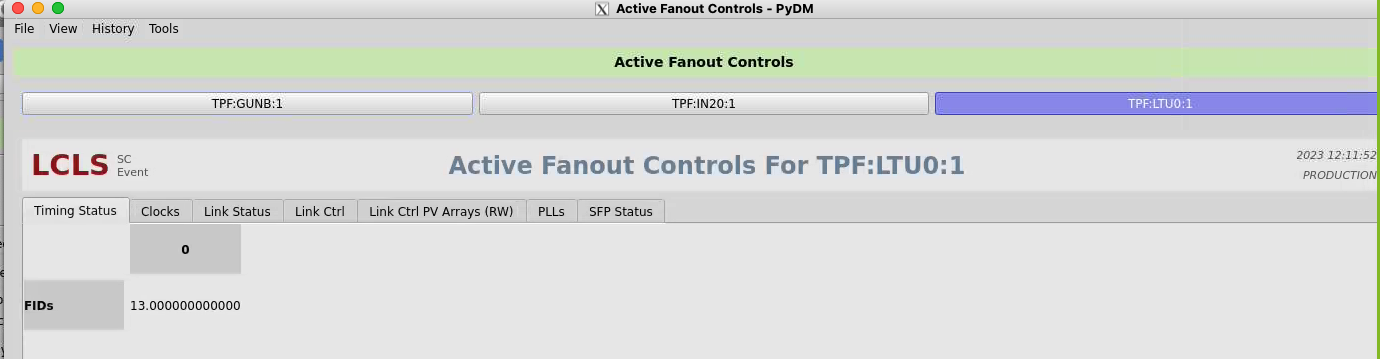

- Look at the fanouts that are upstream of xpm:0. There are three layers to get all the way back to the source (the TPG, which runs the whole accelerator and has a clock input from the master source; that clock could conceivably go away). See below for an example of this working on rix-daq. Moving upstream:

- caget TPF:LTU0:1:RXLNKUP (immediately upstream)

- caget TPF:IN20:1:RXLNKUP

- caget TPF:GUNB:1:RXLNKUP (fanout that TPG drives)

- caget TPG:SYS0:1:TS (prints out timestamp from TPG, which is a check to see that TPG is running)

- caget TPG:SYS0:1:COUNTBRT (should be 910000 always)

- PERHAPS MORE USEFUL? To determine if the problem is on the accelerator side, look at the active fanout upstream of xpm0 (in building 5): TPF:LTU0:1:CH14_RXERRCNTS, TPF:LTU0:1:FIDCNTDIFF (should be 929kHz) AND TPF:LTU0:1:CH0_PWRLNK.VALB (optical power in to the fanout).
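The upstream walk in the list above can be turned into a small checklist helper that takes values already read with caget and reports the first one that looks wrong. This is a sketch only: the function name is hypothetical, it does not call EPICS itself, and it assumes that a healthy RXLNKUP reads 1, which is not stated here.

```python
# PVs in the order given above, moving upstream from xpm:0.
UPSTREAM_LINK_PVS = [
    "TPF:LTU0:1:RXLNKUP",   # immediately upstream
    "TPF:IN20:1:RXLNKUP",
    "TPF:GUNB:1:RXLNKUP",   # fanout that the TPG drives
]
COUNTBRT_PV = "TPG:SYS0:1:COUNTBRT"
EXPECTED_COUNTBRT = 910000  # "should be 910000 always"


def first_problem(readings, link_up_value=1):
    """Return the first PV that looks wrong, or None if all checks pass.

    readings maps PV name -> value obtained by hand with caget.
    link_up_value=1 is an assumption about how RXLNKUP reports a good link.
    """
    for pv in UPSTREAM_LINK_PVS:
        if readings.get(pv) != link_up_value:
            return pv
    if readings.get(COUNTBRT_PV) != EXPECTED_COUNTBRT:
        return COUNTBRT_PV
    return None


example = {
    "TPF:LTU0:1:RXLNKUP": 1,
    "TPF:IN20:1:RXLNKUP": 0,
    "TPF:GUNB:1:RXLNKUP": 1,
    "TPG:SYS0:1:COUNTBRT": 910000,
}
print(first_problem(example))
```

A missing reading is treated the same as a bad one, so a PV that was not checked is reported rather than silently skipped.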