Target Release Date

Final edits due

Introduction

This newsletter is packed to the brim. This edition is extra special because, for the first time, it includes a section from LCLS IT describing some of the improvements and maintenance activities of Omar's team!

Safety Stand-down and EEIP

The newsletter is being released approximately 1 week later than we originally planned due to the SLAC-wide safety stand-down on Wednesday 5/4. While the circumstances prompting the stand-down are not good, the outcome of the activity was positive.

In ECS, we took some time to refamiliarize ourselves with the WPC and electrical safety aspects of our work. We also collected data from across the division on (over)work, including consecutive hours and days worked, as well as the peak number of assignments, to better understand the issues Mike Dunne mentioned in his all-hands.

ECS would like to take a moment to emphasize the importance of EEIP (Electrical Equipment Inspection Program) at LCLS and SLAC in general, and to remind anyone reading this newsletter to look into what EEIP is and how to make sure your equipment is compliant. You can learn more about EEIP here: https://slacspace.slac.stanford.edu/sites/pcd2/eeip/default.aspx

LCLS-II-HE update

The ECS team continues to make progress, advancing the instruments' designs and ramping up efforts with greater engagement from instrument leads. The goal for the remainder of FY22 is to refresh the overall cost estimate, as recommended by the committee, while remaining committed to advancing the design maturity of all experiment control systems to the Preliminary Design Review (PDR) (60%) level. The PDR for controls is slated for August 2022.

Roles and Team Fully Released

All major roles in LCLS-II HE Experiment Controls have been assigned to ECS team members. Check out the list below to find out whom to talk to in the ECS team for help with HE. If you have any questions, please contact Margaret Ghaly.

The L2HE project roles and assignments table can be found here. (This list is kept up to date.)

MEC-U update

Rack allocations

We spent some time making rough estimates of rack space for MEC-U; here are some of the figures we developed for your consideration. These figures do not include the other partner labs, whose estimates are tracked elsewhere. Other systems outside this count include Safety (PPS/BCS/HPS/LSS), Timing, and IT infrastructure.

| System | Full-depth racks (42U) | Shallow racks (42RU, for small-depth devices) |
|---|---|---|
| Laser BTS controls | 4 | 2 |
| X-ray BTS | 3 | 3 |
| TCX | 6 | 3 |
| TCO | 4 | 2 |
| Total | 17 | 10 |

Other controls requirements

More controls requirements were developed in March and April, focused specifically on CA network size, DAQ bandwidth, EPICS archiver size and reliability, and the logging systems. High-level requirements are being developed as ConOps are updated or generated. If there is a particularly important control system requirement or piece of high-level functionality, please let Alex Wallace or Jing Yin know.

LLE+LLNL Onsite Visit

The Rochester and Livermore teams came to SLAC on March 22nd and 23rd to learn more about MEC operations and collaborate in person on the project. This was the first time all three partner labs met in person to work on MEC-U. The controls breakout session reviewed the operations and the high-level supervisory control software of the Omega laser facility. We also learned more details about the mechatronics, industrial, and timing system controls of the LLE system.

Hutch Python Update

Hutch python's conda environment was updated to pcds-5.3.1 near the start of April.

You can read the full release notes here: PCDS Conda Release Notes: pcds-5.3.1

Robert S. Tang-Kong Zachary L Lentz

New Dual-Acting Valve Widget

Dual-acting valves are now integrated into pcdswidgets, with a nice-looking icon that represents the valve's functionality.

Extensive documentation on the process of making a new pcdswidget was provided as well as scripts for testing widgets in development.

Position Lag Monitoring

- Add metric plots

- Add any conclusions drawn regarding this issue, and corrections.

- Would be nice to see the counts trending down on account of some PLM threshold changes.

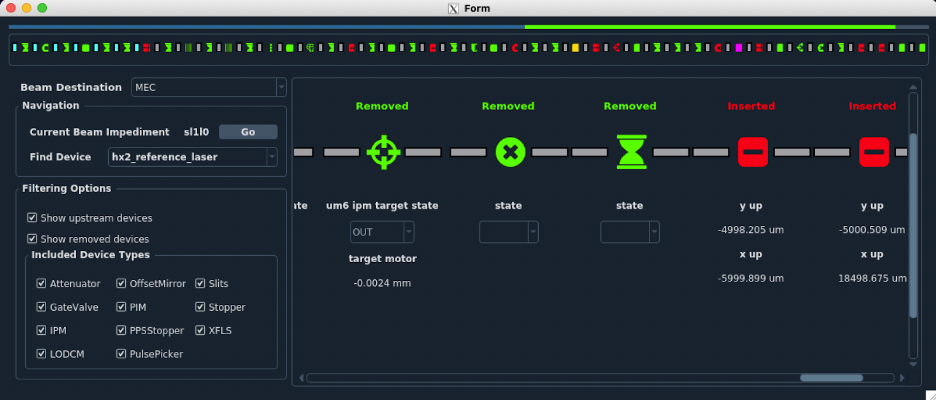

Lightpath Campaign

Lightpath is a tool that aims to show users and scientists which components are blocking the beam path, greatly expediting troubleshooting and recovery of operation.

Unfortunately, the tool has been plagued by bugs and efficiency issues for quite some time. We are planning a substantial rework, with the goal of making the tool available for all hutches.

A JIRA Epic has been created to gather existing lightpath issues/bug reports. If you have ideas for features or bugs to report, feel free to add an issue to the epic. We will be basing the re-design on requested features, so submitting an issue now is the best way to see those issues solved. We will continue to reach out for input throughout development, so be ready to hear from us!
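Lightpath's internals are not described here, so the following is only a minimal sketch of how the question "which component is blocking the beam?" can be answered. It assumes each device reports its position along the beamline, whether it is inserted, and a transmission fraction, then walks the devices from upstream to downstream and reports inserted devices whose transmission falls below a cutoff. The `BeamlineDevice` class, the device names, and the 0.1 cutoff are hypothetical and are not the lightpath API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class BeamlineDevice:
    """Hypothetical stand-in for one component on the beam path."""
    name: str
    z: float             # distance along the beamline; smaller z = further upstream
    inserted: bool       # True if the component is currently in the beam
    transmission: float  # fraction of the beam passed while inserted (0.0 to 1.0)


def blocking_devices(devices: List[BeamlineDevice], cutoff: float = 0.1) -> List[str]:
    """Names of inserted devices transmitting less than `cutoff`, upstream first."""
    ordered = sorted(devices, key=lambda dev: dev.z)
    return [dev.name for dev in ordered if dev.inserted and dev.transmission < cutoff]


def first_blocker(devices: List[BeamlineDevice], cutoff: float = 0.1) -> Optional[str]:
    """The most upstream blocking device, i.e. where the beam actually stops."""
    blockers = blocking_devices(devices, cutoff)
    return blockers[0] if blockers else None


# Hypothetical example beamline (listed out of order on purpose).
beamline = [
    BeamlineDevice("yag_screen", z=30.0, inserted=True, transmission=0.0),
    BeamlineDevice("stopper", z=10.0, inserted=False, transmission=0.0),
    BeamlineDevice("attenuator", z=20.0, inserted=True, transmission=0.5),
]
print(first_blocker(beamline))  # → yag_screen
```

Sorting by position before filtering is what lets the tool point at the first obstruction rather than every inserted device; the partially transmitting attenuator is ignored unless the cutoff is raised above its transmission.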

New ECS PD Campaign: Code Review

ECS currently has nearly all of our hutch-python and related Python source code in the pcdshub organization on GitHub.

We utilize a standardized workflow that improves understanding among our team, provides automated quality and style fixes, and in the end results in a higher quality set of code.

We want to bring the same standards we apply to Python development to nearly all PLC, EPICS module, and EPICS IOC development.

This will be an ongoing effort over the course of months.

See more details on the initiative page, ECS GitHub and Code Review Campaign. Feedback is welcome.

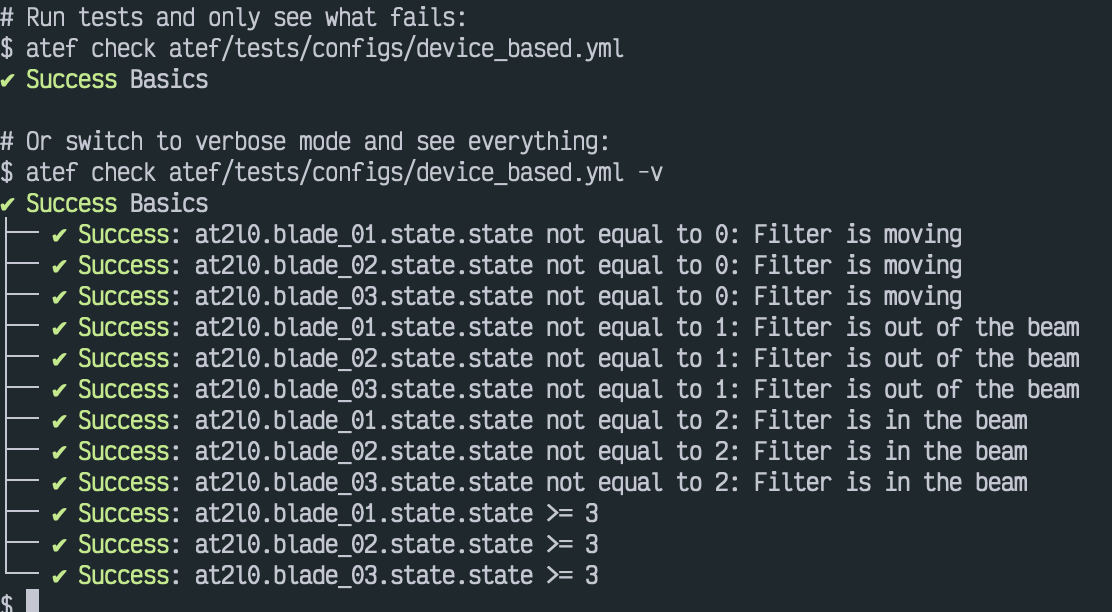

ATEF Status Update

We are continuing our refocused ATEF development effort, targeting passive testing of control system devices. The "passive" portion of this means that it will be fully automated and non-intrusive (that is, it will not move your motors or otherwise directly cause a PV to change).

We are making progress on the user-facing components of atef for passive checks. This includes a work-in-progress set of GUI elements, allowing for a more straightforward specification of a passive checkout configuration, with a happi device search tool and ophyd device inspector. A preliminary command-line based passive check running tool has also been developed.

Future development will include: active tests (guided, with humans in the loop) including integration with bluesky, a synoptic for viewing the atef-reported status of all devices, integration with Grafana, views of devices in typhos/hutch-python at a given time in the past, and many other things.
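To make "passive" concrete, here is a minimal sketch, not atef's actual configuration format or API: each check only reads a value and compares it against an expected range, so running it can never change a PV. The `PassiveCheck` class, the signal names, and the limits are hypothetical, and values come from a dictionary rather than from EPICS.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class PassiveCheck:
    """Hypothetical passive check: compare one read-back value to an expected range."""
    signal: str
    low: float
    high: float

    def evaluate(self, read: Callable[[str], float]) -> bool:
        # Only read the value; nothing is ever written, so a check cannot move hardware.
        value = read(self.signal)
        return self.low <= value <= self.high


def run_passive_checks(checks: List[PassiveCheck], read: Callable[[str], float]) -> Dict[str, bool]:
    """Run every check and report pass/fail per signal."""
    return {check.signal: check.evaluate(read) for check in checks}


# Hypothetical signal names and values; a real reader would query the control system.
readings = {"example:gauge:pressure": 2.0e-8, "example:motor:position": 12.5}
checks = [
    PassiveCheck("example:gauge:pressure", low=0.0, high=1.0e-7),
    PassiveCheck("example:motor:position", low=0.0, high=10.0),
]
print(run_passive_checks(checks, lambda name: readings[name]))
# → {'example:gauge:pressure': True, 'example:motor:position': False}
```

Passing the read function in as a parameter keeps the checks themselves free of any write path, which is the property that makes them safe to run unattended.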

shared-dotfiles

What are dotfiles?

- Dotfiles are configuration files that sit in your home directory, like .bashrc.

- Dotfiles set per-user configuration settings for different applications, like the text editor vim or your shell.

- If you have a unix account, you likely have dotfiles in your home directory on pslogin, whether you have customized them or not.

In a survey of users' configurations, we found that many were similar, likely copied and tweaked from one user's home directory to another when they joined ECS.

In a strange version of the telephone game, a bit of meaning was lost with each successive copy, leaving configuration lines behind with no clue as to why they were there.

We then set out to create a shared repository that provides a good starting point for new engineers, as well as for existing ones who want to improve (or just better understand) their settings.

Almost every line contains an explanation as to why it is there, with some offering suggestions as to how to adjust it to your preference.

Here is a link to that repository:

→ https://github.com/pcdshub/shared-dotfiles

It includes a variety of suggested configuration files for various basic tools like ssh, bash, vim, and so on that ECS staff and others who use the same computing infrastructure may find useful.

It also explains a bit about what scripts are available and how to better navigate your environment in its documentation.

Vacuum System

We are adding a new supported gauge! The InstruTech IGM401 hot cathode gauge will be used in TMO; please click here for details. We also gave a seminar on the LCLS-II vacuum control system. If you missed the seminar, you can find the slides and meeting video here.

QRIX Controls

The Spectrometer Arm has been installed and checked out. It has a total of 27 axes installed, with coupled and coordinated motion and strict EPS requirements. The acceptance test with Toyama went very well and was even completed ahead of schedule.

The QRIX vacuum PLC code has been merged with that of the spectrometer arm. The vacuum controls integration is complete for all the installed devices.

TXI

Cables were successfully pulled in the FEE for patch panels for both K and L lines.

TMO

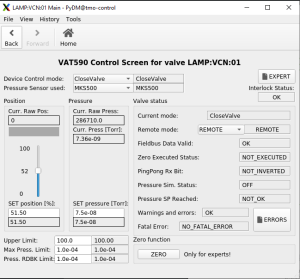

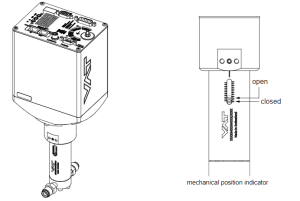

First deployment of VAT590 variable valve in LAMP. Jing Yin Govednik, Janez Maarten Thomas-Bosum

This was used with the Magnetic Bottle (MBES) iteration of the LAMP endstation.

TMO requested this very-low-flow-rate leak valve for experiment gas delivery and vacuum level control in the IP1 chamber.

The valve plate acts as a throttling element and varies the conductance of the valve opening.

Actuation is performed with a stepper motor and controller. The stepper motor/controller version ensures accurate pressure control due to exact gate positioning. The onboard controller and built-in PID loop were integrated into the control network of the LAMP vacuum PLC and IOC, and a custom PyDM GUI was developed as a user interface for this device.

In the future, this device will be integrated into the vacuum control systems of other endstations in TMO. We will also work to let users set and tune the PID loop parameters themselves; these are currently locked in the lower-level CoE settings. This matters because the control loop needs to be adjusted for chambers of different volumes, gas flow rates, etc.
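The VAT590's own PID loop runs in its onboard controller and is configured through CoE; the sketch below does not reproduce it. As a generic illustration of why one set of gains does not fit every chamber, here is a minimal discrete PID controller driving a toy chamber model in which the valve opening adds gas, the pump removes it in proportion to pressure, and the chamber volume sets how quickly pressure responds. The model, its constants, and the gains are all hypothetical and in arbitrary units.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Minimal discrete PID controller with a clamped output (hypothetical sketch)."""
    kp: float
    ki: float
    kd: float
    setpoint: float
    out_min: float = 0.0  # valve fully closed
    out_max: float = 1.0  # valve fully open
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, measurement: float, dt: float) -> float:
        error = self.setpoint - measurement
        self.integral += error * dt
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        out = self.kp * error + self.ki * self.integral + self.kd * derivative
        clamped = min(max(out, self.out_min), self.out_max)
        if clamped != out:
            # Simple anti-windup: undo this step's integration while the valve is saturated.
            self.integral -= error * dt
        return clamped


def simulate(volume: float, steps: int, dt: float = 0.1) -> float:
    """Toy chamber: dP/dt = (valve_gain * opening - pump_speed * P) / volume."""
    valve_gain, pump_speed = 2.0, 1.0  # arbitrary illustrative constants
    pid = PID(kp=1.0, ki=1.0, kd=0.0, setpoint=0.5)
    pressure = 0.0
    for _ in range(steps):
        opening = pid.step(pressure, dt)
        pressure += dt * (valve_gain * opening - pump_speed * pressure) / volume
    return pressure


print(simulate(volume=1.0, steps=300))   # settles close to the 0.5 setpoint
# Same gains, same elapsed time (20 steps of 0.1): the 10x larger chamber lags far behind.
print(simulate(volume=1.0, steps=20))
print(simulate(volume=10.0, steps=20))
```

With identical gains, the larger chamber is still well short of the setpoint after the same number of steps, which is the kind of mismatch that user-tunable PID parameters would address. Clamping the output models a valve that cannot go past fully open or fully closed.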

Documentation page: LAMP MBES Variable Leak Valve Commissioning 3/22

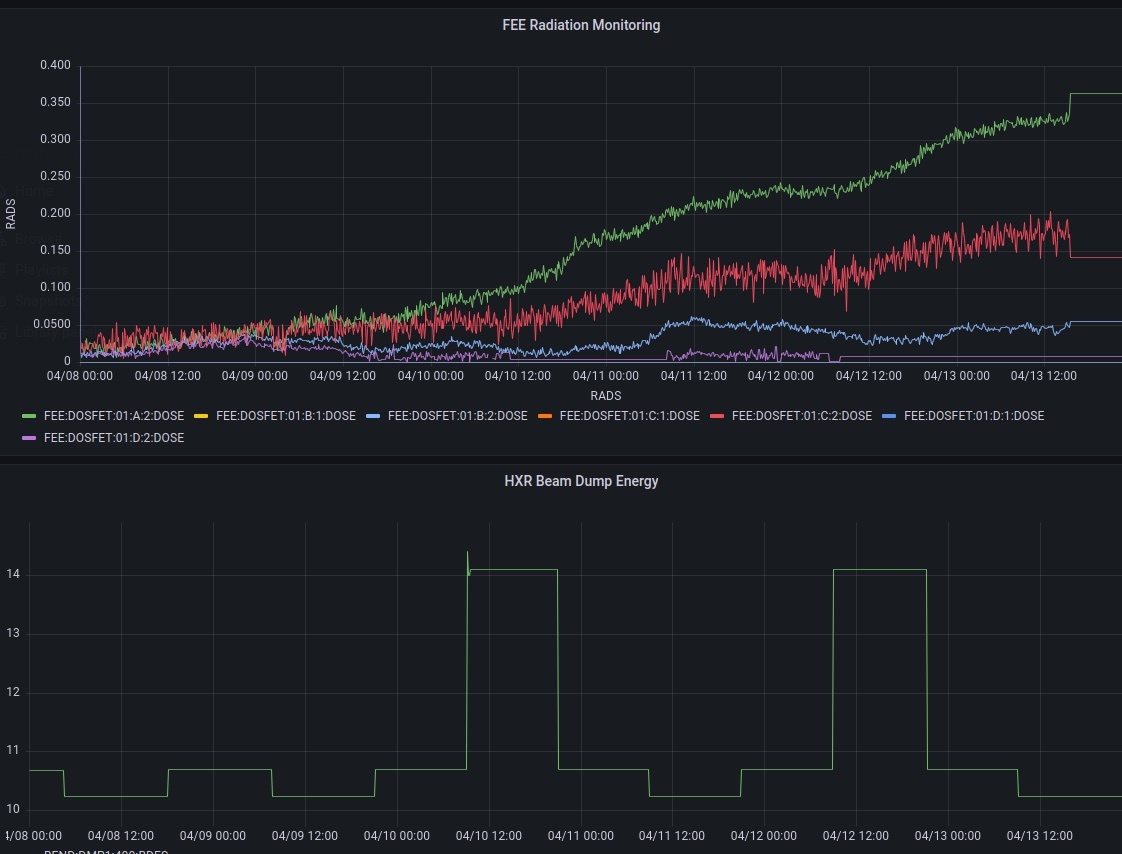

Radiation Monitoring in the FEE

We have begun taking initial radiation measurements in the FEE! We obtained two sensor readouts at each of four locations. Here we see hard X-ray beam energy mapped against dose rate.

A shift was made to bring the rest of the sensors online. Looking forward to collecting more data this month!

X-ray Optics Controls Updates

MR1L3, MR2L3, and MR1L4 XRT HOMS Mirrors

- Temperature sensors added: XRT HOMS Temperature

- State Control fully implemented! We can now reach mirror coating positions and move the mirrors in and out of the beam with one click!

MR1K4 FEE TMO Mirror

- Newly upgraded with coating state control.

- PMPS states implemented.

MR3K4, MR4K3, MR2L1, and MR3L1 TXI Mirror

- Site Acceptance complete: TXI SAT

MR4K4, MR5K4 TMO DREAM Mirror

- Granite installed/Mirror moved into the hutch.

- Controls fully checked out: DREAM Install

- Coming: Internal RTD, Pressure, and Flow Sensors.

MR2K4, MR3K4 TMO LAMP Mirror

- Expect higher performance in axis control

- Control loops changed and deadbands tightened

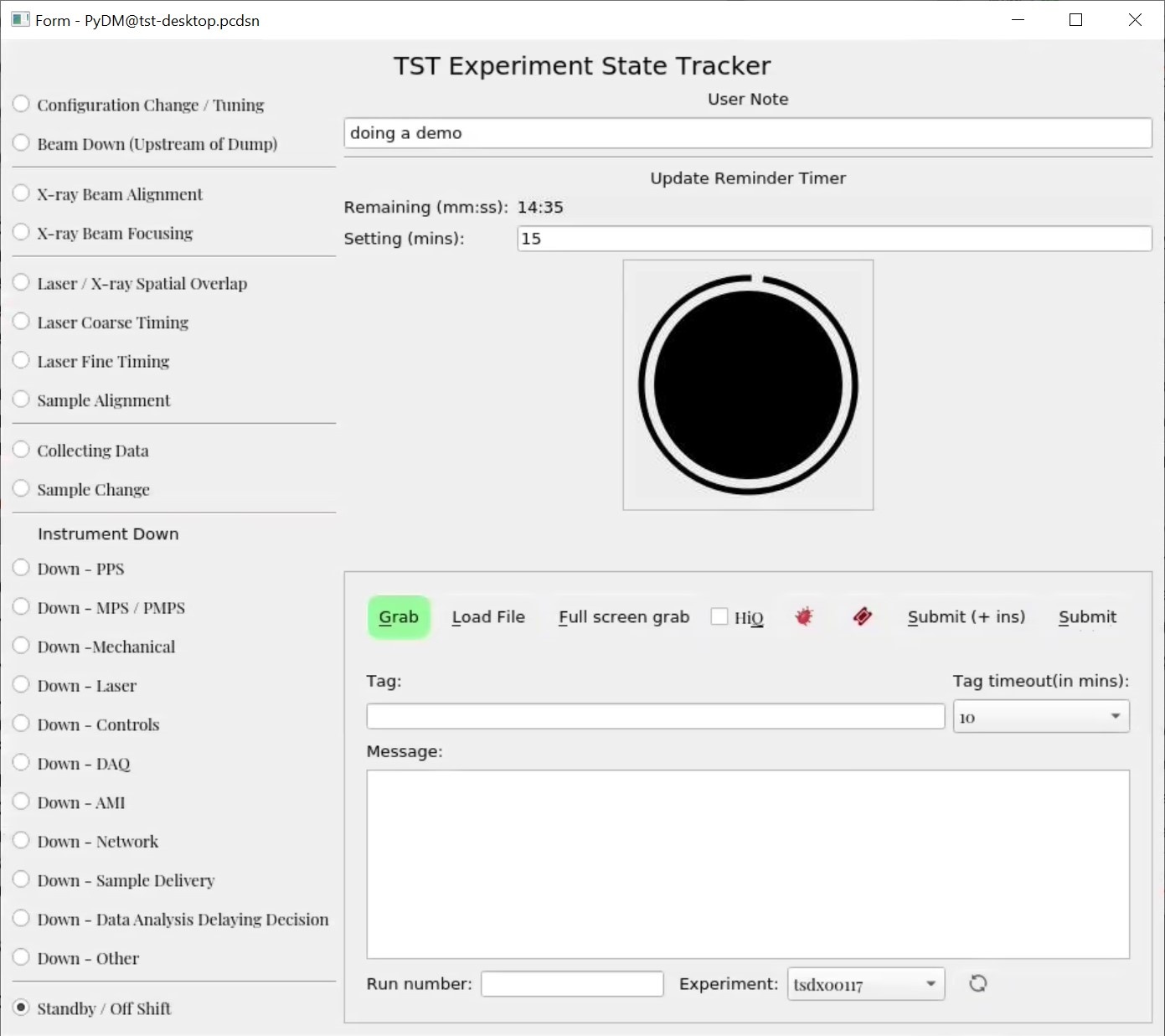

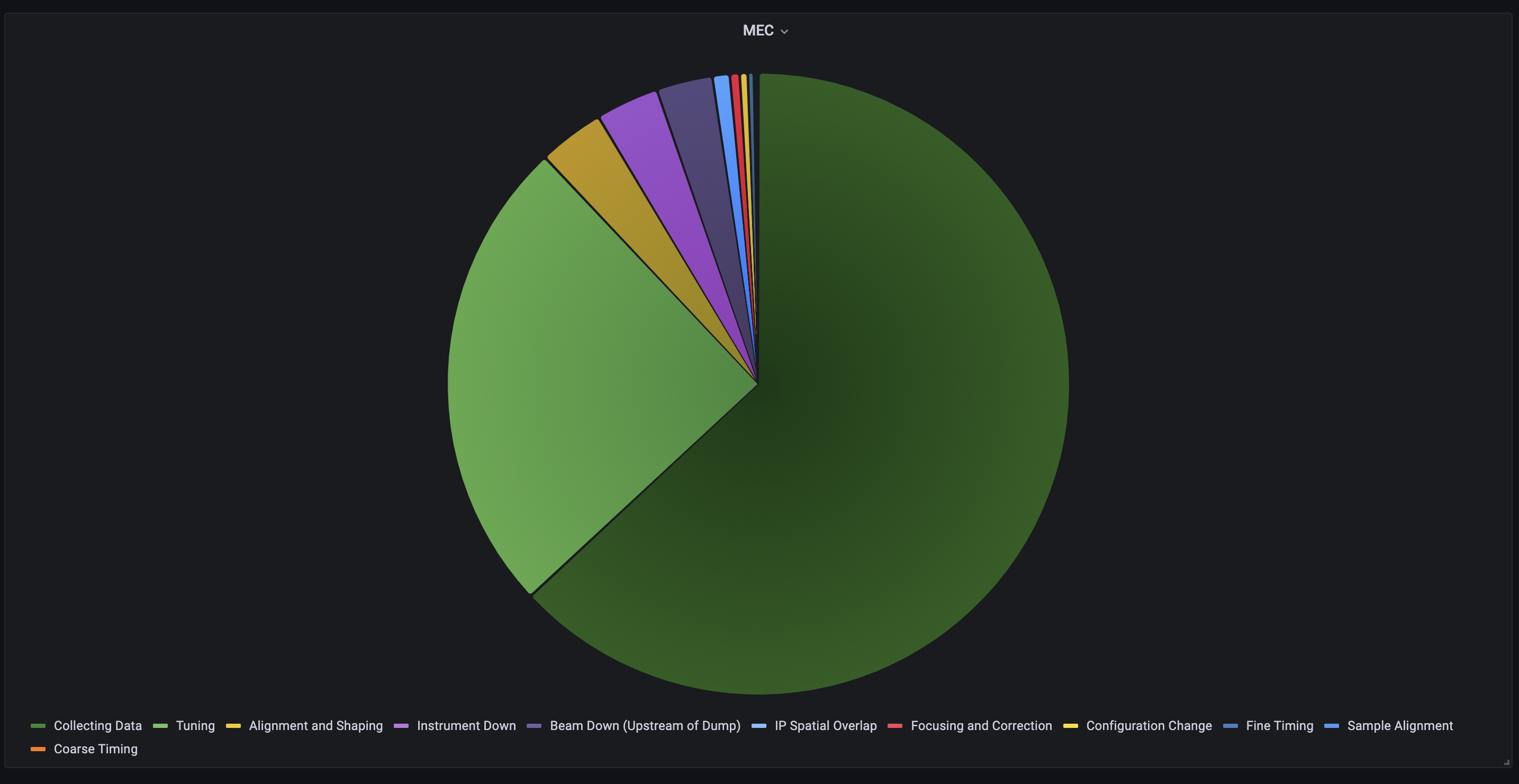

Experiment State Tracker and Eloggrubber

By popular demand, the Experiment State Tracker has been integrated with the eloggrubber, and the list of possible states has been extended (with much more detail for 'instrument down' in particular).

The eloggrubber's JIRA implementation has changed to allow automatic assignment to the instruments as well as posting of attachments. (Murali Shankar)

Grafana has also been extended to allow the creation of piechart data from the archived Experiment State Tracker output.
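A pie chart of experiment states boils down to "what fraction of the time was spent in each state". The following is a minimal sketch of that aggregation, assuming the archived tracker output can be reduced to time-ordered (timestamp, new state) transitions; the input format and the state names are hypothetical, and this is not the actual archiver query or Grafana configuration.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, List, Tuple


def time_in_state(transitions: List[Tuple[datetime, str]], end: datetime) -> Dict[str, float]:
    """Fraction of time spent in each state, e.g. for a pie chart.

    `transitions` holds (timestamp, new_state) pairs sorted by time; each state
    lasts until the next transition, and the last one lasts until `end`.
    """
    totals: Dict[str, float] = defaultdict(float)
    boundaries = [t for t, _ in transitions[1:]] + [end]
    for (start, state), stop in zip(transitions, boundaries):
        totals[state] += (stop - start).total_seconds()
    span = sum(totals.values())
    if span <= 0:
        return {}
    return {state: seconds / span for state, seconds in totals.items()}


# Hypothetical shift log.
shift = [
    (datetime(2022, 5, 1, 8, 0), "setup"),
    (datetime(2022, 5, 1, 10, 0), "data taking"),
    (datetime(2022, 5, 1, 16, 0), "instrument down"),
]
print(time_in_state(shift, end=datetime(2022, 5, 1, 20, 0)))
# setup ≈ 0.17, data taking = 0.5, instrument down ≈ 0.33
```

Accumulating into a dictionary means a state that occurs several times (for example, repeated "instrument down" periods) contributes a single slice with its total duration.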

Jungfrau [0.5/1]M IOC

The smaller Jungfrau detectors, which use a Rohde & Schwarz power supply, now have an IOC (ioc/common/roving_jf) that makes turning them on and off consistent with the various ePix detectors. This IOC will trip the detector if a problem with the chiller flow or power supply is detected.

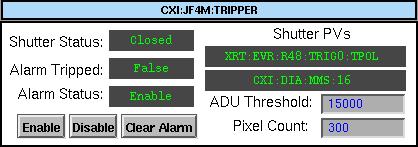

Jungfrau4M Tripper

Thanks in large part to Daniel Scott Damiani, a tripper has been created for the Jungfrau 4M detector in CXI. Similar to the CSPAD tripper, it helps protect the detector from intense X-rays by blocking the beam when too many hot pixels are detected. The tripper runs through a combination of an IOC and an AMI plugin: the IOC hosts the PVs used for the tripper's input and output, and closes the shutter when necessary. The window below is easily found on the CXI Home Screen and can be used to adjust the threshold or clear a trip. From now on, the tripper should always be enabled to prevent burning out any more pixels.

The "ADU Threshold" is a margin, in ADU, below low-gain saturation: a pixel whose value comes within this margin of saturation is considered too high. The "Pixel Count" is the number of high pixels allowed before the beam is tripped. Please do not adjust these values without first discussing them with Meng or me.

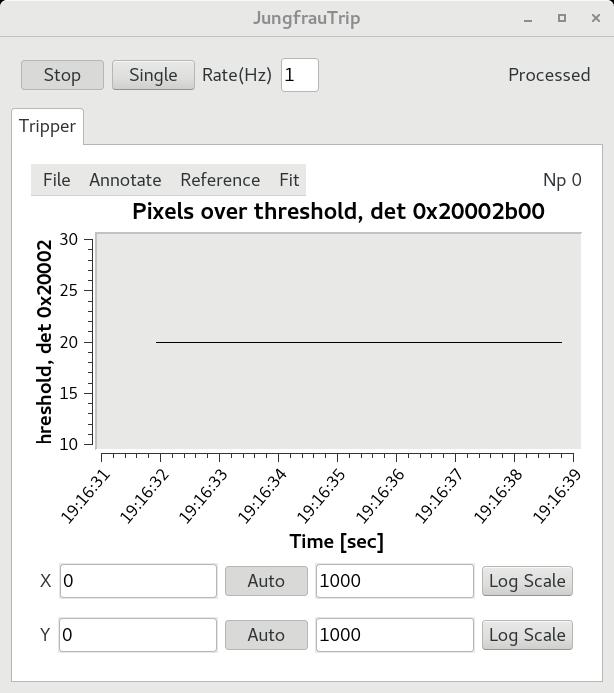

As another form of feedback, the AMI plugin adds an option to the AMI window called "JungfrauTrip" that lets users watch the number of pixels above the threshold over time. Below, we can see a constant 20 pixels above the threshold with the current settings and no beam, due to misbehaving, already-burnt pixels. If you have any questions about the tripper, please feel free to reach out to me!
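The threshold logic described above can be sketched as follows. This is a minimal illustration, not the actual AMI plugin or IOC code: it assumes each frame is already expressed in low-gain ADU, uses a made-up saturation level, and treats "more than Pixel Count" (strictly greater) as the trip condition. It also shows why the roughly 20 already-burnt pixels matter: with that baseline, any Pixel Count below it would trip even without beam.

```python
from typing import Sequence


def count_hot_pixels(frame: Sequence[Sequence[float]], saturation_adu: float, adu_threshold: float) -> int:
    """Count pixels that come within `adu_threshold` ADU of low-gain saturation."""
    limit = saturation_adu - adu_threshold
    return sum(1 for row in frame for value in row if value > limit)


def should_trip(frame: Sequence[Sequence[float]], saturation_adu: float,
                adu_threshold: float, pixel_count: int) -> bool:
    """Trip (close the shutter) once more than `pixel_count` pixels are too high."""
    return count_hot_pixels(frame, saturation_adu, adu_threshold) > pixel_count


# Hypothetical 2x3 frame with an illustrative saturation level of 1000 ADU.
frame = [[10, 995, 20], [999, 5, 990]]
print(count_hot_pixels(frame, saturation_adu=1000, adu_threshold=10))  # → 2 (990 sits exactly at the limit)
print(should_trip(frame, 1000, 10, pixel_count=1))  # → True
print(should_trip(frame, 1000, 10, pixel_count=2))  # → False
```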

CSU Chico Engineering Capstone Collaboration

This year, Diling Zhu sponsored the collaboration project (many thanks, Diling!), which aims to develop a prototype system for determining interaction point alignment using machine vision. In early March, the CSU Chico Engineering Capstone Collaboration project team visited XPP to take final fit measurements around the interaction point for the installation of their project later this year.

The team won Best Project this year at the CSUC design expo (May 13th), competing with ~20 other project teams from the program. The team will also present their project this summer in Dallas, TX, at a national competition for engineering capstone projects.

For the past five years, LCLS departments (SED and ME) have sponsored projects with the Mechanical/Mechatronic Engineering departments of CSU Chico for their engineering degree capstone. Each year, the collaboration project offers LCLS a chance to develop prototype concepts, or even to offload a manageable portion of work to a team of 4-5 students and a faculty advisor (for a nominal fee + M&S). More importantly, these projects offer us a chance to discover talented candidates we can hire. If you have an idea for a project, please contact Alex Wallace for details. On a final note, we are excited to announce that this year we hired another student from the collaboration project, Mitchell Cabral, who will join ECS as a control system integrator in June.

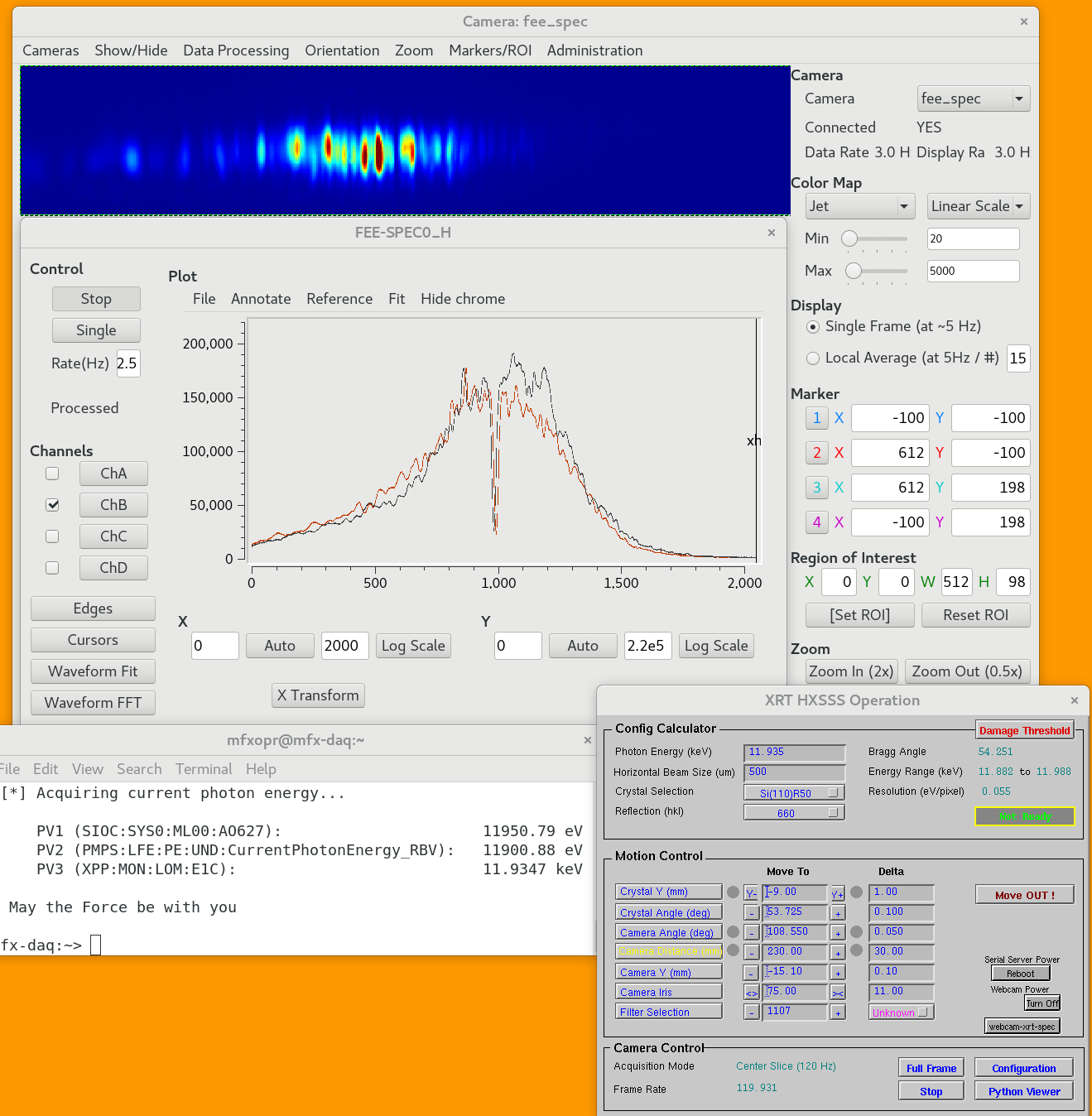

HXR Single Shot Spectrometer Updates

Restoration of the HXR spectrometer mechatronics has been completed, and the spectrometer has been successfully commissioned. The spectrometer has been a vital part of experiments, including those in MFX. Included below are some images taken with the Orca camera. Further updates to the GUI add ways to power-cycle devices and provide easier access to the webcam used to remotely view how the spectrometer is behaving.

Full design and motion system details can be found here

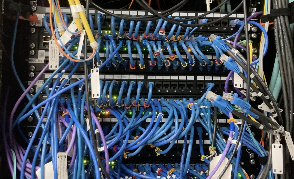

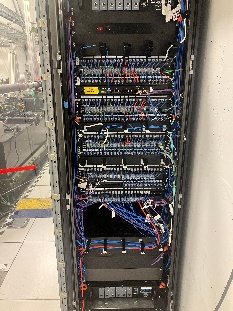

Hutch Rack Cleanup Efforts

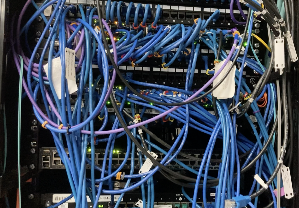

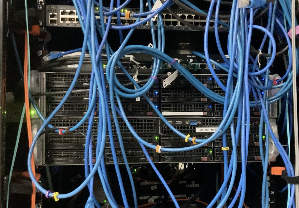

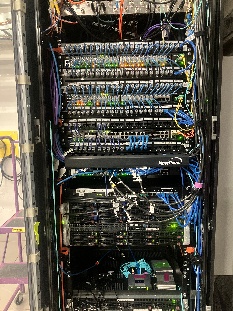

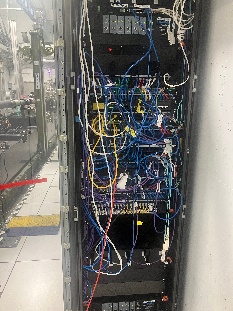

Progress in hutch cleanup has began with network switch rack organization in both XCS and XPP, below are before and after images taken from each:

XCS:

| Before | After |

|---|---|

XPP:

| Before | After |

|---|---|

IT Updates

Spencer, Aalayah I Omar Quijano

Network Reorganization

Over the past few months, the CDS-IT (hereafter IT) team has worked to upgrade and simplify the network setup across the NEH and FEH hutches, XRT, and the FEE. CXI is currently the only hutch that has not yet undergone the network restructuring.

The goal is to provide 100GbE stacking and/or uplinks for maximum reliability, along with multigigabit access. On the new switches, ports 1-24 provide 1000 Mbps connectivity and ports 25-48 provide 10000 Mbps connectivity. All ports provide PoE+, with a power budget of up to 1500 W.

Ruckus ICX 7850-48ZP

WEKA Home/FFB Cluster Upgrade

LCLS has two WEKA storage clusters: (i) one provides the home directories and software for all users and staff, and (ii) the other is the FFB for users' experiments. The previous release had a quota bug, so the latest version was installed to fix it and improve performance. The team is still working with WEKA to provide users with a report of their space usage (quota).

DSS and MON Nodes

There have been many incidents in which the Experiment Controls and DAQ teams were not aware of which DSS and MON nodes were available during a given experiment. To provide transparency across the teams, the IT team has created a Confluence page that explains the validation methodology and logs the currently functional DSS and MON servers. This saves the teams from guessing which nodes are available and lets the IT team fix those that are not. Soon, the IT team will deploy a new image with monitoring that sends alerts so that action can be taken in a timely manner.

SRCF Datamover Bandwidth Upgrade

The Data Management team requested more bandwidth for the SRCF datamovers to keep up with current data rates. A 2 x 10 Gb bond was created to support 20 Gbps of bandwidth. The Data Management team uses 5 servers as SRCF datamovers to support the various experiments.

Workstations and Servers Matrix

To organize, clean, and validate the current host database, the IT team checked each hutch for existing online and offline devices. After inspecting the host database in detail, the team gathered information with the objective of removing devices that are no longer required. This process involved the POCs, SEAs, instrument leads, and the IT team to ensure that the information was consistent. The team is still working with various staff to finalize this extensive activity.

Remote Operations Hardware Maintenance Schedule

Each hutch has a RealWear HMT-1 headset and a Double3 robot to make remote operations more efficient. The RealWear HMT-1 headset can be used for interactive, hands-free video conference calls (e.g., expert troubleshooting) and for documenting those calls by taking pictures or recording. The Double3 is a telepresence robot with video conferencing and remote driving capabilities. However, there has been a lack of support for these devices, so the IT team is working on weekly and monthly maintenance guides. In addition, each device will be transferred to its respective custodian, and more IT members will be trained so that they can assist the various staff. A weekly status report will be logged for the continued maintenance and support of these devices: ROH Maintenance Logs.

Integrate UED to LCLS Standards

An effort is in progress to bring UED in line with LCLS standards. UED now has a main system named ued-daq with multiple screens, which supports both LCLS and ASTA controls. The idea is to integrate the Accelerator Network into the LCLS-managed devices so that the current LCLS IOCs and DAQ systems can access the Accelerator PVs. This step will serve two key functions: (i) removing the UED local gateway in favor of the LCLS gateways, and (ii) allowing UED to gradually transition its control system to the LCLS environment.

New Ciara Purchase

A purchase of new workstations and 1U AMD Rome servers has been made, adding 10 control room workstations and 65 servers. The servers will be deployed across LCLS for new DAQ and control systems or to replace faulty ones. The workstations will be deployed across the various control rooms and hutches.

Pit Cleanup

Over the past few weeks, members of TID have been supporting the Pit Cleanup effort. Old infrastructure and non-working devices have been set aside to be sent to salvage. At this time, the team is trying to coordinate a time with the RPFO office to do the radiological survey for all unwanted hardware. Once the survey has been completed with the appropriate paperwork, TID staff will move the hardware to salvage.

Upcoming IT Tasks

The following are short-term tasks the IT team is working to deliver:

- CXI network upgrade.

- Switch tool for user management.

- Diskless server upgrade to provide resilience.

- Diskless monitoring to make sure all DSS and MON nodes are operational

- Extra 20 batch nodes for the FEH queue.

- Finalize the ioc-xrt-rec07 image to validate 10G network.

- Finalize and validate GNOME key binding shortcuts process.

- Deploy NoMachine extended capabilities to support remote operations.