- Build Workflow example

- TODO: Make a flow chart ppt when done creating

- Overall plan: the runner checks out the repo to /sdf/group/ad/eed/ad-build/<user>/ → the runner sends a request to the backend build cluster to start a new build container → the builder pod starts a new container on our ad-build cluster → the result is logged to the database

- Steps broken down:

The runner sends its request to the backend build cluster through the REST API, as a POST request to the 'build' endpoint with data like this:

```shell
curl -X POST \
  'https://accel-webapp-dev.slac.stanford.edu/api/cbs/v1/component/build' \
  -H 'accept: application/json' \
  -H 'Content-Type: application/json' \
  -d '{
    "organization": "str",
    "componentName": "str",
    "branchName": "str",
    "actionUser": "str"
  }'
```
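For reference, the same request can be issued from Java with the JDK's built-in java.net.http client (Java 11+). This is a minimal sketch, not the runner's actual code: the class and method names are hypothetical, and the hand-built JSON assumes values that contain no quotes or backslashes (a JSON library would be safer).

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Hypothetical helper that mirrors the curl command above.
final class BuildRequestClient {
    // Endpoint taken from the curl example.
    static final String BUILD_URL =
        "https://accel-webapp-dev.slac.stanford.edu/api/cbs/v1/component/build";

    private BuildRequestClient() {}

    // Builds the JSON body by hand to stay dependency-free; values are NOT escaped,
    // so this assumes plain names without quotes or backslashes.
    static String jsonBody(String organization, String componentName,
                           String branchName, String actionUser) {
        return String.format(
            "{\"organization\":\"%s\",\"componentName\":\"%s\","
                + "\"branchName\":\"%s\",\"actionUser\":\"%s\"}",
            organization, componentName, branchName, actionUser);
    }

    // Same method, URL, and headers as the curl command; no network call happens here.
    static HttpRequest buildRequest(String body) {
        return HttpRequest.newBuilder(URI.create(BUILD_URL))
            .header("accept", "application/json")
            .header("Content-Type", "application/json")
            .POST(HttpRequest.BodyPublishers.ofString(body))
            .build();
    }

    // Sends the request and returns the raw response body (JSON from the backend).
    static String send(HttpRequest request) throws IOException, InterruptedException {
        HttpResponse<String> response = HttpClient.newHttpClient()
            .send(request, HttpResponse.BodyHandlers.ofString());
        return response.body();
    }
}
```

Only send(...) touches the network; keeping buildRequest(...) separate makes the request shape easy to check offline.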

- The backend builder pod queries the component database and retrieves the build environment based on the organization, componentName, and branchName.

- The backend builder pod then starts the build environment using build-deployment.yml with the parameters (componentName, branchName, imageName, actionUser, buildInstructions).

- Use Kustomize (see the Kubernetes docs page "Declarative Management of Kubernetes Objects Using Kustomize") to configure build-job.yml for the component.

- build-job.yml will be a Job with a volumeMount to s3df-dev-container-ad-group, plus template parameters that label the container (componentName-branchName), set the image name, and give the command that starts the build (buildInstructions).

- The backend builder pod then logs the build to branches_in_development.

- The backend builder pod sends the name of the running build container back to the runner.

- The runner's last job is to print the file path where the container is being built, plus other useful info such as 'status'.

- If triggered by an action (push to main / pull request), we want to provide a report to the user, which can include:

- the file path of the build output

- status (build succeeded / failed)

- time duration

- LAST STEP: have the build container push its artifact to a location where a test container can download it and then run its tests.
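The componentName-branchName label in build-job.yml has to satisfy Kubernetes naming rules: container names are RFC 1123 labels (lowercase alphanumerics and '-', at most 63 characters, starting and ending with an alphanumeric), and branch names like feature/My_Branch break those rules. A hypothetical helper that derives a safe name, assuming simple replacement of invalid characters is acceptable, might look like this:

```java
import java.util.Locale;

// Hypothetical helper: turns componentName + branchName into an RFC 1123 label.
final class BuildJobNames {
    // Maximum length of an RFC 1123 label (container names, label values).
    static final int MAX_LABEL_LENGTH = 63;

    private BuildJobNames() {}

    static String jobName(String componentName, String branchName) {
        String raw = (componentName + "-" + branchName).toLowerCase(Locale.ROOT);
        // Replace every character outside [a-z0-9-] (e.g. '/', '_', '.') with '-'.
        String cleaned = raw.replaceAll("[^a-z0-9-]", "-");
        // Truncate first, so a '-' exposed by the cut is stripped below.
        if (cleaned.length() > MAX_LABEL_LENGTH) {
            cleaned = cleaned.substring(0, MAX_LABEL_LENGTH);
        }
        // Labels must start and end with an alphanumeric character.
        cleaned = cleaned.replaceAll("^-+", "").replaceAll("-+$", "");
        if (cleaned.isEmpty()) {
            throw new IllegalArgumentException("name has no usable characters");
        }
        return cleaned;
    }
}
```

For example, jobName("test-ioc", "feature/My_Branch") gives "test-ioc-feature-my-branch". Truncation can make two long branch names collide; appending a short hash of the full name is one way around that if it becomes a problem.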

Backend meeting with Claudio/Jerry/Patrick 5-28-24

- The backend should do almost everything: capture status, log, start builds, etc.

- Talked about API versioning.

- Creating a new branch: do we support only the CLI, or also plain git? We are still thinking about this. The CLI can check out the component repo using its URL.

- Users should be able to build remotely (using ad-build) or locally in a container.

- The CLI should have a 'completed' command that merges to main.

- create a tag should be associated with the version that a

- Devs need a tool that says "starting from now, this version is associated with this branch." The problem: when a user updates an old branch or old version and creates a new tag, that tag must be associated with the branch it belongs to. We have to manage different versions at the same time.

- Have main always point to the most recent version.

- Have each development branch point to its respective version.

- Versions are managed automatically by the backend.

- We should have external and internal components, with a way to check out and build an external component without it being tracked by the build system. The CLI should work the same way for both.

- Ex: the EPICS component should be an external component.

- We need to carve out space for each user.

- Every user can have environment variables for their GitHub username and S3DF username, which we can pass to the backend REST API if needed.

- TODO: come up with the HTTP headers the CLI sends to the backend, and fill out his Excel sheet for cbs-api.

When users create a repo or a new branch, they need authorization; this is the information you (may) pass to the backend REST API so it can authorize the request.

TODO: Add Claudio to the ad-build-test org. Claudio wants GitHub to store the Docker images for now, but the long-term goal is a Docker registry on S3DF.

core-build-system notes

- Example: adding fields to the component schema and to the API. Which classes to change:

- Rest API:

- controller/ComponentController.java → @RestController()

- This is where you define the API endpoints

- @PostMapping, @GetMapping, @DeleteMapping

- mapper/ComponentMapper.java → @Mapper

- Only needed when a field has fields of its own and you need to map it to the main schema

- Service:

- This is the backend logic that the REST API endpoints call

- service/ComponentService.java → @Service()

- Ex: create() checks for conflicts, checks dependencies, creates the component and saves it to MongoDB, then returns the created component's ID to the user

- Repository:

- This is backend logic that extends MongoRepository with repository queries such as 'boolean existsByName(String name)'

- repository/ComponentRepository.java

- Schema:

- model/Component.java

- dto/ComponentDTO.java → @Schema()

- This is where you define the db document schema

- dto/newComponentDTO.java → @Schema()

- What's the difference between componentDTO and newComponentDTO?

- Exception Handling:

- exception/ComponentAlreadyExists → @ResponseStatus

- exception/ComponentNotFound → @ResponseStatus

- Testing:

- test/....../controller/ComponentControllerTest.java

- test/....../service/ComponentServiceTest.java
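To make the layering concrete, here is a plain-Java sketch of the create() path from the notes, with no Spring or MongoDB: an in-memory class stands in for ComponentRepository (which in the real code extends MongoRepository, where Spring Data derives existsByName from the method name), and the service receives it through its constructor. The field names and the rule that every dependency must already exist are assumptions for illustration.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.UUID;

// Model (model/Component.java): what gets stored; a MongoDB document in the real code.
record Component(String id, String name, List<String> dependencies) {}

// Input DTO (dto/newComponentDTO.java): what the client sends; it has no id yet.
record NewComponentDTO(String name, List<String> dependencies) {}

// Stand-in for repository/ComponentRepository.
interface ComponentRepository {
    boolean existsByName(String name);
    Component save(Component component);
}

// In-memory implementation so the sketch runs without MongoDB.
class InMemoryComponentRepository implements ComponentRepository {
    private final Map<String, Component> byName = new HashMap<>();

    @Override
    public boolean existsByName(String name) {
        return byName.containsKey(name);
    }

    @Override
    public Component save(Component component) {
        byName.put(component.name(), component);
        return component;
    }
}

// Mirror exception/ComponentAlreadyExists and exception/ComponentNotFound;
// in Spring each would also carry @ResponseStatus to choose the HTTP status.
class ComponentAlreadyExists extends RuntimeException {
    ComponentAlreadyExists(String name) {
        super("Component already exists: " + name);
    }
}

class ComponentNotFound extends RuntimeException {
    ComponentNotFound(String name) {
        super("Component not found: " + name);
    }
}

// Service layer: logic and validation, with the repository injected.
class ComponentService {
    private final ComponentRepository repository;

    ComponentService(ComponentRepository repository) {
        this.repository = repository;
    }

    // create(): check for a conflict, check dependencies, save, return the new id.
    String create(NewComponentDTO dto) {
        if (repository.existsByName(dto.name())) {
            throw new ComponentAlreadyExists(dto.name());
        }
        for (String dependency : dto.dependencies()) {
            if (!repository.existsByName(dependency)) {
                throw new ComponentNotFound(dependency);
            }
        }
        Component saved = repository.save(new Component(
            UUID.randomUUID().toString(), dto.name(), List.copyOf(dto.dependencies())));
        return saved.id();
    }
}
```

The controller would only translate HTTP to a call to create(); keeping the checks in the service is what makes them testable with the in-memory repository.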

Proof of concept development

Used a ConfigMap to pass information from the backend to the build container, so the build container can echo the build parameters passed in.

- Commands:

```shell
kubectl create configmap build-config --from-file=build-request.properties
kubectl describe configmaps build-config
kubectl create -f build-config.yml
```
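With --from-file=build-request.properties, the ConfigMap stores the whole file under the key build-request.properties, and mounting the ConfigMap as a volume exposes that key as a file of the same name. Assuming a mount at /config (a hypothetical path) and simple key=value contents, the echo step could be sketched in Java as:

```java
import java.io.IOException;
import java.io.Reader;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Properties;
import java.util.TreeSet;

final class BuildConfigReader {
    // Hypothetical mount: the build-config ConfigMap mounted as a volume at /config.
    static final Path DEFAULT_PATH = Path.of("/config/build-request.properties");

    private BuildConfigReader() {}

    // Parses key=value lines (standard java.util.Properties format).
    static Properties load(Reader reader) throws IOException {
        Properties props = new Properties();
        props.load(reader);
        return props;
    }

    static Properties load(Path path) throws IOException {
        try (Reader reader = Files.newBufferedReader(path)) {
            return load(reader);
        }
    }

    // Renders the parameters one per line, sorted by key, for the echo step.
    static String echo(Properties props) {
        StringBuilder out = new StringBuilder();
        for (String key : new TreeSet<>(props.stringPropertyNames())) {
            out.append(key).append('=').append(props.getProperty(key)).append('\n');
        }
        return out.toString();
    }

    public static void main(String[] args) throws IOException {
        System.out.print(echo(load(DEFAULT_PATH)));
    }
}
```

Sorting the keys keeps the echoed output stable between runs, which makes it easier to compare against what the backend sent.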

TODO: Work on a test project such as test-ioc, with a BOM. Claudio can use this for testing.

- For now, the list of components can be just Boost and EPICS.

- Then get to the point where a pod runs and start_build.py can read the IOC's BOM.

TODO: Design the testing flow.

- Unit tests first: the ones you can run right after the build.

- We may want a specific directory name whose tests the container test script runs.

- Come up with a backend API for it, which I think just records results to the DB.

- We can assume basic/unit tests run automatically when a build is started.

Flow:

- The user runs a CLI command, $ bs run build, which does the build flow.

- The CLI passes the component, branch, and user headers to the backend.

- The backend looks in the component DB for the development image.

- The backend mounts /mnt at /sdf/scratch/ad/ad-build/ for downloading the source code.

- The backend mounts /build at /sdf/groups/eed/ad/ad-build/ for the build scripts.

- The backend mounts the ConfigMap at /config/build_config.json for the build request information.

- The backend starts the build container and calls start_build.py.

- start_build.py performs the build and outputs its results at the top of the repo directory.

- start_build.py then calls start_test.py.

- start_test.py looks in the source code's /test/unit_tests/ directories and runs those tests.
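The test-discovery step of start_test.py could work roughly like the sketch below. It is written in Java to match the rest of these notes rather than Python, treats test/unit_tests as relative to the repo root, and assumes every regular file in that directory is an executable test that signals failure with a non-zero exit code; all of these are assumptions.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Stream;

final class UnitTestDiscovery {
    // Directory convention from the flow notes, assumed relative to the repo root.
    static final String UNIT_TEST_DIR = "test/unit_tests";

    private UnitTestDiscovery() {}

    // Regular files directly under <repoRoot>/test/unit_tests, sorted by path so the
    // run order is reproducible. A missing directory simply means "no unit tests".
    static List<Path> findUnitTests(Path repoRoot) throws IOException {
        Path dir = repoRoot.resolve(UNIT_TEST_DIR);
        if (!Files.isDirectory(dir)) {
            return List.of();
        }
        try (Stream<Path> entries = Files.list(dir)) {
            return entries.filter(Files::isRegularFile).sorted().toList();
        }
    }

    // Runs each test as an executable from the repo root; true only if all exit with 0.
    static boolean runAll(Path repoRoot, List<Path> tests)
            throws IOException, InterruptedException {
        boolean allPassed = true;
        for (Path test : tests) {
            Process process = new ProcessBuilder(test.toAbsolutePath().toString())
                .directory(repoRoot.toFile())
                .inheritIO()
                .start();
            if (process.waitFor() != 0) {
                allPassed = false;
            }
        }
        return allPassed;
    }
}
```

Returning an empty list for a missing directory lets components without unit tests still pass the automatic test step instead of failing the build.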

Group meeting 6-6-24

Group Meeting 6-6-24 - LCLSControls - SLAC Confluence

Backend core-build-system codebase introduction

eed-web-application/core-build-system (GitHub)

Meet with Claudio to introduce codebase 6-25-24

Hi Claudio, let us know when you can introduce the codebase.

I think a brief overview of the different components would be helpful:

Rest Controller/API

- controller/

- dto/

- mapper/

Service

Repository

Model

An example of how it all ties together would be helpful: how we go from a user making an API request to start a build, to the code calling a service that starts a k8s pod, to reading/writing the MongoDB repository, and then back to the API returning the status as JSON.

Also, what is your workflow for building, testing, and deploying? It looks like a combination of Gradle, Docker Compose, and GitHub Actions.

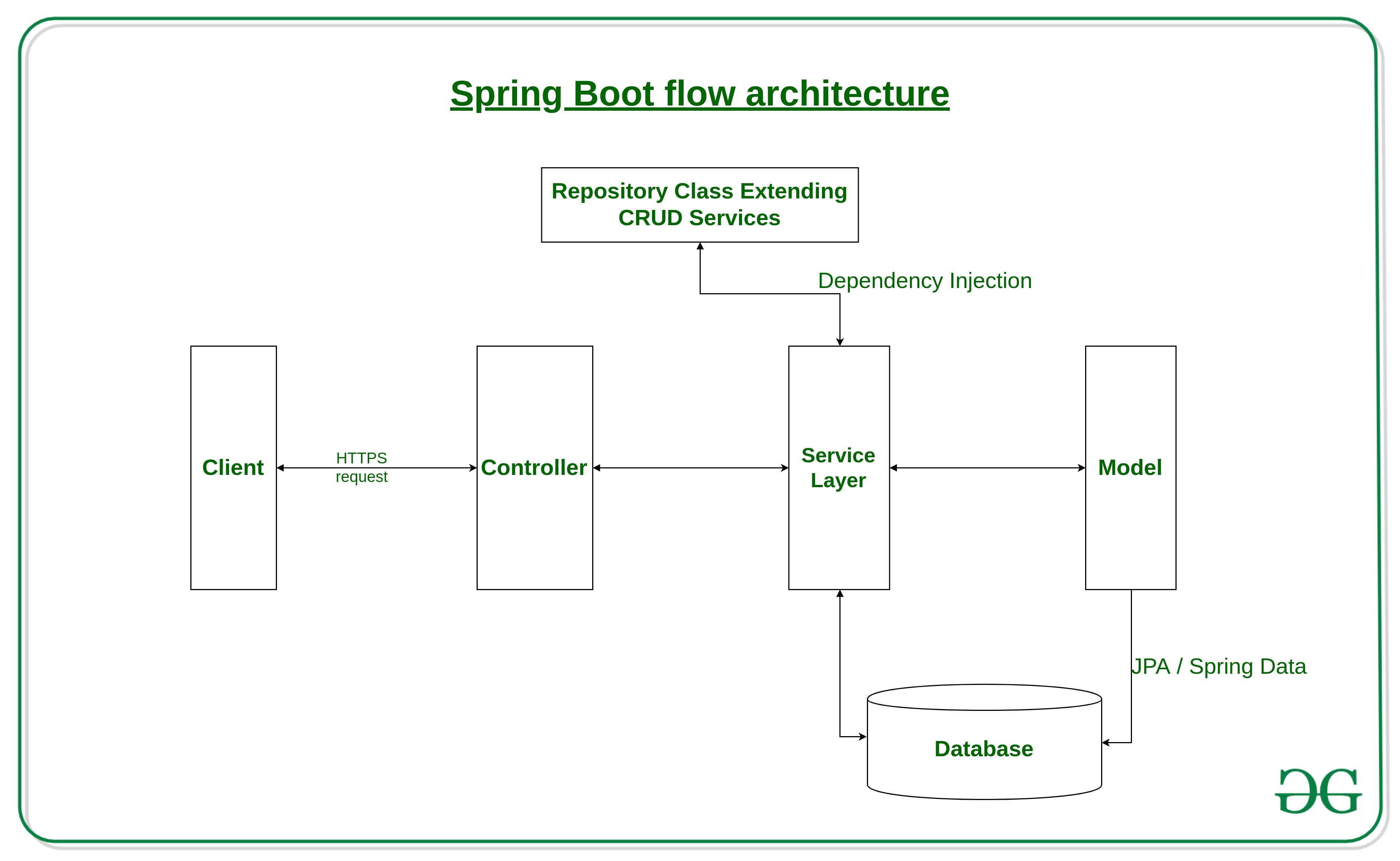

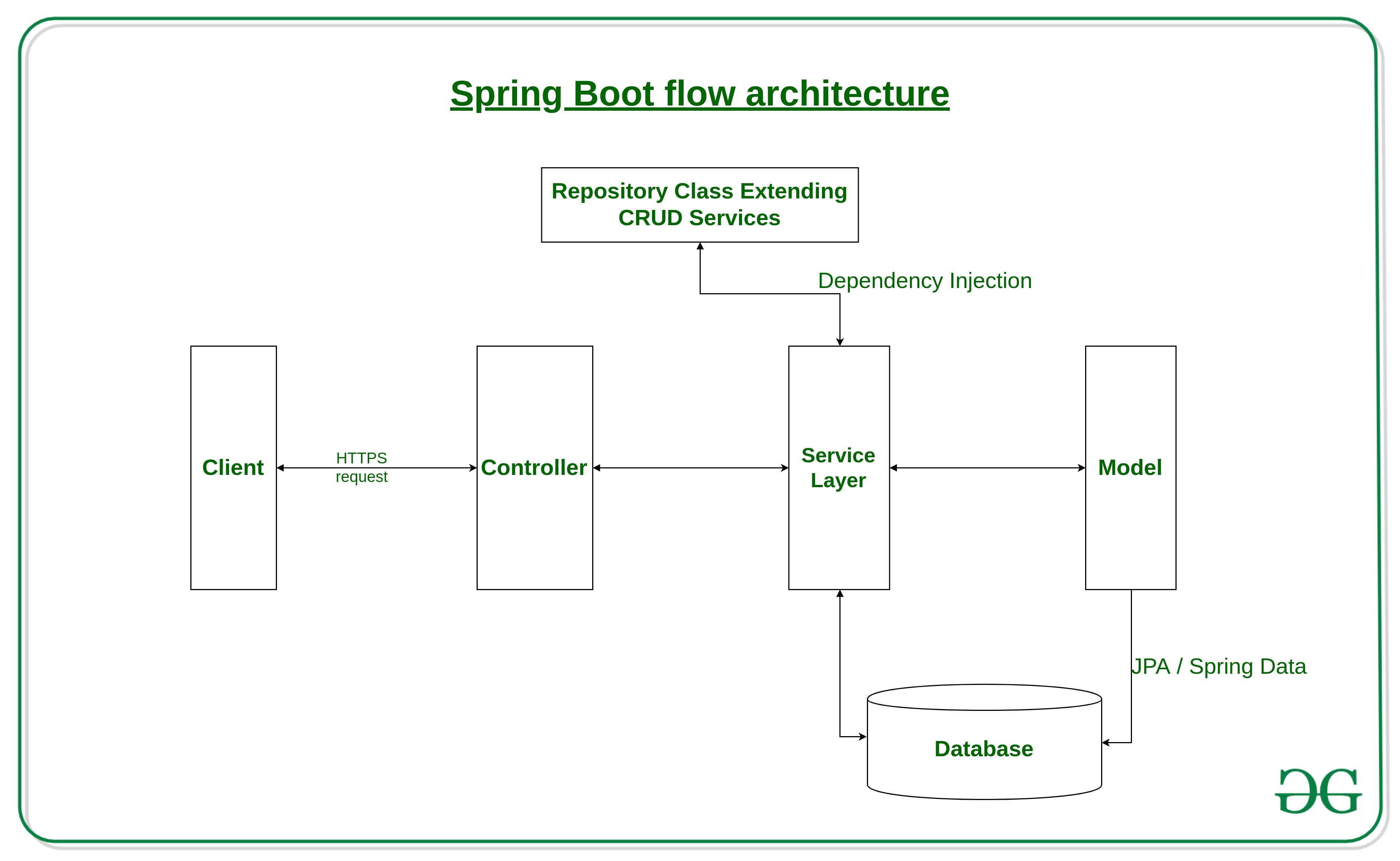

Spring now does a lot of things through bean autowiring, so you will discover that some objects are used but never instantiated, because the bean life cycle is managed internally by Spring. Generally, a Spring app for a REST backend is composed of:

RestController -> Service -> Repository

RestController => receives data from the UI or web calls and performs the authentication and authorization checks

Service => contains the high-level API used by the RestController to perform operations

Repository => abstracts the data backend (database, filesystem, and other microservices) and exposes a low-level API that physically manages the data

All my apps, and Spring apps in general, are structured this way; it is the proper Spring paradigm.

Notes during meeting

- The build is done through Gradle and a GitHub Action that:

1) executes the build

2) starts up the backend for the tests

3) executes the tests

4) builds the Docker image

- A big benefit of Java Spring is dependency injection: objects don't hardcode the creation of their dependencies, and because the framework (Spring) injects them, tests can inject their own dependencies instead.
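A small illustration of why dependency injection helps testing, in plain Java without Spring: the service receives its BuildLauncher (a hypothetical interface standing in for "start a build pod") through the constructor, so a test can pass in a fake that records calls instead of creating pods. In Spring, a bean with a single constructor like BuildService gets its dependencies injected through it automatically, which is what Lombok's @AllArgsConstructor sets up.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical abstraction over "start a build pod"; a real implementation
// would call the Kubernetes API.
interface BuildLauncher {
    String launch(String componentName, String branchName);
}

// The service never constructs its launcher; it receives one.
class BuildService {
    private final BuildLauncher launcher;

    BuildService(BuildLauncher launcher) {
        this.launcher = launcher;
    }

    // Validation lives in the service; the launcher only does the side effect.
    String startBuild(String componentName, String branchName) {
        if (componentName == null || componentName.isBlank()) {
            throw new IllegalArgumentException("componentName is required");
        }
        return launcher.launch(componentName, branchName);
    }
}

// Test double: records calls instead of creating pods, so the service logic
// can be unit-tested without a cluster.
class RecordingLauncher implements BuildLauncher {
    final List<String> calls = new ArrayList<>();

    @Override
    public String launch(String componentName, String branchName) {
        calls.add(componentName + "@" + branchName);
        return "fake-pod-" + calls.size();
    }
}
```

Swapping RecordingLauncher for a Kubernetes-backed implementation changes nothing in BuildService, which is the point of injecting the dependency rather than creating it inside the class.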

- Helpful diagram of Java Spring architecture:

The flow of a Spring Boot application typically follows this sequence:

- Client Request

- Controller (API Layer)

- Service (Business Logic Layer)

- Repository (Data Access Layer)

- Database

- Response to Client

Begin actual meeting notes here

- A Spring app is composed of configuration classes, repositories (classes that access the data directly), and a service layer (app logic). Any output from Spring is serialized to JSON.

- Configuration package

- The configuration order doesn't need to be tweaked manually; Spring handles it for you.

- Instantiates classes

- Migration

- Package that contains all the data needed for the microservices

- Model

- The object data schema when using an SQL database; for MongoDB it is a document.

- DTO

- Data Transfer Object: the object used as input to and output from the REST API.

- Usually a one-to-one mapping from model to DTO, but a model can have multiple DTOs.

- Ex: for the component model there is a ComponentDTO and a ComponentSummaryDTO.

- Repository

- Always works on the model, whether SQL or NoSQL.

- Reads and writes data.

- Accesses storage, a DB, or an external service. Our 'repositories' are GitHub, Kubernetes, and our MongoDB.

- Service

- The constructor is hidden; instead, the Lombok library generates it from a one-line @AllArgsConstructor annotation.

- The service holds the logic and validation.

- What is the mapper? It automates the conversion between DTOs and models. Best practice is to put logic in the application, so you can change the database more easily.

- All the logic and input validation lives in the service layer.

- The mapper converts DTO to model and model to DTO. Example: a service gets a Java object from the model, maps it to a DTO, and returns that to the user.

- There is logic to prevent locking of the database for every API that touches the database

- Rest Controller

- Entry point from the clients

- Authorization

- Best practice when updating the API is to create a new API directory instead of altering the old one; that's why there is a 'v1' directory.

- Ex: in BuildController.java, @RestController makes the class a Spring bean that serves REST requests, @AllArgsConstructor generates the class constructor, and @RequestMapping sets the base path of the URL endpoint. Spring handles the HTTPS requests automatically.

- The RestController takes care of authorization as well.

- Types of authorization

- open - can do anything

- @PreAuthorize

- @PostAuthorize

- Spring is one of the most powerful frameworks for database, REST API, and authorization apps.

- Spring automatically injects beans into classes.

- The @Repository annotation tells Spring to instantiate a repo class such as ComponentBranchBuildRepositoryImpl as a bean automatically, and the @Autowired annotation tells Spring to inject a matching bean from the application context wherever it is needed.

- Test

- I start up the backends I need: MongoDB, minikube on Docker, etc. This way we can test any images. These tests are also used for merge requests on core-build-system, and a local git server is used for testing. The pod is executed in minikube.

- test services in test/java/edu.../service/

- Example:

- If you know Java, you can get started on the backend code within 1-2 weeks.

- Multi-threaded

- TODO: Digest this, and get list of questions to ask for understanding

- What dev environment does Claudio use for this? I have many undefined symbols.

- To fix: press Ctrl+Shift+P and run "Java: Compile Workspace". But I get a Gradle failure: 'username must not be null!'.
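The Model, DTO, and Mapper relationship from the notes above, sketched with Java records. The fields are illustrative, not the real schema. The mapping is hand-written here; if the project's @Mapper is MapStruct's (an assumption), MapStruct generates equivalent methods from an annotated interface.

```java
import java.util.List;

// Model (model/Component.java): the stored MongoDB document; fields are illustrative.
record Component(String id, String name, String url, List<String> branches) {}

// Full DTO for detail responses.
record ComponentDTO(String id, String name, String url, List<String> branches) {}

// Smaller DTO for list responses: one model, more than one DTO.
record ComponentSummaryDTO(String id, String name) {}

// Hand-written equivalent of a generated mapper: model -> DTO and DTO -> model.
final class ComponentMapper {
    private ComponentMapper() {}

    static ComponentDTO toDTO(Component model) {
        return new ComponentDTO(model.id(), model.name(), model.url(),
            List.copyOf(model.branches()));
    }

    static ComponentSummaryDTO toSummaryDTO(Component model) {
        return new ComponentSummaryDTO(model.id(), model.name());
    }

    static Component toModel(ComponentDTO dto) {
        return new Component(dto.id(), dto.name(), dto.url(),
            List.copyOf(dto.branches()));
    }
}
```

In the service, the typical pattern is to load a model through the repository (for example with findById) and return ComponentMapper.toDTO(model), so the REST layer never exposes the stored document directly.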

FAQs (todo)

- How is this built?

- Gradle: use the ./gradlew script in the project. However, the project is fully built/tested/deployed by the GitHub Actions workflow in the core-build-system-deployment repo.

- For example, "./gradlew build" also runs the tests, which fail unless you start Docker Compose and minikube. Instead, use "./gradlew assemble" to just check that the app builds and produce the jar. Real testing is done through GitHub Actions, which starts up the backend and runs the tests.

- How I set up my VS Code environment

- Installed the Java extension and disabled "Java: on save: organize imports" (to prevent VS Code from adding its own imports). Then it should be good to go.

- Use "./gradlew assemble" to build. For testing/deploying, use GitHub Actions (unless you can get Docker Compose and minikube working locally).

- How tests are run

- I recommend looking through one of the BuildAndTest workflow runs in GitHub Actions, then doing a Ctrl+F for a test class such as ComponentControllerTest; that gives you the tests for the controller (API).

Other - gradle / java setup

In .bashrc (or in /etc/environment, which does not expand $JAVA_HOME, so write out the full JDK path in PATH there). JAVA_HOME must be set before PATH references it:

```shell
export JAVA_HOME=/usr/lib/jvm/java-21-openjdk-amd64
export PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin:$JAVA_HOME/bin"
```

I kept getting Gradle errors saying it couldn't find my Java, even though Java was installed properly and could compile and run a basic Java file.

- To fix this, I removed my entire ~/.gradle/ directory, and it worked.

For the core-build-system itself

Add a gradle.properties file at the project root that looks like the following:

```properties
gpr.user=<github username>
gpr.key=<token>
```

- Then, from the project root, run ./gradlew assemble.

If using VS Code (highly recommended, since the project is heavily object-oriented and code indexing is a blessing):

- I found that not all symbols were resolved, so do this:

- Install the Java extensions and the Spring Boot extensions.

Refresh the project in VS Code:

- Open the Command Palette (Ctrl+Shift+P).

- Select Java: Clean Java Language Server Workspace.

- Then select Java: Update Project.

- Add the JDK to settings.json if it isn't already there:

```json
"java.home": "/usr/lib/jvm/java-21-openjdk-amd64"
```