Introduction

Do us a favor and give a thumbs up or leave a comment to let us know you saw this newsletter!

Check out the RIX newsletters when you get a chance as well!

PMPS UI Fixes and Updates

We had a few fixes and feature updates for the PMPS UI diagnostic tool in July.

- Fixed a bug where the rate and transmission readbacks on the line beam parameters page were showing the live totals instead of the controlled readback value, causing some confusion.

- Added a default "Beam Permitted: False" filter on the fast faults page. This makes the GUI load slightly faster because it doesn't have to render all the fast fault widgets on load, and it lets us get to the most important view first.

- Disabled the Grafana web views for now, since they were causing crashes on operator consoles.

- Rearranged the "Arbiter Outputs" page, where it was previously difficult to see which status was connected to which PLC.

Hutch Python and Conda Updates

The following environments were released during May, June, and July:

- PCDS Conda Release Notes: pcds-5.4.0

- PCDS Conda Release Notes: pcds-5.4.1

- PCDS Conda Release Notes: pcds-5.4.2

pcds-5.4.2 is the first environment with "gentler" dependency updates to minimize the potential for picking up unexpected behavior on update.

Note that any applications using pcds-5.3.1 should update if they plan to use hutch-python in an experiment setting. There is a bug in this version that can lead to dangerous results: the histories of separate IPython sessions can get mixed during execution, so someone else's "move" command can end up in your history, and it is very easy to accidentally run their command instead of re-running yours.

Python Performance Project (Ongoing)

We've been working on tracking down the reasons why various Python apps are slow to load (vs. expectations) and trying to optimize what we can. To this end, we have already found a number of potential improvements and work is ongoing. Some of these are tricky because, while startup speed is important, having a design tradeoff that adds slowness later can be just as bad.

A project page has been opened here: Hutch Python/Lightpath Device Loading Slowdown Findings/Fixes

L2HE Update

Margaret Ghaly, Vincent Esposito

Over the past couple of months, the ECS HE team has been making significant progress on the new controls infrastructure designs. The cost estimates for each hutch have been mostly completed and we are approaching PDR-level readiness in many areas. PDR is now planned for September 2022 (exact date TBD).

The team is working on refining the designs and continues to focus on the PDR, collaborating closely with the mech-E leads and Cosylab.

Mitch Cabral, a new member of ECS, has joined the effort and is working on integrating Jama, a collaborative web-based tool for reviewing, approving, and exporting system requirements for release. We have begun capturing L2SI Motion and Vacuum System Functional Requirement Specifications (FRS), and although these requirements are still being reviewed and revised, the hope is to make these documents more accessible for projects to follow, and possibly establish these as standards for our control systems. Transferring to Jama will also allow for agile navigation to find conflicts with upstream or downstream alterations in future project requirements between stakeholders and the team.

We released a major ICD detailing the R2A2 between ECS and other groups in HE. It details many important divisions of work and everyone should at least take a quick look through to better understand what ECS does:

https://slac.sharepoint.com/sites/pub/Publications/LCLSII-HE-1.4-IC-0488.pdf

MEC-U Update

Electrical safety compliance has been a primary focus of recent collaboration between the LLNL, LLE, and ECS controls teams in MEC-U. We convened a meeting between the Collective (handle of the partner lab collaboration on controls for MEC-U) AHJ and LCLS's SO to begin to discuss how the electrical designs of all the partners will be accepted by SLAC ES&H. The discussion was fruitful and identified a number of action items that will ensure MEC-U's laser systems can be installed without issue.

Thanks to the efforts of the ECS division, the patience of MEC-U project management, and the collaborative spirit of the other labs in the Collective, we are happy to report that the MEC-U control system will be developed with a uniform technology stack throughout the experiment, beam delivery, and the LLNL and LLE laser systems. This result is a reflection of the ECS team's dedication to their work and their commitment to the support of the MEC-U project. Writing as PD department head, I want to say I am very proud and grateful for the team's candor and stamina in their presentations to the Collective.

Cosylab was introduced to LLNL and the ECS workflow with Cosylab was demonstrated. Thanks to Jing, Maggie and Dan for establishing and running a very productive and exemplary workflow with Cosylab. This also played a large part in reassuring our partners in the Collective of the feasibility of using a common control system stack.

We have been working on the resource plan. The MEC-U resource plan has been integrated with ECS resource allocation planning. A SOW for engineering support for MEC-U control system design has been sent out for review.

Lightpath Campaign

We've been working to rebuild the lightpath application, a tool that aims to give a high-level summary of the beam, where it's pointing, and which devices are blocking. In order to properly represent the facility, significant changes were made to both how lightpath organizes devices and how those devices are represented. As of the writing of this newsletter, we have completed the major infrastructural changes to lightpath, implemented a new device interface, and begun to spot check the app's performance for select end stations.

Design details and FAQs are being gathered at this page, which (like the lightpath app) is a work in progress.

We look forward to the redeployment of this tool with the hope that it will more accurately track beamline state, addressing these kinds of scenarios.

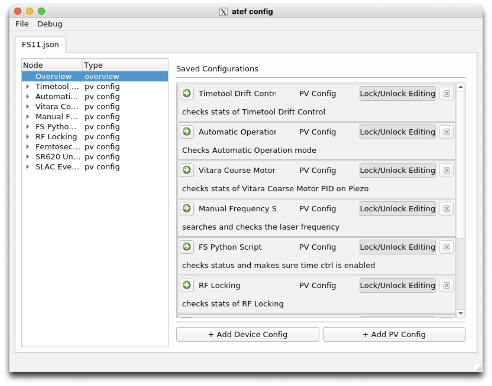

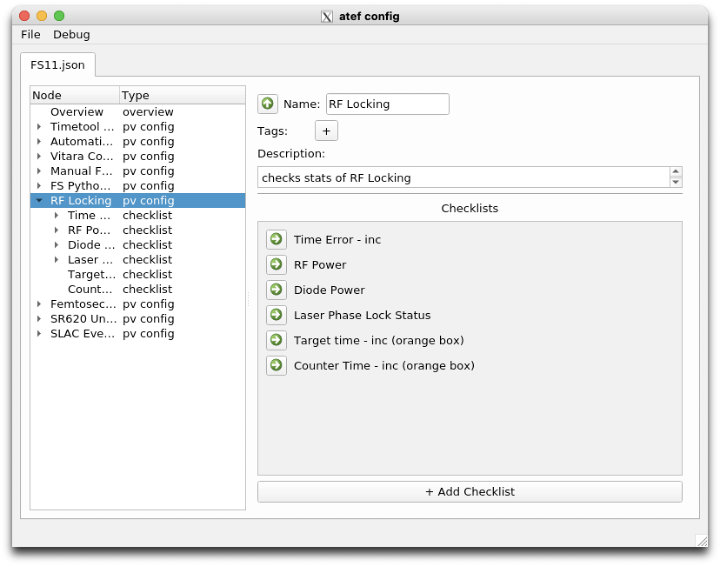

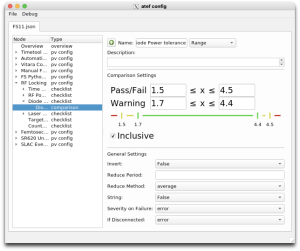

ATEF

atef has seen some improvements since our last update:

- The atef passive check GUI is now easier to use and more feature-complete

- ECS engineers who work in the laser hall have been trialing ATEF.

  - They have been providing the development team with helpful and actionable feedback (thanks, Andrew Liggett and Christina Pino!)

  - See below for a few screenshots of their effort, courtesy of Andrew Liggett

- Tool configurations have been added

  - The first tool is "ping" - verifying that a given host is online prior to starting a test
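
As a rough illustration of the "ping" idea above, the sketch below checks that a host answers before running a test. It is not atef's implementation: the function names are hypothetical, and it assumes a Linux-style `ping` (`-c` for packet count, `-W` for the timeout in seconds). The `run` argument exists only so the check can be exercised without a network.

```python
import subprocess
from typing import Callable, List


def build_ping_command(host: str, count: int = 1, timeout_s: int = 2) -> List[str]:
    """Build a Linux-style ping command (assumption: -c count, -W seconds)."""
    return ["ping", "-c", str(count), "-W", str(timeout_s), host]


def host_is_online(host: str, run: Callable = subprocess.run) -> bool:
    """Return True if ping exits with status 0, i.e. the host replied."""
    result = run(
        build_ping_command(host),
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
        check=False,
    )
    return result.returncode == 0


def run_test_if_online(
    host: str, test: Callable[[], None], run: Callable = subprocess.run
) -> bool:
    """Run `test` only when the host answers; report whether it ran."""
    if not host_is_online(host, run=run):
        return False
    test()
    return True
```

Gating the test on the ping result means an offline host is reported as "not reachable" rather than producing a wall of unrelated check failures.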

There is also much work left to be done. Next on our list is a final restructuring of the passive check mechanism. The result of this effort, which is now underway, will allow more flexibility for the user to group their checks in intuitive ways. We will also be integrating dynamic values from a variety of sources into the comparison mechanism, meaning that PV to PV comparisons will be a possibility.
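
To make the PV to PV comparison idea concrete, here is a minimal sketch in which the expected value is itself read from a second PV instead of being a fixed number. It does not use atef's API: the function, the PV names, and the dictionary standing in for a control system read are all hypothetical.

```python
import math
from typing import Callable


def pv_values_match(
    get_value: Callable[[str], float],
    pv_a: str,
    pv_b: str,
    abs_tol: float = 1e-6,
) -> bool:
    """Compare the current values of two PVs within an absolute tolerance."""
    return math.isclose(get_value(pv_a), get_value(pv_b), rel_tol=0.0, abs_tol=abs_tol)


# Stand-in for a real control system read (hypothetical PV names and values).
fake_pvs = {
    "DEMO:MOTOR:SETPOINT": 12.500,
    "DEMO:MOTOR:READBACK": 12.503,
}

within_loose_tolerance = pv_values_match(
    fake_pvs.__getitem__, "DEMO:MOTOR:SETPOINT", "DEMO:MOTOR:READBACK", abs_tol=0.01
)
within_tight_tolerance = pv_values_match(
    fake_pvs.__getitem__, "DEMO:MOTOR:SETPOINT", "DEMO:MOTOR:READBACK", abs_tol=0.001
)
```

Passing the reader in as a callable keeps the comparison logic independent of where the dynamic value comes from.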

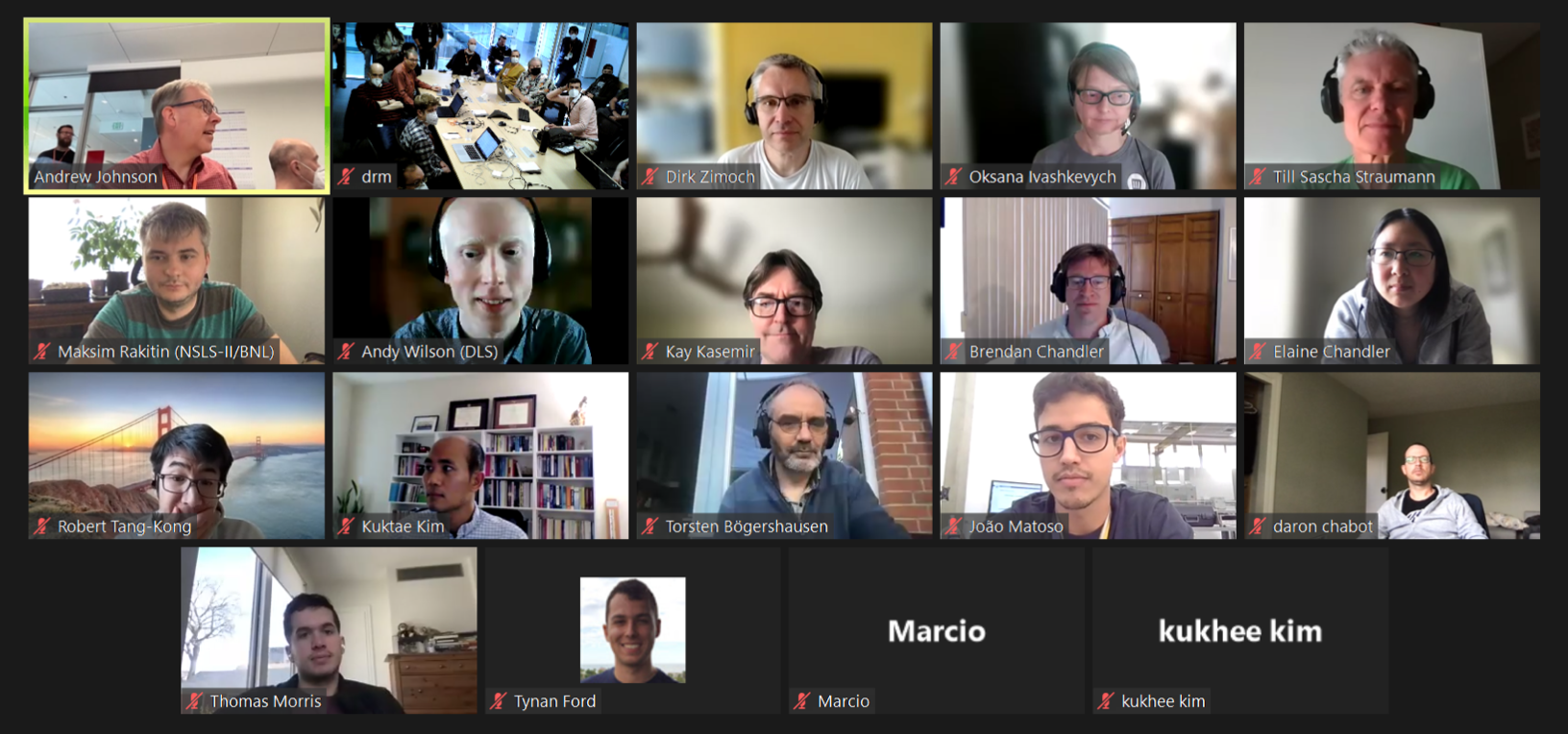

EPICS Codeathon

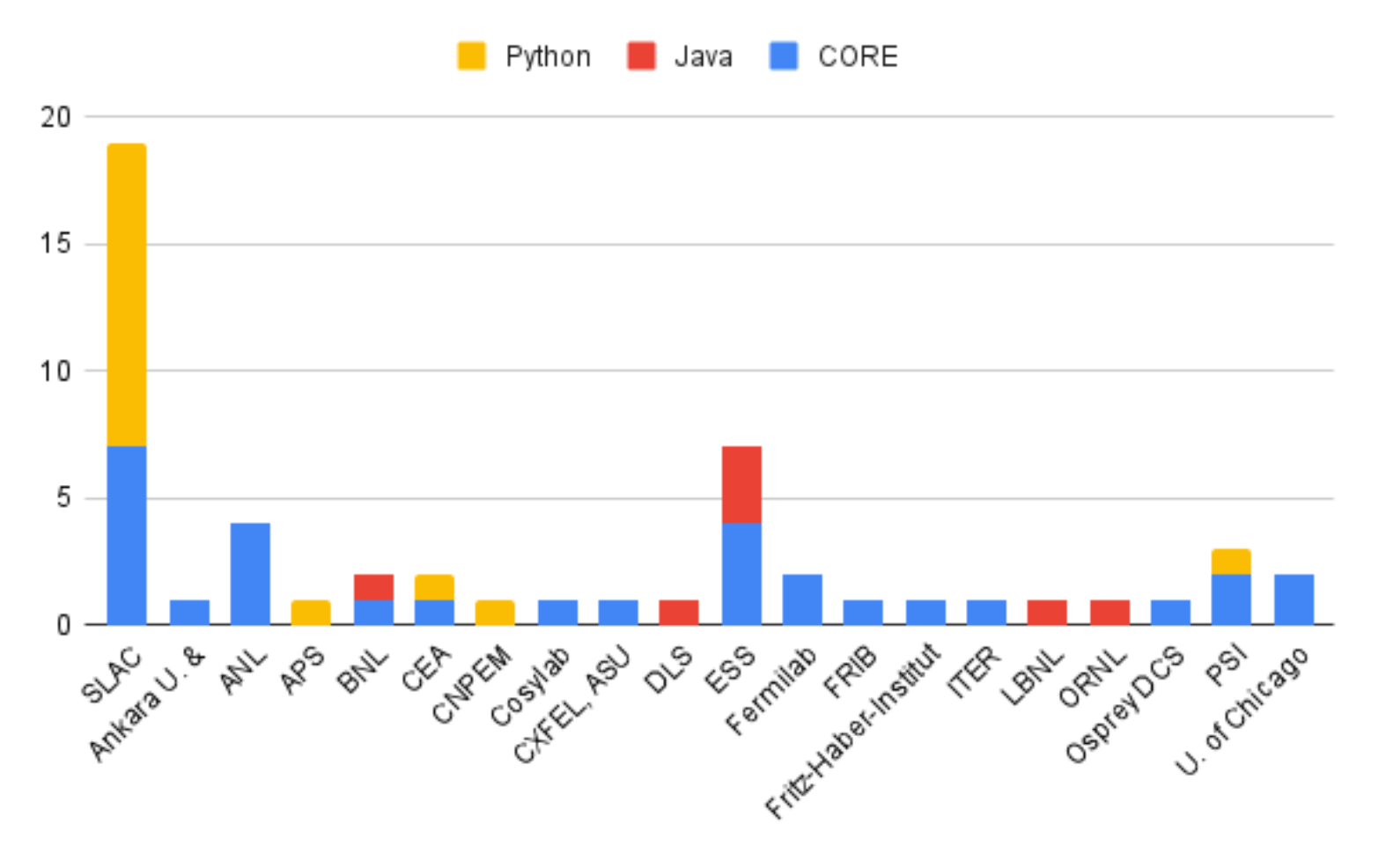

SLAC hosted the May 2022 EPICS Codeathon. There were 3 separate sessions: EPICS core (C/C++), Java tools and extensions, and Python tools and extensions.

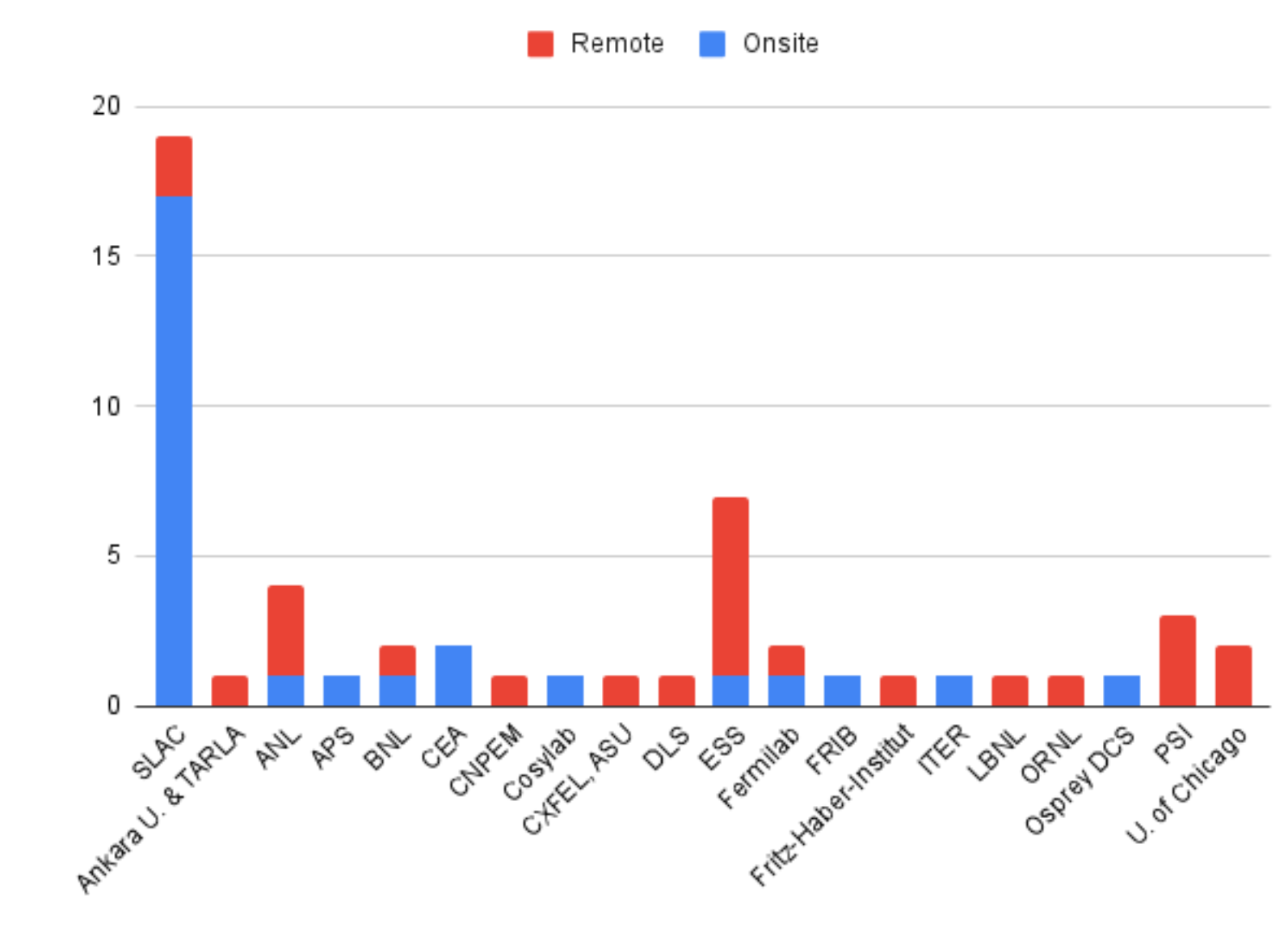

On-site and remote participants for the 2022 EPICS Codeathon (Monday, May 9th 2022)

SLAC saw many on-site participants as well as remote ones from 20 institutions. The following table breaks down participants by session and by on-site versus remote attendance:

| Track | Total | Onsite | Remote |
| --- | --- | --- | --- |
| Core (C/C++) | 30 | 13 | 17 |
| Java | 7 | 2 | 5 |
| Python | 16 | 13 | 3 |
| Totals | 53 | 28 | 25 |

Andrew Johnson hosted the core team, Kunal Shroff hosted the Java team, and ECS controls engineer Ken Lauer hosted the Python session.

The Python session tracked our results on GitHub and ended up fixing and working on an impressive (if I do say so myself) number of things over the course of a few days:

| Project | Contributors | Total Issues | Total PRs | Merged/Resolved |
| --- | --- | --- | --- | --- |
| adl2pydm | 1 | 2 | 2 | 1 |
| happi | 1 | 4 | 4 | 3 |
| ophyd | 4 | 7 | 7 | 6 |
| pmps-ui | 1 | 1 | 1 | 1 |
| pyca | 1 | 1 | 1 | 1 |
| pydm | 9 | 19 | 19 | 14 |
| pythonSoftIoc | 1 | 1 | 0 | 0 |
| timechart | 3 | 10 | 10 | 10 |
| typhos | 1 | 3 | 2 | 3 |
| whatrecord | 1 | 1 | 1 | 1 |
| Total | 15 | 49 | 47 | 40 |

Special thanks to all of the participants, on-site and remote, for helping bring the community together and fix/enhance so many projects.

For information on the other sessions, please see the attached summary slides:

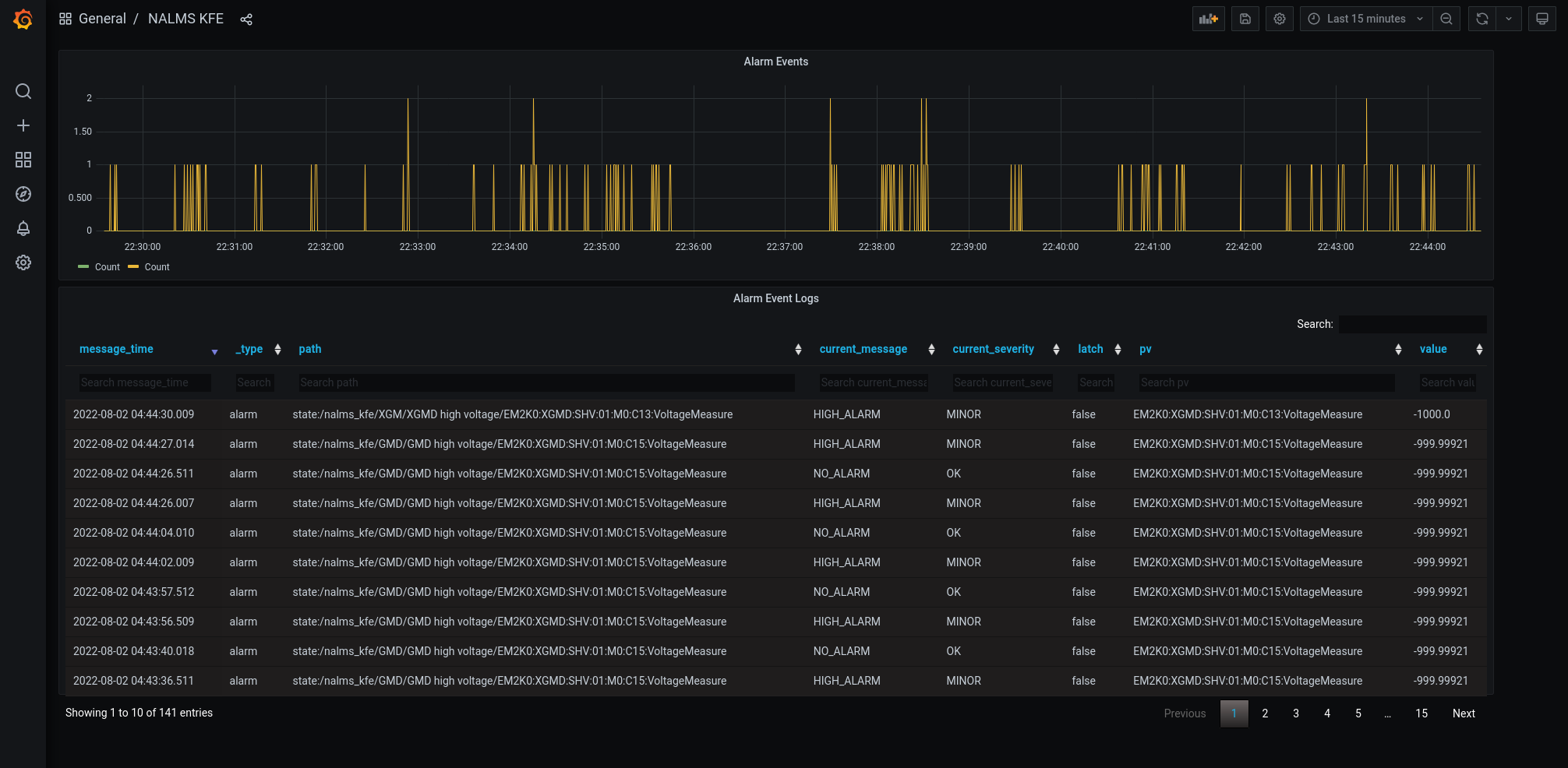

NALMS

The New ALarM System (NALMS) is almost ready for deployment. Following the latest updates to the system, testing is almost ready to start. Thanks to Thorsten, Omar, Jesse, Ken, Michael, and Victor for all the effort.

The GMD and XGMD NALMS will be used for testing purposes. To create a dedicated NALMS for GMD and XGMD, several steps were followed:

- Create a spreadsheet that includes all the PVs involved in the GMD and XGMD instruments.

- Discuss with the scientists the value and boolean thresholds that should trigger alarms.

- Give a hierarchy to the selected PVs.

- Check the PV values saved in EPICS and update the values and severities according to the spreadsheet.

- Create the XML file that will be the core of the NALMS.
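
The last step above, turning the spreadsheet hierarchy into an XML file, could look roughly like the following sketch. The PV names, spreadsheet columns, and subsystem groups are hypothetical, and the element names (`config`, `component`, `pv`, `description`, `enabled`, `latching`) are an assumption loosely modeled on Phoebus-style alarm configuration files; the actual NALMS schema should be checked before reusing any of this.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical spreadsheet export: one row per alarmed PV.
SPREADSHEET = """subsystem,pv,description
vacuum,DEMO:GMD:VAC:PRESS,GMD pressure too high
vacuum,DEMO:XGMD:VAC:PRESS,XGMD pressure too high
power,DEMO:GMD:HV:STATUS,GMD high voltage off
"""


def build_alarm_tree(csv_text: str, config_name: str) -> ET.Element:
    """Group PVs by subsystem and emit one <component> per group."""
    root = ET.Element("config", name=config_name)
    groups = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        group = groups.get(row["subsystem"])
        if group is None:
            group = ET.SubElement(root, "component", name=row["subsystem"])
            groups[row["subsystem"]] = group
        pv = ET.SubElement(group, "pv", name=row["pv"])
        ET.SubElement(pv, "description").text = row["description"]
        ET.SubElement(pv, "enabled").text = "true"
        ET.SubElement(pv, "latching").text = "true"
    return root


tree = build_alarm_tree(SPREADSHEET, "GMD-XGMD")
xml_text = ET.tostring(tree, encoding="unicode")
```

Generating the file from the spreadsheet keeps the spreadsheet as the reviewed source and makes the XML reproducible when PVs are added or regrouped.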

The picture shows the first attempt at the Grafana board for NALMS GMD-XGMD.

In the future, more PVs will be added to the NALMS. They will be grouped by subsystem (e.g., vacuum, power, common components, etc.). To keep possible faults easy to understand and track, it is essential to include in the alarm list only the PVs that are important for operations, so this list should be kept as short as possible. Right now, the most critical PVs are being grouped for each subsystem, in the EBD and in the FEE area, by the DOE summer student Samara Steinfeld. You can follow the progress of the NALMS deployment on the NALMS Confluence page.

Eventually we hope to summarize our control system status into a navigable alarm tree using NALMS.

TMO

- Granite 3 (all components checked) is ready for the DREAM mirror checkout experiment (starting 8/7): IP2 (no chamber)-Granite 3 Controls I

Mirror: Nick Waters; Vacuum: Jing Yin, Tong Ju; Motion: Maarten Thomas-Bosum, Zachary L Lentz; PMPS: Margaret Ghaly, Tong Ju; Image: Tong Ju, Janez Govednik

- PMPS for the whole TMO beamline is complete from the FEE to Granite 3: TMO PMPS System (Margaret Ghaly, Tong Ju)

Scientists will run the DREAM mirror checkout experiment and test all PMPS and veto groups at the same time.

DREAM

For the past couple of months, we have been working on SAT of DREAM components and the integrated DREAM schedule with checkpoints to capture the critical paths.

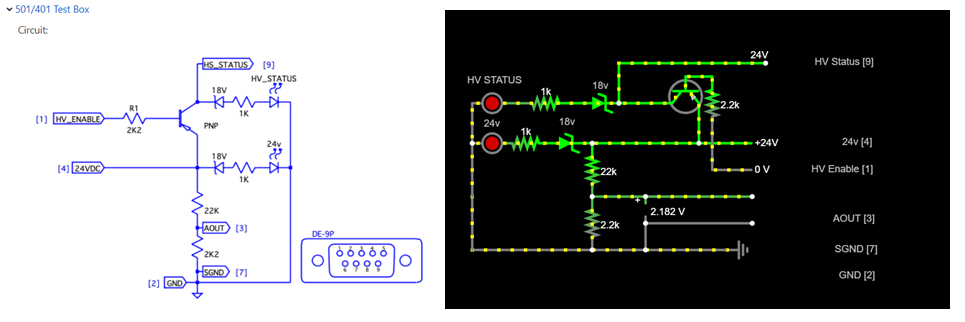

Vacuum

We recently added several new components to the vacuum systems. For the past month we have been working on the designs of test boxes for these new components. The designs have been tested by an online simulator and will be sent out for team review soon (link). These test boxes will facilitate reconfigurations and new installations by simulating expensive devices with cheap hardware.

IM4K4 Motor Resonance Crashing a Turbo

TMO had a strange problem back in April: whenever they would move IM4K4 in or out, it would vibrate the stand violently to the point that it would cause a nearby turbo pump to crash, which was extremely disruptive to endstation operations.

Stepper motors are known for having some problems with resonance and high vibrations. Typically in industry you would pick servo motors if extremely smooth motion was a requirement. This isn't a requirement here, but "do not shake the stand when moving" is absolutely a reasonable ask.

All of the PPM imager/power meter combination units were tuned based on a single instance before it was installed onto the beamline. As such, the parameters were optimized for a lab bench in the temporary clean room in B750 rather than for the beamline as-installed. With the rush to close out the installation of the L2SI components, it looks like this had never been revisited.

In general, we expect every instance of a motor to have slightly different tuning needs based on the precise specifications by which the assembly was installed. This won't always be worked on to completion because often there won't be any problems, and because the most important specification for the majority of our motors is position accuracy and precision of the end position, along with motion reliability, which is not covered by this vibration case.

It was observed that the PPM units have higher vibrations than one would expect in the FEE, RIX, and TMO, culminating in this turbo pump incident. So, we took some time to do the following for every installed/active PPM:

- Make the position correction loop more gentle. The PLC software that governs the in-transit motion was very aggressively conforming the stepper's movement profile to the "optimal" profile, leading to overcorrection and some slight increase in the vibrations. The loops were also tuned for an expected runtime velocity of 65 mm/s, when in practice we were running them at 5 mm/s.

- Pick a runtime speed for the PPM that minimizes vibrations from resonances. It turns out that all of the PPMs have strong resonances with their stands in the 5 mm/s to 8 mm/s speed range. This is why most of the PPMs could perform OK if they were slowed down. What isn't obvious if you aren't aware of the resonances is that you can also make the PPM perform better by speeding it up. Most of the PPMs had a "best-case" (minimum vibration) speed at around 12 mm/s, though some performed best as high as 15 mm/s or as low as 11 mm/s.

After these adjustments, the PPMs are silky smooth, quiet, and reach their destinations reasonably quickly.
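
The speed selection described above amounts to scanning candidate speeds and keeping the one with the least vibration. The sketch below shows that selection only; the vibration numbers are made up for illustration (arbitrary units, shaped to mimic a resonance band around 5 to 8 mm/s) and are not measurements from any PPM.

```python
from typing import Dict


def quietest_speed(scan: Dict[float, float]) -> float:
    """Return the speed (mm/s) with the lowest recorded vibration level."""
    return min(scan, key=scan.get)


# Hypothetical scan: speed in mm/s -> vibration level in arbitrary units.
illustrative_scan = {
    3.0: 0.4,
    5.0: 1.8,
    6.5: 2.5,   # inside the assumed resonance band
    8.0: 1.9,
    10.0: 0.6,
    12.0: 0.2,
    15.0: 0.5,
}

best_speed = quietest_speed(illustrative_scan)
slow_candidates = {s: v for s, v in illustrative_scan.items() if s < 5.0}
best_slow_speed = quietest_speed(slow_candidates)
```

With data of this shape, both slowing down (below the band) and speeding up (above it) reduce vibration, but the faster setting also shortens the move.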

Supported Device List Amendment Process

ECS added a set of steps to our SDL curation page to give insight into how we would like other teams to work with us to add a new device to the list. We also plan to write up a process for how we'll go about changing an existing device's status. Please take a look at this process to learn about how you can get a device added to the list, and let us know what you think!

ECS Supported Devices Curation

ECS+ME SME Teams

ECS and ME are working on arranging subject matter expert (SME) teams to collaborate on system architectures and curation of the supported device lists. They will also work on engineering design templates, e.g., how to specify and select mechatronic actuators, or how to arrange a vacuum system to use already existing and tested interlock logic.

Validated Configuration Database

LCLS has never had a single source of truth for beamline configuration (the arrangement and state of components in the beamline). This has led to more than one incident where equipment was added to the beamline without notification or proper planning, leading to last-minute mitigation. Projects also suffer because an up-to-date as-built of the beamline configuration is mythical. In some cases the beamline GUI is the best and only as-built reference.

ECS and ME (together, you could call us LCLS engineering) have been collaborating since early 2022 to develop requirements for a database system to address this issue. We considered a number of options, including using an existing system from the AD. Our requirements aim for a highly collaborative platform with an excellent API and ease of use as the key to ensuring an accurate as-built record. Essential information to be tracked by this database includes x, y, z, functional component name, and status (planned, installed, commissioned, etc.), with the ability to add fields as desired. We plan to link other modules, such as Happi and the Asset database, to this configuration database to keep everything in sync. Development started in June and is proceeding. Learn more here. We're anticipating initial deployment at the end of August.
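
As a rough picture of the essential information listed above, a single record might be modeled as below. This is only a sketch of the data shape, not the database's actual schema or API: the class, field, and component names are hypothetical, and treating z as the coordinate along the beam is an assumption.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Any, Dict, List


class Status(Enum):
    PLANNED = "planned"
    INSTALLED = "installed"
    COMMISSIONED = "commissioned"


@dataclass
class BeamlineComponent:
    functional_name: str
    x: float
    y: float
    z: float
    status: Status = Status.PLANNED
    # "Ability to add fields as desired": free-form extras per record.
    extra: Dict[str, Any] = field(default_factory=dict)


def as_built(components: List[BeamlineComponent]) -> List[BeamlineComponent]:
    """Return non-planned components ordered by z (assumed beam direction)."""
    present = [c for c in components if c.status is not Status.PLANNED]
    return sorted(present, key=lambda c: c.z)


records = [
    BeamlineComponent("DEMO-IMAGER-02", 0.0, 0.0, 25.0, Status.INSTALLED),
    BeamlineComponent("DEMO-VALVE-01", 0.0, 0.0, 10.0, Status.COMMISSIONED,
                      extra={"happi_name": "demo_valve_01"}),
    BeamlineComponent("DEMO-SLITS-03", 0.0, 0.0, 18.0, Status.PLANNED),
]
installed_names = [c.functional_name for c in as_built(records)]
```

Keeping status on every record is what lets one query answer both "what is in the beamline today" and "what is planned", which is the as-built question the text describes.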

MEC SPL Upgrade

The MEC Short Pulse Laser new platform work in the target chamber, which included significant changes to hutch radiation monitoring and shielding, is a PEMP goal for SLAC. This was successfully demonstrated with the in-house experiment X455 [Lee: Commissioning of compact HAPG spectrometer with higher X-ray photon energy from 8 keV to 24 keV] and will be used for the upcoming X523 [Khaghani: Commissioning of a standard beam-delivery platform for high-intensity laser experiments at MEC].

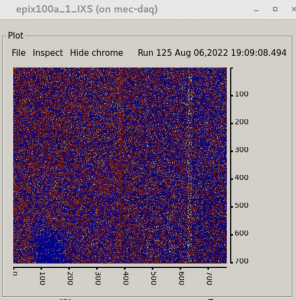

In conjunction with the SPL work MEC is also commissioning two new spectrometers – XRTS and XTCS [X-ray Transmission Crystal Spectrometer]. The Cu K-alpha and K-beta lines are clearly visible in this image from the XTCS on-board camera taken on 6 August 2022.

Vera C. Rubin Observatory Presentation by Peregrine McGehee

Peregrine presented a short introduction to the Vera C. Rubin Observatory at a recent MEC SRD team meeting: PDF of slide deck is attached.

Hello! Goodbye...

Antonio Gilardi has joined the ECS Delivery group as the new MFX and UED Point of Contact.

Mitchell Cabral has joined the ECS Platforms Development team as a Control System Integrator, whose focus is supporting developing projects (L2HE and MEC-U primarily). Mitchell is a recent mechatronics engineering graduate from CSU Chico and an alumnus of the CSUC Engineering Capstone program. His project, sponsored by SLAC for the past year, was to develop a prototype computer vision system robot for Dr. Diling Zhu in XPP. Some of his hobbies and interests include cooking, the Sacramento Kings, volleyball, rock climbing, and (hiking to/swimming in) large bodies of water (with a nice beverage).

Divya Thanasekaran has joined the ECS Delivery group as a Staff Software Engineer and the new CXI Controls and Data Point of Contact. Divya has a master's in Computer Engineering from New York University, and prior to joining SLAC she was the Lead Device Control Software Engineer for the Primary Mirror Control System of the Giant Magellan Telescope. During her time there, she was involved in subsystem-specific resource prioritization and scheduling. She carried the software from design inception through design reviews, testing, and test readiness reviews, as well as initial successful system test campaigns. Her background is in embedded systems, C/C++, and control software using EtherCAT. She loves to hike and run, and is currently learning how to play tennis.

Christian Tsoi-A-Sue has joined the ECS Delivery group as a Staff Engineer 1, and his current focus is providing support for CXI as the SEA. He studied robotics engineering and electrical engineering at UC Santa Cruz and graduated in 2019. His areas of interest are embedded systems/microcontrollers, C, and Python. In his free time he enjoys playing pickleball, watching movies, and playing Pokemon Go with friends.

Josue Zamudio Estrada is a new intern on the LCLS Exp. Control Systems Delivery Team. He studied Computer Engineering and recently graduated from UC Santa Cruz. In his free time he likes to skateboard, fish, and spend time with friends.

Lana Jansen-Whealey is a new intern on the LCLS Exp. Control Systems Delivery Team and is excited to continue learning about hutch instrumentation and software interfacing. She recently graduated from Cal Poly, San Luis Obispo with a physics degree and has been working at SLAC since July 12. She prefers to spend her free time visiting national parks, hiking, doing ballet, cooking, and making new friends!

We said goodbye and farewell to Maarten in July. He will be missed. You can see his Kudoboard here.

GitHub

A huge quantity of our work is done on GitHub.com, where all development is tracked. Jira issues capture a significant body of work as well, but at least as much work is also captured in the closure of GitHub tickets (issues) associated with our various codebases. Unlike with Jira, getting a consolidated metric of work done in a past period is not possible without a paid subscription to GitHub. Roughly speaking, over 80 projects were touched since April 8th, with multiple changes of various sizes.

Jira

If you can't see these Jira plugins, please log into Jira/Confluence. If you can't log into Jira, send mail to apps-admin@slac.stanford.edu and ask to be added to Jira.
