
...

Every detector should have an XpmPauseThresh setting: it was put in to protect a buffer of XPM messages. It gets OR'd together with the data pause threshold, so the two can be difficult to disentangle in general.

A small complication to keep in mind: the TMO HSDs in xpm4 have an extra trigger delay, which means they need a different start_ns. The same delay affects the deadtime signal in the reverse direction. Ideally those HSDs would have a different high-water mark; alternatively, we simplify and set the high-water mark for all HSDs to the values required by xpm4.

Two methods to test dead time behavior of a new detector:

...

- Use this to check the state of the PGP link, and the readout group, size and link mask (instead of kcuStatus): kcuSim -s -d /dev/datadev_0

- Use this to configure the readout group, size and link mask: kcuSimValid -d /dev/datadev_0 -c 1 -C 2,320,0xf

- I think this hangs because it's trying to validate a number of events (specified with the -c argument?)
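The -C argument of kcuSimValid packs three values into one comma-separated field. A minimal sketch of how to read it, assuming (from the description above) the order is readout group, size, link mask, and that each set bit of the mask enables one link. The helper is hypothetical and not part of kcuSim/kcuSimValid; the units of the size field are not stated on this page.

```python
def parse_c_arg(value: str) -> dict:
    """Split a kcuSimValid-style "-C group,size,mask" string (hypothetical helper)."""
    group_s, size_s, mask_s = value.split(",")
    group = int(group_s, 0)   # base 0 accepts plain decimal or 0x-prefixed hex
    size = int(size_s, 0)
    mask = int(mask_s, 0)
    # Assumption: bit N of the link mask enables link N.
    links = [bit for bit in range(mask.bit_length()) if mask >> bit & 1]
    return {"group": group, "size": size, "link_mask": mask, "links": links}


print(parse_c_arg("2,320,0xf"))
# {'group': 2, 'size': 320, 'link_mask': 15, 'links': [0, 1, 2, 3]}
```

So 0xf would enable links 0 to 3 of /dev/datadev_0 under this reading.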


Ansible Management of drps in srcf

Using Ansible, we have created a suite of scripts to manage the DRP nodes.

We have divided the nodes into 5 categories:

- timing high

- timing slow

- HSD

- camera_link

- wave8

Every category is then standardized to have the same configuration, use the same firmware, and use the same drivers.

To retrieve the scripts: gh repo clone slac-lcls/daqnodeconfig

The file structure is:

```
ansible/                              # main Ansible folder
    ansible.cfg                       # local Ansible configuration; without it, Ansible falls back to a system-wide file
    hosts                             # inventory: assigns each DRP node to a category
    HSD/                              # Ansible scripts for HSD nodes only
        HSD_driver_update.yml         # installs the driver using the .service files
        HSD_file_update.yml           # copies all needed files into the proper folders; identical files are not copied
        HSD_pcie_firmware_update.yml  # upgrades the PCIe firmware in HSD-01 for all the HSD cards
        HSD_firmware_update.yml       # upgrades the KCU firmware in the srcf nodes
        nodePcieFpga                  # modified version of updatePcieFpga.py (see below)
    Timing/                           # Ansible scripts for Timing nodes only (content similar to HSD)
    Camera/                           # Ansible scripts for Camera Link nodes only (content similar to HSD)
    Wave8/                            # Ansible scripts for Wave8 nodes only (content similar to HSD)
    README.md                         # README file, as per GitHub convention
    setup_env.sh                      # modified setup_env that loads the Ansible environment
    shared_files/                     # all the files that need to be installed on the nodes
    status/                           # useful clush scripts to investigate node status
        find_hsd.sh                   # clush request to drp-neh-ctl002: datadev_1 info, if its Build String contains the HSD firmware
        md5sum_datadev.sh             # clush request to drp-neh-ctl002: md5sum of datadev
        check_weka_status.sh          # clush request to drp-neh-ctl002: checks that /cds/drpsrcf/ is present (via ls)
```

nodePcieFpga: Ansible uses pgp-pcie-apps to update firmware. This file is a modified version of updatePcieFpga.py that allows the name of the firmware to be passed as a variable, bypassing the firmware selection. It must be installed in pgp-pcie-apps/firmware/submodules/axi-pcie-core/scripts/nodePcieFpga, and a link must be created in pgp-pcie-apps/software/scripts.

hosts

In the hosts file we define which DRP is associated with which category:

```ini
[timing_high]
drp-srcf-cmp038

[wave8_drp]
drp-srcf-cmp040

[camlink_drp]
drp-srcf-cmp041

[hsd_drp]
drp-srcf-cmp005
drp-srcf-cmp017
drp-srcf-cmp018
drp-srcf-cmp019
drp-srcf-cmp020
drp-srcf-cmp021
drp-srcf-cmp022
drp-srcf-cmp023
drp-srcf-cmp024
drp-srcf-cmp042
drp-srcf-cmp046
drp-srcf-cmp048
drp-srcf-cmp049
drp-srcf-cmp050

[tmo_hsd]
daq-tmo-hsd-01
```
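Before running a playbook against a group, it can help to confirm which group a node is in. A minimal sketch using Python's configparser on an abbreviated copy of the inventory above; it only handles the simple "one host per line" form used here (no host variables or ranges), so for anything more complex `ansible-inventory -i hosts --graph` is the authoritative view. The function name is hypothetical.

```python
import configparser

# Abbreviated copy of the inventory above (INI format: one host per line, no values).
INVENTORY = """
[timing_high]
drp-srcf-cmp038

[wave8_drp]
drp-srcf-cmp040

[hsd_drp]
drp-srcf-cmp005
drp-srcf-cmp017

[tmo_hsd]
daq-tmo-hsd-01
"""


def groups_for_host(inventory_text: str, host: str) -> list[str]:
    """Return the inventory groups that list `host` (simplified INI reading)."""
    # allow_no_value lets bare host names parse as keys with a None value.
    parser = configparser.ConfigParser(allow_no_value=True, delimiters=("=",))
    parser.read_string(inventory_text)
    return [group for group in parser.sections() if parser.has_option(group, host)]


print(groups_for_host(INVENTORY, "drp-srcf-cmp017"))  # ['hsd_drp']
```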

HSD(Timing/Camera/Wave8)_file_update.yml

```yaml
---
- hosts: "hsd_drp"       # DO NOT USE "all", otherwise every computer in the network will be used
  become: true           # run as root; use the "-K" option on the command line to supply the sudo password
  gather_facts: false
  strategy: free         # allows running in parallel on several nodes
  ignore_errors: true    # needs to be true, otherwise the playbook stops when an error occurs
  check_mode: true       # failsafe: change to false to actually apply the changes
  vars:
    hsd_files:
      - {src: '../../shared_files/datadev.ko_srcf', dest: '/usr/local/sbin/datadev.ko'}
      - {src: '../../shared_files/kcuSim', dest: '/usr/local/sbin/kcuSim'}
      - {src: '../../shared_files/kcuStatus', dest: '/usr/local/sbin/kcuStatus'}
      - {src: '../../shared_files/kcu_hsd.service', dest: '/usr/lib/systemd/system/kcu.service'}  # timing will have tdetSim.service, others kcu.service
      - {src: '../../shared_files/sysctl.conf', dest: '/etc/sysctl.conf'}
  tasks:
    - name: "copy files"
      ansible.builtin.copy:
        src: "{{ item.src }}"
        dest: "{{ item.dest }}"
        backup: true
        mode: a+x
      with_items: "{{ hsd_files }}"   # loop over all the elements of the list hsd_files
```

To run the playbook, change check_mode to false and run:

```
source setup_env.sh
ansible-playbook HSD/HSD_file_update.yml -K
```

and enter the sudo password when prompted.
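The copy task relies on ansible.builtin.copy only writing a file when its content differs, keeping a backup of the previous file (backup: true) and adding execute bits (mode: a+x). A rough Python sketch of that behaviour, for intuition only: it is not Ansible's implementation, the function name is hypothetical, and the ".bak" suffix is our own choice (Ansible names its backups differently).

```python
import hashlib
import os
import shutil
import stat


def _sha1(path: str) -> str:
    with open(path, "rb") as fh:
        return hashlib.sha1(fh.read()).hexdigest()


def sync_file(src: str, dest: str, backup: bool = True) -> bool:
    """Copy src to dest only if the contents differ; return True if dest changed."""
    if os.path.exists(dest) and _sha1(src) == _sha1(dest):
        return False  # identical file: nothing to copy
    if backup and os.path.exists(dest):
        shutil.copy2(dest, dest + ".bak")  # hypothetical backup naming
    shutil.copyfile(src, dest)
    # Equivalent of mode: a+x -> add execute permission for user, group and other.
    mode = os.stat(dest).st_mode
    os.chmod(dest, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return True
```

Running it twice on the same pair of files returns True and then False, which mirrors why re-running the playbook is safe.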

HSD(Timing/Camera/Wave8)_driver_update.yml

```yaml
---
- hosts: "hsd_drp"       # DO NOT USE "all", otherwise every computer in the network will be used
  become: true           # run as root; use the "-K" option on the command line to supply the sudo password
  gather_facts: false
  strategy: free
  ignore_errors: true    # needs to be true, otherwise the playbook stops when an error occurs
  check_mode: true       # failsafe: change to false to actually apply the changes
  tasks:
    - name: "install driver"
      ansible.builtin.shell: |
        rmmod datadev
        systemctl disable irqbalance.service
        systemctl daemon-reload
        systemctl start kcu.service    # timing will have tdetSim.service
        systemctl enable kcu.service
```

To run the playbook, change check_mode to false and run:

```
source setup_env.sh
ansible-playbook HSD/HSD_driver_update.yml -K
```

and enter the sudo password when prompted.
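The driver playbooks run the same short shell sequence on every node; per the comments above, the only difference between categories is the systemd unit (tdetSim.service for timing, kcu.service otherwise). A hypothetical helper that renders the sequence for an inventory group, e.g. to review it before setting check_mode to false. Treating every group whose name starts with "timing" as a timing node is our assumption.

```python
def driver_install_commands(group: str) -> list[str]:
    """Return the shell steps run by a *_driver_update.yml playbook (sketch)."""
    # Per the playbook comments: timing nodes use tdetSim.service, others kcu.service.
    service = "tdetSim.service" if group.startswith("timing") else "kcu.service"
    return [
        "rmmod datadev",
        "systemctl disable irqbalance.service",
        "systemctl daemon-reload",
        f"systemctl start {service}",
        f"systemctl enable {service}",
    ]


print("\n".join(driver_install_commands("timing_high")))
```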

HSD(Timing/Camera/Wave8)_firmware_update.yml

```yaml
---
- hosts: "hsd_drp"       # one of: timing_high, wave8_drp, camlink_drp, hsd_drp
  become: false          # do not use root
  gather_facts: false
  ignore_errors: true
  check_mode: true       # failsafe: set to false to actually run it
  serial: 1
  vars:
    filepath: "~psrel/git/daqnodeconfig/mcs/drp/"
    firmw: "DrpPgpIlv-0x05000000-20240814110544-weaver-88fa79c_primary.mcs"
    setup_source: "source ~tmoopr/daq/setup_env.sh"
    cd_cameralink: "cd ~psrel/git/pgp-pcie-apps/software/"
  tasks:
    - name: "update firmware"
      shell:
      args:
        cmd: nohup xterm -hold -T {{ inventory_hostname }} -e "bash -c '{{ setup_source }} ; {{ cd_cameralink }} ; python ./scripts/nodePcieFpga.py --dev /dev/datadev_1 --filename {{ filepath }}{{ firmw }} '" </dev/null >/dev/null 2>&1 &
        executable: /bin/bash
```

To run the playbook, change check_mode to false and run (no -K is needed, since this playbook does not use root):

```
source setup_env.sh
ansible-playbook HSD/HSD_firmware_update.yml
```
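Since firmw must be the exact .mcs file name, it is worth double-checking before a run. A sketch that splits the name into fields, assuming the pattern <image>-<version>-<build time>-<builder>-<git hash>_<primary|secondary>.mcs; that pattern is inferred from the single file name above, not taken from any documentation, and image names containing hyphens would not match.

```python
import re
from datetime import datetime

# Assumed pattern: Image-0xVERSION-YYYYmmddHHMMSS-builder-githash_{primary,secondary}.mcs
MCS_RE = re.compile(
    r"^(?P<image>[^-]+)-(?P<version>0x[0-9a-fA-F]+)-(?P<built>\d{14})-"
    r"(?P<builder>[^-]+)-(?P<githash>[0-9a-f]+)_(?P<part>primary|secondary)\.mcs$"
)


def parse_mcs_name(name: str) -> dict:
    """Split a firmware file name into its (assumed) fields."""
    m = MCS_RE.match(name)
    if m is None:
        raise ValueError(f"unexpected firmware file name: {name}")
    info = m.groupdict()
    info["version"] = int(info["version"], 16)
    info["built"] = datetime.strptime(info["built"], "%Y%m%d%H%M%S")
    return info


info = parse_mcs_name("DrpPgpIlv-0x05000000-20240814110544-weaver-88fa79c_primary.mcs")
print(info["image"], hex(info["version"]), info["built"], info["githash"])
```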

configdb Utility

From Chris Ford. See also ConfigDB and DAQ configdb CLI Notes. Supports ls/cat/cp. NOTE: when copying across hutches it's important to specify the user for the destination hutch. For example:

```
configdb cp --user rixopr --password <usual> --write tmo/BEAM/tmo_atmopal_0 rix/BEAM/atmopal_0
```
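The point of the note above is that --user follows the destination hutch, not the source. A small sketch that builds the argument list that way, assuming the "<hutch>opr" naming seen in the examples on this page (tmoopr, rixopr, tstopr); the helper name is hypothetical, and the list could be passed to subprocess.run.

```python
def configdb_cp_command(src: str, dst: str, password: str) -> list[str]:
    """Build a configdb cp argv, taking --user from the destination hutch (sketch)."""
    hutch = dst.split("/", 1)[0]   # e.g. "rix" from "rix/BEAM/atmopal_0"
    user = f"{hutch}opr"           # assumed <hutch>opr naming (tmoopr, rixopr, ...)
    return ["configdb", "cp", "--user", user, "--password", password,
            "--write", src, dst]


print(" ".join(configdb_cp_command("tmo/BEAM/tmo_atmopal_0", "rix/BEAM/atmopal_0", "<usual>")))
```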

Storing (and Updating) Database Records with <det>_config_store.py

Detector records can be defined and then stored in the database using the <det>_config_store.py scripts. For a minimal example, see hrencoder_config_store.py, which has just a couple of additional entries beyond the defaults (which should be stored for every detector).

Once the record has been defined in the script, the script can be run with a few command-line arguments to store it in the database.

The following call should be appropriate for most use cases.

```
python <det>_config_store.py --name <unique_detector_name> [--segm 0] --inst <hutch> --user <usropr> --password <usual> [--prod] --alias BEAM
```

Verify the following:

- User and hutch (--inst): use the values defined in the cnf file for the specific configuration, and pass the user as --user <usr> (e.g. tmoopr, tstopr). This is used for HTTP authentication.

- Password: the standard password used for HTTP authentication.

- Include --prod if using the production database. This must also match the entry in your cnf file, defined as cdb; https://pswww.slac.stanford.edu/ws-auth/configdb/ws is the production database.

- Do not include the segment number in the detector name. If you are updating an entry for a segment other than 0, pass --segm $SEGM in addition to --name $DETNAME.

Running python <det>_config_store.py --help displays all available arguments.
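The segment rule above is easy to get wrong when starting from a DAQ name such as tmohsd_0. A sketch that builds the store-script arguments from such a name, assuming a trailing "_<N>" is the segment (our assumption, based on names like tmo_atmopal_0 on this page); the argument names are the ones in the call above, and the helper itself is hypothetical.

```python
import re


def config_store_args(det: str, hutch: str, user: str, password: str,
                      prod: bool = False, alias: str = "BEAM") -> list[str]:
    """Build <det>_config_store.py arguments, splitting a trailing _<segment> off det."""
    m = re.fullmatch(r"(?P<name>.+?)_(?P<segm>\d+)", det)
    name, segm = (m["name"], int(m["segm"])) if m else (det, 0)
    args = ["--name", name, "--segm", str(segm), "--inst", hutch,
            "--user", user, "--password", password, "--alias", alias]
    if prod:
        args.append("--prod")  # must match the cdb entry in your cnf file
    return args


print(config_store_args("hsd_3", "tmo", "tmoopr", "<usual>", prod=True))
```

The resulting list would follow ["python", "hsd_config_store.py"] when launched with subprocess.run.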

There are similar scripts that can be used to update entries. E.g.:

hsd_config_update.py

Making Schema Updates in configdb

That is, changing the structure of an object while keeping the existing values the same. An example from Ric: "Look at the __main__ part of epixm320_config_store.py. Then run it with --update. You don’t need the bit regarding args.dir. That’s for uploading .yml files into the configdb."

Here's an example from Matt:

```
python /cds/home/opr/rixopr/git/lcls2_041224/psdaq/psdaq/configdb/hsd_config_update.py --prod --inst tmo --alias BEAM --name hsd --segm 0 --user tmoopr --password XXXX
```
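Conceptually, a schema update carries the old values into the new structure. A minimal sketch of that idea on nested dicts: keys present in both keep the old value, new keys take the template default, and keys removed from the schema are dropped. This is an illustration only, not how the configdb --update path is implemented; the function name is hypothetical.

```python
def migrate(old: dict, new_template: dict) -> dict:
    """Carry existing values into a new schema (illustrative sketch)."""
    result = {}
    for key, default in new_template.items():
        if key not in old:
            result[key] = default                     # new field: use the default
        elif isinstance(default, dict) and isinstance(old[key], dict):
            result[key] = migrate(old[key], default)  # recurse into sub-objects
        else:
            result[key] = old[key]                    # existing field: keep old value
    return result                                     # fields not in the template are dropped


print(migrate({"a": 1, "b": {"c": 2, "old": 5}}, {"a": 0, "b": {"c": 0, "d": 7}}))
# {'a': 1, 'b': {'c': 2, 'd': 7}}
```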

Configdb GUI for multi detector modification

Running configdb_GUI in a terminal, after sourcing setup_env, opens a GUI:

1. Radio buttons select between the Prod and Dev databases. Do not change the database while the progress bar is still advancing.

2. The progress bar shows that data is being loaded (this is needed to populate item 4). At startup, wait for the progress bar to reach 100.

3. After the progress bar reaches 100, the first column fills up with the devices present in the database.

4. The dropdown selects a specific detector type to show in the second column.

5. Clicking on a device shows the detectors associated with it in the second column; multiple detectors can be selected.

6. The third column shows the data for the first selected detector.

7. Selecting a variable in the third column shows its value for every selected detector in the fourth column.

8. When a value is selected in the third column, the Modify button becomes available. In the text field, enter the new value to apply to the selected variable in every selected detector.

9. Entering "delta:30" adds 30 to the variable in all the selected detectors; use a negative number to subtract.

10. The value is cast to the type of the variable in the database. If a value of a different type is provided (e.g. a string instead of a number), the field is not updated.
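Our reading of the Modify rules above, as a small Python sketch: a plain value is cast to the stored type, "delta:N" adds N, and a value that cannot be cast leaves the field untouched. This is not the configdb_GUI source; the function name is hypothetical, and refusing a delta on non-numeric fields is our assumption.

```python
def apply_modify(current, text: str):
    """Return the new value for one detector, or None if the field would not be updated."""
    target_type = type(current)
    try:
        if text.startswith("delta:"):
            if not isinstance(current, (int, float)):
                return None  # assumption: a delta only makes sense for numeric variables
            # "delta:30" adds 30, "delta:-30" subtracts 30.
            return current + target_type(text[len("delta:"):])
        return target_type(text)  # plain value: cast to the stored type
    except (TypeError, ValueError):
        return None  # wrong type (e.g. a string for a numeric field): not updated


print(apply_modify(10, "delta:30"), apply_modify(10, "abc"))  # 40 None
```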

MCC Epics Archiver Access

Matt gave us a video tutorial on how to access the MCC epics archiver.


TEB/MEB

(conversation with Ric on 06/16/20 on TEB grafana page)

BypCt: number bypassing the TEB

BtWtg: boolean saying whether we're waiting to allocate a batch

TxPdg (MEB, TEB, DRP): boolean; libfabric is saying "try again" for a send to the designated destination (MEB, TEB, DRP).

RxPdg (MEB, TEB, DRP): same as above but for Rx.

(T(eb)M(eb))CtbOInFlt: incremented on a send, decremented on a receive (hence "in flight")

In the tables at the bottom: ToEvtCnt is the number of events timed out by the TEB.

WrtCnt MonCnt PsclCnt: the trigger decisions

TOEvtCnt TIEvtCnt: O is outbound from drp to teb, I is inbound from teb to drp

Look in the TEB log file for timeout messages. To get the contributor ID, look for messages like this in the DRP log:

/reg/neh/home/cpo/2020/06/16_18:19:24_drp-tst-dev010:tmohsd_0.log:Parameters of Contributor ID 8:
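Pulling the contributor ID out of such lines (e.g. from grep output like the one above) can be scripted. A minimal sketch that matches the message text shown above; the function name is hypothetical.

```python
import re

CONTRIB_RE = re.compile(r"Parameters of Contributor ID (\d+)")


def contributor_ids(lines):
    """Yield (line, contributor id) for each 'Parameters of Contributor ID N' message."""
    for line in lines:
        m = CONTRIB_RE.search(line)
        if m:
            yield line, int(m.group(1))


line = ("/reg/neh/home/cpo/2020/06/16_18:19:24_drp-tst-dev010:tmohsd_0.log:"
        "Parameters of Contributor ID 8:")
print([cid for _, cid in contributor_ids([line])])  # [8]
```

With a real log, pass the open file object, e.g. contributor_ids(open(path)).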

Conversation from Ric and Valerio on the opal file-writing problem (11/30/2020):

I was poking around with daqPipes just to familiarize myself with it and I was looking at the crash this morning at around 8.30. I noticed that at 8.25.00 the opal queue is at 100% and teb0 is starting to give bad signs (again at ID0, from the bit mask). However, if I make steps of 1 second, I see that it seems to recover, with the queue occupancy dropping to 98, 73 then 0. However, a few seconds later the drp batch pool for all the hsd lists are blocked. I would like to ask you (answer when you have time, it is just for me to understand): is this the usual Opal problem that we see? Why does it seem to recover before the batch pool blocks? I see that the first batch pool to be exhausted is the opal one. Is this somehow related?

- I’ve still been trying to understand that one myself, but keep getting interrupted to work on something else, so here is my perhaps half baked thought: Whatever the issue is that blocks the Opal from writing, eventually goes away and so it can drain. The problem is that that is so late that the TEB has started timing out a (many?) partially built event(s). Events for which there is no contributor don’t produce a result for the missing contributor, so if that contributor (sorry, DRP) tried to produce a contribution, it never gets an answer, which is needed to release the input batch and PGP DMA buffer. Then when the system unblocks, a SlowUpdate (perhaps, could be an L1A, too, I think) comes along with a timestamp so far in the future that it wraps around the batch pool, a ring buffer. This blocks because there might already be an older contribution there that is waiting to be released. It scrambles my brain to think about, so apologies if it isn’t clear. I’m trying to think of a more robust way to do it, but haven’t gotten very far yet.

- One possibility might be for the contributor/DRP to time out the input buffer in EbReceiver, so that if a result matching that input never arrives, the input buffer and PGP buffer are released. This could produce some really complicated failure modes that are hard to debug, because the system wouldn’t stop. Chris discouraged me from going down that path for fear of making things more complicated, rightly so, I think.
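To make the wrap-around argument in the first reply concrete, here is a toy model (not the DRP code) of a batch pool used as a ring buffer: a contribution's slot is its index modulo the pool size. The class name and integer indices are hypothetical; the real pool is indexed from the event timestamp, and the real DRP blocks where this sketch just returns False.

```python
class BatchPool:
    """Toy ring buffer of batch slots indexed by a monotonically increasing index."""

    def __init__(self, n_slots: int):
        self.slots = [None] * n_slots  # each slot holds the index that owns it, or None

    def try_allocate(self, index: int) -> bool:
        slot = index % len(self.slots)
        owner = self.slots[slot]
        if owner is not None and owner != index:
            return False  # an older, unreleased contribution still occupies the slot
        self.slots[slot] = index
        return True

    def release(self, index: int) -> None:
        """Called when the Result for `index` arrives."""
        slot = index % len(self.slots)
        if self.slots[slot] == index:
            self.slots[slot] = None
```

With 4 slots, if the Result for index 1 never arrives, a later event with index 5 wraps onto slot 1 and cannot be allocated: the "blocks" case described above.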

If a contribution is missing, the *EBs time it out (4 seconds, IIRC), then mark the event with DroppedContribution damage. The Result dgram (TEB only, and it contains the trigger decision) receives this damage and is sent to all contributors that the TEB heard from for that event. Sending it to contributors it didn’t hear from might cause problems because they might have crashed. Thus, if there’s damage raised by the TEB, it appears in all contributions that the DRPs write to disk and send to the monitoring. This is the way you can tell in the TEB Performance grafana plots whether the DRP or the TEB is raising the damage.

Ok thank you. But when you say: "If that contributor (sorry, DRP) tried to produce a contribution, it never gets an answer, which is needed to release the input batch and PGP DMA buffer". I guess you mean that the DRP tries to collect a contribution for a contributor that maybe is not there. But why would the DRP try to do that? It should know about the damage from the TEB's trigger decision, right? (Do not feel compelled to answer immediately, when you have time)


The TEB continues to receive contributions even when there’s an incomplete event in its buffers. Thus, if an event or series of events is incomplete, but a subsequent event does show up as complete, all those earlier incomplete events are marked with DroppedContribution and flushed, with the assumption they will never complete. This happens before the timeout time expires. If the missing contributions then show up anyway (the assumption was wrong), they’re out of time order, and thus dropped on the floor (rather than starting a new event which will have the originally found contributors in it missing (Aaah, my fingers have formed knots!), causing a split event (don’t know if that’s universal terminology)). A split event is often indicative of the timeout being too short.
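The flushing rule in this reply can be modeled in a few lines. A toy sketch only: the class, the status strings and the integer timestamps are hypothetical, timeouts are not modeled, and the real TEB works on batches over libfabric.

```python
class ToyTeb:
    """Toy model of the TEB flushing rule described above (not the real TEB)."""

    def __init__(self, contributors):
        self.contributors = frozenset(contributors)
        self.pending = {}        # timestamp -> set of contributors seen so far
        self.last_flushed = -1   # newest timestamp already resolved

    def add(self, ts, contributor):
        """Add one contribution; return a list of (timestamp, status) results."""
        if ts <= self.last_flushed:
            return [(ts, "late: dropped")]  # out of time order, dropped on the floor
        seen = self.pending.setdefault(ts, set())
        seen.add(contributor)
        if seen != self.contributors:
            return []  # still incomplete: keep waiting
        results = []
        # A complete event flushes every older incomplete event as damaged.
        for older in sorted(t for t in self.pending if t < ts):
            results.append((older, "DroppedContribution"))
            del self.pending[older]
        del self.pending[ts]
        results.append((ts, "complete"))
        self.last_flushed = ts
        return results
```

With contributors A and B: adding (1, A), (2, A), then (2, B) flushes event 1 as DroppedContribution and completes event 2; a late (1, B) is then dropped.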

- The problem is that the DRP’s Result thread, what we call EbReceiver, if that thread gets stuck, like in the Opal case, for long enough, it will backpressure into the TEB so that it hangs in trying to send some Result to the DRPs. (I think the half-bakedness of my idea is starting to show…) Meanwhile, the DRPs have continued to produce Input Dgrams and sent them to the TEB, until they ran out of batch buffer pool. That then backs up in the KCU to the point that the Deadtime signal is asserted. Given the different contribution sizes, some DRPs send more Inputs than others, I think. After long enough, the TEB’s timeout timer goes off, but because it’s paralyzed by being stuck in the Result send(), nothing happens (the TEB is single threaded) and the system comes to a halt. At long last, the Opal is released, which allows the TEB to complete sending its ancient Result, but then continues on to deal with all those timed out events. All those timed out events actually had Inputs which now start to flow, but because the Results for those events have already been sent to the contributors that produced their Inputs in time, the DRPs that produced their Inputs late never get to release their Input buffers.

...

```
bos delete --deactivate 1.1.7-5.1.2
bos delete --deactivate 1.3.6-5.4.8
bos add --activate 1.3.6 5.1.2
bos add --activate 1.1.7 5.4.8
```

XPM

Link

...

Quality

(from Julian) Looking at the eye diagram or bathtub curves gives a really good picture of the quality of the high-speed links on the Rx side. The pyxpm_eyediagram.py tool can generate these plots for the SFPs while the XPM is receiving real data.

...

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b53c b5f7 0080 0001 7d5e 73f3

8d82 0000 ff7c 18fc d224 40af 0040 0c93 0000 0000 0000 0000 0000 0000 0000 0000

4000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0080 0000 b51c 1080 004c e200 f038 1d9c 0010 8000

0000 0000 d72b b055 0017 8000 0000 0000 8000 0000 0000 8804 0002 0000 8000 0000

0000 8000 0000 0000 b51c b5fd 78ec 7689 b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b53c b5f7 0080 0001 7d5f 73f3 8d82 0000 03b1 18fd d224 40af 0060 0c9b

0000 0000 0000 0000 0000 0000 0000 0000 4000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0080 0000

b51c 1080 0051 e300 f038 1d9c 0010 8000 0000 0000 d72b b055 0017 8000 0000 0000

8000 0000 0000 8804 0002 0000 8000 0000 0000 8000 0000 0000 b51c b5fd e3eb 5435

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b53c b5f7 0080 0001 7d60 73f3

8d82 0000 07e6 18fd d224 40af 0040 0ca3 0000 0000 0000 0000 0000 0000 0000 0000

4000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0080 0000 b51c 1080 0064 e400 f038 1d9c 0010 8000

0000 0000 d72b b055 0017 8000 0000 0000 8000 0000 0000 8804 0002 0000 8000 0000

0000 8000 0000 0000 b51c b5fd 6817 792a b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b53c b5f7 0080 0001 7d61 73f3 8d82 0000 0c1b 18fd d224 40af 0040 0cab

0000 0000 0000 0000 0000 0000 0000 0000 4000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0080 0000

b51c 1080 0001 e500 f038 1d9c 0010 8000 0000 0000 d72b b055 0017 8000 0000 0000

8000 0000 0000 8804 0002 0000 8000 0000 0000 8000 0000 0000 b51c b5fd 698f 664c

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b53c b5f7 0080 0000

0001 7d62 73f3

8d82 0000 1050 18fd d224 40af 0040 0cb3 0000 0000 0000 0000 0000 0000 0000 0000

4000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0080 0000 b51c 1080 0001 e500 e600 f038 1d9c 0010 8000 8000

0000 0000 d72b b055 0017 8000 0000 0000 0000

8000 0000 0000 8804 0002 0000 8000 0000

0000 0000 8000 0000 0000 b51c b5fd 698f 664c

b5bc b5bc b5bc b5bc b5bc b5bc fb1f 31be b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b53c b5f7 0080 0001 7d62 73f3

8d82 0000 1050 18fd d224 40af 0040 0cb3 0000 0000 0000 0000 0000 0000 0000 0000

4000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000 0000

0000 0000 0000 0000 0000 0000 0080 0000 b51c 1080 0001 e600 f038 1d9c 0010 8000

0000 0000 d72b b055 0017 8000 0000 0000 8000 0000 0000 8804 0002 0000 8000 0000

0000 8000 0000 0000 b51c b5fd fb1f 31be b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc b5bc

Trigger Delays

See also Matt's timing diagram on this page: Bypass Events

- The total delay of a trigger consists of three pieces: CuDelay, L0Delay (per XPM and per readout group), and detector delays. Note that L0Delay is also called "PartitionDelay" in the Rogue registers. The L0Delay PV is DAQ:NEH:XPM:0:PART:0:L0Delay (the second zero is the readout group). Note: L0Delay only matters for the master XPM.

- Matt writes about CuDelay: The units are 185.7 (1300/7) MHz ticks. You only need to put the PV or change it in xpmpva GUI. It will update the configdb after 5 seconds. You may notice there is also a CuDelay_ns PV (read only) to show the value in nanoseconds. Best not to change CuDelay while running: we have noticed it can cause some significant issues in the past.

- Matt says the units of L0Delay are 14/13 us, because the max beam rate is 13/14 MHz.

- L0Delay has a lower limit of 0 and an upper limit around 100.

- CuDelay is set in the supervisor XPM and affects all client XPMs (e.g. TMO, RIX). Matt tries to not adjust this since it is global.

- In the various detector-specific configdb/*config.py files, the current value of L0Delay for the appropriate readout group is included in the "delay_ns" calculation. So if, for example, L0Delay is adjusted, the "delay_ns" set in the configuration database remains constant.

- Moving timing earlier can be harder, but can be done by reducing group L0Delay.

- In general, we don't want to reduce L0Delay too much, because that means the "trigger decision" (send L1accept or not, depending on "full") must be made earlier, which increases the buffering requirements. This doesn't matter at 120 Hz, but it matters for MHz running. The only consequence of a lower L0Delay is higher deadtime.

- Note: if the total trigger delay increases (start_ns) then also need to tweak deadtime "high water mark" settings ("pause threshold"). How do we know if the pause threshold is right: two counters ("trigToFull and "notFullToTrig" for each of the two directions) to try to measure round-trip time, which should allow one to calculate the pause-threshold setting.

- Detectors with minimal buffering (or run at a high rate) need a high L0Delay setting (depends on ratio of buffering-to-rate)

Ric asks: Does XPM:0 govern L0Delay or does XPM:2 for TMO and XPM:3 for RIX do it for each, individually? Matt replies: Whichever is the master of the readout group determines the L0Delay. The other XPMs don't play a part in L0Delay. Only fast detectors (hsd's, wave8's, timing, should have large L0Delay)

- We should try to have slower detectors have a smaller L0Delay (wasn't possible in the past because of a firmware bug that has been fixed)

- the per-readout-group L0Delay settings (and CuDelay) are stored under a special tmo/XPM alias in the configdb and are read when the pyxpm processes are restarted:

Trigger Delays

See also Matt's timing diagram on this page: Bypass Events

- The total delay of a trigger consists of three pieces: CuDelay, L0Delay (per XPM and per readout group), and detector delays. L0Delay is also called "PartitionDelay" in the rogue registers. The L0Delay PV is DAQ:NEH:XPM:0:PART:0:L0Delay (the second zero is the readout group). Note: L0Delay only matters for the master XPM.

- Matt writes about CuDelay: The units are 185.7 (1300/7) MHz ticks. You only need to put the PV or change it in the xpmpva GUI; it will update the configdb after 5 seconds. There is also a read-only CuDelay_ns PV that shows the value in nanoseconds. Best not to change CuDelay while running: it has caused significant issues in the past.

- Matt says the units of L0Delay are 14/13 us, because the max beam rate is 13/14 MHz.

- L0Delay has a lower limit of 0 and an upper limit around 100.

- With Matt's new technique of generating the triggers for the common readout group as the logical OR of all other readout groups, the L0Delay setting for that common readout group is also managed automatically. Matt writes about the PV that holds the value: "DAQ:NEH:XPM:2:CommonL0Delay, for instance, and its setting is handled by control.py"

- CuDelay is set in the supervisor XPM and affects all client XPMs (e.g. TMO, RIX). Matt tries not to adjust this since it is global.

- In the various detector-specific configdb/*config.py scripts, the current value of L0Delay for the appropriate readout group is included in the "delay_ns" calculation. So if, for example, L0Delay is adjusted, the "delay_ns" set in the configuration database remains constant.

- Moving timing earlier can be harder, but can be done by reducing the group L0Delay.

- In general, we don't want to reduce L0Delay too much, because then the "trigger decision" (send L1Accept or not, depending on "full") must be made earlier, which increases the buffering requirements. This doesn't matter at 120 Hz, but it matters for MHz running. The only consequence of a lower L0Delay is higher deadtime.

- Note: if the total trigger delay (start_ns) increases, the deadtime "high water mark" settings ("pause threshold") also need to be tweaked. How do we know if the pause threshold is right? Two counters ("trigToFull" and "notFullToTrig", for each of the two directions) try to measure the round-trip time, which should allow one to calculate the pause-threshold setting.

- Detectors with minimal buffering (or running at a high rate) need a high L0Delay setting (it depends on the ratio of buffering to rate).
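The unit conversions above can be sketched in Python. This is a minimal illustration, not code from pyxpm or the configdb scripts: the function names are hypothetical, the tick periods come from Matt's notes (1300/7 MHz for CuDelay, 14/13 us for L0Delay), and the range check uses the approximate 0-100 limits quoted above. register_delay_ns() assumes the detector-side delay is simply delay_ns minus the group's L0Delay, which is one way to read the statement that delay_ns stays constant when L0Delay changes.

```python
"""Sketch: convert XPM delay settings to nanoseconds (hypothetical helpers)."""

CU_TICK_NS = 1e3 / (1300.0 / 7.0)  # CuDelay tick: 1/(185.7 MHz), about 5.385 ns
L0_TICK_NS = 1e3 * 14.0 / 13.0     # L0Delay tick: 14/13 us, about 1076.9 ns
L0_MIN, L0_MAX = 0, 100            # approximate L0Delay limits from the notes


def cu_delay_ns(ticks: int) -> float:
    """Convert a CuDelay setting (global, supervisor XPM) to ns."""
    return ticks * CU_TICK_NS


def l0_delay_ns(ticks: int) -> float:
    """Convert a per-readout-group L0Delay setting to ns."""
    if not L0_MIN <= ticks <= L0_MAX:
        raise ValueError(f"L0Delay {ticks} is outside ~[{L0_MIN}, {L0_MAX}]")
    return ticks * L0_TICK_NS


def register_delay_ns(delay_ns: float, l0_ticks: int) -> float:
    """Assumed model: detector-side delay = delay_ns - group L0Delay.

    delay_ns stays fixed while L0Delay changes; only the remainder moves.
    Whether CuDelay is also subtracted is not stated in the notes.
    """
    remainder = delay_ns - l0_delay_ns(l0_ticks)
    if remainder < 0:
        raise ValueError("delay_ns is shorter than the group's L0Delay")
    return remainder


if __name__ == "__main__":
    print(f"1 CuDelay tick = {cu_delay_ns(1):.3f} ns")
    print(f"L0Delay 80     = {l0_delay_ns(80):.1f} ns")
    # Lowering L0Delay from 90 to 80 leaves about 10.8 us more for the detector side.
    print(register_delay_ns(120_000, 80) - register_delay_ns(120_000, 90))
```

The design choice here is to keep delay_ns as the fixed quantity and derive the remainder, mirroring the behavior described for the configdb scripts; if CuDelay turns out to be folded in as well, it would be one more subtraction.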

Ric asks: Does XPM:0 govern L0Delay, or do XPM:2 for TMO and XPM:3 for RIX each do it individually? Matt replies: Whichever XPM is the master of the readout group determines the L0Delay. The other XPMs don't play a part in L0Delay. Only fast detectors (hsd's, wave8's, timing) should have a large L0Delay.

- We should try to give slower detectors a smaller L0Delay (this wasn't possible in the past because of a firmware bug that has since been fixed).

- The per-readout-group L0Delay settings (and CuDelay) are stored under a special tmo/XPM alias in the configdb and are read when the pyxpm processes are restarted:

| Code Block |

|---|

(ps-4.3.2) psbuild-rhel7-01:lcls2$ configdb ls tmo/XPM

DAQ:NEH:XPM:0

DAQ:NEH:XPM:2

DAQ:NEH:XPM:3

|

Accessing Switch in ATCA Crate

One issue that may arise is the switch being unable to change speed when a 1 Gb/s transceiver is plugged in (10 Gb/s vs 1 Gb/s link). In that case the port speed can be toggled manually by SSHing to the switch. There is further documentation on this available here.

The switch may also need to be accessed to investigate other problems with port setup or link issues.

Briefly (the default account and password are both root; the password may have been changed to the standard password used for, e.g., shelf managers):

| Code Block |

|---|

# Determine the switch name first. Example below is for the FEH Switch for MFX prototype LCLS2 DAQ.

ssh root@swh-feh-daq01

root@swh-feh-daq01's password:

sh: /usr/bin/xauth: No such file or directory

[root@swh-feh-daq01 ~]$ axel_linkstat # Check link status

(FABRIC 1 ) Port 6: LINK

# ....

(SFP+ 0 ) Port 20: NO LINK

# ....

(SWTOSW ) Port 26: LINK

(MANAGE ) Port 27: LINK

[root@swh-feh-daq01 ~]$ axel_sfp_port 20 1G # Change port speed: axel_sfp_port <port> <1G|1g|10G|10g|AUTO|auto> [<repeat|refresh>]

[root@swh-feh-daq01 ~]$ axel_l1stat 'all' # Physical port status

(FABRIC 1 ) Port 6: LINK/ *ALIGN/ *SYNC3/ *SYNC2/ *SYNC1/ *SYNC0

# ....

(SFP+ 0 ) Port 20: NO LINK/ NO SYNC(LCLFAULT)

# ....

(SWTOSW ) Port 26: LINK

(MANAGE ) Port 27: LINK |
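When many ports are listed, a small parser can pick out the ones without link. The sketch below is hypothetical (not an existing tool) and assumes the line format shown in the session above, "(<type>) Port <n>: <status>"; for axel_l1stat output it keeps only the first "/"-separated field.

```python
from __future__ import annotations

import re

# "(SFP+ 0 ) Port 20: NO LINK" -> kind="SFP+ 0", port=20, status="NO LINK"
LINE_RE = re.compile(r"\((?P<kind>[^)]*)\)\s*Port\s+(?P<port>\d+):\s*(?P<status>.+)")


def parse_linkstat(text: str) -> dict[int, tuple[str, bool]]:
    """Map port number -> (port type, link up?) from axel_linkstat/axel_l1stat output."""
    ports: dict[int, tuple[str, bool]] = {}
    for line in text.splitlines():
        m = LINE_RE.match(line.strip())
        if m is None:
            continue  # prompts, comments and "# ...." placeholders
        # axel_l1stat appends "/ *ALIGN/ ..." details; keep only the first field.
        words = m.group("status").split("/")[0].split()
        up = bool(words) and words[0] == "LINK"
        ports[int(m.group("port"))] = (m.group("kind").strip(), up)
    return ports


def down_ports(text: str) -> list[int]:
    """Sorted list of ports that do not report LINK."""
    return sorted(p for p, (_, up) in parse_linkstat(text).items() if not up)
```

Pasting the axel_linkstat output above into down_ports() should single out port 20, the SFP+ port that then gets its speed changed with axel_sfp_port.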


Shelf Managers

To see the names of the shelf managers use this command:

...

On Nov. 16, 2022: the "rix" and "tmo" ones are in the hutches, the "fee" one is in the FEE alcove (test stand), and the two "neh" ones are in room 208 of bldg 950. daq01 is for the master crate with the XPMs, and daq02 is the secondary crate housing the fanout module(s). shm-feh-daq01 is the first one for the far hall, initially installed for the MFX LCLS-II DAQ transition.

| name | IP | crate_ID |

|---|---|---|

| shm-tst-hp01 | 172.21.148.89 | no connection |

| shm-tmo-daq01 | 172.21.132.74 | L2SI_0003 |

| shm-neh-daq01 | 172.21.156.26 | 0001 |

| shm-rix-daq01 | 172.21.140.40 | L2SI_0002 |

| shm-daq-drp01 | 172.21.88.94 | no connection |

| shm-las-vme-testcrate | 172.21.160.133 | no connection |

| shm-daq-asc01 | 172.21.58.46 | L2SI_0000 |

| shm-hpl-atca01 | 172.21.64.109 | cpu-b34-bp01_000 |

| shm-las-ftl-sp01 | 172.21.160.60 | needs password to connect |

| shm-las-lhn-sp01 | 172.21.160.117 | needs password to connect |

| shm-neh-daq02 | 172.21.156.127 | L2SI_0002 (should be L2SI_0004) |

| shm-fee-daq01 | 172.21.156.129 | L2SI_0005 |

| shm-feh-daq01 | 172.21.88.235 | L2SI_0006 |

Resetting Boards

...

Larry thinks these values are in the raw units read out from the device (mW). To convert to dBm, use 10*log10(val/1mW); for example, 0.6 mW corresponds to -2.2 dBm. The same information is now displayed in xpmpva in the "SFPs" tab.

| Code Block |

|---|

(ps-4.1.2) tmo-daq:scripts> pvget DAQ:NEH:XPM:0:SFPSTATUS

DAQ:NEH:XPM:0:SFPSTATUS 2021-01-13 14:36:15.450

LossOfSignal ModuleAbsent TxPower RxPower

0 0 6.5535 6.5535

1 0 0.5701 0.0001

0 0 0.5883 0.7572

0 0 0.5746 0.5679

0 0 0.8134 0.738

0 0 0.6844 0.88

0 0 0.5942 0.4925

0 0 0.5218 0.7779

1 0 0.608 0.0001

0 0 0.5419 0.3033

1 0 0.6652 0.0001

0 0 0.5177 0.8751

1 1 0 0

0 0 0.7723 0.201 |
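The conversion can be applied to the whole pvget table with a few lines of Python. This is a sketch: it assumes the rows appear in SFP order and that the powers are in mW, as Larry believes. Two values look like register limits rather than real readings (our interpretation, not confirmed): 6.5535 mW is 65535 × 0.1 µW, the largest value a 16-bit SFF-8472 power field can hold, and 0.0001 mW is a single 0.1 µW step (-40 dBm), which matches the rows with LossOfSignal=1.

```python
from __future__ import annotations

import math


def mw_to_dbm(p_mw: float) -> float:
    """dBm = 10*log10(P / 1 mW); zero or negative power maps to -inf."""
    return 10.0 * math.log10(p_mw) if p_mw > 0 else float("-inf")


def parse_sfpstatus(text: str) -> list[dict]:
    """Parse `pvget DAQ:NEH:XPM:<n>:SFPSTATUS` output into one dict per SFP row."""
    rows: list[dict] = []
    for line in text.splitlines():
        fields = line.replace("|", " ").split()
        if len(fields) != 4:
            continue  # PV name/timestamp line, blank lines
        try:
            los, absent = int(fields[0]), int(fields[1])
            tx_mw, rx_mw = float(fields[2]), float(fields[3])
        except ValueError:
            continue  # column header line or shell prompt
        rows.append({
            "index": len(rows),  # assumed to follow the XPM's SFP ordering
            "loss_of_signal": bool(los),
            "module_absent": bool(absent),
            "tx_dbm": mw_to_dbm(tx_mw),
            "rx_dbm": mw_to_dbm(rx_mw),
        })
    return rows


if __name__ == "__main__":
    print(f"{mw_to_dbm(0.6):.1f} dBm")  # the example from the notes above
    for row in parse_sfpstatus("1 0 0.5701 0.0001\n0 0 0.5883 0.7572"):
        print(row)
```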

Programming Firmware

From Matt. He says the current production version (which still suffers from xpm-link-glitch storms) is 0x030504. The git repo with firmware is here:

https://github.com/slaclab/l2si-xpm

Please remember to stop the pyxpm process associated with the xpm before proceeding.

Connect to tmo-daq as tmoopr and use procmgr stop neh-base.cnf pyxpm-xx.

| Code Block |

|---|

# Use a machine with ethernet access to the ATCA switch (drp-neh-ctl01, or drp-srcf-mon001 for production hutches)

ssh drp-neh-ctl01

# FirmwareLoader binary copied from afs

~weaver/FirmwareLoader/rhel6/FirmwareLoader -a <XPM_IPADDR> <MCS_FILE>

# Power-cycle the board from the shelf manager

ssh psdev

source /cds/sw/package/IPMC/env.sh

fru_deactivate shm-fee-daq01/<SLOT>

fru_activate shm-fee-daq01/<SLOT>

# The MCS_FILE can be found at:

# /cds/home/w/weaver/mcs/xpm/xpm-0x03060000-20231009210826-weaver-a0031eb.mcs

# /cds/home/w/weaver/mcs/xpm/xpm_noRTM-0x03060000-20231010072209-weaver-a0031eb.mcs |
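The MCS filenames appear to encode the firmware variant, version, build timestamp, builder and git hash. The pattern in this sketch is inferred from the two example names only, so treat it as an assumption; the helper is hypothetical and can help confirm you are loading the RTM or noRTM variant you intend.

```python
import os
import re
from datetime import datetime

# Inferred pattern: <variant>-0x<8 hex digit version>-<YYYYmmddHHMMSS>-<builder>-<git hash>.mcs
MCS_RE = re.compile(
    r"^(?P<variant>.+?)-0x(?P<version>[0-9A-Fa-f]{8})-(?P<stamp>\d{14})"
    r"-(?P<builder>[^-]+)-(?P<git>[0-9A-Fa-f]+)\.mcs$"
)


def parse_mcs_name(path: str) -> dict:
    """Split an MCS filename into its (assumed) fields; raise if it doesn't match."""
    name = os.path.basename(path)
    m = MCS_RE.match(name)
    if m is None:
        raise ValueError(f"unrecognized MCS filename: {name}")
    return {
        "variant": m.group("variant"),  # e.g. "xpm" or "xpm_noRTM"
        "version": int(m.group("version"), 16),
        "built": datetime.strptime(m.group("stamp"), "%Y%m%d%H%M%S"),
        "builder": m.group("builder"),
        "git": m.group("git"),
    }


if __name__ == "__main__":
    info = parse_mcs_name(
        "/cds/home/w/weaver/mcs/xpm/xpm_noRTM-0x03060000-20231010072209-weaver-a0031eb.mcs"
    )
    print(info["variant"], hex(info["version"]), info["built"], info["git"])
```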

Incorrect Fiducial Rates

In Jan. 2023 Matt saw a failure mode where xpmpva showed 2kHz fiducial rate instead of the expected 930kHz. This was traced to an upstream accelerator timing distribution module being uninitialized.

In April 2023, DAQs run on SRCF machines had 'PGPReader: Jump in complete l1Count' errors. Matt found XPM:0 receiving 929kHz of fiducials but only transmitting 22.5kHz, which he thought was due to CRC errors on its input. Also XPM:0's FbClk seemed frozen. Matt said:

I could see the outbound fiducials were 22.5kHz by clicking one of the outbound ports LinkLoopback on. The received rate on that outbound link is then the outbound fiducial rate.

At least now we know this error state is somewhere within the XPM and not upstream.

The issue was resolved by resetting XPM:0 with fru_deactivate/fru_activate, which cleared the bad state.

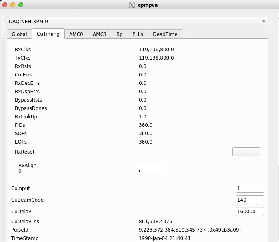

Note that when the XPMs are in a good state, the following values should be seen:

- Global tab:

  - RecClk: 185 MHz

  - FbClk: 185 MHz

- UsTiming tab:

  - RxClks: 185 MHz

  - RxLinkUp: 1

  - CrcErrs: 0

  - RxDecErrs: 0

  - RxDspErrs: 0

  - FIDs: 929 kHz

  - SOFs: 929 kHz

  - EOFs: 929 kHz
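The checklist above can be turned into a quick comparison against values read off xpmpva. This sketch does not read any PVs: the readings dictionary is typed in by hand, the "Tab/Field" keys are hypothetical labels, and the tolerances (1% for clocks and fiducial rates, exact for counters and link flags) are our assumption. The 185 MHz clocks are nominally 1300/7 ≈ 185.7 MHz, which the tolerance absorbs.

```python
from __future__ import annotations

from typing import Mapping

# Expected good-state values from the list above: (value, relative tolerance).
# Tolerances are an assumption; zero tolerance means "must match exactly".
GOOD_STATE: dict[str, tuple[float, float]] = {
    "Global/RecClk": (185e6, 0.01),
    "Global/FbClk": (185e6, 0.01),
    "UsTiming/RxClks": (185e6, 0.01),
    "UsTiming/RxLinkUp": (1, 0.0),
    "UsTiming/CrcErrs": (0, 0.0),
    "UsTiming/RxDecErrs": (0, 0.0),
    "UsTiming/RxDspErrs": (0, 0.0),
    "UsTiming/FIDs": (929e3, 0.01),
    "UsTiming/SOFs": (929e3, 0.01),
    "UsTiming/EOFs": (929e3, 0.01),
}


def check_xpm(readings: Mapping[str, float]) -> list[str]:
    """Describe every reading that is missing or outside its tolerance."""
    problems = []
    for key, (expected, rel_tol) in GOOD_STATE.items():
        if key not in readings:
            problems.append(f"{key}: missing")
        elif abs(readings[key] - expected) > rel_tol * abs(expected):
            problems.append(f"{key}: {readings[key]:g} (expected ~{expected:g})")
    return problems


if __name__ == "__main__":
    readings = {key: value for key, (value, _) in GOOD_STATE.items()}
    readings["UsTiming/FIDs"] = 2e3  # the Jan. 2023 symptom described above
    print(check_xpm(readings))  # ['UsTiming/FIDs: 2000 (expected ~929000)']
```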

No RxRcv/RxErr Frames in xpmpva

If RxRcv/RxErr frames are stuck in xpmpva it may be that the network interface to the ATCA crate is not set up for jumbo frames.

Link Issues

If XPM links don't lock, here are some past causes:

- check that transceivers (especially QSFP, which can be difficult) are fully plugged in.

- for opal detectors:

  - use devGui to toggle between xpmmini/LCLS2 timing (Matt has added this to the opal config script, in the part that executes at startup time)

  - hit TxPhyReset in the devGui (this is now done in the opal drp executable)

  - if timing frames are stuck in a camlink node, hitting TxPhyPllReset started the timing frame counters going (and is lighter-weight than the xpmmini→lcls2 timing toggle)

- on a TDet node we found that "kcuSim -T" (reset timing PLL) made a link lock

- for timing system detectors: run "kcuSim -s -d /dev/datadev_1". Starting a drp process on the drp node should do the same initialization of the timing registers; the drp executable doesn't need to receive any transitions for this.

- hit Tx/Rx reset in the xpmpva GUI (AMC tabs).

- use loopback fibers (or click a loopback checkbox in xpmpva) to determine which side has the problem

- try swapping fibers in the BOS to see if the problem is on the xpm side or the kcu side

- we once saw a case where we had to power cycle a camlink drp node to make the xpm timing link lock. Matt suggests that hitting the PLL resets in the rogue GUI could be a more delicate way of doing this.

- (old information with the old/broken BOS) Valerio and Matt noticed that the BOS sometimes lets its connections deteriorate. To fix:

  - ssh root@osw-daq-calients320

  - omm-ctrl --reset

Timing Frames Not Properly Received

- do TXreset on appropriate port

- toggling between xpmmini and lcls2 timing can fix it (this is now in the code; previously the code did an lcls1-to-lcls2 timing toggle)

- sometimes XPMs have become confused and think they are receiving 26 MHz timing frames when they should be receiving 0.9 MHz (this can be seen in the upstream-timing tab of xpmpva, "UsTiming"). You can determine which XPM is responsible by putting each link in loopback mode: if it is working properly you should see 0.9 MHz of rx frames in loopback mode (normally 20 MHz of frames in normal mode). Proceed upstream until you find a working XPM, then do Tx resets (and Rx?) downstream to fix them.
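The upstream search in the last bullet is a linear walk along the XPM chain. A minimal sketch, assuming you supply the chain ordered from the misbehaving XPM toward the top of the timing tree together with the loopback Rx frame rate you measured for each; the XPM names in the example are illustrative only and the 5% tolerance around 929 kHz is an assumption.

```python
from __future__ import annotations

from typing import Mapping, Sequence

NOMINAL_LOOPBACK_HZ = 929e3  # expected Rx frame rate with a link in loopback
REL_TOL = 0.05               # assumed tolerance around the nominal rate


def is_healthy(rate_hz: float) -> bool:
    return abs(rate_hz - NOMINAL_LOOPBACK_HZ) <= REL_TOL * NOMINAL_LOOPBACK_HZ


def find_first_good(chain: Sequence[str], loopback_hz: Mapping[str, float]):
    """Walk from the misbehaving XPM toward the top of the timing chain.

    `chain` is ordered downstream -> upstream. Returns (first healthy XPM or
    None, unhealthy XPMs ordered upstream -> downstream), i.e. the order in
    which to work downstream with Tx (and possibly Rx) resets.
    """
    unhealthy: list[str] = []
    for xpm in chain:
        if is_healthy(loopback_hz[xpm]):
            return xpm, unhealthy[::-1]
        unhealthy.append(xpm)
    return None, unhealthy[::-1]


if __name__ == "__main__":
    # Example names/rates only: XPM:5 reports 26 MHz, XPM:3 and XPM:0 look fine.
    rates = {"XPM:5": 26e6, "XPM:3": 929e3, "XPM:0": 929e3}
    print(find_first_good(["XPM:5", "XPM:3", "XPM:0"], rates))  # ('XPM:3', ['XPM:5'])
```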

Only Receiving Fraction of L1Accepts and Transitions

On 2024/09/30 it was observed that only a fraction of l1accepts and transitions were getting through to the high-rate-encoder. Matt mentioned that it might be necessary to click the Clear button on groupca.

This resolved the issue.

26 MHz Signal

On 2024/09/27 XPM5 was reporting 26 MHz in loopback mode on all ports (instead of the expected 928 kHz). Matt mentioned that he had observed this before, but it's unclear exactly where that number comes from. A Tx link reset (upstream at XPM3) returned XPM5 to a nominal state.

Network Connection Difficulty

Saw this error on Nov. 2 2021 in lab3 over and over:

| Code Block |

|---|

WARNING:pyrogue.Device.UdpRssiPack.rudpReg:host=10.0.2.102, port=8193 -> Establishing link ... |

Matt writes:

That error could mean that some other pyxpm process is connected to it. Using ping should show if the device is really off the network, which seems to be the case. You can also use "amcc_dump_bsi --all shm-tst-lab2-atca02" to see the status of the ATCA boards from the shelf manager's view. (source /afs/slac/g/reseng/IPMC/env.sh[csh] or source /cds/sw/package/IPMC/env.sh[csh]) It looks like the boards in slots 2 and 4 had lost ethernet connectivity (with the ATCA switch) but should be good now. None of the boards respond to ping, so I'm guessing it's the ATCA switch that's failed. The power on that board can also be cycled with "fru_deactivate, fru_activate". I did that, and now they all respond to ping.

Firmware Varieties and Switching Between Internal/External Timing

NOTE: these instructions only apply for XPM boards running "xtpg" firmware. This is the only version that supports internal timing for the official XPM boards so sometimes firmware must be changed to xtpg to use internal timing. It has a software-selectable internal/external timing using the "CuInput" variable. KCU1500's running the xpm firmware have a different image for internal timing with "Gen" in the name (see /cds/home/w/weaver/mcs/xpm/*Gen*, which currently contains only a KCU1500 internal-timing version).

If the xpm board is in external mode in the database we believe we have to reinitialize the database by running:

python pyxpm_db.py --inst tmo --name DAQ:NEH:XPM:10 --prod --user tmoopr --password pcds --alias XPM

For internal timing, the CuInput flag (DAQ:NEH:XPM:0:XTPG:CuInput) is set to 1 instead of 0 (external timing from the first RTM SFP input, presumably labelled "EVR[0]" on the RTM, but we are not certain) or 3 (the second RTM SFP timing input, labelled "EVR[1]" on the RTM).

Matt says there are three types of XPM firmware: (1) an XTPG version which requires an RTM input (2) a standard XPM version which requires RTM input (3) a version which gets its timing input from AMC0 port 0 (with "noRTM" in the name). The xtpg version can take lcls1 input timing and convert to lcls2 or can generate internal lcls2 timing using CuInput=1. Now that we have switched the tmo/rix systems to lcls2 timing this version is not needed anymore for xpm0: the "xpm" firmware version should be used unless accelerator timing is down for a significant period, in which case xtpg version can be used with CuInput=1 to generate LCLS2 timing (note that in this case xpm0 unintuitively shows 360Hz of fiducials in xpmpva, even though lcls2 timing is generated). The one exception is the detector group running in MFX from LCLS1 timing which currently uses xpm7 running xtpg firmware.

This file puts xpm-0 in internal timing mode: https://github.com/slac-lcls/lcls2/blob/master/psdaq/psdaq/cnf/internal-neh-base.cnf. Note that in internal timing mode the per-readout-group L0Delay seems to default to 90. Fix it with "pvput DAQ:NEH:XPM:0:PART:0:L0Delay 80".

One should switch back to external mode by setting CuInput to 0 in the xpmpva CuTiming tab. You still need to switch to the external-timing cnf file after this is done. Check that the FiducialErr box is not checked (try ClearErr to see if that fixes it). If it doesn't clear, it can be a sign that ACR has put in the "wrong divisor" on their end.

...

NOTE: we have an intermittent power-on deadlock: when the opal/feb-box (front-end-board) is powered on, the LED on the FEB box stays red until the DAQ has configured it, at which point it turns green. Sometimes this affects the "@ID?" and "@BS?" registers and the DAQ will crash (hence the deadlock). We should tolerate the ID/BS registers not working when the drp executable starts. The missing configuration can be fixed by loading a rogue yaml config file included in the cameralink-gateway git repo: go to the software directory and run with enableConfig=1 like this: "python scripts/devGui --pgp4 0 --laneConfig 0=Opal1000 --pcieBoardType XilinxKcu1500 --enLclsII 1 --enableConfig 1 --startupMode 1"

...

python scripts/devGui --pgp4 0 --laneConfig 0=Opal1000 --pcieBoardType XilinxKcu1500 --enLclsII 1 --enableConfig 0 --startupMode 1 --enVcMask 0

...

python scripts/devGui --pgp4 0 --laneConfig 0=Piranha4 --pcieBoardType XilinxKcu1500 --enableConfig 1 --startupMode 1 --standAloneMode 1

...

python scripts/devGui --pgp4 1 --laneConfig 1=Opal1000 --pcieBoardType XilinxKcu1500

Note that reading the optical powers on the KCU is slow (16 seconds?). Larry has a plan to improve that speed. It's faster on the FEB.

...

- python scripts/wave8DAQ.py --start_viewer 1 --l 2 (for the front-end board)

- Use this to toggle between XpmMini and LCLS2 timing (maybe with --start_viewer 0)

- python scripts/wave8DAQ.py --l 3 --enDataPath 0 --startupMode 1 (run devGui at the same time as the daq)

- As of 2024-06-06 (at least) you can run the Kcu1500 devGui with rogue6 in ps-4.6.3:

| Code Block |

|---|

python scripts/PgpMonitor.py --numLane 8 --boardType XilinxKcu1500 |

- (new way of running the kcu1500 gui from the pgp-pcie-apps/ repo, currently needs rogue5 in ps-4.6.1) python scripts/PgpMonitor.py --numLane 8 --boardType XilinxKcu1500

- (OLD way of running the kcu1500 gui) python scripts/PcieDebugGui.py --boardType XilinxKcu1500 (the flag at the end is needed to get the qsfp optical powers)

...

lower-right: EVR0 (controls, for LCLS1 timing, typically not used for LCLS2 now)

lower-left: EVR1 (daq)

upper-right: PGP0 (controls)

upper-left: PGP1 (daq)

NOTE: cpo thinks (but could be wrong) that the two PGP connections are not equal: the dead time settings only apply to the PGP1 link.

Jumping L1Count

cpo saw this error showing up in rix fim1 (MR4K2) on 10/16/21:

...

The xpm remote-id bits (also called rxid in some devGui's). These lines come from psdaq/drp/XpmInfo.hh:

- int xpm = (reg >> 16) & 0xFF;

- int port = (reg >> 0) & 0xFF;

- int ipaddr_low_byte = (reg >> 8) & 0xFF;
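Decoding a raw remote-id word by hand is error-prone, so here is a small Python equivalent. It assumes the current field layout (xpm in bits 16-23, low byte of the IP address in bits 8-15, port in bits 0-7); the function name is hypothetical and this is not the code used by the drp.

```python
from __future__ import annotations


def decode_rxid(reg: int) -> dict[str, int]:
    """Split a 32-bit XPM remote-id (rxid) word into its fields (assumed layout)."""
    return {
        "xpm": (reg >> 16) & 0xFF,             # bits 16-23
        "ipaddr_low_byte": (reg >> 8) & 0xFF,  # bits 8-15
        "port": reg & 0xFF,                    # bits 0-7
    }


if __name__ == "__main__":
    print(decode_rxid(0x00020A05))  # {'xpm': 2, 'ipaddr_low_byte': 10, 'port': 5}
```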

JTAG programming

To program fim firmware via JTAG, use a machine like pslab04 and set up Vivado with:

...

If deadtime issues persist, bring up the IOC screen and verify that the trigger is set up correctly. There is a TriggerDelay setting on the main IOC screen on the Acquisition Settings panel. Large values cause deadtime, but values < ~20000 are fine. This value is calculated from the EvrV2:TriggerDelay setting, which can be found on the PyRogue Wave8Diag screen on the EvrV2CoreTriggers panel. Its value is in units of 185 MHz (5.4 ns) ticks, not ns.

RIX Absolute Mono Encoder

See Chris Ford's page here: UDP Encoder Interface (rix Mono Encoder)

...

| Code Block |

|---|

$ caget SP1K1:MONO:DAQ:{TRIG_RATE,FRAME_COUNT,ENC_COUNT,TRIG_WIDTH}_RBV

SP1K1:MONO:DAQ:TRIG_RATE_RBV 10.2042

SP1K1:MONO:DAQ:FRAME_COUNT_RBV 10045

SP1K1:MONO:DAQ:ENC_COUNT_RBV 22484597

SP1K1:MONO:DAQ:TRIG_WIDTH_RBV 0 |

Fixing "Operation Not Permitted" Error

Over time, the "tprtrig" process can generate this error, because the tpr driver on drp-neh-ctl002 keeps using up file descriptors and eventually runs out:

...

On Nov. 10 we found that the above "systemctl start" didn't work, complaining about a problem with avahi-daemon. Matt did "sudo modprobe tpr" and this appeared to work.
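To see whether the descriptor leak is building up before tprtrig fails, one option is to watch the process's open file descriptor count under /proc (Linux only). This is a sketch under the assumption that the leaked descriptors show up in the tprtrig process's own fd table; the helper names are hypothetical, and reading another user's /proc/<pid>/fd requires running as that user or as root.

```python
from __future__ import annotations

import os


def open_fd_count(pid: int) -> int:
    """Number of open file descriptors of `pid` (Linux /proc; may need root)."""
    return len(os.listdir(f"/proc/{pid}/fd"))


def pids_named(name: str) -> list[int]:
    """PIDs whose /proc/<pid>/comm matches `name` exactly (e.g. "tprtrig")."""
    pids = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue
        try:
            with open(f"/proc/{entry}/comm") as f:
                if f.read().strip() == name:
                    pids.append(int(entry))
        except OSError:
            continue  # process exited or /proc entry not readable
    return sorted(pids)


if __name__ == "__main__":
    for pid in pids_named("tprtrig"):
        print(pid, open_fd_count(pid))
```

Running this periodically (e.g. from cron) and noting whether the count only ever grows would show whether the leak is tracking the process.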

Hangs

Gabriel and cpo found in Oct 24 that larger ratios of event rate to trigger rate for the interpolating encoder appear to cause 100% deadtime. As a reminder, the mono_enc_trig rate can be at most a few hundred hertz (limited by Zach's PLC and/or hardware), while event rates can be much higher. What we found:

- 10kHz events, 10Hz trigger: works

- 71kHz events, 1Hz trigger: fails

- 71kHz events, 100Hz trigger: works

- 10kHz events, 1Hz trigger: fails intermittently
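The pattern above is easiest to see as the ratio of event rate to trigger rate: 710 and 1000 worked, 10000 failed intermittently and 71000 failed. The helper below only encodes those four data points (it is not a characterized limit) and reports whether a planned configuration falls inside the tested-good range, the known-bad range, or the untested gap between them.

```python
from __future__ import annotations

# (event rate Hz, mono_enc_trig rate Hz, outcome) as observed in the notes above.
OBSERVED = [
    (10e3, 10.0, "works"),
    (71e3, 1.0, "fails"),
    (71e3, 100.0, "works"),
    (10e3, 1.0, "fails intermittently"),
]

MAX_OK_RATIO = max(e / t for e, t, outcome in OBSERVED if outcome == "works")   # 1000
MIN_BAD_RATIO = min(e / t for e, t, outcome in OBSERVED if outcome != "works")  # 10000


def classify(event_hz: float, trigger_hz: float) -> str:
    """Place a configuration relative to the observed data points only."""
    ratio = event_hz / trigger_hz
    if ratio <= MAX_OK_RATIO:
        return "tested-ok"
    if ratio >= MIN_BAD_RATIO:
        return "known-bad"
    return "untested"
```

For example, classify(929e3, 300) gives a ratio of about 3100, which lands in the untested gap, so such a configuration would need its own test.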

Fixing "failed to map slave" Error

...

Access to accelerator controls IOCs is through testfac-srv01. Timing runs on cpu-as01-sp01 (and sp02?). Shelf manager is shm-as01-sp01-1.

In October 2024 we are starting to run devGui from the slaclab/epix-quad repo. Matt is using this command: "python scripts/epixQuadDAQ.py --pollEn False --initRead False --type datadev --dev /dev/datadev_0 --l 0"

EpixHR

Github repo for small epixhr prototype: https://github.com/slaclab/epix-hr-single-10k

...

| Code Block |

|---|

drp-srcf-cmp007:~$ kinit

Password for cpo@SLAC.STANFORD.EDU:

drp-srcf-cmp007:~$ ssh psdatmgr@drp-srcf-cmp007

Last login: Thu Jun 6 13:34:06 2024 from drp-srcf-cmp007.pcdsn

<stuff removed>

-bash-4.2$ whoami

psdatmgr

-bash-4.2$ weka status

WekaIO v4.3.1 (CLI build 4.2.7.64)

cluster: slac-ffb (48d60028-235e-4378-8f1b-a17d711514a6)

status: OK (48 backend containers UP, 128 drives UP)

protection: 12+2 (Fully protected)

hot spare: 1 failure domains (70.03 TiB)

drive storage: 910.56 TiB total

cloud: connected (via proxy)

license: OK, valid thru 2032-12-16T22:03:30Z

io status: STARTED 339 days ago (192 io-nodes UP, 252 Buckets UP)

link layer: Infiniband + Ethernet

clients: 343 connected, 1 disconnected

reads: 0 B/s (0 IO/s)

writes: 0 B/s (0 IO/s)

operations: 29 ops/s

alerts: 7 active alerts, use `weka alerts` to list them

-bash-4.2$ weka local status

Weka v4.2.7.64 (CLI build 4.2.7.64)

Cgroups: mode=auto, enabled=true

Containers: 1/1 running (1 weka)

Nodes: 4/4 running (4 READY)

Mounts: 1

To view additional information run 'weka local status -v'

-bash-4.2$ |

Slurm and daqmgr

Other useful commands for seeing pending jobs:

- scontrol -d show job 533121 | grep -i reason

- squeue -p drpq | grep PD
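The same information can be pulled into a script with squeue's standard -t (state), -h (no header) and -o (format) options. A minimal sketch: pending_jobs() needs Slurm on the PATH, while parse_pending() can be exercised on saved output. The drpq default matches the partition used above; the function names are hypothetical.

```python
from __future__ import annotations

import subprocess


def parse_pending(text: str) -> dict[str, tuple[str, str]]:
    """Parse `squeue -h -o "%i %u %r"` lines into {job_id: (user, reason)}."""
    jobs: dict[str, tuple[str, str]] = {}
    for line in text.splitlines():
        parts = line.split(None, 2)
        if len(parts) == 3:
            job_id, user, reason = parts
            jobs[job_id] = (user, reason.strip())
    return jobs


def pending_jobs(partition: str = "drpq") -> dict[str, tuple[str, str]]:
    """Pending (PD) jobs in `partition` with their reason; requires Slurm."""
    out = subprocess.run(
        ["squeue", "-p", partition, "-t", "PD", "-h", "-o", "%i %u %r"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_pending(out)
```

On a node with Slurm, something like `for job_id, (user, reason) in pending_jobs().items(): print(job_id, user, reason)` lists each pending job with its reason, e.g. ReqNodeNotAvail.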

| PROBLEM | POSSIBLE CAUSE | KNOWN SOLUTIONS | ||

|---|---|---|---|---|

| daqstat shows PENDING or squeue -u <user> shows ReqNodeNotAvail | Unknown; the node appears to be in the "drain" state. | sudo: YES, run-on-node: ANY

|