
...

S3DF main page: https://s3df.slac.stanford.edu/public/doc/#/

S3DF accounts & access: https://s3df.slac.stanford.edu/public/doc/#/accounts-and-access (your Unix account will need to be activated on the S3DF system)


...

| Warning |
|---|
| So far, data are only copied to S3DF on request, and run restores go to the PCDS Lustre file systems. |

Data access example

Scratch

A scratch folder is provided for every experiment and is accessible via the canonical path:

/sdf/data/lcls/ds/<instr>/<expt>/<expt-folders>/scratch

The scratch folder lives on a dedicated high-performance file system, and the path above is a link to that file system.

In addition to the experiment scratch folder, each user has their own scratch folder:

/sdf/scratch/users/<first character of name>/<user-name> (e.g. /sdf/scratch/users/w/wilko)

A user's scratch space is limited to 100 GB. As with the experiment scratch folder, old files are automatically removed when the scratch file system fills up. The df command shows the usage of a user's scratch folder.

An example notebook can be found here: /sdf/home/e/espov/s3df_demo.ipynb
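The per-user scratch path is derived from the user name, so it can be computed in a script. A minimal sketch, assuming the layout quoted above; user_scratch_dir is a hypothetical helper, not an S3DF-provided command:

```bash
#!/usr/bin/env bash
# Sketch: compute the per-user scratch folder from the layout
# /sdf/scratch/users/<first character of name>/<user-name>.
# user_scratch_dir is a hypothetical helper, not an S3DF command.

user_scratch_dir() {
    # Use the given name, else $USER, else the login name reported by id.
    local user="${1:-${USER:-$(id -un)}}"
    printf '/sdf/scratch/users/%s/%s\n' "${user:0:1}" "$user"
}

user_scratch_dir wilko   # /sdf/scratch/users/w/wilko

scratch="$(user_scratch_dir)"
echo "Scratch folder: $scratch"

# Report usage with df, but only where the folder exists (i.e. on S3DF).
if [ -d "$scratch" ]; then
    df -h "$scratch"
fi
```

Off S3DF the script only prints the computed path; on an S3DF node it also shows the df usage of your scratch folder.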

Large User Software And Conda Environments

...

```
% source /sdf/group/lcls/ds/ana/sw/conda2/manage/bin/psconda.sh
```

Other LCLS Conda Environments in S3DF

- There is a simpler environment for analyzing HDF5 files. Use "source /sdf/group/lcls/ds/ana/sw/conda2/manage/bin/h5ana.sh" to access it. It is also available in Jupyter.

Batch processing

The S3DF batch compute documentation describes Slurm batch processing. Here we give a short summary relevant to LCLS users.

A partition and a Slurm account should be specified when submitting jobs. The Slurm account is lcls:<experiment-name>, e.g. lcls:xpp123456. The account is used to keep track of resource usage per experiment.

```
% sbatch -p milano --account lcls:xpp1234 ........
```

You must be a member of the experiment's Slurm account, which you can check with a command like this:

```
sacctmgr list associations -p account=lcls:xpp1234
```

- Submitting jobs with the lcls account only allows preemptable jobs and requires specifying --qos preemptable. The lcls account is selected with --account lcls or --account lcls:default (Slurm automatically translates lcls to lcls:default, and the s-commands will show lcls:default).

- In S3DF, memory is limited by default to 4 GB per core. This is usually not an issue because processing jobs use many cores (e.g. a job with 64 cores would get 256 GB of memory).

- The memory limit is enforced: a job that exceeds it fails with an OUT_OF_MEMORY status.

- Memory can be increased with the --mem sbatch option (e.g. --mem 16G; the default unit is megabytes).

- The default total run time is 1 day; the --time option can be used to increase or decrease it.
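The rules above (experiment account versus the preemptable lcls account, and the 4 GB/core memory default) can be combined in a small helper that prints the matching sbatch command. This is a sketch under stated assumptions: lcls_sbatch_cmd is hypothetical, job.sh is a placeholder batch script, each task uses one core, and the job fits on one node, since --mem is a per-node value in Slurm.

```bash
#!/usr/bin/env bash
# Sketch: print an sbatch command line that follows the rules above.
# lcls_sbatch_cmd is a hypothetical helper, not an S3DF tool, and job.sh
# is a placeholder for your batch script.
#
# Usage: lcls_sbatch_cmd [experiment] [cores] [mem_gb]
#   empty experiment -> generic lcls account, which needs --qos preemptable
#   mem_gb           -> added as --mem only when above the 4 GB/core default

lcls_sbatch_cmd() {
    local experiment="${1:-}" cores="${2:-1}" mem_gb="${3:-0}"
    local -a args=(sbatch -p milano)

    if [ -n "$experiment" ]; then
        # Experiment account: lcls:<experiment-name>
        args+=(--account "lcls:${experiment}")
    else
        # The lcls account only accepts preemptable jobs.
        args+=(--account lcls --qos preemptable)
    fi

    # Assumes one core per task.
    args+=(--ntasks "$cores")

    # Default memory is 4 GB per core. Use an explicit G suffix because
    # --mem without a unit is read as megabytes.
    if (( mem_gb > cores * 4 )); then
        args+=(--mem "${mem_gb}G")
    fi

    echo "${args[*]} job.sh"
}

lcls_sbatch_cmd xpp1234 64   # sbatch -p milano --account lcls:xpp1234 --ntasks 64 job.sh
lcls_sbatch_cmd "" 8 48      # sbatch -p milano --account lcls --qos preemptable --ntasks 8 --mem 48G job.sh
```

Printing the command instead of running it lets you review the options first; submit it once sacctmgr confirms you belong to the account.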

Number of cores

Warning: Some cores of a milano batch node are used exclusively for file I/O (WekaFS). Therefore, although a milano node has 128 cores, only 120 can be used.
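Because only 120 cores per milano node are usable, the number of nodes a job needs is a ceiling division by 120 rather than by 128. A minimal sketch, assuming one core per task; nodes_needed is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Sketch: number of milano nodes needed when only 120 of the 128 cores
# per node are usable. nodes_needed is a hypothetical helper and assumes
# one core per task.

USABLE_CORES_PER_NODE=120

nodes_needed() {
    local ntasks="$1"
    # Integer ceiling division: round up to a whole node.
    echo $(( (ntasks + USABLE_CORES_PER_NODE - 1) / USABLE_CORES_PER_NODE ))
}

nodes_needed 120   # 1
nodes_needed 256   # 3
```

A full node therefore corresponds to --nodes 1 --ntasks-per-node=120.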

Submitting a job with --nodes 1 --ntasks-per-node=128 would fail with: Requested node configuration is not available

Environment Variables

sbatch options can also be set via environment variables. This is useful when running a program that calls sbatch itself and doesn't allow setting options on the command line, e.g.:

```bash
% SLURM_ACCOUNT=lcls:experiment executable-to-run [args]
```

or

```bash
% export SLURM_ACCOUNT=lcls:experiment
% executable-to-run [args]
```

The relevant environment variables are SBATCH_MEM_PER_NODE (--mem), SLURM_ACCOUNT (--account) and SBATCH_TIMELIMIT (--time). Options are applied in this order of precedence: command line, then environment variables, then #SBATCH directives within the batch script.
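Setting the variables as a prefix to a single command keeps them out of the rest of the shell session. A sketch, assuming a hypothetical wrapper function with_lcls_sbatch_env and example values for the account, memory and time limit:

```bash
#!/usr/bin/env bash
# Sketch: run a command with sbatch defaults supplied through environment
# variables. with_lcls_sbatch_env is a hypothetical helper, and the
# account, memory and time values are examples only.

with_lcls_sbatch_env() {
    # Prefix assignments apply only to this one command, not to the
    # rest of the shell session.
    SLURM_ACCOUNT=lcls:xpp1234 \
    SBATCH_MEM_PER_NODE=16G \
    SBATCH_TIMELIMIT=04:00:00 \
        "$@"
}

# Show what a program started this way would see.
with_lcls_sbatch_env printenv SLURM_ACCOUNT SBATCH_MEM_PER_NODE SBATCH_TIMELIMIT
```

Replace printenv with the program that calls sbatch. Any option that program passes explicitly on its sbatch command line still takes precedence over these variables.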

...

| Warning |
|---|
| onDemand requires a valid SSH key (~/.ssh/s3df/id_ed25519), which is generated automatically when an account is enabled in S3DF. Older accounts might not have this key but can create it by running /sdf/group/lcls/ds/dm/bin/generate-keys.sh on a login or interactive node. If you still have issues logging in, go to https://vouch.slac.stanford.edu/logout and try logging in again. |
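The key check described in the warning can be scripted. A sketch, assuming the key path and generator script named there; check_s3df_key is a hypothetical helper, and the generator only exists on S3DF nodes:

```bash
#!/usr/bin/env bash
# Sketch: check for the onDemand SSH key and create it if missing.
# The key path and generator script come from the warning above; the
# check_s3df_key function itself is a hypothetical helper.

S3DF_KEYGEN=/sdf/group/lcls/ds/dm/bin/generate-keys.sh

check_s3df_key() {
    local key="$HOME/.ssh/s3df/id_ed25519"
    if [ -f "$key" ]; then
        echo "present"
    elif [ -x "$S3DF_KEYGEN" ]; then
        # The generator is only available on S3DF login or interactive nodes.
        "$S3DF_KEYGEN" && echo "generated"
    else
        echo "missing"
    fi
}

check_s3df_key
```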

Please note that the "Files" application cannot read ACLs, which means that you will most likely not be able to access your experiment directories from it. JupyterLab (or an interactive terminal session) on the psana nodes does not have this problem.

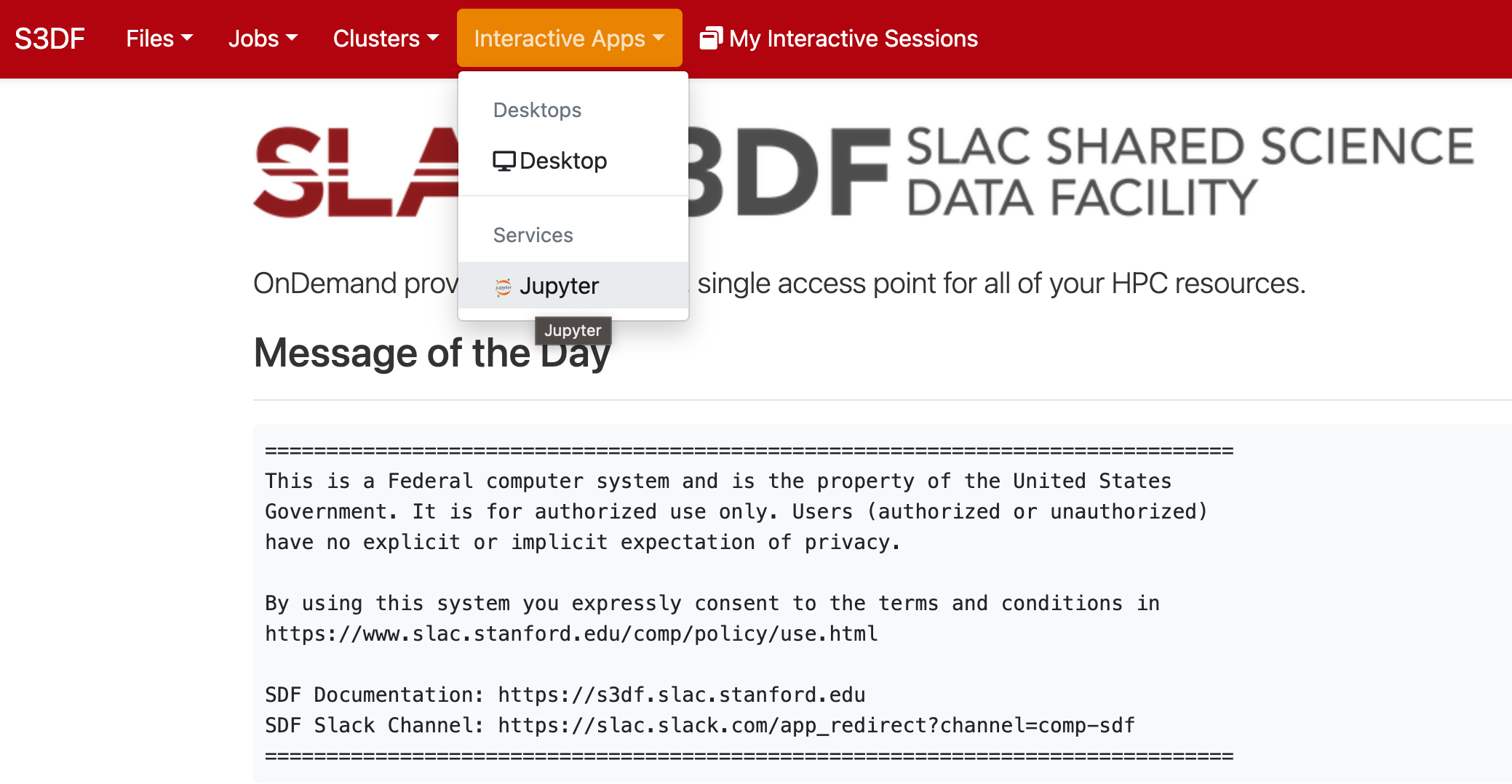

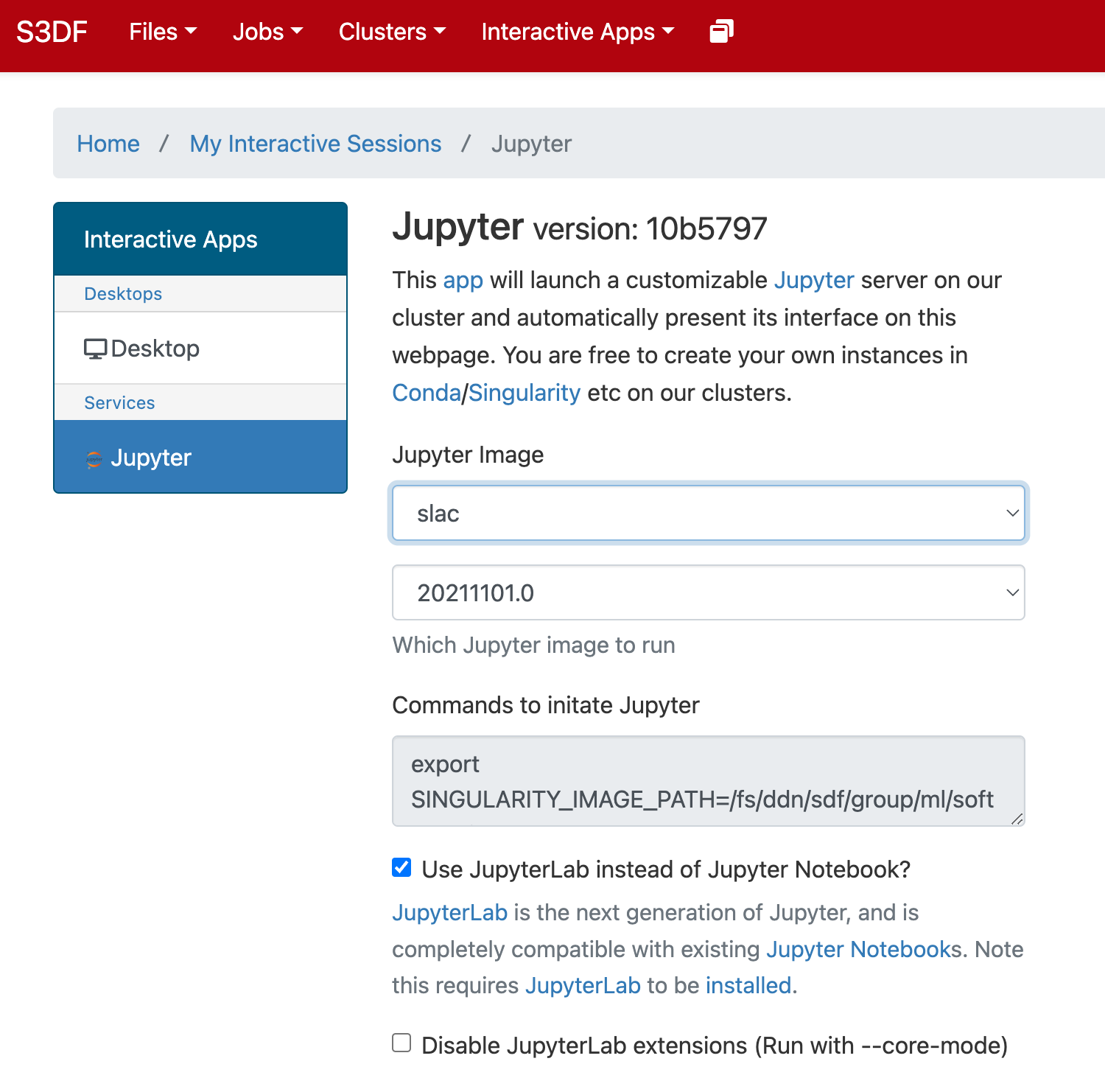

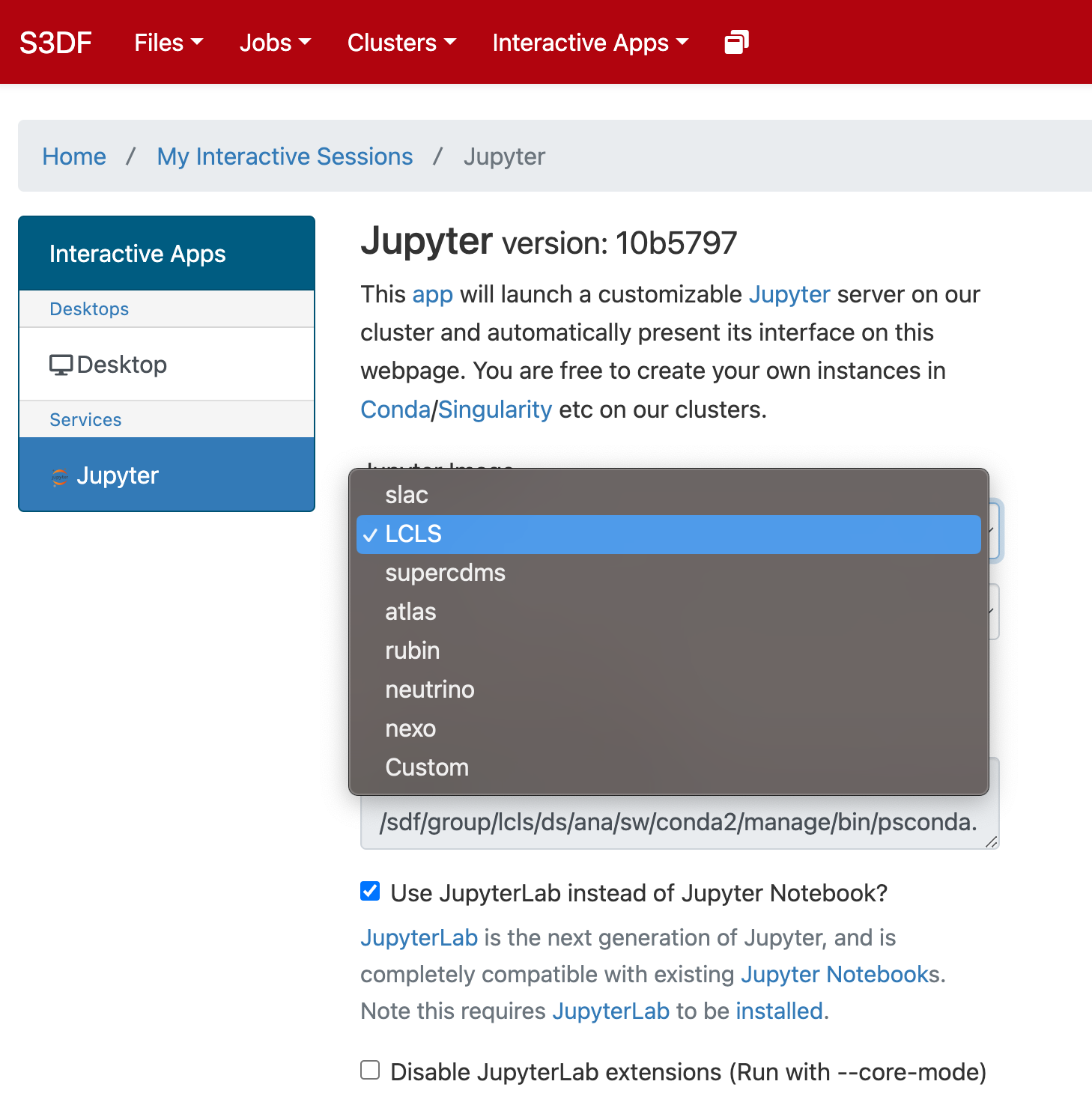

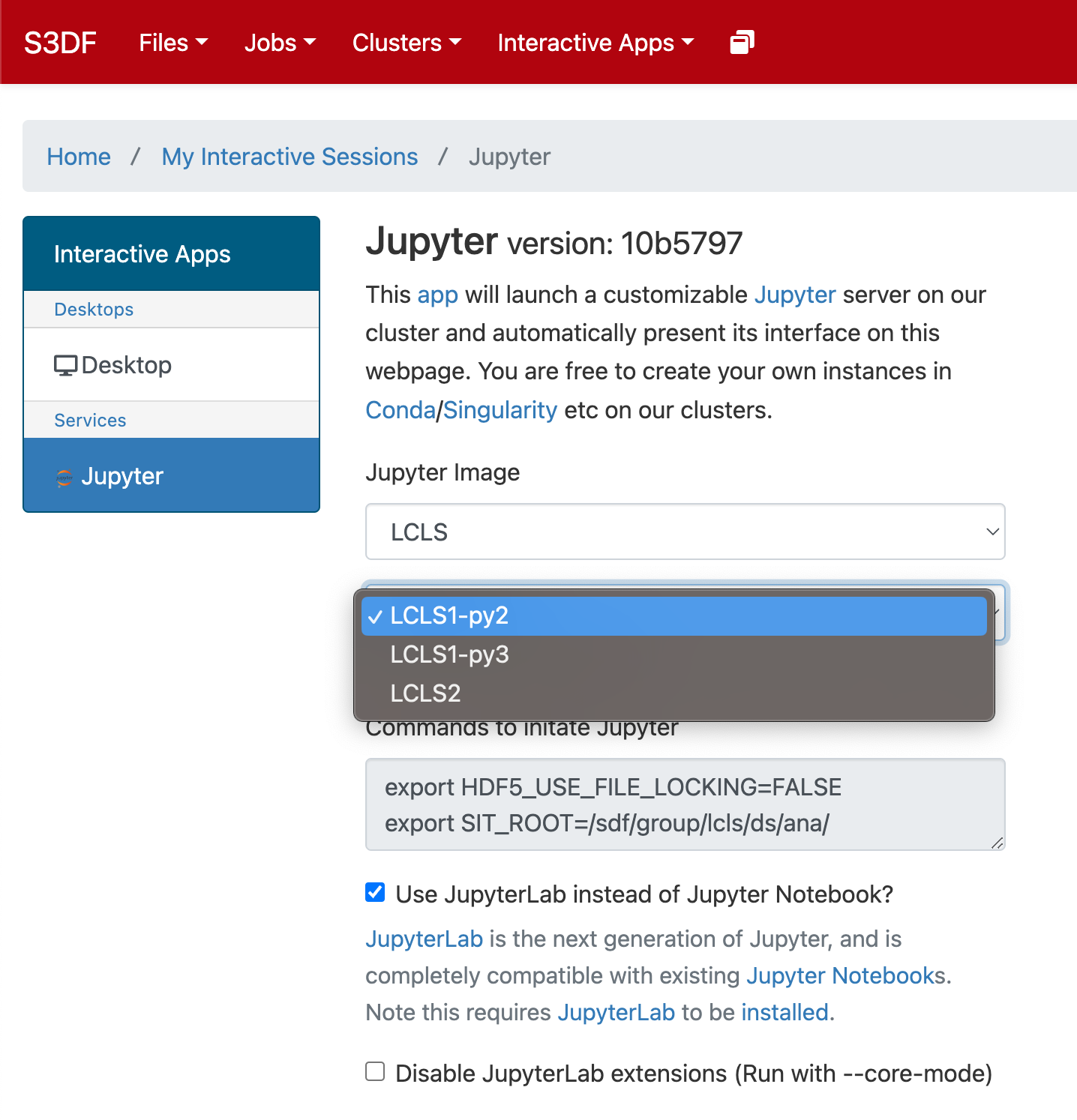

In "Interactive Apps", select Jupyter; this opens the following form:

...