
...

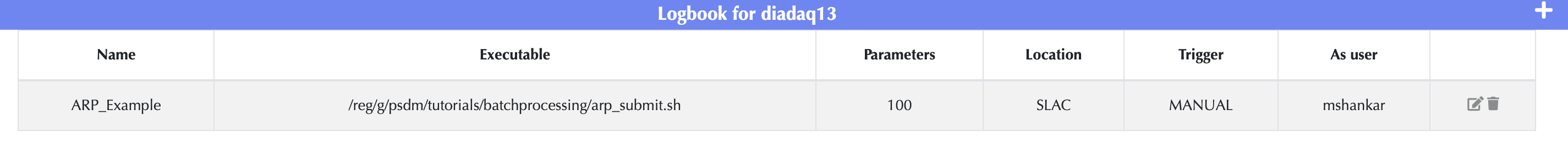

Under the Workflow dropdown, select Definitions. The Workflow Definitions tab is where scripts are defined. Each script is registered under a unique name, and any number of scripts can be registered. To register a new script, click the + button in the top right-hand corner.

1.1.1) Name

...

A unique name given to a registered script; the same script can be registered under different names with different parameters.
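Registered parameters reach the script as ordinary command-line arguments (the submission script later on this page forwards them with "$@"), so one script can behave differently under each registered name. The Python sketch below assumes two hypothetical registrations of the same script, one passing `--max-events 100 --tag quick` and one passing `--max-events 0 --tag full`. The option names are illustrative only and are not part of ARP.

```python
import argparse


def parse_args(argv=None):
    """Parse the parameters ARP passes on the script's command line.

    The options below are hypothetical examples; a real script defines
    whatever parameters its registrations use.
    """
    parser = argparse.ArgumentParser(description="Example ARP analysis script")
    # Hypothetical option: 0 means "process every event in the run".
    parser.add_argument("--max-events", type=int, default=0)
    # Hypothetical option: a label used when naming output files.
    parser.add_argument("--tag", default="default")
    return parser.parse_args(argv)


if __name__ == "__main__":
    # Registration "quick_look" might pass: --max-events 100 --tag quick
    # Registration "full_run" might pass:   --max-events 0 --tag full
    args = parse_args()
    print(f"max_events={args.max_events} tag={args.tag}")
```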

...

- EXPERIMENT - The name of the experiment; in the example shown above, diadaq13.

- RUN_NUM - The run number for the job.

- ARP_UPDATE_COUNTERS - A URL that can be used to update the progress of the job; these updates are automatically reflected in the UI. In previous releases, this was called JID_UPDATE_COUNTERS.

- ARP_JOB_ID - The id of this job execution. This is an internal identifier used in API calls to other data management systems, for example to update counters or in Airflow integrations.

- ARP_ROOT_JOB_ID - If using Airflow or another workflow engine, the identifier of the initial job in the DAG.

- ARP_LOCATION - The data management location this job is running at; for example, S3DF or NERSC.

- ARP_SLURM_ACCOUNT - The SLURM account to be used in sbatch calls (if applicable).
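A job script can pick these values up from its environment. Below is a minimal Python sketch. It assumes the counters URL is in ARP_UPDATE_COUNTERS and falls back to the older JID_UPDATE_COUNTERS name. It also assumes the counters endpoint accepts a JSON list of key/value objects. That payload shape is an assumption, not a documented ARP contract, so check it before relying on it. The HTTP call is a parameter so the logic can be exercised without a network.

```python
import json
import os
import urllib.request
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass
class ArpJobEnv:
    """Values ARP places in the job's environment (see the list above)."""
    experiment: str
    run_num: int
    job_id: Optional[str]
    root_job_id: Optional[str]
    location: Optional[str]
    slurm_account: Optional[str]
    update_counters_url: Optional[str]


def read_arp_env(env: Mapping[str, str] = os.environ) -> ArpJobEnv:
    """Collect the ARP variables; EXPERIMENT and RUN_NUM must be present."""
    return ArpJobEnv(
        experiment=env["EXPERIMENT"],
        run_num=int(env["RUN_NUM"]),
        job_id=env.get("ARP_JOB_ID"),
        root_job_id=env.get("ARP_ROOT_JOB_ID"),
        location=env.get("ARP_LOCATION"),
        slurm_account=env.get("ARP_SLURM_ACCOUNT"),
        # Fall back to the pre-rename variable used by older releases.
        update_counters_url=env.get("ARP_UPDATE_COUNTERS") or env.get("JID_UPDATE_COUNTERS"),
    )


def counters_payload(counters: Mapping[str, object]) -> bytes:
    """ASSUMPTION: the endpoint accepts a JSON list of {"key", "value"} objects."""
    body = [{"key": key, "value": str(value)} for key, value in counters.items()]
    return json.dumps(body).encode("utf-8")


def update_counters(url: Optional[str], counters: Mapping[str, object],
                    send=urllib.request.urlopen) -> Optional[int]:
    """POST progress counters to the job's update URL; no-op when no URL is set."""
    if not url:
        return None
    request = urllib.request.Request(
        url,
        data=counters_payload(counters),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # send() defaults to urlopen; it is a parameter only so it can be stubbed.
    with send(request, timeout=10) as response:
        return response.status
```

Inside a job, `job = read_arp_env()` followed by `update_counters(job.update_counters_url, {"Events processed": n})` would report progress. The counter name is hypothetical. Outside ARP, the variables are absent and `read_arp_env()` raises `KeyError`.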

1.1.4) Location

This defines where the analysis is run. Many experiments use the SLAC psana cluster (SLAC) or the SRCF (SRCF_FFB), while others prefer HPC facilities such as NERSC.

...

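```bash
#!/bin/bash
# Set up the psana conda environment.
source /reg/g/psdm/etc/psconda.sh
ABS_PATH=/reg/g/psdm/tutorials/batchprocessing
# Submit arp_actual.py (2 nodes, psanaq partition, 5-minute limit); %j expands to the SLURM job id.
# "$@" forwards the registered parameters; the ARP environment variables are inherited.
sbatch --nodes=2 --partition=psanaq --time=5 --output="arp_example_${RUN_NUM}_%j.log" "$ABS_PATH/arp_actual.py" "$@"
```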

This script submits /reg/g/psdm/tutorials/batchprocessing/arp_actual.py to the psanaq partition. arp_actual.py receives the registered parameters as command-line arguments and inherits the EXPERIMENT, RUN_NUM and JID_UPDATE_COUNTERS environment variables. Because --output is given, the log file name includes the run number and the SLURM job id, as described under Log files.
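For readers who prefer to launch jobs from Python, the sbatch line of the wrapper above can be mirrored as an argument list for `subprocess`. The sketch below is minimal and rests on stated assumptions. It reproduces the example's sbatch options. It adds `--account` only when ARP_SLURM_ACCOUNT is set, which is an assumption about how that variable is meant to be used. It does not reproduce the psconda.sh setup. By default it performs a dry run that prints and returns the command. sbatch copies the submitting environment to the job by default, which is how the ARP variables reach arp_actual.py.

```python
import os
import shlex
import subprocess
from typing import List, Mapping, Sequence

# Path taken from the example wrapper script above.
ABS_PATH = "/reg/g/psdm/tutorials/batchprocessing"


def build_sbatch_command(params: Sequence[str], env: Mapping[str, str]) -> List[str]:
    """Mirror the sbatch line of the example wrapper as an argument list."""
    cmd = [
        "sbatch",
        "--nodes=2",
        "--partition=psanaq",
        "--time=5",
        # SLURM expands %j to the job id; RUN_NUM is provided by ARP.
        f"--output=arp_example_{env['RUN_NUM']}_%j.log",
    ]
    account = env.get("ARP_SLURM_ACCOUNT")
    if account:
        # Assumption: charge the job to the account ARP provides, if any.
        cmd.append(f"--account={account}")
    cmd.append(f"{ABS_PATH}/arp_actual.py")
    # Forward the registered parameters unchanged, like "$@" in bash.
    cmd.extend(params)
    return cmd


def submit(params: Sequence[str], env: Mapping[str, str] = os.environ,
           dry_run: bool = True) -> List[str]:
    """Print the command (dry run) or actually call sbatch."""
    cmd = build_sbatch_command(params, env)
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True, env=dict(env))
    return cmd
```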

Log files:

→ If --output is not passed to sbatch, SLURM writes the log to slurm-<slurm_job_id>.out in the job's working directory; here, /reg/d/psdm/dia/diadaq13/scratch/slurm-<slurm_job_id>.out

→ In the example above, the log is still written to the job's default working folder (the scratch folder), but the file name is built from the run number and the job id. For example, the log for run 25 with job id 409327 is written to /reg/d/psdm/dia/diadaq13/scratch/arp_example_25_409327.log

→ To avoid cluttering the scratch folder, give --output an absolute path so the job log files are written elsewhere. See the "filename pattern" section of the sbatch man page for the supported substitutions, such as %j.
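To predict where a job's log will land, a small part of sbatch's filename-pattern handling can be mimicked in a few lines. This minimal Python sketch handles only `%j` (job id) and `%%` (a literal percent sign), and leaves every other pattern symbol from the man page untouched. As described above, it assumes relative names resolve against the job's working directory, which is the experiment scratch folder in this example.

```python
import posixpath
import re
from typing import Optional

# SLURM's default output pattern when --output is not given.
DEFAULT_OUTPUT = "slurm-%j.out"

# Matches "%%" or "%j"; all other symbols are left as they are.
_PATTERN = re.compile(r"%%|%j")


def expand_output(pattern: Optional[str], job_id: int, workdir: str) -> str:
    """Return the log path sbatch would use, for the %j and %% symbols only."""
    pattern = pattern or DEFAULT_OUTPUT
    name = _PATTERN.sub(lambda m: "%" if m.group(0) == "%%" else str(job_id), pattern)
    # Relative names are resolved against the job's working directory.
    return name if posixpath.isabs(name) else posixpath.join(workdir, name)
```

For instance, `expand_output("arp_example_25_%j.log", 409327, "/reg/d/psdm/dia/diadaq13/scratch")` returns the path given in the run 25 example above. An absolute pattern such as `/tmp/logs/run_%j.log` (a hypothetical location) ignores the working directory.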

2.2) arp_actual.py

This Python script performs the analysis, and any other processing needed, on the run data. Since this is only an example, arp_actual.py is deliberately simple. It is shown below.

...