
...

Under the Workflow dropdown, select Definitions. The Workflow Definitions tab is where scripts are defined; each script is registered under a unique name, and any number of scripts can be registered. To register a new script, click the + button in the top right-hand corner.

1.1.1) Name/Hash

...

The parameters that will be passed to the executable. Any number of name-value pairs can be associated with a job definition. These are made available to the above executable as environment variables; for example, in the above example, the value of the parameter LOOP_COUNT (100) can be obtained in a bash script by using ${LOOP_COUNT}. The parameters are also passed to the script as command line arguments. They can be used as arguments to the bsub/sbatch command to specify the queue, number of cores, etc., or they can be used to customize the script execution. In addition, details of the batch job are made available as the following environment variables.

- EXPERIMENT - The name of the experiment; in the example shown above, diadaq13.

- RUN_NUM - The run number for the job.

- JID_UPDATE_COUNTERS - This is a URL that can be used to update the progress of the job. These updates are also automatically reflected in the UI.
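As a minimal sketch, a Python analysis script could collect these job details as shown below. Only the three variable names come from the list above; the helper name `read_job_context` and the choice to return `None` for missing variables are illustrative assumptions, not part of the workflow system.

```python
import os


def read_job_context(env=None):
    """Collect the batch job details from environment variables.

    `read_job_context` is a hypothetical helper. Values are returned as
    strings, and a missing variable yields None so the script can also be
    exercised outside a batch job.
    """
    env = os.environ if env is None else env
    return {
        "experiment": env.get("EXPERIMENT"),
        "run_num": env.get("RUN_NUM"),
        "update_url": env.get("JID_UPDATE_COUNTERS"),
    }


if __name__ == "__main__":
    print(read_job_context())
```

Passing a plain dictionary instead of `os.environ` makes the helper easy to test without submitting a job.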

...

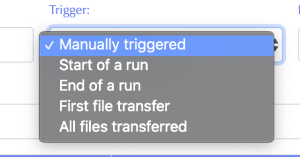

- Manually triggered - The user will manually trigger the job in the Workflow Control tab.

- Start of a run - When the DAQ starts a new run.

- End of a run - When the DAQ closes a run.

- First file transfer - When the data movers indicate that the first registered file for a run has been transferred and is available at the job location.

- All files transferred - When the data movers indicate that all registered files for a run have been transferred and are available at the job location.

1.1.5) As user

If the job is automatically triggered, it will be executed as this user. If the job is manually triggered, it will be executed as the user triggering the job manually.

1.1.6) Edit/delete job

...

The executable script used in the workflow definition should be used primarily to set up the environment and submit the analysis script to the HPC workload management infrastructure. For example, a simple executable script that uses LSF's bsub to submit the analysis script to the psdebugq queue is available at /reg/g/psdm/tutorials/batchprocessing/jid_submit.sh

```bash
#!/bin/bash
# Set up the conda environment
source /reg/g/psdm/etc/psconda.sh
ABS_PATH=/reg/g/psdm/tutorials/batchprocessing
# Submit the analysis script to psdebugq, forwarding all command line arguments
bsub -q psdebugq -o "logs/%J.log" python $ABS_PATH/jid_actual.py "$@"
```

This script will submit /reg/g/psdm/tutorials/batchprocessing/jid_actual.py on psdebugq and store the log files in /reg/d/psdm/dia/diadaq13/scratch/logs/<lsf_id>.log. /reg/g/psdm/tutorials/batchprocessing/jid_actual.py will be passed the job definition parameters as command line arguments and will inherit the EXPERIMENT, RUN_NUM and JID_UPDATE_COUNTERS environment variables.
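The submission step can also be sketched in Python, which may help when the argument list needs to be assembled programmatically. The function name `build_bsub_command` is hypothetical, and the sketch only builds and prints the command; it uses the `-q` and `-o` options already shown in the script and does not call bsub.

```python
import shlex


def build_bsub_command(queue, log_pattern, script_path, script_args):
    """Return a bsub invocation as an argument list (nothing is executed).

    Hypothetical helper: it mirrors the shell script above, using only the
    -q (queue) and -o (output file) options that appear there.
    """
    return ["bsub", "-q", queue, "-o", log_pattern,
            "python", script_path] + list(script_args)


if __name__ == "__main__":
    cmd = build_bsub_command(
        "psdebugq",
        "logs/%J.log",
        "/reg/g/psdm/tutorials/batchprocessing/jid_actual.py",
        ["100"],  # example job definition parameter
    )
    # Print a shell-safe rendering of the command for inspection
    print(" ".join(shlex.quote(part) for part in cmd))
```

Keeping the command as a list avoids quoting mistakes if it is later handed to `subprocess.run`.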

2.2)

...

jid_actual.py

This Python script does the analysis and whatever else is necessary on the run data. Since this is just an example, the Python script, jid_actual.py, doesn't get that involved. It is shown below.

```python
#!/usr/bin/env python
import os
import sys
import requests
import time
import datetime
import logging

logging.basicConfig(level=logging.DEBUG)
logger = logging.getLogger(__name__)

logger.debug("In the jid_actual script - current time is %s", datetime.datetime.now().strftime("%c"))

# Log the full environment, including EXPERIMENT, RUN_NUM and JID_UPDATE_COUNTERS
for k, v in sorted(os.environ.items()):
    logger.debug("%s=%s", k, v)

## Fetch the URL to POST progress updates
update_url = os.environ.get('JID_UPDATE_COUNTERS')
logger.debug("The URL to post updates is %s", update_url)

## These are the parameters that are passed in
logger.debug("The parameters passed into the script are %s", " ".join(sys.argv))

## Run a loop, sleep a second, then POST
for i in range(10):
    time.sleep(1)
    logger.debug("Posting for step %s", i)
    requests.post(update_url, json=[
        {"key": "<strong>Counter</strong>", "value": "<span style='color: red'>{0}</span>".format(i+1)},
        {"key": "<strong>Current time</strong>", "value": "<span style='color: blue'>{0}</span>".format(datetime.datetime.now().strftime("%c"))}
    ])

logger.debug("Done with job execution")
```
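The progress update posted by the script is a JSON list of key/value entries whose strings may carry HTML markup. A minimal sketch of building that payload separately from the POST is shown below; `make_counter` and `build_progress_payload` are hypothetical helper names, and the entry layout is taken from the script above rather than from any documented schema.

```python
import datetime


def make_counter(label, value, color):
    """Build one key/value entry, styled like the entries in the script above."""
    return {
        "key": "<strong>{0}</strong>".format(label),
        "value": "<span style='color: {0}'>{1}</span>".format(color, value),
    }


def build_progress_payload(step, now=None):
    """Return the list of entries posted for one step (hypothetical helper)."""
    now = datetime.datetime.now() if now is None else now
    return [
        make_counter("Counter", step, "red"),
        make_counter("Current time", now.strftime("%c"), "blue"),
    ]


if __name__ == "__main__":
    print(build_progress_payload(1))
```

The result could then be sent with `requests.post(update_url, json=build_progress_payload(i + 1))`, which is the same call the example script makes.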

2.3) Log File Output

The logger.debug statements are sent to the job's log file. Part of an example is shown below.

```
DEBUG:__main__:In the jid_actual script - current time is Thu Apr 16 11:12:40 2020
...
DEBUG:__main__:EXPERIMENT=diadaq13
...
DEBUG:__main__:JID_UPDATE_COUNTERS=https://pswww.slac.stanford.edu/ws/jid_slac/jid/ws/replace_counters/5e98a01143a11e512cb7c8ca
...
DEBUG:__main__:RUN_NUM=26
...
DEBUG:__main__:The parameters passed into the script are /reg/g/psdm/tutorials/batchprocessing/jid_actual.py 100
DEBUG:__main__:Posting for step 0
DEBUG:urllib3.connectionpool:Starting new HTTPS connection (1): pswww.slac.stanford.edu:443
DEBUG:urllib3.connectionpool:https://pswww.slac.stanford.edu:443 "POST /ws/jid_slac/jid/ws/replace_counters/5e98a01143a11e512cb7c8ca HTTP/1.1" 200 195
DEBUG:__main__:Posting for step 1
...
DEBUG:__main__:Done with job execution
------------------------------------------------------------
Sender: LSF System
Subject: Job 427001: in cluster Done
...
```
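When debugging a job, it can be useful to recover the environment the script actually saw from its log file. The sketch below assumes the default `logging.basicConfig` line format (`LEVEL:logger:message`) seen in the example output; `parse_env_from_log` is a hypothetical helper, and it treats any `NAME=value` debug message with an alphanumeric/underscore name as an environment variable.

```python
def parse_env_from_log(lines, logger_name="__main__"):
    """Extract NAME=value pairs logged at DEBUG level by the given logger."""
    prefix = "DEBUG:{0}:".format(logger_name)
    env = {}
    for line in lines:
        line = line.strip()
        if not line.startswith(prefix):
            continue  # skip other loggers, LSF trailer lines, and elisions
        name, sep, value = line[len(prefix):].partition("=")
        # Accept only messages whose left-hand side looks like a variable name
        if sep and name.replace("_", "").isalnum():
            env[name] = value
    return env


if __name__ == "__main__":
    sample = [
        "DEBUG:__main__:In the jid_actual script - current time is Thu Apr 16 11:12:40 2020",
        "DEBUG:__main__:EXPERIMENT=diadaq13",
        "DEBUG:__main__:RUN_NUM=26",
        "DEBUG:__main__:Posting for step 0",
    ]
    print(parse_env_from_log(sample))
```

Free-form messages such as "Posting for step 0" are ignored because they contain no `=` or have spaces on the left-hand side.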

3.0 Frequently Asked Questions (FAQ)

...