Participants:

| Institution | Names |
|---|---|
| ANL | Vallary Bhopatkar |
| IHEP | Yubo Han |
| LBNL | Aleksandra Dimitrievska, Maurice Garcia-Sciveres, Timon Heim, Charilou Labitan, Ben Nachman, Cesar Gonzalez Renteria, Mark Standke |
| Louisville | Swagato Banerjee |
| SLAC | Nicole Hartman, Ke Li, Jannicke Pearkes, Murtaza Safdari, Su Dong |
| UCSC | Zach Galloway, Carolyn Gee, Simone Mazza, Jason Nielsen, Yuzhan Zhao |

Schedule

| Day/Week | Dates | Activities |
|---|---|---|
| Monday | May/21 | Installation of EUDAQ 1.7. Last chance to test inside the tunnel. |
| Tuesday | May/22 | Caladium to be removed from the tunnel due to an invasive beam experiment before us. |
| | May/23-28 | Caladium parked inside ESA outside the beamline, but powered on and connected to allow continued integration of new DUT software. |
| Tuesday | May/29 | Test Facilities dismantle the previous experiment. Caladium reinstallation in the beamline and DUT setup can start in the ~afternoon. New-user in-person 116PRA training: 11:00am-12:00 noon, Building 28 Room 141. |
| Wednesday | May/30 | Installation and integration. Potential first beam. |
| | May/31-Jun/5 | Formal run period |
| Tuesday | Jun/5 | Beam off at 9am |

Data taking run shift list

Data Run Summary List

Event Log

May/7

The test period has been formally allocated for May/31-Jun/4, the very last period before the LCLS summer beam shutdown (until Sep/2018), to give as much time as possible to prepare the setup for the RD53A module. Three other experiments are scheduled before us, and some of them are invasive enough that the Caladium telescope will be removed from the tunnel and will have to be reinstalled just before our period.

May/9

The experiment before our session will end on Monday May/28, so dismantling that experiment and moving Caladium back into the beamline may start as early as Tuesday May/29. We therefore need to be prepared to start setup in ESA on Tuesday May/29. In regular run periods, Wednesday is normally off for machine development (MD), but for this last period of LCLS Run 16 there is no MD, so we may potentially have ESTB beam as early as Wednesday May/30. The whole session ends on Tuesday Jun/5 at 9am.

May/21

Ben is building the EUDAQ 1.7 release on ar-eudaq. It needs various associated software utility updates, such as gcc. Compiling is taking rather long...

The /home/tfuser/data space on ar-eudaq is effectively a new directory but inherits the same run number series. Older data (taken with the 1.5-dev release) have been moved to /home/tfuser/data_before_May2018. A new test data directory, /home/tfuser/test_root, has been created to hold prompt offline processed data such as test ROOT files; it also has a before_May2018 subdirectory for older test files.

Checked out the situation at ESA. The current experiment on shower radio signal detection continues through the night. They are sensitive to other electrical noise, so the tunnel fluorescent lights are turned off and there is no access until 3am. Driving Caladium parasitically could be another source of noise. Decided not to disturb the experiment and to see what we can do by 8am, when they are done.

The NI crate remained on, but logging in to it gives a prompt complaining "JTAG software might be incompatible with the earlier version of the DAQ software Version 2.0 (compiled Sep 28 2011)". Not sure whether this matters.

May/22

Caladium and the electronics rack were lifted out of the ESTB tunnel and placed on the ESA floor just behind the orange network racks so that EUDAQ software update tests can continue. Yubo and SD reconnected Caladium and at some point managed to start a run in ni_autortrig mode, which showed the "particle" (auto trigger) counters going up but no actual triggers or event building. After that, even the NI_MIMOSA process would get stuck in configuration. Ben's 1.7 release build was successful except for the online monitoring, which requires ROOT6; building ROOT6 apparently requires a node with full internet access, which ar-eudaq does not have. We still have easy ESA access for 1-2 days until the next experiment starts.

May/23

Retested the Caladium auto trigger run without touching the hardware. This time it just worked all the way through. Not clear what made the difference. Perhaps just a cold start of the MIMOSA DAQ?

May/25

Building of the EUDAQ 1.7 release continues. The challenge now is installing extern/libusb without internet access or system privileges. Scheduled the new ESTB user 116PRA training session for Tuesday 11am at Bldg 28-141. Carsten warned that Tuesday May/29 morning will be taken up by dismantling the previous experiment and moving concrete shielding, which may use up all the access keys. Our access to ESA may need to wait until at least Tuesday afternoon.

May/29

8:00AM Mike Dunning installed the current version of libusb and Ben managed to fully compile EUDAQ 1.7 release.

10:00AM ESA is on permitted access, so controlled key access is no longer needed until we close the area for beam. The tunnel area is still sealed off, however, because the Cu dump inside from the previous experiment shows some residual radioactivity that needs a few more hours to cool off.

11:00AM T539 newcomers training for 116PRA at Building 28-141. A separate session is scheduled for the LBNL T545 gang on Thursday.

11:00AM Some concrete shielding removal is still ongoing; target/dump removal will be reevaluated after lunch.

1:30PM New crew members Vallary, Ke and Jason exercised the Caladium auto trigger test runs in the parking position. The Caladium chiller water needed a small top-up.

4:30pm Update from Keith Jobe: "The target is removed, but that was not as helpful as expected. The copper target had decayed a factor of two from the morning reading to 160 mrem/hr contact, but now we were able to find that the concrete blocks are 70 mrem/hr at the target location. This is still high. Tomorrow the Test Facilities team will proceed as RWT-II with contamination protocols to remove the lead and steel bricks and then the concrete blocks. Once that is done, I expect reinstallation of the Caladium will be relatively easy."

May/30

11:00AM Test Fac crew disconnected Caladium and lifted it back into the tunnel.

1:30PM Caladium fully reconnected and some cameras mounted in place.

4:00PM ESA searched and returned to control access.

4:15PM Announced our plan for the coming week at the MCC weekly planning meeting. One major setback revealed at the meeting was that the main LCLS experiment coming in for day shifts will be doing continuous energy scans, which will make ESA beam delivery very difficult. Evening shifts will be more conventional. Fortunately, this week's accelerator program deputy is Toni Smith, who is also the principal expert for the ESTB beam setup. Our more relaxed beam quality requirements still leave some room for workable day-shift beam. We are not required to show up at the 8am daily operations meetings but are always welcome to bring requests and success news.

5:00PM In ESA with Carsten and Keith, trying to move Caladium into the beamline. After some puzzling positioning anomalies, we realized someone had unbolted the telescope stands from the stage base. Reseated the whole telescope and roughly centered it on the beam, but the telescope needs further proper seating tomorrow with the Test Fac crew. While driving the Caladium stage with Beckhoff, Carsten noticed a disturbing issue: the "Coarse X" drive setup panel step velocity control can jump to max velocity in one click, which is rather dangerous. One needs to be very alert to the step sizes of the stage controls. The chiller does not seem to circulate the cooling water, and we all noted that we needed to top off the water a few times. Test Fac will find a replacement chiller.

5:30PM Also mounted the insulating top plate made by Doug McCormick on the Caladium rotational DUT stage. The black nylon screws provided must be used to mount the DUT, so that the mounted device stays electrically isolated from the stage motors.

5:30PM Quick phone call from Toni Smith on whether to start beam tonight. Given that we don't have a real DUT in place, we decided to follow the canonical schedule and start beam only tomorrow morning at ~10am (the accelerator shift crew changes at 9am to start setup).

6:00PM Running Caladium in auto trigger mode in situ. It worked eventually, but at some stage, probably because I pushed the MIMOSA config "reload" button by mistake, every subsequent "read" command for checking the EUDET control registers yielded a whole panel of red bad readings. This does not seem to matter, as the run proceeded OK despite that. While running Caladium from ar-esaux2 in the ESA hall via ssh into ar-eudaq, I realized that the various puzzling observations of run control processes apparently stuck in a state were just the window not updating automatically. Even moving the mouse inside the window is not enough to wake it up; you have to actually click inside the window to force a display update.

7:00PM Tried to mount more cameras but had a frustrating time with the focus of camera 14 and eventually abandoned it. Need one more charger supply to add another camera.

7:30PM Tried to bring up the esadutdaq1 Sun server for RCE DUT but was unable to ping it after trying various network cables. The front panel LED says "170.20.4.101 / Main power off" which is rather odd. To be followed up.

May/31

Planned activities:

Before 10am: Test Fac: Caladium reseating, chiller replacement. ATLAS crew: investigation of DUT setup related issues - esadutdaq1 connection and a possible FE-I4 module as DUT.

10am: start beam with MCC with either Caladium alone or including a test DUT. Work with MCC to find a good beam spot tune. Can we replicate an EUDET monitoring window for MCC so they can see it directly? Try EUDAQ release 1.7 (or wait until Ben arrives?).

1pm: 116PRA training at 28-141 for the many arriving LBNL crew members, while RD53A DUT setup can start with Timon and others who have already done the training.

3pm: AD106 ESA orientation training at the ESA entrance for all newcomers who still need to take it.

Actual activities:

12:15 First beam with Caladium only. The initial spot was about 5mm in diameter with ~30 hit clusters/event. After talking to Toni, opened up the collimator somewhat, to ~100 clusters/event and a ~1 cm diameter spot, but a few mm off-center.

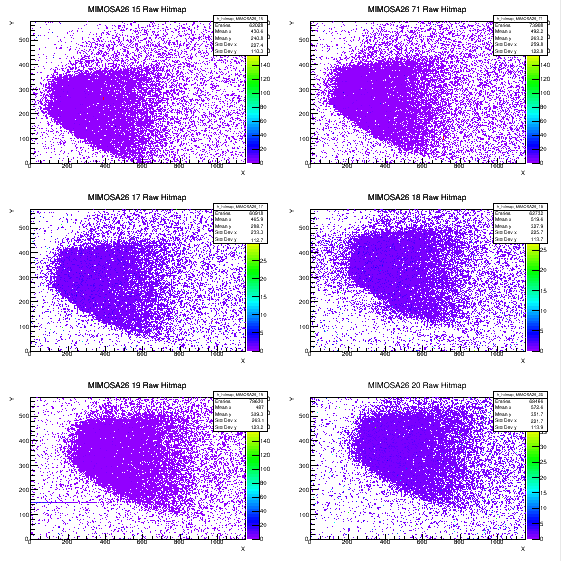

12:30 Ke and Vallary are adjusting the X-Y position of the telescope to make sure the beam is centered on all telescope planes. We see a reasonable beam spot, with sharp boundaries probably defined by an upstream aperture. There is a tilt in the telescope, so that the front 3 planes are centered while the centers of the back 3 planes are slightly higher. This was also visible during the Caladium beamline alignment, but it was decided to fine-tune this only if really needed, as it involves rather cumbersome shimming on one side.

1:30 Ben is testing EUDAQ 1.7 with new config files but cannot get the TLU producer up. The issue seems to be that the original V1.5 TLUProducer.exe for the NI crate Windows process is not compatible with V1.7, so it needs to be rebuilt on a Windows 7 machine. An old laptop from Su Dong's office was brought over to compile the new version, but there are many utilities to install to start with...

3:00 ESTB orientation AD106 starting. Needed to run it in two groups, as there are only 8 access keys.

4:30 Proceeding to install RD53A module on DUT stage. Also brought in FE-I4 module and RCE DUT readout as a backup program.

5:30 Instructions from Mike Dunning on installing the YARR readout computer. There are 4 IP addresses "permanently" allocated for our DUT readout on the internal ESA-RESTRICTED network 172.27.104.xx: xx=45,46 are ESADUTDAQ1,2, used for the RCE Sun UNIX host and the HSIO2 DTM; xx=52,53 were allocated to YARR readout in the previous run as ESADUTLBNL1,2. The new YARR readout computer is adopting the .52 IP:

| Item | Setting |
|---|---|
| YARR computer IP | 172.27.104.52 |
| Gateway | 172.27.104.1 |
| Netmask | 255.255.252.0 |

We took a cable at the tunnel end labelled with a yellow tag "2" and tagged "Sun server", which is connected via Belgen patch panel channel 19 and emerges on the central rack side, connecting to switch port 34 on the ESA-RESTRICTED side. Getting onto the network this way was easy, but installing more software in situ was a real pain.
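As a quick sanity check of the addressing above, the Python standard `ipaddress` module can confirm that the 255.255.252.0 netmask is a /22 prefix and that the YARR address, the gateway and the other allocated DUT addresses all fall in the same subnet. A minimal sketch; the host list is simply copied from the allocation described above and nothing here queries the real network.

```python
import ipaddress

# Interface settings for the YARR readout computer, copied from the table above.
yarr = ipaddress.ip_interface("172.27.104.52/255.255.252.0")
gateway = ipaddress.ip_address("172.27.104.1")

# DUT readout addresses allocated on ESA-RESTRICTED (.45, .46, .52, .53).
allocated = {
    "ESADUTDAQ1": "172.27.104.45",
    "ESADUTDAQ2": "172.27.104.46",
    "ESADUTLBNL1": "172.27.104.52",
    "ESADUTLBNL2": "172.27.104.53",
}

subnet = yarr.network  # network derived from address + netmask
print(f"subnet: {subnet} ({subnet.num_addresses} addresses)")
print(f"gateway on the same subnet: {gateway in subnet}")
for name, addr in allocated.items():
    print(f"{name:12s} {addr:15s} in subnet: {ipaddress.ip_address(addr) in subnet}")
```

The same check is a quick way to catch a mistyped address (for example a swapped octet) before a host is configured with it.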

Jun/1

6:00 Ben and Jan Dreyling continued discussing importing pre-compiled TLU-related images for the NI Windows system. Unfortunately, it is not just TLUProducer.exe but also a set of associated runtime .dll libraries. An alternative option, running TLUProducer on the YARR computer, is also under investigation and looks quite doable but ...

8:00 MCC found that the ESA middle mesh gate latch had been lost, which effectively broke the controlled-area protection, and the MCC assembly team has to search ESA to reestablish controlled access. This will take a few hours.

11:00 Controlled area reestablished, and we can go in on controlled access. Simone and Zach moved the HGTD setup into the back half of the telescope to run parasitically. The HGTD sensor is mounted immediately behind the 4th plane of Caladium (taped onto the Caladium plate). The HGTD/UCSC scope is taking over 172.27.104.56 as its DUT DAQ IP.

11:30 Moved the TLU USB connection from the NI crate to the YARR computer, and after local USB and other parameter adjustments, the Linux build of TLUProducer came up and is running on YARR.

12:00 Found a long USB extension cable at the SLAC electronics shop and hooked it up to the YARR computer's wireless antenna for internet access.

1:15 Tried to align the DUT and telescope with the laser. The horizontal laser seems to aim many mm below the cross wire on the beam pipe exit window (though this was probably correct, as this is where the beam was during yesterday's brief run). Adjusted the horizontal laser up to aim at the cross center of the beam exit window. The 1st plane was then ~1mm above and the 6th plane ~2mm below (due to the tilt). Moved the whole telescope up by ~6-7mm with Y-coarse. The RD53A DUT Y-centroid is clearly 5mm above the EUDET MIMOSA center line, so used Y-fine to move the DUT down by 5mm to line up with the telescope Y-center.

1:45 Ready for beam.

2:00 Beam is coming through and we are running EUDAQ 1.7 (/opt/install/eudaq/eudaq-1.7/) with Caladium only! The beam is low in Y (Caladium is too high in Y). This confirms that the initial laser position, aiming several mm below the beam pipe exit cross wire, was deliberate and correct, and the telescope should not have been adjusted up. Ke retuned the telescope Y position to recenter the beam spot. Took two high-statistics runs (7 and 8) as beam spot position references. The online monitoring histograms from Run 8 (the default save location for online histograms is /opt/install/eudaq/eudaq-1.7/bin) were copied to /home/tfuser/Desktop/2018June_onlinemon/. These may be the combined Run 7 and Run 8 histograms, since the online monitoring histograms accumulate across runs by default until manually reset.

3:00 The UCSC prompt offline analysis package is broken with the new V1.7. We have no other known working build for the new data.
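Because the online monitor histograms accumulate across runs until reset, a single run's content can be recovered bin by bin by subtracting the snapshot saved at the end of the previous run, provided no reset happened in between. A minimal sketch with plain Python lists standing in for histogram bins; `per_run_counts` is a hypothetical helper, and with ROOT histograms the same subtraction could be done with `TH1::Add(hPrevious, -1)`.

```python
from typing import List, Sequence


def per_run_counts(cumulative_after: Sequence[int],
                   cumulative_before: Sequence[int]) -> List[int]:
    """Recover one run's histogram from two cumulative online-monitor snapshots.

    `cumulative_before` is the snapshot saved at the end of the previous run and
    `cumulative_after` the one saved at the end of this run, with no reset between.
    """
    if len(cumulative_after) != len(cumulative_before):
        raise ValueError("snapshots must have the same binning")
    diff = [a - b for a, b in zip(cumulative_after, cumulative_before)]
    if any(d < 0 for d in diff):
        # A negative bin means the histograms were reset between the two snapshots.
        raise ValueError("negative bin: histograms were probably reset in between")
    return diff
```

If no snapshot was saved at the end of the earlier run (as with Run 91 before Run 92), the runs cannot be separated this way and the saved histogram has to be treated as their sum.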

3:15 Access for HGTD to turn on HV.

3:30 While RD53A DAQ preparation and tuning calibration are in progress, the run program is primarily HGTD tests for the time being; whenever RD53A is ready for beam we should switch to it immediately.

5:00 The setup with TLUProducer running on YARR clearly cannot be the standard solution for all future Caladium runs, as the YARR computer returns to LBNL at the end of this period. Ben/Cesar/SD started looking into migrating TLUProducer onto the Sun server esadutdaq1, which is a regular part of the Caladium setup. The server needed a push of the front power button to fully power up (otherwise it shows "main power off"). An extra (white) Ethernet cable was added from the Sun server (top socket #1 of the two Ethernet sockets near the rack edge) to Belgen patch panel channel 8, which is connected to switch port 37 on the ESA-RESTRICTED network. The node came up correctly as esadutdaq1 with IP 172.27.104.45. It has a fairly modern SLC6 operating system. However, login is always a bit slow because SLC6 (a CERN product) by default checks network info at CERN first...

7:00 HGTD data taking continues, with requests to MCC for different intensities. Operation paused whenever LCLS changed beam energy, which typically has a significant effect on the ESTB particle rate, and the beam spot can shift by a few mm. MCC typically needs to call us at a beam energy change, get the new setup to stabilize, and then call us again to take new runs.

9:00 HGTD data taking done for the day.

9:50 MCC called; they had trouble reestablishing beam after the shift change. Toni Smith has been called in.

10:17 MCC called with beam reestablished. Primary beam E=11.48 GeV, ABend dipole=11.21 GeV, beam Q=0.25 nC. The beam spot appeared to be off center by ~5mm in X and ~2mm in Y. Adjusted the telescope to center it.

10:53 Started tonight's final run, Run 25, for beam spot characterization. 30 hit clusters per plane per event (65 hit pixels/plane for clean planes). Good coverage of a large part of the 2x1cm area. Do we care about a bit of the beam tail clipping the MIMOSA edge metal frame and producing some showering debris?

23:38 Done for tonight.

Jun/2

Plan:

Operations resume at 9am. Timon is doing an RD53A DAQ build overnight, aiming for an RD53A run in the morning. Depending on the outcome, we will have a different mix of RD53A runs and HGTD runs. Some special tasks besides the main data taking:

- Can we establish a working prompt offline analysis build for the new V1.7 data?
- TLUProducer migration from YARR to the Sun server esadutdaq1
- Is there a clever way to serve our online histograms to MCC?
- Try to get RCEProducer integrated into V1.7?

9:30am discussion with Toni Smith (accelerator program deputy) on today's plan: operating during the day shift with LCLS energy scans is much more of a burden for MCC, and they encouraged us to take more advantage of the steady conditions in the evening shifts. There will be frequent energy changes, resulting in beam spot shifts and particle rate fluctuations at ESTB. Unless we are doing precise data taking that requires strictly steady conditions during the day shift, we should just follow the conditions and take whatever is there, bothering MCC only infrequently, as the LCLS energy scan is a busy operation for them. Runs needing controlled steady conditions can hopefully mostly be scheduled for evening shifts.

Activities:

9:00 With online operations now defaulting to EUDAQ 1.7, the various documentation pages are being updated; please be alert to changes from the previous V1.5 setup.

9:30 Daily program discussion with MCC (see planning section above).

9:45 First short spell of beam (same beam energy as last night) showed similar particle rates and same beam spot positions as last night.

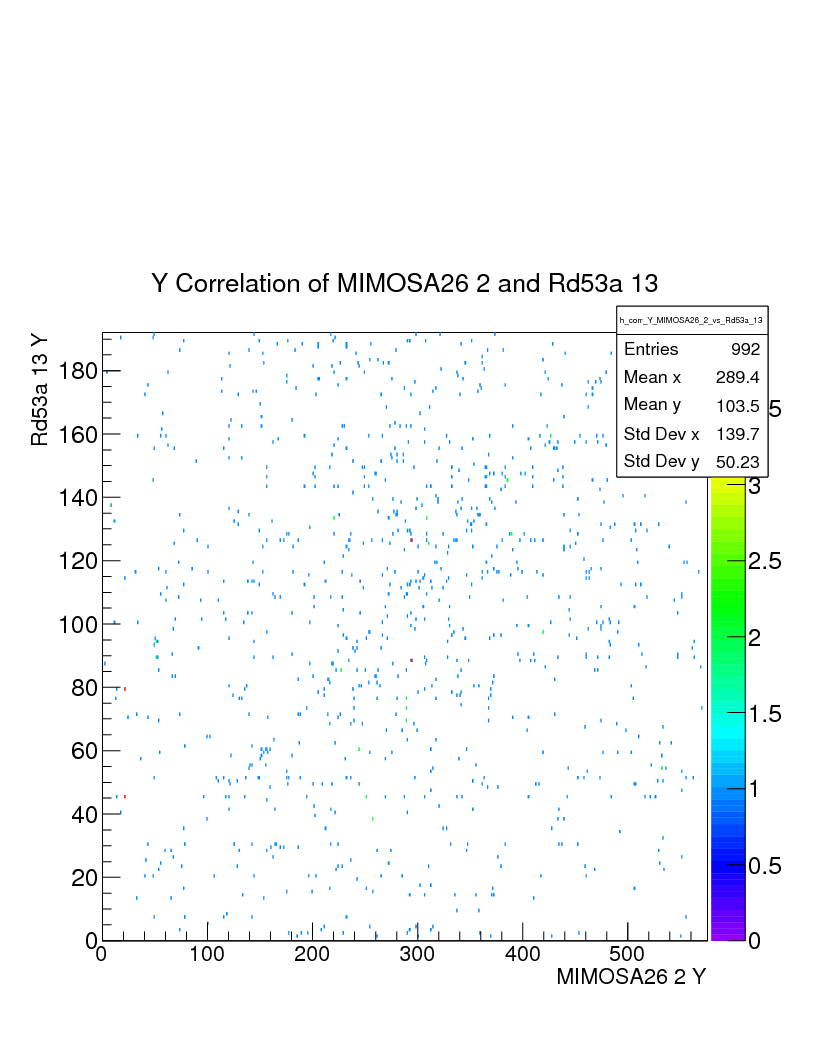

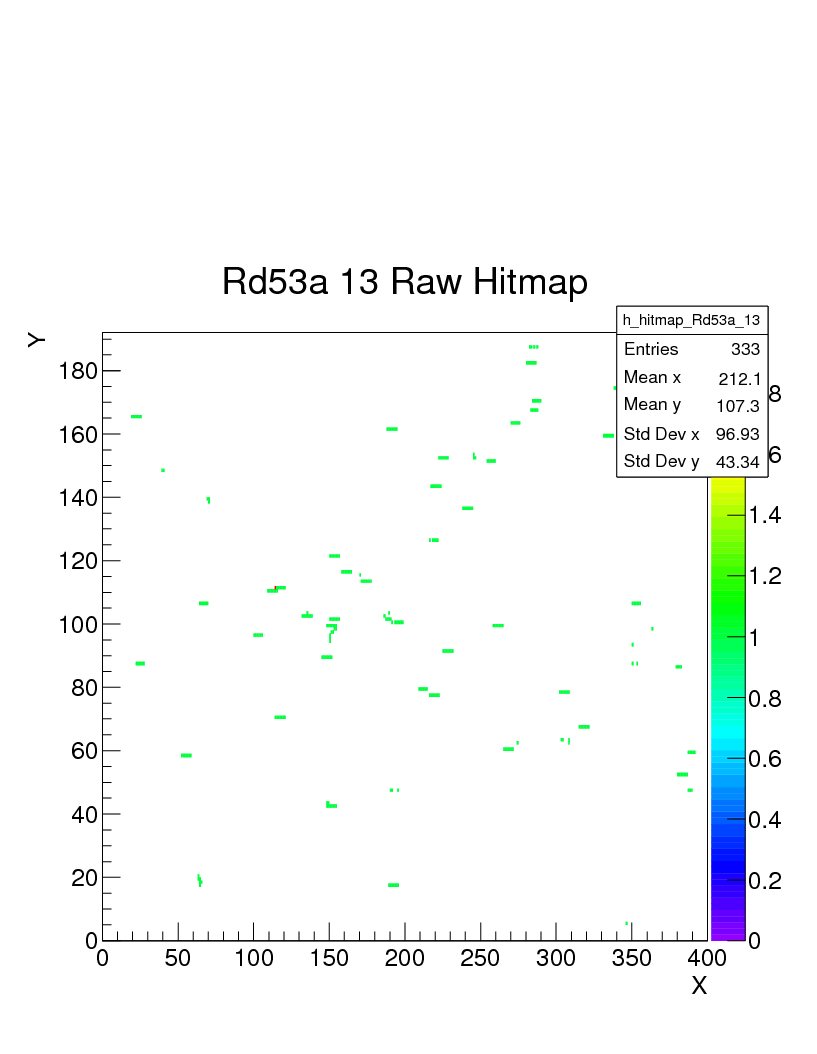

6:10 Standalone running of RD53A to catch isolated trigger frames saw beam hits on the first attempt once we put in the same latency value as used for FE65P2 back in Sep/2016 (see event log dated Sep/30/2016), where it was inferred that the Caladium trigger arrives ~245 bc before the actual beam crossing. This saved a potentially major hassle of a latency search in time, which could be very tedious. The RD53A module is rotated 90 degrees relative to the Caladium X/Y (MIMOSA and beam are long in X, while the RD53A long side is vertical, as can also be seen in the photo).

7:00 RD53A in integrated running with Caladium after inserting some protection against occasional anomalous data. One of the earliest integrated runs was Run 75.

7:15 We now also have an independent check on the trigger latency from the HGTD tests. The Caladium trigger is on channel 10 with a setting of -110488ns. The UCSC LGAD is on trigger channel 11 with a setting of -104250ns, which is 6238ns later than the Caladium trigger. From the HGTD detector, they can tell that their trigger is 50ns before the actual beam crossing. Using the HGTD timing as reference, this implies that the Caladium trigger is 6288ns (252 bc) before the beam crossing. Given other small internal delays etc., this is pretty close to the 245 bc estimate from 2016.
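The latency arithmetic above, written out step by step. The 25 ns bunch-crossing period is an assumption here (the 40 MHz clock behind the RD53A latency unit), consistent with the 6288ns = 252 bc quoted in the entry; the delay settings are the channel 10 and 11 values from the entry.

```python
BC_NS = 25.0  # assumed bunch-crossing (bc) period in ns, i.e. a 40 MHz clock

caladium_delay_ns = -110488        # trigger channel 10 (Caladium) setting
lgad_delay_ns = -104250            # trigger channel 11 (UCSC LGAD) setting
hgtd_trigger_to_crossing_ns = 50   # HGTD sees its trigger 50 ns before the beam crossing

# How much later channel 11 fires than channel 10.
offset_ns = lgad_delay_ns - caladium_delay_ns
# Lead of the Caladium trigger relative to the actual beam crossing.
caladium_lead_ns = offset_ns + hgtd_trigger_to_crossing_ns
caladium_lead_bc = round(caladium_lead_ns / BC_NS)
# Difference from the Sep/2016 FE65P2 estimate of ~245 bc.
diff_from_2016_bc = caladium_lead_bc - 245

print(offset_ns, caladium_lead_ns, caladium_lead_bc, diff_from_2016_bc)
```

The remaining 7 bc (about 175 ns) difference is what the entry attributes to other small internal delays.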

8:20 We ran into a disk-full problem on /dev/sda1, a small 20G disk that holds all the EUDAQ software releases at /opt/install/ plus the external packages they depend on, such as ROOT and Qt. Ben cleaned up some interim releases; there is also a /home/tfuser/eudaq-archive directory on /dev/sda8, which has only 13% of its 200G used. In general, files that don't need to be on the /opt disk should be moved to the /home disk.

8:30 Given the success of the RD53A readout, we will take data through the night to gather more statistics at more stable beam conditions and a lower particle rate, to allow easy debugging of potential issues and more reliable offline reconstruction.

10:30 They turned on the beam, and we asked them to decrease the number of electrons per pulse, so we now have about 8 clusters/plane. The beam spot remained at roughly the same place and size, though perhaps somewhat wider. The rate of 8 clusters/event is good for both RD53A reconstruction and HGTD data at the same time.

The DUT is fully covered in Y, but in the initial configuration we only have coverage of pixels 100-300 in X.
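Since a full /dev/sda1 stopped work here (and later stopped data taking during Run 105), a simple free-space check before starting runs could help. A minimal sketch using Python's `shutil.disk_usage`; the 2 GiB threshold and the `check_free_space` helper are hypothetical choices, not part of the existing setup.

```python
import os
import shutil

# Hypothetical threshold: minimum free space required before starting a run.
MIN_FREE_BYTES = 2 * 1024**3  # 2 GiB


def check_free_space(path: str, min_free: int = MIN_FREE_BYTES) -> bool:
    """Return True if the filesystem holding `path` has at least `min_free` bytes free."""
    usage = shutil.disk_usage(path)  # named tuple (total, used, free), in bytes
    pct_used = 100.0 * usage.used / usage.total
    print(f"{path}: {usage.free / 1024**3:.1f} GiB free ({pct_used:.0f}% used)")
    return usage.free >= min_free


if __name__ == "__main__":
    # /opt sits on the small root disk (/dev/sda1); /home is on the larger disk.
    for path in ("/opt", "/home"):
        if os.path.isdir(path) and not check_free_space(path):
            print(f"WARNING: {path} is nearly full; move files to /home before starting a run")
```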

| Run Number | Start time | End time | Number of Events | Window coordinates (DUT) | Comments |
|---|---|---|---|---|---|
| 90 | 02/06/18 11pm | 02/06/18 11:25pm | 8655 | x=100~300 | |
| 91 | 02/06/18 11:27pm | | | | |
| 92 | 02/06/18 11:27pm | 03/06/18 00:27am | 19007 | | We hadn't saved the histogram at the end of run 91, so the run histogram has twice as much data. The beam conditions were stable between these two runs. |
| 93 | 03/06/18 00:30am | 03/06/18 01:03am | 9021 | | |
| 94 | 03/06/18 01:05am | 03/06/18 01:36am | 9284 | | |
| 95 | 03/06/18 01:39am | 03/06/18 02:07am | 9013 | | |
| 96 | | | | | Moving the RD53A up 1cm (Motor 3 Y:fine > 1cm) |
| 97 | 03/06/18 02:24am | 03/06/18 02:54am | 9113 | | |
| 98 | 03/06/18 02:57am | 03/06/18 | 6845 | | Shift changed to Ke and Jannicke. Stopped early because we were using Sensor 0 to count the events; from now on we use the event count from Sensor 2, which has no hot pixels! |
| 99 | 03/06/18 3:14am | 03/06/18 3:40am | 9210 | | |
| 100 | 03/06/18 3:40am | 03/06/18 4:06am | 9406 | | |
| 101 | 03/06/18 4:06am | 03/06/18 4:34am | 9425 | | |
| 102 | 03/06/18 4:34am | 03/06/18 5:04am | 9173 | | |
| 103 | 03/06/18 5:04am | 03/06/18 5:31am | 9150 | X: (0,~100) | Moved RD53A (Motor 3 Y:fine < 2 cm); RBV from step now at 10.00mm. Aiming for coverage of 0-100 in X. |
| 104 | 03/06/18 5:31am | 03/06/18 | 9245 | | Moved RD53A (Motor 3 Y:fine > 2 mm); RBV from step now at 12.00mm. The adjustment gives slightly better coverage in the 50-100 range. Looks good now! |
| 105 | 03/06/18 | 03/06/18 6:12am | 1197 | | Data taking came to a halt because data disk /dev/sda1 was full, and YARR computer USB errors prevented login. More detail in the Event Log. Ended the run early. |
| 106 | 03/06/18 | 03/06/18 9:05 | 9334 | | Beam is back; started a new run. Also started a new HGTD run. |
| 107 | 03/06/18 9:08 | 03/06/18 9:40 | 9384 | | Shift transferred to Aleksandra & Charilou. |
| 108-119 | 03/06/18 09:41 | 03/06/18 | empty | | The beam was turned off at 9:51. Runs 108-119 were taken without beam to test the YarrProducer setup. No root files saved. RD53A config file changed. |
| 120 | 03/06/18 10:50 | 03/06/18 10:53 | few events | | To test the config files. |
| 121 | 03/06/18 10:54 | 03/06/18 11:09 | 4439 | | First run for analysis with the new config. Started a new HGTD run. The EUDAQ online monitor crashed; root file not saved. |
| 122-126 | 03/06/18 11:13 | 03/06/18 11:40 | | | Testing YARR and EUDAQ; no good data for analysis. Root files not saved. |
| 127 | 03/06/18 11:41 | 03/06/18 12:15 | 10000 | | EUDAQ online histograms not reset or saved. |
| 128 | 03/06/18 12:15 | 03/06/18 12:49 | 9812 | | Automatic new run. Reset EUDAQ online histograms. |
| 129 | 03/06/18 12:51 | 03/06/18 12:59 | 2300 | | No root file saved. |
| 130 | 03/06/18 12:59 | 03/06/18 13:08 | 2518 | | No root file saved. |
| 131 | 03/06/18 13:10 | 03/06/18 13:12 | 702 | | No root file saved. FIXED in this run: the example producer was sending fake data every 20ms. |
| 132 | 03/06/18 13:12 | 03/06/18 13:47 | 10000 | | Automatic new run. EUDAQ online histograms were not reset. |
| 133 | 03/06/18 13:47 | 03/06/18 14:20 | 9915 | | EUDAQ online histograms include the previous run. |
| 134-137 | 03/06/18 | 03/06/18 | | | Data path changed. The monitor didn't work after the path change, so we took extra runs trying to fix it. Disregard files with 'Run 1' (0x0B57_000001.*); these came from EUDAQ not pointing to the run number .dat file. |
| 138 | 03/06/18 14:51 | 03/06/18 15:25 | 9861 | | Monitor works now. |
| 139 | 03/06/18 15:37 | 03/06/18 15:47 | | | Used for moving RD53A. |
| 140 | 03/06/18 15:48 | 03/06/18 16:22 | 9866 | X: (~150,~350) | Moved RD53A (Motor 3 Y:fine > 10 mm); RBV from step now at 22.00mm (previous RBV from step: 12.00mm). Aiming for X~300. |
| 141 | 03/06/18 16:22 | 03/06/18 16:56 | 9875 | | |
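The start/end times and event counts in the table can be turned into an average recorded event rate per run, which is handy when comparing runs taken at different beam intensities. A minimal sketch using a few complete rows taken after the fake-data fix in Run 131; since the log only records times to the minute, the rates are approximate, and `average_rate_hz` is a hypothetical helper.

```python
from datetime import datetime

# (run, start, end, events) copied from rows of the run table above.
RUNS = [
    (132, "03/06/18 13:12", "03/06/18 13:47", 10000),
    (133, "03/06/18 13:47", "03/06/18 14:20", 9915),
    (138, "03/06/18 14:51", "03/06/18 15:25", 9861),
    (140, "03/06/18 15:48", "03/06/18 16:22", 9866),
]
FMT = "%d/%m/%y %H:%M"  # dd/mm/yy, as used in the log


def average_rate_hz(start: str, end: str, n_events: int) -> float:
    """Average recorded event rate over a run, from its wall-clock start/end times."""
    seconds = (datetime.strptime(end, FMT) - datetime.strptime(start, FMT)).total_seconds()
    return n_events / seconds


rates = {run: average_rate_hz(s, e, n) for run, s, e, n in RUNS}
for run, rate in rates.items():
    print(f"Run {run}: {rate:.2f} Hz")
```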

Jun/3

6:00 Event data taking came to a halt during Run 105:

Run stopped early - noticed histograms stopped filling

ERROR IN DUT DATA PRODUCER:

```
vent: tag(0) l1id(14 bcid(11398) hits (0)
Event: tag(0) l1id(15 bcid(11399) hits (0)
####################
### Stopping Run ###
####################
Got 1791events!
Caught interrupt, stopping data taking!
Abort will leave buffers full of data!
Caught interrupt, stopping data taking!
Abort will leave buffers full of data!
Waiting for run thread ...
-> Processor done, waiting for histogrammer ...
virtual void Fei4Histogrammer::process(): processorDone!
-> Processor done, waiting for analysis ...
virtual void Fei4Analysis::process(): histogrammerDone!
void Fei4Analysis::end()
-> All rogue threads joined!
-> Cleanup
Plotting : EnMask-0
Warning: empty cb range [1:1], adjusting to [0.99:1.01]
Plotting : OccupancyMap-0
Plotting : L1Dist-0
Empty data containers.
-> send EORE
Command Thread exiting due to exception:
Error sending data:
From /local/pixel/YarrEudaqProducer/EUDAQ/src/EUDAQ/main/lib/src/TransportTCP.cc:229
In void eudaq::{anonymous}::do_send_data(SOCKET, const unsigned char*, size_t)
```

ERROR IN TLU PRODUCER:

```
Error sending data:
From /local/pixel/eudaq/eudaq-1.7/main/lib/src/TransportTCP.cc:229
In void eudaq::{anonymous}::do_send_data(SOCKET, const unsigned char*, size_t)
```

While copying this error message, pressed Ctrl-C in the TLU Producer.

Restarted ./STARTRUN.

Reran ./TLUProducer.exe -r 172.27.100.8:44000.

Reconfigured EUDAQ in EUDAQ RD using ni_coins_YARR, because it seemed to be the most recently updated configuration.

Starting run 933.

Histograms not popping up; killed all jobs and restarted.

Went through steps 1-3.

Called Su Dong, who suggested we look at the file sizes:

```
$ df -k
Filesystem     1K-blocks     Used Available Use% Mounted on
/dev/sda1       20030100 18989568     16392 100% /
```

Moved the data files to /home/tfuser/data_20180603/. Now down to 81% full:

```
Filesystem     1K-blocks     Used Available Use% Mounted on
/dev/sda1       20030100 15243540   3762420  81% /
```

So the source of the problem was the full disk.
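The two `df -k` readings above can be checked by hand. GNU `df` computes Use% as used / (used + available), rounded up, so blocks reserved for root (the difference between the 1K-blocks total and used + available) count as neither used nor available. A short sketch reproducing the numbers in the log; `df_use_percent` is a hypothetical helper name.

```python
import math


def df_use_percent(used_kb: int, avail_kb: int) -> int:
    """Use% as GNU df reports it: used / (used + available), rounded up."""
    return math.ceil(100 * used_kb / (used_kb + avail_kb))


total_kb = 20030100                          # 1K-blocks for /dev/sda1
before = df_use_percent(18989568, 16392)     # reading when data taking stopped
after = df_use_percent(15243540, 3762420)    # reading after moving the data files
freed_gib = (18989568 - 15243540) / 1024**2  # KiB -> GiB
reserved_kb = total_kb - (15243540 + 3762420)

print(before, after, f"{freed_gib:.1f} GiB freed", f"{reserved_kb} KiB reserved")
```

The reserved blocks are about 5% of the disk, which is why even the "100%" reading still showed a few MB available.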

Step 3 shows errors finding libraries:

```
[tfuser@ar-eudaq eudaq-1.7]$ ./OnlineMon.exe: /usr/lib64/libstdc++.so.6: version `CXXABI_1.3.8' not found (required by ./OnlineMon.exe)
./OnlineMon.exe: /usr/lib64/libstdc++.so.6: version `GLIBCXX_3.4.20' not found (required by ./OnlineMon.exe)
```

These errors mean OnlineMon.exe was built against a newer libstdc++ than the system one in /usr/lib64. NEEDED TO SOURCE SETUP_EUDAQ.SH. Edited the twiki to reflect this.

```
./TLUProducer.exe -r 172.27.100.8:44000
bin/YarrProducer -h configs/controller/specCfgExtTrigger.json -c configs/connectivity/example_rd53a_setup.json -s configs/scans/rd53a/std_exttrigger.json -r 172.27.100.8
```

Step 4: cannot connect to pixel@172.27.104.52:

```
ssh_exchange_identification: Connection closed by remote host
```

We can ping 172.27.104.52 but cannot log into it. Why?

From our end we can ssh to other hosts, so the issue appears to be on the 172.27.104.52 computer itself. Trying ssh without a username just hangs. Looked through the ssh keys: a key exists, but no username is associated with the IP address.

We think we might need to reset the computer, but the beam is on.

8:00 Timon and Aleksandra have arrived; Timon made an access and they are now resetting the computer. We stopped the HGTD run before the access request was made.

17:00 Controlled access to rotate the RD53A module.

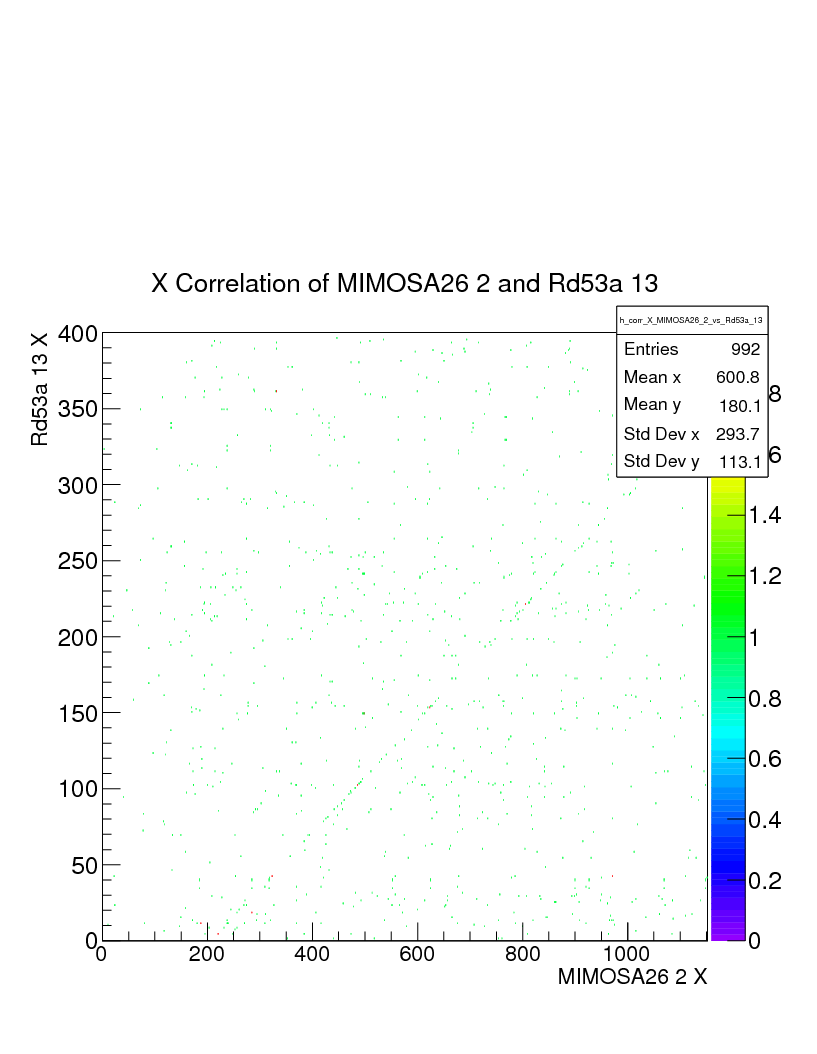

10:30 Offline analysis for Caladium X vs RD53A Y (due to transposed placement) showed clear correlation, but there are some puzzling extra spurious events.

12:00 The RD53A data from the previous day had spurious extra events, which were tracked down to dummy events generated by the example template code in the DUT producer, which had not been removed after the actual DUT code was implemented.

17:00 Access to remount the RD53A DUT. HGTD added another sensor in front of the 4th Caladium plane (now two sensors, attached to the front and back).

18:19 Access complete. RD53A rotated so that the display cables plug in from the top.

18:21 Beam back on

| Run Number | Start time | End time | Number of Events | Window coordinates (DUT) | Comments |
|---|---|---|---|---|---|
| 142 | 03/06/18 18:24 | 03/06/18 18:28 | 0 | | No events seen with RD53A, and few event correlations between MIMOSA planes (<10 for MIMOSA 1 & 2). |
| 143 | 03/06/18 18:31 | 03/06/18 18:57 | | | Before the run: terminated and restarted EUDAQ Run Control. Now seeing events with RD53A and more event correlations for MIMOSA. Only hitting the diff FE; moving RD53A. Did not save root file. |
| 144-145 | 03/06/18 18:58 | 03/06/18 19:48 | | | Still moving RD53A. Asked the Control Room to change the rate from [First Mode 5Hz]. Increased the rate by opening the collimators, then decreased it again right after, at 19:49. |
| 146 | 03/06/18 19:53 | 03/06/18 20:27 | 9917 | | Y Fine: RBV from step 16.00mm. X Fine: RBV from step -25.00mm. EUDAQ online monitors saved. |
| 147 | 03/06/18 20:28 | 03/06/18 21:01 | 9982 | | No changes. |
| 148 | 03/06/18 21:02 | 03/06/18 21:38 | 9991 | | No changes. See monitoring plots showing correlations between RD53A and Mimosa26 2. |

21:00 Jason Nielsen & Murtaza Safdari on shift. Aleksandra stays to monitor data-taking and supervise rotation of the RD53A module.

21:48 The most recent run, 148, shows nice correlation between the RD53A and Mimosa hit locations.

21:50 The Main Control Room called to say there will be no beam for the next 10 minutes. We took the opportunity to perform a calibration scan.

22:00 Sign-up for the full shift program for the remaining period is complete. Thanks to everyone contributing.

22:10 Beam is restarting in ESA. The Main Control Room mentioned that they will be programmed for 6 keV X-rays. We do not think it will affect our beam much.

| Run | Start Time | End Time | Number of Events | Window Coordinates (DUT) | Notes | Mounting Orientation | Rotation (angle of sensor normal to beam) |
|---|---|---|---|---|---|---|---|
| 149 | 03/06/18 22:24 | 03/06/18 22:36 | ~3000 | no change | Very low hit rate in RD53A, perhaps due to a remnant configuration from the calibration scan. | columns running up & down | 0 |
| 150 | 03/06/18 22:38 | 03/06/18 23:11 | 9997 | no change | After stopping the DAQ and restarting through init and configure. | no change | no change |
| 151 | 03/06/18 23:12 | 03/06/18 23:46 | 9981 | no change | Hit rates seem about 10% lower than in the previous run. Reason unknown. | no change | no change |
| 152 | 03/06/18 23:48 | 04/06/18 00:01 | 5021 | | Used for rotating the chip (rotation from 78.17 to 153.13). | no change | 75 degrees |

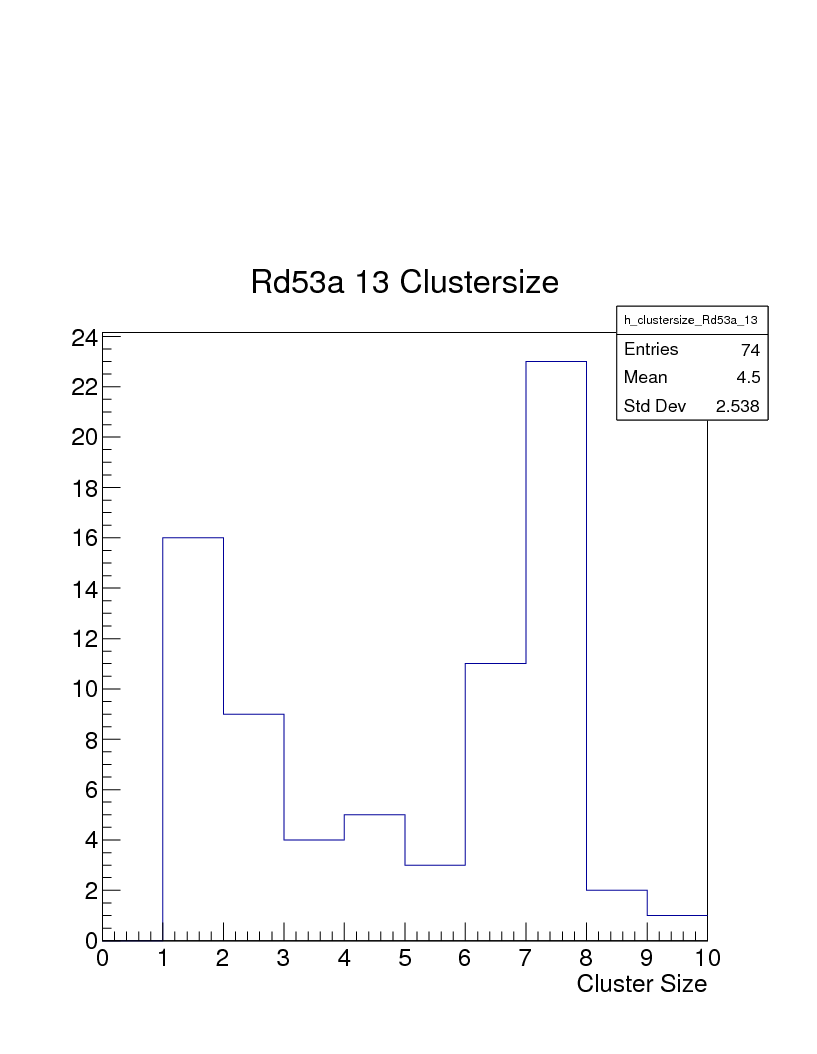

We confirmed that the cluster size is roughly 7-8 pixels in this last run.
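For a planar sensor tilted so that its normal makes an angle θ with the beam, a straight track crosses a projected length t·tanθ in the sensor plane, so the cluster length along the tilt direction is roughly t·tanθ/p pixels for sensor thickness t and pitch p along that direction. A minimal sketch that recovers the ~75 degree tilt from the Run 152 rotation readbacks and evaluates this geometric estimate; the sensor thickness and pitch are not recorded in this log, so they are left as inputs, and the helper names are hypothetical.

```python
import math


def rotation_angle(start_deg: float, end_deg: float) -> float:
    """Rotation applied by the DUT rotation stage, from start and end readback values."""
    return end_deg - start_deg


def expected_cluster_length(thickness_um: float, pitch_um: float, angle_deg: float) -> float:
    """Geometric estimate of the cluster length (in pixels) along the tilt direction.

    A straight track at `angle_deg` from the sensor normal crosses a projected
    length thickness * tan(angle) in the sensor plane. Charge sharing, thresholds
    and delta rays are ignored.
    """
    return thickness_um * math.tan(math.radians(angle_deg)) / pitch_um


# Run 152 rotation readbacks: 78.17 -> 153.13.
angle = rotation_angle(78.17, 153.13)
print(f"tilt: {angle:.2f} deg, tan(tilt) = {math.tan(math.radians(angle)):.2f}")
```

Inverting the relation, an observed cluster length N implies t ≈ N·p/tanθ, which can serve as a cross-check once the actual sensor thickness and pitch are filled in.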

00:01 Beam is off for calibration

00:10 Beam is back on.

After we saw a very weak correlation between RD53A and Mimosas in the previous run, we decided to pass through the configure step before starting the next run. It may be a good idea to do this before each run, just to be safe.
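The practice suggested above, always passing through Configure before Start, can be enforced mechanically if runs are driven from a script. A minimal sketch of such a guard; `RunSequencer` and its methods are hypothetical and only track state locally, they do not call the EUDAQ run control.

```python
from typing import Optional


class RunSequencer:
    """Hypothetical run-control wrapper that requires Configure before every Start."""

    def __init__(self) -> None:
        self.configured = False
        self.running = False
        self.config_name: Optional[str] = None
        self.run_number: Optional[int] = None

    def configure(self, config_name: str) -> None:
        if self.running:
            raise RuntimeError("stop the current run before reconfiguring")
        self.config_name = config_name
        self.configured = True

    def start(self, run_number: int) -> None:
        if self.running:
            raise RuntimeError(f"run {self.run_number} is still running")
        if not self.configured:
            raise RuntimeError("Configure must be issued before Start")
        self.running = True
        self.run_number = run_number
        self.configured = False  # every new run needs a fresh Configure

    def stop(self) -> None:
        self.running = False


seq = RunSequencer()
seq.configure("ni_coins_YARR")
seq.start(158)
seq.stop()
try:
    seq.start(159)  # rejected: no Configure since the last run
except RuntimeError as err:
    print(f"refused: {err}")
```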

| Run | Start Time | End Time | Number of Events | Window Coordinates (DUT) | Notes | Mounting Orientation | Rotation (angle of sensor normal to beam) |

|---|---|---|---|---|---|---|---|

| 153 | 04/06/18 00:18 | 04/06/18 00:51 | 9992 | unchanged | | unchanged | still 75 degrees |

| 154 | 04/06/18 00:52 | | | | problems getting through config | | |

| 155 | 04/06/18 00:57 | 04/06/18 01:11 | 3392 | unchanged | bad run, beam status was changing, and rate was low | unchanged | still 75 degrees |

00:58 Control Room called to let us know that they will be going up a couple of GeV in energy. This may cause increased rate for us.

| 156 | 04/06/18 01:13 | 04/06/18 01:46 | 9991 | unchanged | this lower rate seems very good for us | unchanged | still 75 degrees |

| 157 | 04/06/18 01:47 | 04/06/18 01:58 | 3237 | unchanged | Did not pass through Config before Start. No correlation between RD53A and Mimosas. | unchanged | still 75 degrees |

| 158 | 04/06/18 01:59 | 04/06/18 02:33 | 9995 | unchanged | Passed through Config before Start | unchanged | still 75 degrees |

| 159 | 04/06/18 02:34 | 04/06/18 03:07 | 9687 | unchanged | Passed through Config before Start | unchanged | still 75 degrees |

02:55 Early morning crew arrived (Vallary, Carolyn).

03:07 Control Room called to let us know that the energy will be increased again for the primary, starting in 10 minutes.

| 160 | 04/06/18 03:08 | 04/06/18 03:13 | 866 | unchanged | unstable beam to ESA | unchanged | still 75 degrees |

| 161 | 04/06/18 03:15 | 04/06/18 03:48 | 9969 | unchanged | stable beam (primary beam E 13.26 GeV) | unchanged | still 75 degrees |

| 162 | 04/06/18 03:52 | 04/06/18 04:24 | 9991 | unchanged | | unchanged | still 75 degrees |

| 163 | | | | | When we started the new run, all producer and data collector states changed to dead. Error message from the YARREudaqProducer: terminate called after throwing an instance of 'eudaq::Exception'. Killed the EUDAQ Run Control and started again. | | |

| 164 | 04/06/18 04:33 | 04/06/18 05:07 | 9998 | unchanged | First run after the crash; it shows no correlation between the telescope planes and the RD53A module | unchanged | still 75 degrees |

| 165 | 04/06/18 05:09 | 04/06/18 05:43 | 9993 | unchanged | Correlation plots look good | unchanged | still 75 degrees |

05:44 Control Room called to let us know that the energy will be increased again for the primary. Will start the new run once beam is stable.

| 166 | 04/06/18 05:52 | 04/06/18 05:59 | 2076 | unchanged | Primary beam E 14.08 GeV. Control room called to let us know that the beam will be off for a few minutes for gas detector calibration | unchanged | still 75 degrees |

| 167 | 04/06/18 06:03 | 04/06/18 06:37 | 9998 | unchanged | | unchanged | still 75 degrees |

| 168 | 04/06/18 06:39 | 04/06/18 06:50 | 3910 | unchanged | Control room called: they are changing the beam energy for the primary experiment. No beam for a few minutes | unchanged | still 75 degrees |

| 169 | 04/06/18 06:59 | 04/06/18 07:35 | 9988 | unchanged | Primary beam E 12.4 GeV. Toni from the control room called to say they were calibrating their gas detectors and would turn off the beam for a minute. Beam conditions were otherwise unchanged, so we did not stop the run | unchanged | still 75 degrees |

| 170 | 04/06/18 07:36 | 04/06/18 08:08 | 9997 | unchanged | | unchanged | still 75 degrees |

| 171 | 04/06/18 08:11 | | | | DAQ crashed | | |

| 172 | 04/06/18 08:15 | 04/06/18 08:48 | 9978 | unchanged | First run after the crash; it shows no correlation between the telescope planes and the RD53A module | unchanged | still 75 degrees |

| 173 | 04/06/18 08:50 | 04/06/18 09:25 | 9944 | unchanged | correlation plots look good | unchanged | still 75 degrees |

| 174 | 04/06/18 09:28 | 04/06/18 | ~1500 | Rotate: 153.13 to 159.13 | used for rotation | unchanged | 81 degrees |

| 175 | 04/06/18 09:40 | 04/06/18 10:05 | 6075 | unchanged | all good; we had to stop the run because the beam went down | unchanged | 81 |

10:05 Control Room called to let us know that the beam is down, they are changing the energy. Restart when the beam is back.

10:32 Beam is back, beam position changed a bit

| 176 | 04/06/18 10:33 | 04/06/18 11:06 | 9993 | unchanged | good, beam focused on the diff FE | unchanged | 81 |

| 177 | 04/06/18 11:07 | 04/06/18 11:10 | 910 | X fine: -25.00 mm to -24.00 mm | adjusting horizontally to the new beam position | unchanged | 81 |

| 178 | 04/06/18 11:12 | 04/06/18 11:45 | 9947 | unchanged | good, beam focused on lin and diff FE | unchanged | 81 |

| 179 | 04/06/18 11:46 | 04/06/18 12:13 | 7308 | X fine: -24.00 mm to -23.00 mm | good | unchanged | 81 |

12:13 Beam lost, should be back in 30 minutes

12:59 Beam is back, rate too high

| 180 | 04/06/18 12:59 | 04/06/18 13:06 | 2251 | unchanged | beam rate too high (almost 3 times higher), called control room to reduce it | unchanged | 81 |

| 181 | 04/06/18 13:06 | 04/06/18 13:45 | 9904 | unchanged | good | unchanged | 81 |

| 182 | 04/06/18 13:53 | 04/06/18 14:28 | 9815 | Rotation: 159.13 to 150.07 | good; rotation done before the start of the run; DAQ restarted after the end of the run! | unchanged | 70 |

| 183 | 04/06/18 14:30 | 04/06/18 15:04 | 9930 | unchanged | good, with correlations | unchanged | 70 |

15:15 Requested access to set a reference for the chip angle. Please take a screenshot after changing the position!

16:07 Beam is back on

| 184 | 04/06/18 16:17 | 04/06/18 16:17 | 0 | Rotation: 150.07 to 161.24 | restarted DAQ | unchanged | to calculate |

| 185 | 04/06/18 16:20 | 04/06/18 15:04 | 9973 | unchanged | good | unchanged | 161.24 |

| 186 | 04/06/18 17:11 | 04/06/18 17:45 | 9976 | unchanged | good | unchanged | same |

17:34 Plan:

- take two runs for each of the following chip positions: 150, 140, 130, 120, 115, 110, 105, 100, 95, 90

- take a snapshot for each position, save the picture on the desktop, note the name of the picture in the table

- note the position of the motor in the table

- once the chip is perpendicular to the beam, keep taking data at that position until the end of the beam time

- when the beam is off (and all angles are covered), notify Ben/Timon so we can tune the chip to a different threshold, and wait for further instructions

- before each start of a run, load the config files

- restart the DAQ system

- check the Telescope-RD53A correlation plots
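A hypothetical sketch of how the 17:34 plan expands into an ordered run list: ten nominal positions, two runs each, with the per-run checklist attached. The helper name and tuple layout are illustrative assumptions; the rotation step before the first position is left as None because the plan itself does not state the starting RBV.

```python
from typing import List, Optional, Tuple

# Nominal chip positions from the 17:34 plan (degrees), in the listed order.
PLANNED_POSITIONS = [150, 140, 130, 120, 115, 110, 105, 100, 95, 90]

# Per-run checklist taken from the plan.
CHECKLIST = ("load config files", "restart DAQ", "check Telescope-RD53A correlation plots")


def expand_plan(positions: List[float],
                runs_per_position: int = 2) -> List[Tuple[float, int, Optional[float]]]:
    """Hypothetical helper returning (position, run index at position, rotation step).

    The step is None before the very first run (start position unknown),
    the position difference before the first run at each new position,
    and 0.0 for repeat runs at the same position.
    """
    plan = []
    previous = None
    for pos in positions:
        for k in range(runs_per_position):
            if k > 0:
                step = 0.0
            elif previous is None:
                step = None
            else:
                step = pos - previous
            plan.append((pos, k + 1, step))
        previous = pos
    return plan


for pos, k, step in expand_plan(PLANNED_POSITIONS):
    print(f"position {pos:>3} run {k}/2 step {step} -> " + ", ".join(CHECKLIST))
```

Keeping the checklist attached to every entry mirrors the plan's instruction to reload the config and check correlations before each run, not just after each rotation.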

18:00 Ke and SD on shift

18:20 Adjusted DUT rotation to RBV=151.24 (step -10). Rotation stage stuttered a bit but got there eventually.

18:30 Runs 187-189 had a suspiciously low rate of DUT hits; the online monitor crashed because reset was hit during start. Finally got Run 190 going after reconfiguring.

18:35 Run summary info now recorded in Google Doc Run List directly.

19:30 The mean hit rate per event is rather low, which seems to be due to the beam going on and off. The total number of events may therefore not be a reliable measure of the actual track statistics.
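One way to make the statistics less sensitive to beam-off periods is to count only events that contain at least one DUT hit. A minimal sketch, assuming per-event DUT hit counts are available as a plain list (the input format and function name are hypothetical, and the toy numbers are not from any run):

```python
from typing import Sequence


def usable_event_fraction(dut_hits_per_event: Sequence[int]) -> float:
    """Hypothetical helper: fraction of triggered events with at least one DUT hit."""
    if not dut_hits_per_event:
        return 0.0
    with_hits = sum(1 for n in dut_hits_per_event if n > 0)
    return with_hits / len(dut_hits_per_event)


# Toy input, not real data: the empty events mimic triggers while the beam was off.
toy = [3, 0, 0, 5, 2, 0, 0, 0, 4, 1]
print(usable_event_fraction(toy))  # 0.5
```

A per-event hit count is only a proxy; the actual track statistics would still need telescope tracks matched to the DUT.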

19:41 Run 191 reached 10000 events but had very few entries in the correlation plots, so we let the run roll over to 192 without saving/resetting the monitoring histograms.

20:15 Run 192 reached the maximum number of events and rolled over to 193, which we stopped after a small number of events to conclude the measurements for this angle. The combined monitoring histograms from Runs 191-193 were saved as run193.root. DUT rotation -10, RBV=141.24.

20:30 We did a simple calculation from the DUT X vs MIMOSA26_2 X correlation plot in the previous angle's Run190.root histograms. The slope satisfies (50um/18.4um)*dX(DUT)/dX(MIMOSA) = 1/sin(theta), where dX(DUT)/dX(MIMOSA) is the slope in pixel units and 50um and 18.4um are the RD53A and Mimosa26 pixel pitches. This implied 180-theta = 155.3 degrees, not far off from RBV=151.24, which is supposedly quite close to the actual angle in degrees. This method is also immune to a small telescope tilt, which cancels in the slope, and the X correlation should be mostly independent of knowledge of the active Si depth. The cluster-size-based estimate from tan(theta) = 150um/(cluster size), with the cluster size expressed as a length, gave 150 degrees; however, the cluster size measurement is somewhat less precise and relies on the 150um Si depth being correct.
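Both estimates can be sketched as below, assuming theta is the angle between the sensor plane and the beam (so the log's 180-theta is the value compared with RBV), pitches of 50 um (RD53A) and 18.4 um (Mimosa26), and a 150 um active depth as stated above. The function names are hypothetical; `slope_for_angle` is an inverse included only to check the round trip, not a measured quantity.

```python
import math

RD53A_PITCH_UM = 50.0      # RD53A pixel pitch along X
MIMOSA26_PITCH_UM = 18.4   # Mimosa26 pixel pitch
SI_DEPTH_UM = 150.0        # active Si depth assumed in the 20:30 entry


def angle_from_slope(slope_pixels: float) -> float:
    """Angle (deg) from the DUT-vs-Mimosa X correlation slope in pixel units.

    Uses (pitch_DUT / pitch_Mimosa) * slope = 1/sin(theta) and returns 180 - theta.
    """
    sin_theta = 1.0 / ((RD53A_PITCH_UM / MIMOSA26_PITCH_UM) * slope_pixels)
    if not 0.0 < sin_theta <= 1.0:
        raise ValueError("slope too small for a physical angle")
    return 180.0 - math.degrees(math.asin(sin_theta))


def slope_for_angle(angle_deg: float) -> float:
    """Inverse of angle_from_slope: expected pixel-unit slope at 180 - theta = angle_deg."""
    theta = math.radians(180.0 - angle_deg)
    return (MIMOSA26_PITCH_UM / RD53A_PITCH_UM) / math.sin(theta)


def angle_from_cluster(cluster_length_um: float) -> float:
    """Angle (deg) from tan(theta) = depth / cluster length; returns 180 - theta."""
    return 180.0 - math.degrees(math.atan(SI_DEPTH_UM / cluster_length_um))


# Round trip at the angle quoted in the log.
print(round(angle_from_slope(slope_for_angle(155.3)), 6))
# A ~260 um cluster (about 5.2 pixels of 50 um) corresponds to 150 degrees.
print(round(angle_from_cluster(SI_DEPTH_UM / math.tan(math.radians(30.0))), 6))
```

The slope-based function depends only on the pitch ratio, which is why it is insensitive to the depth assumption, while `angle_from_cluster` scales directly with `SI_DEPTH_UM`.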

21:22 Run 195 seems to have lost the DUT vs MIMOSA correlation. Stopped the run, terminated Run Control and restarted everything for Run 196.

21:40 Run 196 still showed only a very faint DUT correlation, so we stopped it to reconfigure. The beam seemed to be lost at the start of Run 197, so we stopped that short run too.

21:45 Beam back. Started Run 198 and the correlations looked much healthier.

21:50 Primary beam energy went up to 12.48 GeV briefly and then back to 12.12 GeV. Starting a new run for HGTD anyway.

22:18 Stopped Run 198 to change the DUT rotation angle to 130 deg. DUT rotation -10, RBV=131.24. Reconfigured twice to be safe before starting Run 199. Correlations look good.

22:23 Noticed that each time we rotate, the correlated hit region of the DUT marches to higher X, and its top end is getting closer to the right edge. Since the top tip of the correlation diagonal was hidden behind the histogram stat box, we tried to move the stat box on the display, but that crashed the online monitor. Had to restart everything for the new Run 200.

23:00 After the end of Run 200, we moved the DUT by -2.5mm (towards ESA gate - camera confirmed) to get away from the +x edge.

23:02 Started Run 201. X correlation moved left by the desired amount as expected.

23:14 The DUT correlation looked rather faint/low-statistics. Ending Run 201 early to reconfigure.

23:15 Started new Run 202 after a double reconfigure. Correlations looked better.

23:42 MCC called to change primary beam energy to 12.40 GeV. Ending Run 202.

23:45 Rotated DUT -10 to RBV=121.24.

23:46 Started Run 203, but noticed there was no beam, so stopped it with 0 events.

00:39 Moved DUT dx=-2mm to avoid the +x edge.

00:39 Run 207 started. It seemed to have more background hits and only a faint correlation. Ending the run to reconfigure.

00:53 Started Run 208. Rate and correlation looked better.

01:30 Rotated DUT -5, RBV=116.24.

01:32 Started Run 209.