
Writing a user application that reads from shared memory


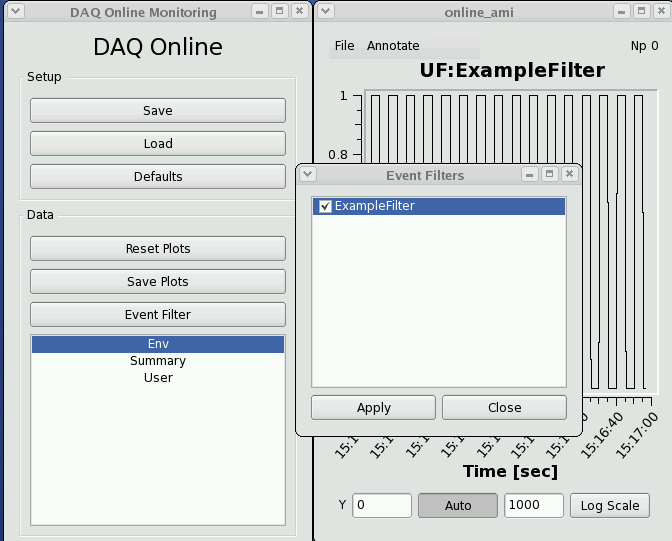

If you wish to write a user application that reads from shared memory, we recommend contacting the hutch point-of-contact for assistance. AMI and psana can both read from shared memory, and most analyses can be accomplished within one of the two frameworks.

The shared memory server can support either one client or multiple clients, depending on the command-line flags passed when the server is launched. Events can be handed to multiple clients in a round-robin style (useful for parallelism), or every client can see all events. Multiple shared memory servers can run on one monitoring node, but each server must have a unique name for its block of memory (visible in /dev/shm).
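The two delivery modes can be illustrated with a small simulation. This is only a sketch of the idea, not the server's actual scheduling: the function name `deliver` and the client names are hypothetical, and strict round-robin is assumed for the distributed case, whereas the real server may hand events to whichever client is ready first.

```python
def deliver(events, clients, distribute):
    """Simulate the two delivery modes for a list of events.

    distribute=True  : each event goes to exactly one client
                       (strict round-robin is assumed here).
    distribute=False : every client receives every event.
    Returns a dict mapping each client name to the events it received.
    """
    received = {client: [] for client in clients}
    for index, event in enumerate(events):
        if distribute:
            # One client per event, chosen in rotation.
            received[clients[index % len(clients)]].append(event)
        else:
            # Every client sees the same event.
            for client in clients:
                received[client].append(event)
    return received


if __name__ == "__main__":
    events = list(range(6))
    clients = ["client0", "client1", "client2"]  # hypothetical client names
    print(deliver(events, clients, distribute=True))
    print(deliver(events, clients, distribute=False))
```

In the distributed case each client sees only a fraction of the events, which is what makes this mode useful for parallel processing.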

    Usage: monshmserver -p <platform> -P <partition> -i <node mask> -n <numb shm buffers> -s <shm buffer size> [options]
    Options: -q <# event queues>  (number of queues to hold for clients)
             -t <tag name>        (name of shared memory)
             -d                   (distribute events)
             -c                   (allow remote control of node mask)
             -g <max groups>      (number of event nodes receiving data)
             -T <drop prob>       (test mode - drop transitions)
             -h

Shared memory server flags:

- -c: indicates a shared memory server whose dss node mapping can be changed dynamically. (The psana monshmservers should not have the "-c" flag.)

- -d: distribute each event to a single client (the alternative is that all clients are given the same event). A faster client will receive more events in "-d" mode.

- -i: bitmask specifying multicast groups (i.e. which dss nodes' data we see).

- -n: total number of buffers. If there are 8 clients and 8 buffers, each client gets 1 buffer.

- -q: maximum number of clients ("queues") that this shared memory server will send events to.

- -s: the buffer size (in bytes) reserved for each event. The event has to fit in this space. If the product of this number and the argument to "-n" is too large, the software can crash because a system shared memory limit has been exceeded.

- -t: "name" of the shared memory (e.g. the "psana" in DataSource('shmem=psana.0:stop=no')).

- -P: the partition name used to construct the name of the shared memory.
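Because the "-n" and "-s" values multiply, it can help to compute the total request before launching a server. The sketch below is a rough pre-check under stated assumptions: the helper names are hypothetical, the free space in /dev/shm is assumed to be the relevant limit (the limit that actually applies on a given node may differ), and any bookkeeping overhead the server adds on top of the buffers is ignored.

```python
import shutil


def shm_request_bytes(num_buffers, buffer_size):
    """Total bytes requested: the "-n" value times the "-s" value."""
    return num_buffers * buffer_size


def fits_in_shm(num_buffers, buffer_size, shm_dir="/dev/shm"):
    """Return True if the request is no larger than the free space in shm_dir.

    Assumption: free space in shm_dir approximates the system limit.
    """
    free_bytes = shutil.disk_usage(shm_dir).free
    return shm_request_bytes(num_buffers, buffer_size) <= free_bytes


if __name__ == "__main__":
    # Example values only: 64 buffers of 16 MiB each is exactly 1 GiB.
    print(shm_request_bytes(64, 16 * 1024 * 1024))  # 1073741824
```

If `fits_in_shm` returns False for the intended values, reducing either "-n" or "-s" lowers the total proportionally.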

The shared memory server can be restarted with a command similar to this:

    /reg/g/pcds/dist/pds/tools/procmgr/procmgr restart /reg/g/pcds/dist/pds/cxi/scripts/cxi.cnf monshmsrvpsana

The last argument is the name of the process which can be found by reading the .cnf file (the previous argument). This command must be run by the same user/machine that the DAQ used to start all its processes (e.g. for CXI it was user "cxiopr" on machine "cxi-daq"). If a shared-memory server is restarted, it loses its cached "configure/beginrun/begcalib" transitions, so the DAQ must redo those transitions for the server to function correctly.

To find out the name of the shared memory segment on a given monitoring node, do something similar to the following:

    [cpo@daq-sxr-mon04 ~]$ ls -rtl /dev/shm
    total 74240
    -rw-rw-rw- 1 sxropr sxropr 1073741824 Oct 15 15:29 PdsMonitorSharedMemory_SXR
    [cpo@daq-sxr-mon04 ~]$
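The same lookup can be scripted. The following is a minimal sketch, assuming that segment files start with the prefix `PdsMonitorSharedMemory_` as in the listing above (other servers may be named differently); the function name `list_shm_segments` is hypothetical.

```python
import os

# Assumption: prefix taken from the single example listing in this section.
SEGMENT_PREFIX = "PdsMonitorSharedMemory_"


def list_shm_segments(shm_dir="/dev/shm", prefix=SEGMENT_PREFIX):
    """Return (name, size_in_bytes) for each matching file, oldest first."""
    entries = []
    for name in os.listdir(shm_dir):
        if name.startswith(prefix):
            info = os.stat(os.path.join(shm_dir, name))
            entries.append((info.st_mtime, name, info.st_size))
    # Sort by modification time, mirroring "ls -rtl".
    return [(name, size) for _, name, size in sorted(entries)]


if __name__ == "__main__":
    if os.path.isdir("/dev/shm"):
        for name, size in list_shm_segments():
            print(name, size)
```

The part of the file name after the prefix ("SXR" in the listing) identifies the individual segment.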

Shared-memory server log files are also available. On a monitoring node, run "ps -ef | grep monshm" to see the DAQ command line that launched the monshmserver, which includes the name of the output log file.
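Once the command line is in hand, the flag values can be pulled out programmatically. The sketch below parses one line of "ps -ef" output using only the flags from the usage message above. The function name `parse_monshm_cmdline` and the sample line in the example are hypothetical, and no assumption is made about where the log file name appears, so any unrecognized tokens are simply ignored.

```python
import argparse
import shlex


def parse_monshm_cmdline(ps_line):
    """Extract monshmserver flags from one line of "ps -ef" output.

    Returns a dict keyed by flag letter; flags that were not given are
    None (value flags) or False (switches).
    """
    parser = argparse.ArgumentParser(prog="monshmserver", add_help=False)
    for flag in ("-p", "-P", "-i", "-n", "-s", "-q", "-t", "-g", "-T"):
        parser.add_argument(flag)  # flags that take a value
    for flag in ("-d", "-c"):
        parser.add_argument(flag, action="store_true")  # simple switches

    tokens = shlex.split(ps_line)
    # Drop the ps columns and the executable path itself.
    for index, token in enumerate(tokens):
        if token.endswith("monshmserver"):
            tokens = tokens[index + 1:]
            break
    args, _ignored = parser.parse_known_args(tokens)
    return vars(args)


if __name__ == "__main__":
    # Hypothetical ps output line, for illustration only.
    sample = ("sxropr 1234 1 0 15:29 ? 00:00:01 /some/path/monshmserver "
              "-p 0 -P SXR -i 15 -n 8 -s 16777216 -q 8 -t psana -d")
    print(parse_monshm_cmdline(sample))
```

Values are returned as strings, so numeric flags such as "-n" and "-s" need an explicit conversion (for example `int(value, 0)` to accept both decimal and hexadecimal forms).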

Online Monitoring and Simulation Using Files

...