Investigating transfer learning, what can we do with a fully trained ImgNet model?

The idea is to take a fully trained model, like a ImgNet winner, and re-use it for your own task. You could throw away the final logits and retrain for your classification task, or retrain a few of the top layers.

- Preparing the data, vggnet takes color images, [0-255] values, small xtcav is grayscale, [0-255].

- vggnet subtracts the mean per channel

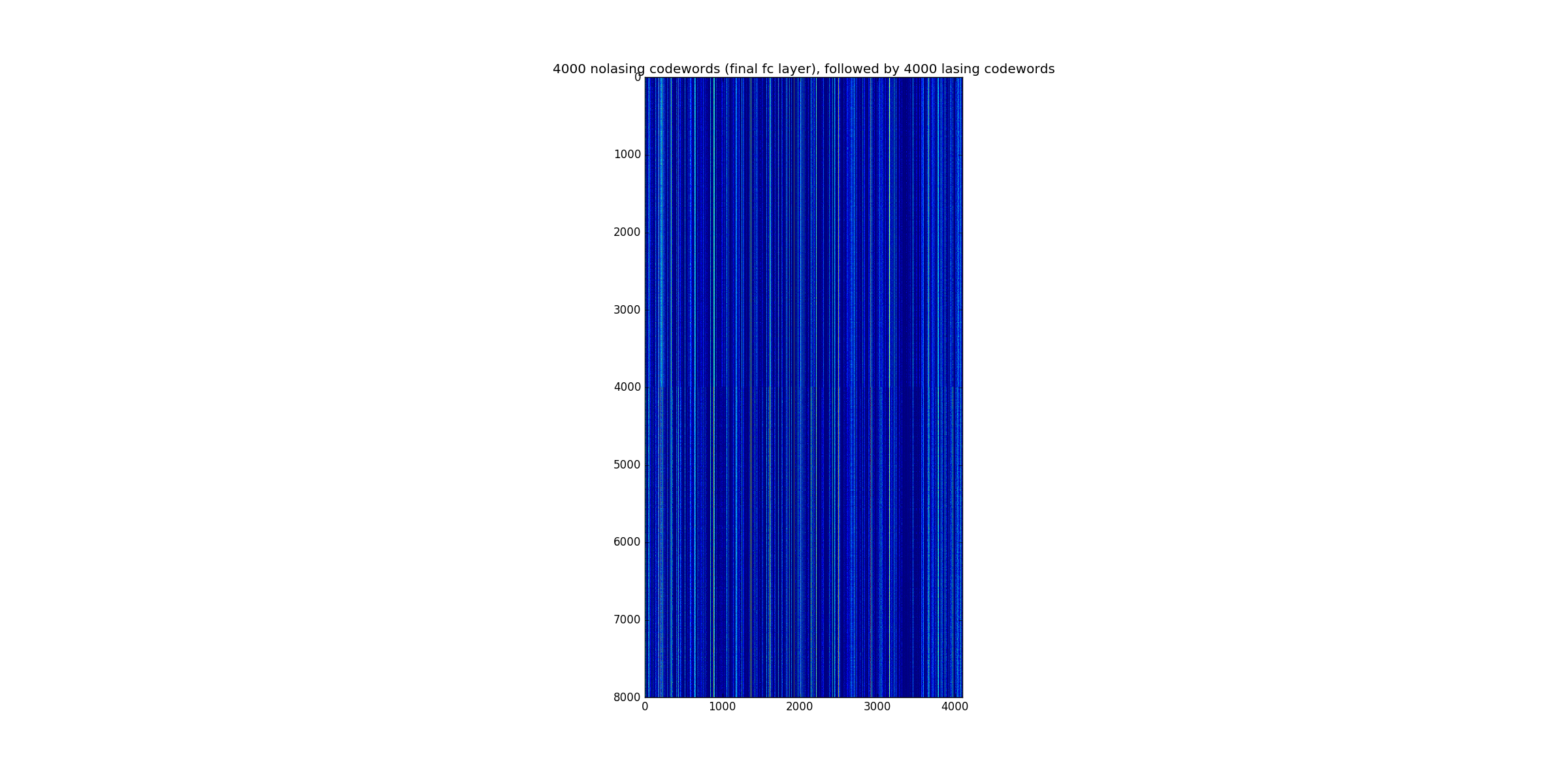

- Codewords are 4096, but look quite spare, not so much variation from lasing/no lasing classes

- Still - looks like it discerns, mean for class 0 and class 1 are 16 apart, vs like .3 or 1 for random subsets

Dataset

The reduced xtcav, took runs 69, 70 and 71, but for run 70 and 71, conditioned on acq.enPeaksLabel >=1 to make sure some lasing was measured. About 20,000 no-lasing samples from run 69, and 40,000 lasing samples.

Preprocess

vggnet works with 224 x 224 x 3 color images. simplest way to pre-process, replicate our intensities across all three channels

ideas

- why don't we take the original 16 bit ADU images and colorize them, to send the image on the left through? Then we could encode our 16 bit ADU intensity in 24 bits without losing information, and each channel would be in [0-255], would this help?

vggnet does not mean center the images, but it subtracts the per channel mean for each or R,G,B, that is it subtracts 123.68, 116.779, 103.939 repsecively from the channels. We compute this over our small xtcav dataset and get 8.46 for each.

codewords

vggnet, http://cs231n.stanford.edu/slides/winter1516_lecture7.pdf, around slide 72, after 5 convnet layers, with relu's and max pooling, produces 7 x 7 x 512 (25,088 numbers).

Then three fully connected layers, the first two are 4096, the last, the logits, 1000 for the imagenet classes. The terminology 'codeword' refers to the final fc layer (which had relu activation producing the output) before doing classificaiton with the 'logits' (ref: http://cs231n.stanford.edu/slides/winter1516_lecture9.pdf around slide 12).

If we plot 4000 of the nolasing, then 4000 of the lasing, you get the following:

One interesting thing, the lasing on/off codewords are veryu