This note describes the hierarchical geometry model for imaging detectors, which has been implemented in the LCLS offline software since release ana-0.13.1.

Analysis of LCLS data from imaging experiments requires a precise definition of the coordinates of each photon detection spot. In pixel array detectors the photon energy is usually deposited in a single pixel, so the precision of the pixel location should be comparable to or better than the pixel size, about 100 μm. Calibrating the geometry of the detector and of the entire experimental setup to such precision is a challenging task for many reasons.

To take this structure into account we may consider a variable-length series of hierarchical objects, such as sensor -> sub-detector -> detector -> setup, where each lower-level child object is embedded in its higher-level parent object. The nodes of this hierarchical model form a tree, which is convenient for navigation and recursive algorithms. The location and orientation of each child object can be described in the parent frame. The tree-like structure can be kept in the form of a table that is saved in and retrieved from a file. This last feature is useful in practice for calibration: all constants describing the detector/experiment geometry can be saved in a single file, and the relevant parts of the hierarchical table can be calibrated and updated whenever new geometry information becomes available, for example from optical measurements or from dedicated runs with images of bright diffraction rings or Bragg peaks.

This note describes the implemented hierarchical geometry model, the coordinate transformation algorithms, the tabulation of the hierarchical objects and the calibration file format, the software interface in C++ and Python, details of calibration, etc.

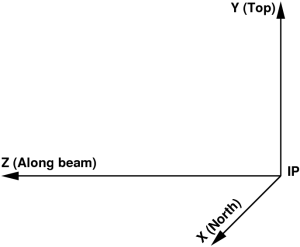

The Cartesian coordinate system of the setup is defined by three mutually orthogonal axes forming a right-handed system, with the origin at the interaction point (IP) of the photon beam with the target:

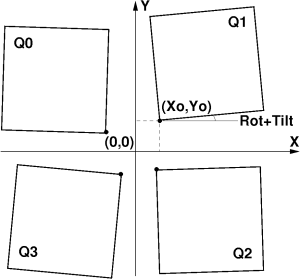

as shown in the plot:

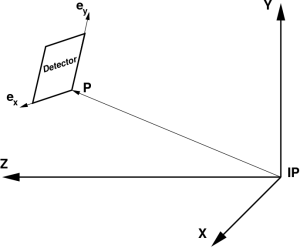

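The detector coordinate system may be translated and rotated with respect to the setup. Its position and orientation can be defined by 3 vectors in the setup frame:

P – translation vector from the setup origin (IP) to the origin of the detector frame,

ex – unit vector along the detector frame x axis,

ey – unit vector along the detector frame y axis,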

as shown in the plot:

The third unit vector, ez, is assumed to complete the right-handed triplet,

ez=[ex × ey].

Components of these three unit vectors form the rotation matrix

$$
R_{ij} = \begin{pmatrix}
e_{x1} & e_{y1} & e_{z1} \\
e_{x2} & e_{y2} & e_{z2} \\
e_{x3} & e_{y3} & e_{z3}
\end{pmatrix}
$$

where the indexes "i" and "j" correspond to the vector components in the setup and detector local coordinate frames, respectively. With this definition, a 3-d pixel coordinate "c" in the detector frame can be transformed to the setup coordinate "C" using the equation

Ci=Rij·cj + Pi.

The list of parameters that defines the detector position and orientation in the setup (lab) frame consists of the vector components:

| P1 | P2 | P3 | ex1 | ex2 | ex3 | ey1 | ey2 | ey3 |
|---|---|---|---|---|---|---|---|---|

assuming ez=[ex × ey], and |ex|=|ey| =|ez|=1.
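The transformation Ci=Rij·cj + Pi with this parameter list can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions: the function names `rotation_matrix` and `detector_to_setup` and the numerical values are hypothetical and are not part of the LCLS software.

```python
import numpy as np

def rotation_matrix(ex, ey):
    """Build R from the detector x and y unit vectors given in the setup frame."""
    ex = np.asarray(ex, dtype=float)
    ey = np.asarray(ey, dtype=float)
    ez = np.cross(ex, ey)                  # third axis, ez = [ex x ey]
    return np.column_stack((ex, ey, ez))   # columns of R are ex, ey, ez

def detector_to_setup(c, P, ex, ey):
    """Apply C_i = R_ij c_j + P_i to points c of shape (N, 3)."""
    R = rotation_matrix(ex, ey)
    return np.asarray(c, dtype=float) @ R.T + np.asarray(P, dtype=float)

# Hypothetical detector: rotated by 90 degrees around z, shifted by 1000 um along z
P = (0.0, 0.0, 1000.0)
ex = (0.0, 1.0, 0.0)
ey = (-1.0, 0.0, 0.0)
pixels = np.array([[100.0, 0.0, 0.0],
                   [0.0, 100.0, 0.0]])
C = detector_to_setup(pixels, P, ex, ey)
print(C)
```

A pixel on the detector x axis ends up on the setup y axis, as expected for this choice of ex and ey.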

Alternatively, directions of the three unit vectors ex, ey, and ez of the detector frame can be defined in terms of three Euler angle rotations with respect to the setup (lab) frame:

| P1 | P2 | P3 | alpha | beta | gamma |
|---|---|---|---|---|---|

with the classic relations between the (ex,ey,ez) and (alpha, beta, gamma) representations.

Access to these parameters should be provided by at least two methods of the calibration store object:

```python
(P1, P2, P3, ex1, ex2, ex3, ey1, ey2, ey3) = calib.get( type, detector_name )
calib.put(type, detector_name, (P1, P2, P3, ex1, ex2, ex3, ey1, ey2, ey3))
```

or similar methods for Euler angles.
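To make the intended get/put pattern concrete, a minimal in-memory sketch is given here. The class name `CalibStore`, the type string "geometry" and the detector name "CSPAD" are hypothetical and chosen only for illustration; the actual calibration store may look quite different.

```python
class CalibStore:
    """Hypothetical in-memory calibration store keyed by (type, detector_name)."""

    def __init__(self):
        self._records = {}

    def put(self, ctype, detector_name, params):
        """Store the 9 parameters (P1, P2, P3, ex1, ex2, ex3, ey1, ey2, ey3)."""
        params = tuple(float(p) for p in params)
        if len(params) != 9:
            raise ValueError("expected (P1, P2, P3, ex1, ex2, ex3, ey1, ey2, ey3)")
        self._records[(ctype, detector_name)] = params

    def get(self, ctype, detector_name):
        """Return the stored parameter tuple; raises KeyError if absent."""
        return self._records[(ctype, detector_name)]

calib = CalibStore()
calib.put("geometry", "CSPAD", (0, 0, 1000, 1, 0, 0, 0, 1, 0))
P1, P2, P3, ex1, ex2, ex3, ey1, ey2, ey3 = calib.get("geometry", "CSPAD")
```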

In this note we assume that:

These assumptions are not necessary for most of the algorithms. However, in many cases it may be convenient to use the small-angle approximation or to ignore angular misalignment when mapping between tiles and a 2-d image array.

For any type of tile there should be a method returning pixel coordinates in this ideal geometry,

xarr, yarr, zarr = get_xyz_maps_um()

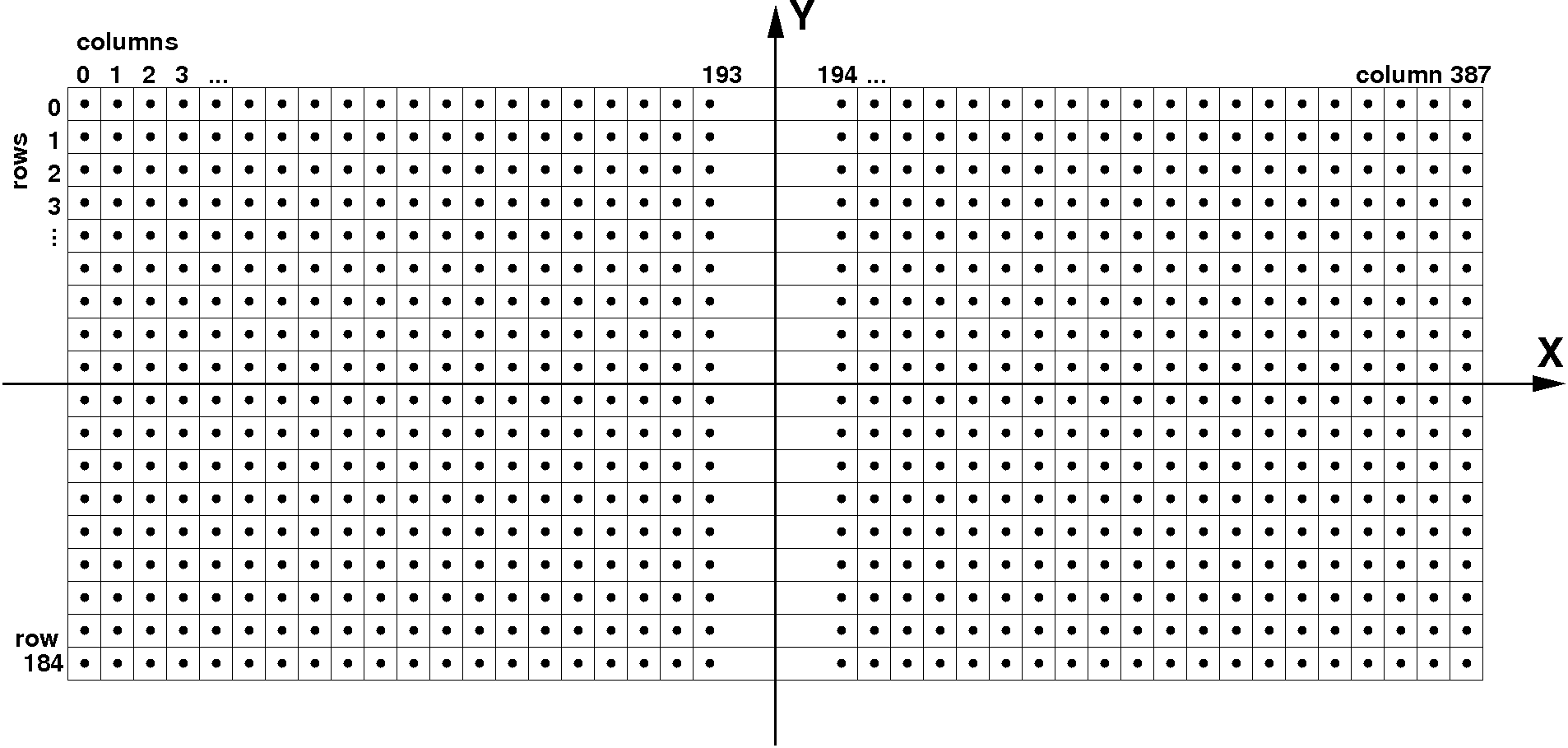

For example, Python interface for CSPAD2x1

```python
from PyCSPadImage.PixCoords2x1 import cspad2x1_one
...
xarr, yarr, zarr = cspad2x1_one.get_xyz_maps_um()
```

returns x-, y-, and z-coordinate arrays of shape [185,388].
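For illustration, coordinate maps of this kind could be generated for a flat tile with a uniform pixel grid roughly as follows. This is a simplified sketch and not the PyCSPadImage implementation: the function `ideal_xyz_maps_um`, the 100 µm pixel size, the origin at the tile center and the axis directions are all assumptions, and sensor-specific features such as non-uniform pixels are ignored.

```python
import numpy as np

def ideal_xyz_maps_um(rows=185, cols=388, pixel_size_um=100.0):
    """Pixel-center coordinates of a flat uniform tile, origin at the tile center."""
    x = (np.arange(cols) - (cols - 1) / 2.0) * pixel_size_um
    y = (np.arange(rows) - (rows - 1) / 2.0) * pixel_size_um
    xarr, yarr = np.meshgrid(x, y)   # both arrays have shape (rows, cols)
    zarr = np.zeros_like(xarr)       # an ideal tile is flat
    return xarr, yarr, zarr

xarr, yarr, zarr = ideal_xyz_maps_um()
print(xarr.shape, yarr.shape, zarr.shape)
```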

It is assumed that each tile is represented in DAQ or offline data by a contiguous block of memory. A uniform matrix-type geometry of the pixel array is preferable, but other geometries can be handled. For example, for the CSPAD 2x1 sensor the pixel index in the tile memory block of shape (185,388) can be evaluated as

i = column + row*388.
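The index formula is the usual row-major layout, which can be checked against NumPy's own flattening. The helper name `flat_index` is introduced here only for illustration.

```python
import numpy as np

ROWS, COLS = 185, 388

def flat_index(row, column, cols=COLS):
    """Row-major index of a pixel inside one tile memory block."""
    return column + row * cols

# A tile whose pixel values equal their own flat index
tile = np.arange(ROWS * COLS).reshape(ROWS, COLS)

i = flat_index(2, 5)
print(i, tile[2, 5], np.unravel_index(i, (ROWS, COLS)))
```

`np.unravel_index` performs the inverse operation, recovering (row, column) from the flat index.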

A detector data record consists of consecutive tile-blocks, in accordance with the numbering adopted in DAQ. For efficient memory management, some of the tile-blocks may be missing, depending on the current detector configuration. The available tile-blocks should be marked in a bit-mask word in position order (bit position, from lowest to highest, corresponds to the tile number in DAQ).
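A possible way to decode such a bit mask is sketched here. It assumes that bit 0 corresponds to tile 0 and that the blocks of missing tiles are simply omitted, so that the present blocks are packed consecutively; the function names and the example mask are hypothetical.

```python
def present_tiles(mask, n_tiles):
    """Return the DAQ tile numbers whose bit is set in the mask (bit 0 = tile 0)."""
    return [n for n in range(n_tiles) if (mask >> n) & 1]

def block_index(mask, tile):
    """Position of a tile's block in the data record, or None if the tile is absent."""
    if not (mask >> tile) & 1:
        return None
    # Number of present tiles with a lower tile number
    return bin(mask & ((1 << tile) - 1)).count("1")

mask = 0b10110101                   # hypothetical 8-tile configuration
print(present_tiles(mask, 8))       # tiles present in the record
print(block_index(mask, 4))         # block position of tile 4
print(block_index(mask, 1))         # tile 1 is not in the record
```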

Optical measurements provide a list of coordinates for all sensor points:

| Tile # |
|---|

| Point # | X[µm] | Y[µm] | Z[µm] |
|---|---|---|---|

Quality check of optical measurement may include for each tile:

Detector pixels' geometry in 3-d can be unambiguously derived from

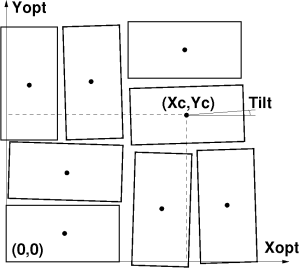

The tile location and orientation can be defined by the table of records (see example of file: geometry/0-end.data)

| Parent name | Parent index | Object name | Object index | X0[µm] | Y0[µm] | Z0[µm] | Rotation Z [°] | Rotation Y [°] | Rotation X [°] | Tilt Z [°] | Tilt Y [°] | Tilt X [°] |
|---|---|---|---|---|---|---|---|---|---|---|---|---|

where

Tile center coordinates are defined as the average over the 4 corners. Tilt angles are the angles of the tile sides projected on the relevant planes. Each angle is evaluated as the average over 2 sides.
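The two averaging rules can be sketched as follows for the angle in the x-y plane. The corner ordering (0 -> 1 and 3 -> 2 being the two sides along the tile x direction), the function names and the corner values are assumptions made for this example; plain averaging of angles is adequate only for angles well away from ±180°.

```python
import numpy as np

def tile_center(corners):
    """Tile center as the average over its 4 corner points, input shape (4, 3)."""
    return np.asarray(corners, dtype=float).mean(axis=0)

def tilt_z_deg(corners):
    """Angle of the sides 0->1 and 3->2 projected on the x-y plane, averaged."""
    c = np.asarray(corners, dtype=float)
    sides = (c[1] - c[0], c[2] - c[3])
    angles = [np.degrees(np.arctan2(s[1], s[0])) for s in sides]
    return float(np.mean(angles))

# Hypothetical optical measurement of one tile, in um
corners = [(0.0, 0.0, 0.0),
           (400.0, 4.0, 0.0),
           (400.0, 204.0, 0.0),
           (0.0, 200.0, 0.0)]
center = tile_center(corners)
angle = tilt_z_deg(corners)
print(center, angle)
```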

Origin of the detector local frame is arbitrary. For example, for CSPAD with moving quads it is convenient to define

Pixel coordinate reconstruction in the detector frame uses

The pixel coordinates xj of each geometry object are transformed to the parent frame pixel coordinates Xi by applying rotations and translations as

Xi=Rij·xj + Pi,

or in Python code:

```python
#file: pyimgalgos/src/GeometryObject.py

def rotation(X, Y, C, S) :
    # 2-d rotation of (X, Y) by the angle with cosine C and sine S
    Xrot = X*C - Y*S
    Yrot = Y*C + X*S
    return Xrot, Yrot

class GeometryObject :
    ...
    def transform_geo_coord_arrays(self, X, Y, Z) :
        ...
        # define Cx, Cy, Cz, Sx, Sy, Sz - cosines and sines of rotation + tilt angles
        ...
        X1, Y1 = rotation(X, Y, Cz, Sz)    # rotation around the z axis
        Z2, X2 = rotation(Z, X1, Cy, Sy)   # rotation around the y axis
        Y3, Z3 = rotation(Y1, Z2, Cx, Sx)  # rotation around the x axis

        # translation to the parent frame
        Zt = Z3 + self.z0
        Yt = Y3 + self.y0
        Xt = X2 + self.x0

        return Xt, Yt, Zt
```

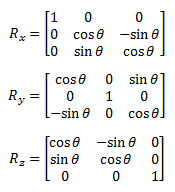

where the 3-d rotation matrix R is a product of three 2-d rotations around the appropriate axes.
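As a cross-check, the three successive 2-d rotations in `transform_geo_coord_arrays` (around z, then y, then x) can be compared with a single matrix product R = Rx·Ry·Rz. The matrix helpers `rot_x`, `rot_y`, `rot_z` and the angle values are introduced here only for illustration; the ordering is read off from the code fragment quoted in this note.

```python
import numpy as np

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def rot_y(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rotation(X, Y, C, S):
    """Same 2-d rotation as in the GeometryObject fragment."""
    return X * C - Y * S, Y * C + X * S

# Hypothetical angles around x, y and z
ax, ay, az = np.radians([1.0, 2.0, 90.0])
R = rot_x(ax) @ rot_y(ay) @ rot_z(az)   # z is applied first, x last

# Step-by-step rotations, in the same order as transform_geo_coord_arrays
x, y, z = 100.0, 50.0, 0.0
x1, y1 = rotation(x, y, np.cos(az), np.sin(az))
z2, x2 = rotation(z, x1, np.cos(ay), np.sin(ay))
y3, z3 = rotation(y1, z2, np.cos(ax), np.sin(ax))
step_by_step = np.array([x2, y3, z3])

print(np.allclose(R @ np.array([x, y, z]), step_by_step))
```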

Pixel coordinates in the detector should be accessed by a method like

xarr, yarr, zarr = get_pixel_coords()

For example, Python interface for CSPAD

```python
from pyimgalgos.GeometryAccess import GeometryAccess
...
fname_geometry='<path>/geometry/0-end.data'
geometry = GeometryAccess(fname_geometry)
X,Y,Z = geometry.get_pixel_coords()
```

will return X,Y,Z arrays of shape [4,8,185,388]. Similarly, for a CSPAD quad one has to specify the top object for the coordinate arrays, say 'QUAD:V1' with index 1:

```python
geometry = GeometryAccess(fname_geometry)
X,Y,Z = geometry.get_pixel_coords('QUAD:V1', 1)
```

returns X,Y,Z arrays of shape [8,185,388].

Interface description is available in doxygen

The same package and file names as in C++ but with file name extension .py:

The suggested method of describing imaging detector geometry provides a simple and unambiguous way of parametrizing pixel coordinates. It uses all available information from optical measurements and from the design of the detector and tiles. All geometry parameters are extracted without fitting and are presented in a natural, intuitive way.