...

Discontinued: IOC Info query application

This is a JSP web application containing data about IOCs and applications, and their configurations. It is no longer maintained.

https://seal.slac.stanford.edu/IRMISQueries/

...

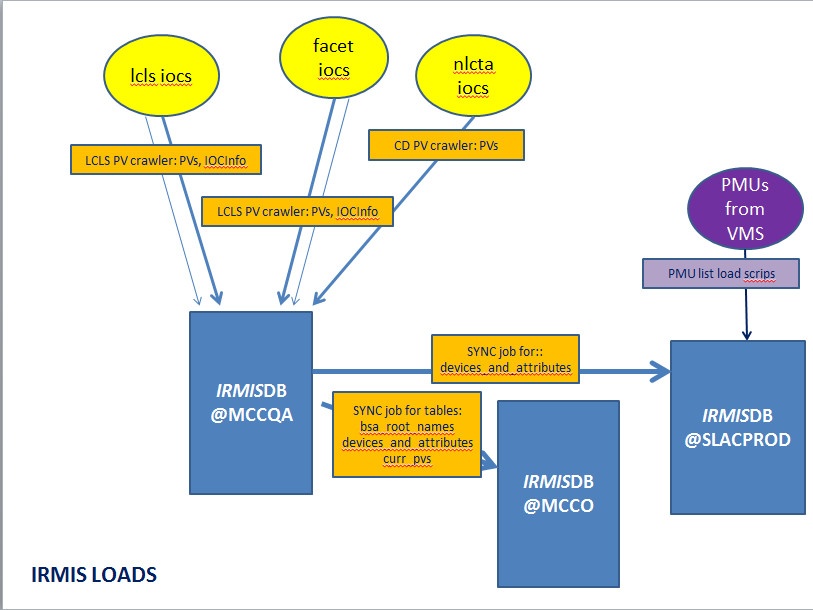

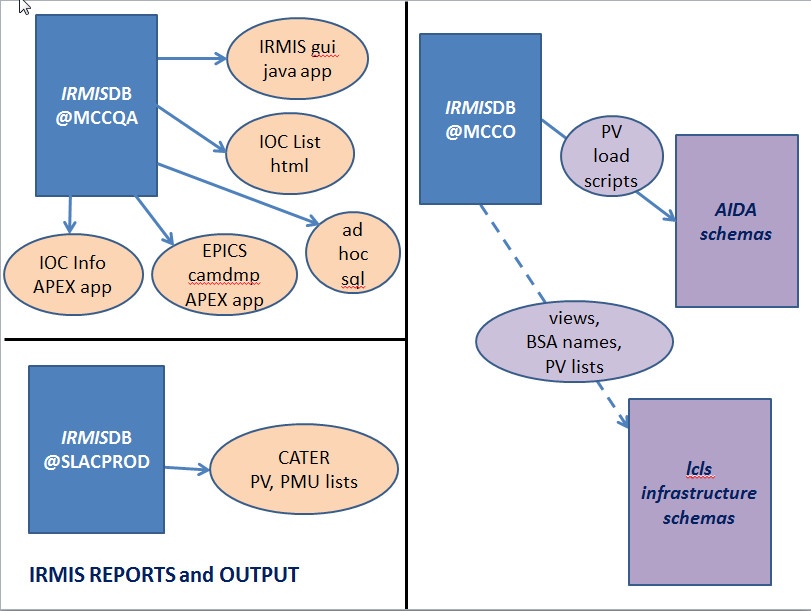

IRMIS@SLAC INFORMATION FLOW DIAGRAMS AND LOAD PROCESSES LIST

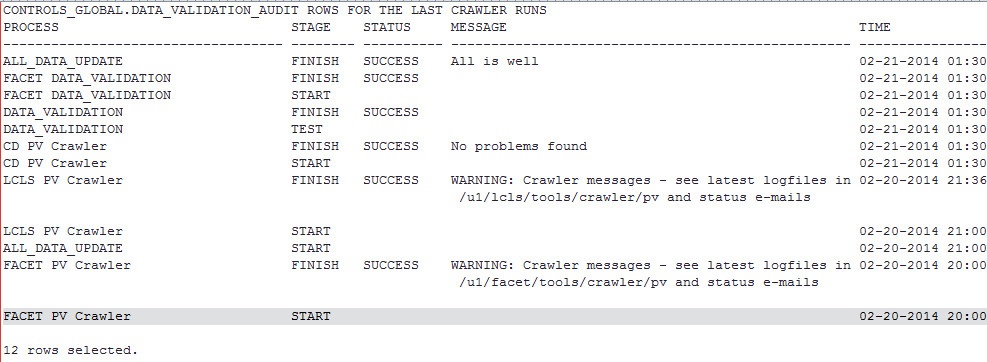

IRMIS LOAD PROCESSES IN REVERSE CHRONOLOGICAL ORDER (snip from Data Validation daily e-mail)

...

OPERATIONAL and SUPPORT DETAILS

At SLAC, in a nutshell, the PV crawlers update:

- all IRMIS tables in MCCQA. All IRMIS UIs query PV and IOC data on MCCQA.

- 3 production tables on MCCO, which are used by other systems (AIDA, BSA applications)

Oracle schemas and accounts

The IRMIS database schema is installed in the following SLAC Oracle instances:

- MCCQA IRMISDB schema: production for all GUIs and applications. Contains data populated nightly by Perl crawler scripts from production IOC configuration files. Data validation is done following each load.

- MCCO IRMISDB schema: contains production data, currently for 3 tables only: bsa_root_names, devices_and_attributes, curr_pvs. MCCQA data is copied to MCCO once it has been validated, so MCCO is as close as possible to pristine data at all times.

- SLACDEV IRMISDB schema: sandbox for testing and staging new features before release to production on MCCQA (see accounts below)

- MCCODEV IRMISDB schema: can be used for staging a release. Currently, the schema and data are out of date. To use it for staging, refresh from prod, then apply the SLACDEV changes.

- SLACPROD IRMISDB schema: obsolete; however, it is still referenced in some scripts and has to be kept around until those scripts are pointed elsewhere. See Poonam, Elie, Mike Z. for details.

Other Oracle accounts

- IRMIS_RO: read-only account (not used much yet, but available)

- IOC_MGMT: created for an earlier IOC info project with a member of the EPICS group; that project is not active at the moment. New IOC info work is being done using IRMISDB.

For passwords see Poonam Pandey or Elie Grunhaus (or use getPwd).

As of September 15, all crawler-related shell scripts and Perl scripts use Oracle Wallet to get the latest Oracle password. Oracle passwords must be changed every 6 months; the change is coordinated and implemented by Poonam Pandey.

Note: The IRMIS GUI uses hardcoded passwords. These must be changed manually at every password change cycle. See below for the detailed procedure.

Database structure

see schema diagram below. (this diagram excludes the EPICS camdmp structure, which is documented separately here: <url will be supplied>)


...

Each job and process sends a status e-mail to a selected list of recipients.

Emails, in chronological order

- FACET PV Crawler errors and warnings: output from the FACET crawler (to: Shashi, Jingchen, Poonam, Judy). Note: warnings are generally not serious, just FYI.

- LCLS PV Crawler errors and warnings: output from the LCLS crawler (to: controls-software-reports, Shashi, Jingchen, Poonam, Judy). Note: warnings are generally not serious, just FYI.

- MCCQA SLAC CD PV Crawler errors and warnings: output from the CD (NLCTA) crawler (to: Shashi, Jingchen, Poonam, Judy)

- <timestamp> IRMIS data validation <SUCCESSFUL or FAILED>: shows whether the data validation succeeded, the status of each job in the sequence in reverse chronological order, and the logfiles to refer to in case of issues (to: controls-software-reports, Shashi, Jingchen, Poonam, Judy)

- IRMIS data sync to MCCO completed: only received if the data validation was successful and the sync to MCCO completed (to: Shashi, Jingchen, Poonam, Judy)

- DUPLICATE PVs FOUND in FACET and LCLS: only received if duplicate PVs were found (to: controls-software-reports, Ernest, Shashi, Jingchen, Mike Z., Murali)

** Only LCLS data validation will affect the sync to MCCO. FACET and CD e-mails are FYI only.

Daily monitoring of e-mails

- Check the "IRMIS data validation" e-mail title: SUCCESSFUL or FAILED? If it is SUCCESSFUL, and there is a subsequent "IRMIS data sync to MCCO completed" e-mail, then all is well.

- If the data validation FAILED:

  - What does the message for the DATA_VALIDATION step say? The errors found will be listed (e.g. "PV Count drop > 5000", and/or "LCLS Crawler did not complete", etc.)

  - Refer to the <acc> PV Crawler errors and warnings e-mails. These show the changes in PV counts by IOC (useful, for example, in locating which IOC's PV count dropped)

  - If needed, go to the logfiles: see the "IRMIS data validation" e-mail for the log directory for each crawler. Also, see the table below for the logfiles to refer to.
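The daily check above can be summarized as a small decision helper. This is only an illustrative sketch: the function name `triage_irmis_mail` and the result labels are hypothetical, and the `CHECK_SYNC` case (validation succeeded but no sync e-mail arrived) is an assumption about what a reader would want to look into, since the procedure above does not spell it out.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of the IRMIS scripts.
# $1 = subject line of the "IRMIS data validation" e-mail
# $2 = "yes" if the "IRMIS data sync to MCCO completed" e-mail also arrived
triage_irmis_mail() {
    local subject="$1" sync_seen="$2"
    case "$subject" in
        *SUCCESSFUL*)
            if [ "$sync_seen" = "yes" ]; then
                echo "OK"                # validated and synced: all is well
            else
                echo "CHECK_SYNC"        # assumption: sync mail missing, look into it
            fi ;;
        *FAILED*)
            echo "CHECK_VALIDATION" ;;   # read the DATA_VALIDATION message, then the logs
        *)
            echo "UNKNOWN" ;;
    esac
}
```

For example, `triage_irmis_mail "IRMIS data validation FAILED" no` prints `CHECK_VALIDATION`, which corresponds to the "If the data validation FAILED" branch above.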

...


Crawler scripts

The PV crawler is run once for each IOC boot directory structure. The LCLS and FACET PV crawlers run the same code, which lives in the LCLS directory structure. The LCLS crawler code has been modified to be production-specific; it is a different version from the SLAC PV crawler.

The SLAC PV crawler (runSLACPVCrawler.csh) runs the crawler a couple of times to accommodate the various CD IOC directory structures.

NEW: As of mid-January 2014, the LCLS PV crawler has a function to crawl $IOC_DATA/<ioc>/IOC.dbDumpRecord files, which contain an IOC's record names and a subset of configured fields. This file is far simpler to crawl than any IOC's st.cmd syntax. If IOC.dbDumpRecord is found, it will be crawled. If the file is not found, the old crawler code will operate on the IOC's st.cmd file.

At the same time, the FACET PV crawler scripts were modified to share the LCLS crawler code, so IOC.dbDumpRecord files are also crawled for FACET.

As IOCs are rebooted during maintenance and upgrades, IOC.dbDumpRecord files will eventually become the only files crawled in the system, resulting in easier maintenance.
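The IOC.dbDumpRecord fallback described above can be illustrated with a short shell sketch. The real logic lives in the Perl crawler; the function name `pick_crawl_source` and its output strings are hypothetical and only show the "dump file if present, otherwise st.cmd" choice.

```shell
#!/usr/bin/env bash
# Hypothetical illustration of the crawl-source choice; not the crawler's code.
# $1 = IOC data root (the $IOC_DATA directory), $2 = IOC name
pick_crawl_source() {
    local ioc_data="$1" ioc="$2"
    local dump="$ioc_data/$ioc/IOC.dbDumpRecord"
    if [ -f "$dump" ]; then
        echo "dbDumpRecord:$dump"   # preferred: crawl the dump file
    else
        echo "st.cmd"               # fall back to the older st.cmd parsing
    fi
}
```

Calling `pick_crawl_source "$IOC_DATA" some-ioc` prints which of the two inputs would be used for that IOC.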

cron jobs

- LCLS side: laci on lcls-daemon2: runLCLSPVCrawlerLx.bash: crawls LCLS PVs and creates LCLS-specific tables (bsa_root_names, devices_and_attributes).

- LCLS side: laci on lcls-daemon2: caget4curr_ioc_device.bash: does cagets to populate curr_ioc_device for the IOC Info APEX app. Run separately from the crawlers because cagets can hang unexpectedly; they are best done in an isolated script!

- CD side: laci on lcls-prod01: runAllCDCrawlers.csh: runs the CD PV crawler, data validation, and sync to MCCO. Client crawlers are no longer run! The alh and car data sources have changed and the code has not been updated.

- FACET side: flaci on facet-daemon1: runFACETPVCrawlerLx.bash: crawls FACET PVs.

PV crawler operation summary

For the location of the crawler scripts, see Source code directories below.

Basic steps as called by cron scripts are:

- run FACET pv crawler to populate MCCQA tables

- run LCLS pv crawlers to populate MCCQA tables

- run Data Validation for PV data in MCCQA

- if Data Validation returns SUCCESS, run synchronization of MCCQA data to the selected MCCO tables (3 only at the moment).

- run caget4curr_ioc_device to populate caget columns of curr_ioc_device
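The ordering of the steps above, and in particular the fact that the sync only runs after a successful validation, can be sketched as follows. This is a simplified model under stated assumptions: in production the steps are separate cron jobs on different hosts, and `run_nightly_sequence` and its arguments are hypothetical names standing in for the real scripts.

```shell
#!/usr/bin/env bash
# Hypothetical model of the nightly ordering; each argument is the name of a
# command or function standing in for one real step.
# $1 = FACET crawl, $2 = LCLS crawl, $3 = data validation, $4 = sync to MCCO
run_nightly_sequence() {
    local facet_crawl="$1" lcls_crawl="$2" validate="$3" sync_step="$4"
    "$facet_crawl"
    "$lcls_crawl"
    if "$validate"; then
        "$sync_step"                 # only reached when validation succeeds
    else
        echo "validation FAILED: sync to MCCO skipped"
    fi
}
```

The caget step is left out on purpose, since it runs as its own cron job.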

** For PV Crawlers: the crawler group for any given IOC is determined by its row in the IOC table. The system column refers to the boot group for the IOC, as shown below.

LOGFILES, Oracle audit table

Log filenames are created by appending a timestamp to the root name shown in the tables below.

The major steps in the crawler jobs write entries into the Oracle CONTROLS_GLOBAL.DATA_VALIDATION_AUDIT table. Each entry has these attributes:

- Instance

- Schema

- Process

- Stage

- Status

- Message

- TOD (time of day)

(see below for details on querying this table)

Descriptions of the MAIN scripts (there are other subsidiary scripts as well)

These are all ultimately invoked from the cron jobs shown above; the cron scripts call the others. The side column shows whether a script belongs to the CD, LCLS or FACET side.

| script name | side | location | description |
|---|---|---|---|
| runAllCDCrawlers.csh | CD | /afs/slac/g/cd/soft/tools/irmis/cd_script | Runs the SLAC PV crawler, data validation for all current crawl data (LCLS and CD), and the sync to MCCO. Logfile: /nfs/slac/g/cd/log/irmis/pv/CDCrawlerAll.log |
| runSLACPVCrawler.csh | CD | /afs/slac/g/cd/soft/tools/irmis/cd_script | Run by runAllCDCrawlers.csh: crawls NLCTA IOCs (previously handled PEPII IOCs). The PV crawler is run 4 times within this script to accommodate the various boot directory structures. System NLCTA: IOCs booted from $CD_IOC on gtw00; boot dirs are mirrored to /nfs/mccfs0/u1/pepii/mirror/cd/ioc for crawling; log files /nfs/slac/g/cd/log/irmis/pv/pv_crawlerLOG_ALL.\* and /tmp/pvCrawler.log. System TR01: TR01 only (old boot structure); log files /nfs/slac/g/cd/log/irmis/pv/pv_crawlerLOG_TR01.\* and /tmp/pvCrawler.log |
| runLCLSPVCrawlerLx.bash | LCLS | /usr/local/lcls/tools/irmis/script/ | Crawls LCLS IOCs. System LCLS: LCLS PRODUCTION IOCs, all IOC dirs in $IOC (/usr/local/lcls/epics/iocCommon); log files /u1/lcls/tools/crawler/pv/pv_crawlerLOG_LCLS.timestamp and /tmp/LCLSPVCrawl.log (log in as laci@lcls-daemon2) |
| runFACETPVCrawlerLx.bash | FACET | /usr/local/facet/tools/irmis/script/ | Runs the LCLS crawler code. Crawls FACET IOCs. System FACET: FACET PRODUCTION IOCs, all IOC dirs in facet $IOC (/usr/local/facet/epics/iocCommon); log files /u1/facettools/crawler/pv/pv_crawlerLOG_LCLS.timestamp and /tmp/FACETPVCrawl.log (log in as flaci@facet-daemon1) |
| run_find_devices.bash | LCLS | /usr/local/lcls/tools/irmis/script/ | For LCLS and FACET only: populates the devices_and_attributes table, a list of device names and attributes based on the LCLS PV naming convention. For PV DEV:AREA:UNIT:ATTRIBUTE, DEV:AREA:UNIT is the device and ATTRIBUTE is the attribute. |
| run_load_bsa_root_names.bash | LCLS | /usr/local/lcls/tools/irmis/script/ | Loads the bsa_root_names table by running stored procedure LOAD_BSA_ROOT_NAMES. LCLS and FACET names. |
| ioc_report.bash | LCLS | /usr/local/lcls/tools/irmis/script/ | Runs at the end of the LCLS PV crawl, which is last; creates the web IOC report: http://www.slac.stanford.edu/grp/cd/soft/database/reports/ioc_report.html |
| ioc_report-facet.bash | FACET | /usr/local/facet/tools/irmis/script/ | FACET counterpart of ioc_report.bash (same description). |
| updateMaterializedViews.csh | CD | /afs/slac/g/cd/soft/tools/irmis/cd_script | Refreshes the materialized view from curr_pvs. |
| findDupePVs.bash | LCLS | /usr/local/lcls/tools/irmis/script/ | Finds duplicate PVs for reporting by e-mail. |
| findDupePVs-all.bash | FACET | /usr/local/facet/tools/irmis/script/ | Finds duplicate PVs for reporting by e-mail (the \--all version takes system as a parameter). |
| refresh_curr_ioc_device.bash | LCLS | /usr/local/lcls/tools/irmis/script/ | Refreshes the curr_ioc_device table with currently booted info from ioc_device (speeds up queries in the Archiver PV APEX app). Also see caget4curr_ioc_device.bash below. |
| find_LCLSpv_count_changes.bash | LCLS | /usr/local/lcls/tools/irmis/script/ | Finds the IOCs with PV counts that changed in the last LCLS crawler run. This is reported in the logfile and in the daily e-mail. |
| find_pv_count_changes-all.bash | FACET | /usr/local/facet/tools/irmis/script/ | Same as find_LCLSpv_count_changes.bash (the \--all version takes system as a parameter). |
| populate_dtyp_io_tab.bash, populate_io_curr_pvs_and_fields.bash, run_load_hw_dev_pvs.bash, run_parse_camac_io.bash | LCLS | /usr/local/lcls/tools/irmis/script/ | Set of scripts to populate various tables for the EPICS camdmp APEX application. |
| write_data_validation_row.bash | LCLS | /usr/local/lcls/tools/irmis/script/ | Writes a row to the controls_global.data_validation_audit table (all environments). |
| write_data_validation_row.csh | CD | /afs/slac/g/cd/soft/tools/irmis/cd_script | Writes a row to the controls_global.data_validation_audit table (all environments). |
| write_data_validation_row-facet.bash | FACET | /usr/local/facet/tools/irmis/script/ | Writes a row to the controls_global.data_validation_audit table (all environments). |
| runDataValidation.csh, runDataValidation-facet.csh | CD | /afs/slac/g/cd/soft/tools/irmis/cd_script | Runs IRMISDataValidation.pl -- CD and LCLS PV data validation. |
| runSync.csh | CD | /afs/slac/g/cd/soft/tools/irmis/cd_script | Runs data replication to MCCO, currently for these objects ONLY: curr_pvs, bsa_root_names, devices_and_attributes. |
| caget4curr_ioc_device.bash | LCLS | /usr/local/lcls/tools/irmis/script/ | Does cagets to obtain IOC parameters for the curr_ioc_device table. Run as a separate cron job from the crawlers because cagets can hang unexpectedly -- they are best done in an isolated script! |

Crawler cron schedule and error reporting

| time | script | cron owner/host |
|---|---|---|
| 8 pm | runFACETPVCrawlerLx.bash | flaci/facet-daemon1 |
| 9 pm | runLCLSPVCrawlerLx.bash | laci/lcls-daemon2 |
| 1:30 am | runAllCDCrawlers.csh | laci/lcls-prod01 |
| 4 am | caget4curr_ioc_device.bash | laci/lcls-daemon2 |

Following the crawls, the calling scripts grep for errors and warnings, and send lists of these to Judy Rock, Bob Hall, Ernest Williams and Jingchen Zhou. The LCLS PV crawler and the Data Validation scripts send messages to controls-software-reports as well. To track down the error messages in the e-mail, refer to the log files of the day, the cron job /tmp output files, and the Oracle CONTROLS_GLOBAL.DATA_VALIDATION_AUDIT table (see below for details on how to query it).
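The device/attribute rule given for run_find_devices.bash in the script table (for PV DEV:AREA:UNIT:ATTRIBUTE, DEV:AREA:UNIT is the device and ATTRIBUTE is the attribute) can be illustrated with two shell helpers. This is a sketch, not the production script: the function names are hypothetical, the sample PV name in the usage note is made up, and the split assumes a four-part name whose attribute contains no colon.

```shell
#!/usr/bin/env bash
# Hypothetical helpers illustrating the LCLS PV naming convention.
# Both take one PV name of the form DEV:AREA:UNIT:ATTRIBUTE.
pv_device()    { echo "${1%:*}"; }    # everything before the last colon
pv_attribute() { echo "${1##*:}"; }   # everything after the last colon
```

With a made-up name such as `DEV1:AREA2:300:ATTR`, `pv_device` prints `DEV1:AREA2:300` and `pv_attribute` prints `ATTR`.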

Source directories

IRMIS software

SLAC code has diverged from the original collaboration version, and LCLS IRMIS code has diverged from the main SLAC code (i.e. we have 2 different versions of the IRMIS PV crawler).

CD: /afs/slac/package/epics/tools/irmisV2_SLAC

LCLS: /usr/local/lcls/package/irmis/irmisV2_SLAC/

Please note the different CVS location for LCLS: the LCLS "package" directory structure is NOT in CVS. The CVS location is tools/irmis/crawler_code_CVS. So, to modify crawler code, you can cvs co the code, modify, test, copy the mods straight into production, then commit them to CVS.

- db/src/crawlers contains crawler source code. SLAC-specific crawlers are in directories named *SLAC

- apps/src contains UI source code.

- apps/build.xml is the ant build file

- README shows how to build the UI app using ant

CD scripts

cvs root: for ease and clarity, the CD scripts are also in the LCLS CVS repository under tools/irmis/cd_script, cd_utils, cd_config

http://www.slac.stanford.edu/cgi-wrap/cvsweb/tools/irmis/crawler_code_CVS/?cvsroot=LCLS

production directory tree root: /afs/slac/g/cd/tools/irmis

- cd_script contains most scripts

- cd_utils has a few scripts

- cd_config contains client crawler directory lists

LCLS scripts

cvs root: /afs/slac/g/lcls/cvs

http://www.slac.stanford.edu/cgi-wrap/cvsweb/tools/irmis/?cvsroot=LCLS

production directory tree root: /usr/local/lcls/tools/irmis

- script contains the LCLS pv crawler run scripts, etc.

- util has some subsidiary scripts

FACET scripts

cvs root: these scripts share the LCLS repository (different names so they don’t collide with LCLS scripts)

http://www.slac.stanford.edu/cgi-wrap/cvsweb/tools/irmis/crawler_code_CVS/?cvsroot=LCLS

production directory tree root: /usr/local/facet/tools/irmis

- script contains the FACET pv crawler run scripts, etc.

- util has some subsidiary scripts


PV Crawler: what gets crawled and how

- runSLACPVCrawler.csh, runLCLSPVCrawlerLx.bash and runFACETPVCrawlerLx.bash set up for and run the IRMIS pv crawler multiple times to hit all the boot structures and crawl groups. Environment variables set in pvCrawlerSetup.bash, pvCrawlerSetup-facet.bash and pvCrawlerSetup.csh, point the crawler to IOC boot directories, log directories, etc. Throughout operation, errors and warnings are written to log files.

- The IOC table in the IRMIS schema contains the master list of IOCs. The SYSTEM column designates which crawler group the IOC belongs to.

- An IOC will be hit by the pv crawler if its ACTIVE column is 1 in the IOC table: ACTIVE is set to 0 and 1 by the crawler scripts, depending on SYSTEM, to control what is crawled. i.e. LCLS IOCs are set to active by the LCLS crawler job. NLCTA IOCs are set to active by the CD crawler job.

- An IOC will be crawled if it has been rebooted since the last crawler run, i.e. if its LCLS/FACET STARTTOD or CD TIMEOFBOOT (boot time) PV has changed since the last crawl. Otherwise it will be skipped.

- Crawler results will be saved to the DB if at least one boot file has changed, as determined by mod date and file size.

- Crawling specific files can be triggered by changing the mod date (e.g. touch).

- In the pv_crawler.pl script, there’s a mechanism for forcing the crawling of subsets of IOCs (see the pv_crawler.pl code)

- IOCs without a STARTTOD/TIMEOFBOOT PV will be crawled every time (although PV data is only written when IOC .db files have changed).
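The crawl decision described in the list above can be written down as a small predicate. This is a sketch of the rules as stated here, not an extract from pv_crawler.pl; the function name `should_crawl` and its argument layout are hypothetical, and an empty boot-time value is used as a stand-in for "this IOC has no STARTTOD/TIMEOFBOOT PV".

```shell
#!/usr/bin/env bash
# Hypothetical predicate modelling the rules above; returns 0 if the IOC is crawled.
# $1 = ACTIVE column value (0 or 1)
# $2 = boot-time PV value recorded at the last crawl
# $3 = current boot-time PV value ("" if the IOC has no boot-time PV)
should_crawl() {
    local active="$1" last_boot="$2" current_boot="$3"
    [ "$active" = "1" ] || return 1        # inactive IOCs are not crawled
    [ -z "$current_boot" ] && return 0     # no boot-time PV: crawl every time
    [ "$last_boot" != "$current_boot" ]    # otherwise crawl only after a reboot
}
```

Whether the results are then saved is a separate check on the boot files' mod dates and sizes, which this sketch does not cover.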

Removing an IOC from the active crawler list

Sometimes an IOC becomes obsolete. Although IOCs are automatically added to the IRMISDB.IOC table when the crawler encounters a new $IOC/<ioc> directory, IOCs are not automatically removed from the system. Typically, an IOC engineer will request that her IOC be removed from the crawler (and hence all current PV and IOC lists, and reports).

To remove an IOC from the active crawler list:

Update the IRMISDB.IOC table in MCCQA to set the SYSTEM value to something that is not understood by the crawler:

update ioc

set system = 'LCLS-NOCRWL'

where ioc_nm = 'iocname';

or

update ioc

set system = 'FACET-NOCRWL'

where ioc_nm = 'iocname';
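To avoid quoting mistakes when typing these statements by hand, the SQL can be generated from the crawler group and IOC name. The helper below is a hypothetical sketch, not an existing IRMIS script; it only prints the statement, which would still have to be run against IRMISDB on MCCQA and committed by hand.

```shell
#!/usr/bin/env bash
# Hypothetical generator for the "remove from crawler" statement shown above.
# $1 = crawler group (LCLS or FACET), $2 = IOC name
nocrawl_sql() {
    local system="$1" ioc="$2"
    case "$system" in
        LCLS|FACET) ;;
        *) echo "unsupported system: $system" >&2; return 1 ;;
    esac
    case "$ioc" in
        *"'"*) echo "IOC name must not contain quotes" >&2; return 1 ;;
    esac
    printf "update ioc set system = '%s-NOCRWL' where ioc_nm = '%s';\n" "$system" "$ioc"
}
```

For example, `nocrawl_sql FACET iocname` prints the second statement above on a single line.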

TROUBLESHOOTING: post-crawl emails and data_validation_audit entries: what to worry about, how to bypass or fix for a few…

Info: Once a problem is corrected, the next night’s successful crawler jobs will fix up the data.

- The DATA_VALIDATION FINISH step MSG field in the CONTROLS_GLOBAL.DATA_VALIDATION_AUDIT table will show the list of errors found by the data validation proc. To query it:

- Log into Oracle on MCCQA as IRMISDB

  - use TOAD or

  - from the Linux command line:

    - source /usr/local/lcls/epics/setup/oracleSetup.bash

    - export TWO_TASK=MCCQA

    - sqlplus irmisdb/`getPwd irmisdb`

- Run this query:

select * from controls_global.data_validation_audit where schema_nm='IRMISDB' order by tod desc;

This will show you (most recent first) the data validation entries, with any error messages.

You can also see a complete listing of data_validation_audit entries in reverse chronological order by using AIDA (but you will have to pick out the IRMISDB lines):

- Launch AIDA web https://mccas1.slac.stanford.edu/aidaweb

- In the query line, enter LCLS//DBValidationStatus

Note: please focus only on entries where schema_nm is IRMISDB. This report shows entries for ALL of our database operations; sometimes entries from different systems are interleaved.

For a “good” set of IRMISDB entries, scroll down and see those from: 9/22 9 pm, continuing into 9/23, which completed successfully. The steps in ascending order are:

FACET PV Crawler start

FACET PV Crawler finish

ALL_DATA_UPDATE start

LCLS PV Crawler start

LCLS PV Crawler finish

CD PV Crawler start

CD PV Crawler finish

DATA_VALIDATION start

DATA_VALIDATION finish

FACET DATA_VALIDATION start

FACET DATA_VALIDATION finish

ALL_DATA_UPDATE finish

These are followed by multiple steps for the sync to MCCO, labelled REFRESH_MCCO_IRMIS_TABLES.

Note: REFRESH_MCCO_IRMIS_TABLES will not kick off unless ALL_DATA_UPDATE finishes with a status of SUCCESS. ONLY THE VALIDATION AND SUCCESS OF THE LCLS STEPS AFFECT THE SYNC TO MCCO. FACET and CD e-mails are FYI ONLY.
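When scanning a night's audit entries, a quick way to spot an incomplete run is to look for a step that has a start entry but no matching finish. The sketch below assumes the step labels have been extracted one per line and are spelled exactly as in the list above (ending in " start" or " finish"); the function name `find_unfinished_steps` is hypothetical, and the real audit table may format the labels differently.

```shell
# Hypothetical helper: reads step labels on stdin, one per line, e.g.
#   LCLS PV Crawler start
#   LCLS PV Crawler finish
# and prints the name of every step that started but never finished,
# in the order the starts were seen. Prints nothing for a complete run.
find_unfinished_steps() {
    awk '
        / start$/ {
            name = $0
            sub(/ start$/, "", name)      # strip the trailing " start"
            order[++n] = name             # remember the order of starts
        }
        / finish$/ {
            name = $0
            sub(/ finish$/, "", name)     # strip the trailing " finish"
            finished[name] = 1
        }
        END {
            for (i = 1; i <= n; i++)
                if (!(order[i] in finished))
                    print order[i]
        }
    '
}
```

For example, piping the labels from a run that stopped during the LCLS crawl would report ALL_DATA_UPDATE and LCLS PV Crawler as unfinished, which also explains why REFRESH_MCCO_IRMIS_TABLES did not start.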

Some specific error checks:

- comparison of PV counts: pre and post crawl

An error is flagged if the total PV count has dropped by more than 5000, because this may indicate that some previously crawled IOCs have been skipped. This can happen when the IOC boot files or IOC boot structure change in a way the crawler doesn’t understand, for example when the crawler encounters new IOC startup file syntax that it cannot parse but that doesn’t actually crash the crawler program.

The logfile and e-mail message will tell you which IOCs are affected. The first thing to do is check with the responsible IOC engineer(s). It’s possible that the PV count drop is “real”, i.e. the engineer(s) in question intentionally removed a large block of PVs from the system.

- If the drop is intentional: you will need to update the database manually to enable the crawler to proceed. The count difference is discovered by comparing the row count of the newly populated curr_pvs view with the row count of the materialized view curr_pvs_mv, which was updated on the previous day. If the PV count has dropped by more than 5000 PVs, the synchronization is cautious: it prevents updating good data in MCCO until the reason for the drop is known.

To tell the system that the drop was intentional (or at least non-destructive) and that synchronization is now OK, update the materialized view with current data. The next time the crawler runs, the counts will be closer (barring some other problem) and the synchronization can proceed.

So, to enable the crawlers to proceed, update the materialized view with the data in the current curr_pvs view, like this:

Log in as laci on lcls-prod01 and run:

/afs/slac/g/cd/tools/irmis/cd_script/updateMaterializedViews.csh

The next time the crawler runs (that night), the data validation will be making the comparison with correct current data, and you should be good to go.

- If the drop is NOT intentional: check the log for that IOC (search for “Processing iocname”) and/or ask the IOC engineer to check the IOC boot directories and IOC boot files for:

- a syntax error in the boot file or directory

- IOC st.cmd syntax that has changed to something the crawler hasn’t learned yet. This may require a PVCrawlerParser.pm modification to teach IRMIS the new syntax. This is rare but does occur. The IOC engineer may be able to switch back to an older-style syntax, add quotes around a string, etc. as a temporary workaround.
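The PV count comparison described in this section can be sketched as follows. This is an illustration of the threshold logic only, not the actual data validation procedure: the function name `check_pv_drop`, its arguments, and its messages are assumptions, and the two counts would in practice come from the row counts of curr_pvs_mv (previous day) and curr_pvs (fresh crawl).

```shell
# Hypothetical sketch of the pre/post-crawl PV count check.
# The 5000 threshold is the value stated in the text; everything else
# (names, messages, return codes) is illustrative.
PV_DROP_LIMIT=5000

# Usage: check_pv_drop PREVIOUS_COUNT CURRENT_COUNT
#   PREVIOUS_COUNT  row count of curr_pvs_mv (updated the previous day)
#   CURRENT_COUNT   row count of the newly populated curr_pvs view
# Prints a one-line verdict; returns 1 if the drop exceeds the limit.
check_pv_drop() {
    previous=$1
    current=$2
    drop=$((previous - current))   # negative when the count has grown

    if [ "$drop" -gt "$PV_DROP_LIMIT" ]; then
        echo "ERROR: PV count dropped by $drop (limit $PV_DROP_LIMIT)"
        return 1
    fi
    echo "OK: PV count change is within the limit"
    return 0
}
```

Under this sketch a drop of exactly 5000 still passes, since the text flags only drops of more than 5000. Refreshing the materialized view resets the previous-day count, which is why the next night's comparison succeeds after an intentional drop.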

...

- log into mcclogin

- cvs co irmis gui code:

export CVSROOT=/afs/slac/package/epics/slaconly/cvs

cd to a working directory

cvs co -d irmis tools/irmisV2_SLAC

- cd irmis

- edit site.build.properties and change this line:

db.trust-read-write.password=newpw

- set java environment to version 1.4 (this may not be needed):

export JAVA_HOME=/afs/slac/package/java/@sys/jdk1.4

export JAVA_VER=1.4

export PATH=${JAVA_HOME}/bin:${PATH}

- cd db

- ant clean

- ant deploy

- cd ..

- cp db/build/irmisDb.jar apps/deploy

- cd apps/deploy

- test the gui:

java -jar irmis.jar

The gui should connect to the db.

- deploy new jars to production:

scp irmisirmisDb.jar over to /usr/local/lcls/package/irmis/irmisV2_SLAC/apps/deploy/. (this one is used by LCLS and FACET)

cp irmisirmisDb.jar /afs/slac/package/epics/tools/irmisV2_SLAC/apps/deploy/.

Please note: the apps directory (parallel to the db directory) can no longer be built with ant, because of changes in Java syntax and method calls deep in the gui code. Therefore, please follow the directions above to BUILD AND DEPLOY THE DB JAR ONLY!

...

IRMISDB history data cleanup

$TOOLS/irmis/data_cleanup has scripts and a README for cleaning historical IRMIS data. The procedure was done once, in February 2012.

Description and plans and click IRMIS_database_cleanup

...

SCHEMA DIAGRAM


...