...

This document is the basis of a paper (SLAC-PUB-16343) comparing PingER performance on a 1 U bare metal host to that on a Raspberry Pi. It has been running successfully since July 2015.

This is a project suggested by Bebo White to build and validate a PingER Measurement Agent (MA) based on an inexpensive piece of hardware called a Raspberry Pi (see more about Raspberry Pi) using a Linux distribution as the Operating System (see more about Raspbian). If successful, one could consider using these in production, reducing the costs, power drain (they draw about 2W of 5V DC power compared to typically over 100W for a deskside computer or 20W for a laptop) and space (credit card size). This is the same type of power required for a smartphone, so appropriate off the shelf products including a battery and solar cells are becoming readily available. Thus the Raspberry Pi could be very valuable for sites in developing countries where cost, power utilization and, to a lesser extent, space may be crucial.

...

- The Raspberry Pi PingER MA must be robust and reliable. It needs to run for months to years with no need for intervention. This still needs to be verified; so far the Raspberry Pi has run successfully without intervention for over a month. This has included automatic recovery after two test power outages, which is a requirement.

- The important metrics derived from the measurements made by the Raspberry Pi should not be significantly different from those made by a bare metal PingER MA, or if they are then this needs to be understood.

- For applications where there is no reliable power source, the Raspberry Pi needs to be able to run 24 hours a day with only solar derived power. Let's say the power required is 3W at 5V, or (3/5)A = 0.6A. If we have a 10,000 mAh battery, then at 0.6A it should have power for 10Ah/0.6A ~ 16.7 hours. Then we need a solar cell able to refill the battery in a few hours of sunlight. Let's take a 20W 5V solar panel (see http://www.amazon.com/SUNKINGDOM-trade-Foldable-Charger-Compatible/dp/B00MTEDTWG), i.e. a 20/5 = 4A solar panel. So the initial guess is 10Ah/4A = 2.5 hours. But inefficiencies (see http://www.voltaicsystems.com/blog/estimating-battery-charge-time-from-solar/) of, say, a factor of 2.5 extend this to 6.25 hours.
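The arithmetic in the solar power estimate above can be sketched as a small calculation. This is only an illustration: the function names are hypothetical, and the 3W load, 10,000 mAh battery, 20W panel and inefficiency factor of 2.5 are the guesses used in the text, not measured values.

```python
def battery_runtime_hours(capacity_mah, load_watts, volts=5.0):
    """Hours a battery can supply the load, ignoring conversion losses."""
    load_amps = load_watts / volts              # e.g. 3W / 5V = 0.6A
    return (capacity_mah / 1000.0) / load_amps  # Ah / A = hours


def solar_recharge_hours(capacity_mah, panel_watts, volts=5.0, inefficiency=2.5):
    """Hours of sunlight to refill the battery, scaled by an assumed inefficiency factor."""
    panel_amps = panel_watts / volts            # e.g. 20W / 5V = 4A
    ideal_hours = (capacity_mah / 1000.0) / panel_amps
    return ideal_hours * inefficiency


runtime = battery_runtime_hours(10_000, 3.0)
recharge = solar_recharge_hours(10_000, 20.0)
print(f"battery runtime ~ {runtime:.1f} h, solar recharge ~ {recharge:.2f} h")
```

Note that the recharge estimate does not account for the Raspberry Pi continuing to draw power while the battery is charging, so it is likely optimistic.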

...

PingER measurements are made by ~60 MAs in 23 countries. They make measurements to over 700 targets in ~160 countries containing more than 99% of the world's connected population. The measurement cycle is scheduled at roughly 30 minute intervals. The actual scheduled timing of a measurement is deliberately randomized so measurements from one MA are not synchronized with those from another MA. The typical absolute separation of the timestamp of a measurement from, say, pinger.slac.stanford.edu to sitka.triumf.ca versus pinger-raspberry.slac.stanford.edu to sitka.triumf.ca is several minutes (e.g. ~8 mins for measurements during the time frame June 17 to July 14, 2015), see spreadsheet. At each measurement cycle, each MA issues a set of 100 Byte ping requests and a set of 1000 Byte ping requests to each target in the MA’s list of targets, stopping when the MA receives 10 ping responses or it has issued 30 ping requests. The number of ping responses is referred to as N and is in the range 0 - 10. The data recorded for each set of pings consists of: the MA and target names and IP addresses; a time-stamp; the number of bytes in the ping request; the number of ping requests and responses (N); the minimum Round Trip Time (RTT) (Min_RTT), the average RTT (Avg_RTT) and maximum RTT (Max_RTT) of the N ping responses; followed by the N ping sequence numbers, followed by the N RTTs. From the N RTTs we derive various metrics including: the minimum ping RTT; average RTT; maximum RTT; standard deviation (stdev) of RTTs; 25th percentile (first quartile) of RTT; 75th percentile (third quartile) of RTT; Inter Quartile Range (IQR); loss; and reachability (a host is unreachable if it gets 100% loss). We also derive the Inter Packet Delay (IPD) and the Inter Packet Delay Variability (IPDV) as the IQR of the IPDs.
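As a minimal sketch of how the derived metrics relate to one recorded set of pings, the following computes them from the number of requests sent and the list of N RTTs. It is not the PingER analysis code: the function name is hypothetical, loss is assumed to be the fraction of requests without a response, the IPD is assumed to be the difference between the RTTs of consecutive responses, and the quartiles use Python's default method, which may differ slightly from other tools.

```python
import statistics


def ping_set_metrics(sent, rtts_ms):
    """Derive summary metrics from one set of pings.

    sent    -- number of ping requests issued (assumed 10 to 30)
    rtts_ms -- RTTs in ms of the N responses, in sequence order
    """
    n = len(rtts_ms)
    metrics = {
        "N": n,
        "loss_pct": 100.0 * (sent - n) / sent,  # assumed loss definition
        "unreachable": n == 0,                  # 100% loss
    }
    if n == 0:
        return metrics
    metrics.update(
        min_rtt=min(rtts_ms),
        avg_rtt=statistics.fmean(rtts_ms),
        max_rtt=max(rtts_ms),
    )
    if n >= 2:
        q1, _, q3 = statistics.quantiles(rtts_ms, n=4)
        metrics.update(stdev=statistics.stdev(rtts_ms), q1=q1, q3=q3, iqr=q3 - q1)
        # Assumed IPD: RTT difference between consecutive responses.
        ipds = [later - earlier for earlier, later in zip(rtts_ms, rtts_ms[1:])]
        metrics["ipd"] = ipds
        if len(ipds) >= 2:
            ipd_q1, _, ipd_q3 = statistics.quantiles(ipds, n=4)
            metrics["ipdv"] = ipd_q3 - ipd_q1   # IPDV as the IQR of the IPDs
    return metrics


print(ping_set_metrics(10, [0.21, 0.19, 0.20, 0.25, 0.22, 0.20, 0.18, 0.21, 0.23, 0.20]))
```

The RTT values in the usage line are made up purely to exercise the function.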

...

Localhost comparisons

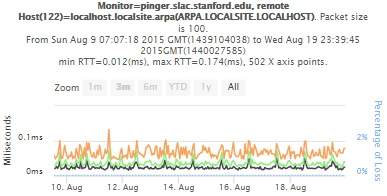

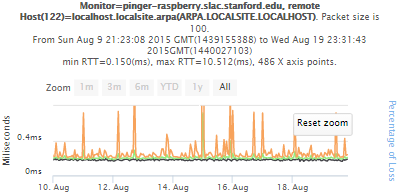

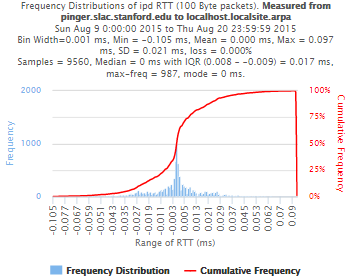

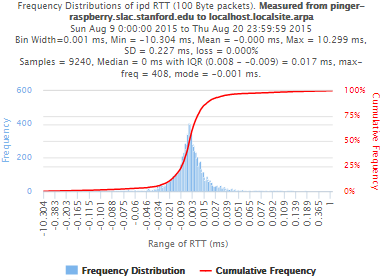

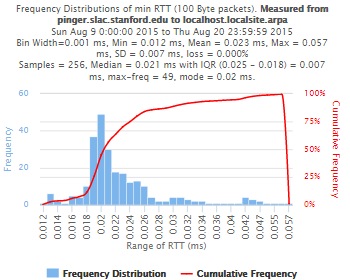

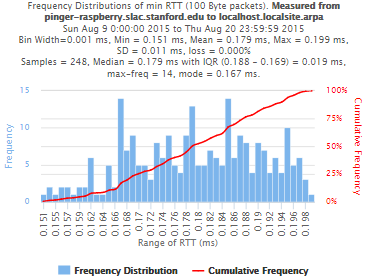

To determine more closely the impact of the host on the measurements, we eliminated the effect of the network by making measurements to the host's localhost NIC port from August 9th to August 19th. Looking at the analyzed time series data it is apparent that:

- the pinger host has considerably shorter RTTs

- the pinger host has fewer large RTT outliers

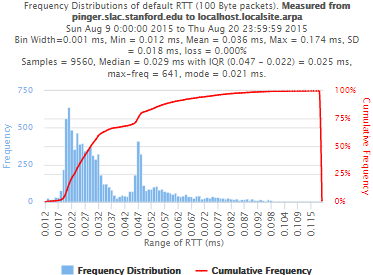

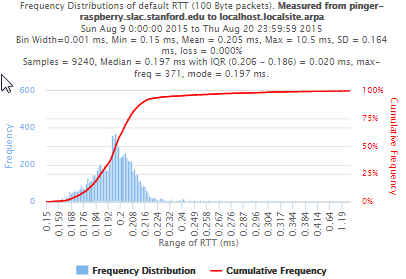

Looking at the RTT frequency distributions it is apparent that:

- the pinger and raspberry-pi host RTT frequency distributions hardly overlap at all (one sample (0.04%) of the raspberry-pi distribution overlaps ~7% of the pinger distribution).

- the pinger host's median RTT is about 7 times smaller (0.03ms vs 0.2ms) than that of the raspberry-pi

- the pinger host's maximum outlier (0.174ms) is about a factor of 60 smaller than that of the raspberry-pi.

- the pinger host exhibits a pronounced bimodality not seen for the raspberry-pi.

- the IQRs are very similar (0.025ms vs 0.020ms)

| | pinger.slac.stanford.edu | pinger-raspberry.slac.stanford.edu |
|---|---|---|
| Time series | | |
| RTT frequency | | |
| IPD frequency | | |

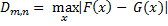

Kolmogorov-Smirnov Test

The Kolmogorov-Smirnov test (KS-test) tries to determine if two datasets differ significantly. The KS-test has the advantage of making no assumption about the distribution of data; in other words, it is non-parametric and distribution free. The method is explained here and makes use of an Excel tool called "Real Statistics". The tests were made using both the raw data and the distributions. Both methods gave similar results except for the 100 Byte packets, where the results differed greatly. The raw data results say that the two samples do not come from the same distribution, with a significant difference, for both packet sizes; using the distributions, however, only the 1000 Byte packet samples are found not to come from the same distribution. Below you will find the graphs for the distributions that were created, with the cumulative frequencies for both cases plotted one above the other (in order to see the difference between the distributions).

| | Raw data - 100 Byte packets | Distribution - 100 Byte packets | Raw data - 1000 Byte packets | Distribution - 1000 Byte packets |
|---|---|---|---|---|
| D-stat | 0.194674 | 0.039323 | 0.205525 | 0.194379 |
| P-value | 4.57E-14 | 0.551089 | 2.07E-14 | 7.32E-14 |
| D-crit | 0.0667 | 0.067051 | 0.0667 | 0.067051 |
| Size of Raspberry | 816 | 816 | 816 | 816 |
| Size of Pinger | 822 | 822 | 822 | 822 |
| Alpha | 0.05 | | | |

If D-stat is greater than D-crit, the samples are not considered to come from the same distribution at the (1-Alpha) confidence level. Remember that D-stat is the maximum difference between the two cumulative frequency curves.

Source: http://www.real-statistics.com/non-parametric-tests/two-sample-kolmogorov-smirnov-test/
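For readers without the Excel tool, the decision rule can be sketched in a few lines of Python. This is an illustrative implementation, not the Real Statistics code: the function names are hypothetical, and D-crit uses the common large-sample approximation, which gives roughly 0.067 for sample sizes of 816 and 822, close to but not necessarily identical with the D-crit values in the table.

```python
import math


def ks_two_sample(a, b):
    """D-stat: maximum vertical gap between the two empirical cumulative distributions."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d_stat = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        # Advance both samples past every value <= x, then compare the CDFs at x.
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d_stat = max(d_stat, abs(i / len(a) - j / len(b)))
    return d_stat


def ks_critical(n, m, alpha=0.05):
    """Large-sample approximation of D-crit for sample sizes n and m."""
    c_alpha = math.sqrt(-0.5 * math.log(alpha / 2.0))  # ~1.358 for alpha = 0.05
    return c_alpha * math.sqrt((n + m) / (n * m))


def differ_significantly(a, b, alpha=0.05):
    """True if D-stat exceeds D-crit, i.e. the samples are judged to differ."""
    return ks_two_sample(a, b) > ks_critical(len(a), len(b), alpha)


print(f"D-crit for n=816, m=822: {ks_critical(816, 822):.4f}")
```

Working directly on the raw samples, as here, corresponds to the "raw data" columns of the table; the "distribution" columns were computed from binned frequency distributions, which this sketch does not attempt to reproduce.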

In these links you can find the files containing the graphs, histograms and the complete analysis for Kolmogorov-Smirnov between Pinger and Pinger-Raspberry and for SITKA.

Futures

Extension to Android systems

Topher: Here is where we would like you to say something about the potential Android opportunity.

Installation process

The installation procedures for a PingER MA are relatively simple, but do require a Unix knowledgeable person to do the install. It typically takes a couple of hours and may require a few corrections pointed out by the central PingER admin. It is possible to pre-configure the Raspberry Pi at the central site and ship it pre-configured to the MA site. However, that requires funding the central site's Raspberry Pi acquisitions, may raise issues of on-going commitment, and may not be acceptable to the cyber security folks at the MA site. We are looking at simplifying the install process, possibly by creating an ISO image.

Robustness and Reliability

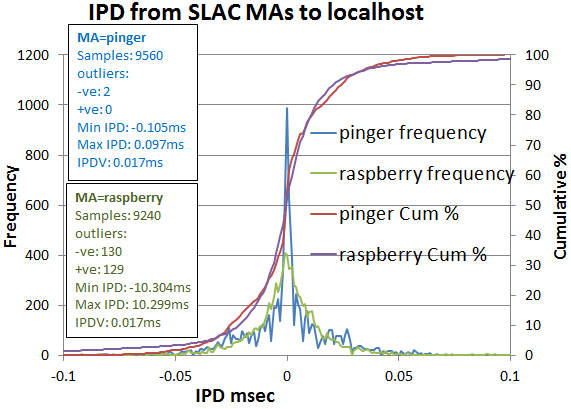

Looking at the IPD distributions it is apparent that:

- the pinger distribution is much sharper (max frequency 987 vs 408 for raspberry-pi)

- there is a slight hint of multi-modality in the pinger distribution, less visible in the raspberry-pi distribution

- the minimum and maximum outliers are about a factor of 100 smaller for pinger than for the raspberry-pi (~ ±0.1ms vs ~ ±10ms)

- the IPDVs are similar (0.017ms), whereas the standard deviations differ by almost a factor of 10 (0.021ms vs 0.227ms). This further illustrates the impact of the smaller outliers seen by pinger.
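The last observation reflects a general property: the IQR (and hence the IPDV) is insensitive to a few extreme values, while the standard deviation is not. The sketch below illustrates this with purely synthetic IPD values; they are made up for the illustration and are not the measured data.

```python
import statistics


def iqr(values):
    """Inter Quartile Range using Python's default quartile method."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    return q3 - q1


# Synthetic IPDs in ms: a tight core, then the same core plus two large outliers.
core = [-0.02, -0.01, 0.0, 0.01, 0.02] * 40
with_outliers = core + [10.0, -10.0]

for label, ipds in (("core only", core), ("with outliers", with_outliers)):
    print(f"{label}: IPDV (IQR) = {iqr(ipds):.3f} ms, stdev = {statistics.stdev(ipds):.3f} ms")
```

In this synthetic case two outliers out of about 200 samples leave the IQR essentially unchanged while inflating the standard deviation by well over a factor of 10.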

Comparing the IPD frequency distributions for the pinger.SLAC and pinger-raspberry MAs to their localhosts with those to TRIUMF it is seen that the differences are washed out as one goes to the longer RTTs.

Though there are large relative differences in the distributions, the absolute differences of the aggregated statistics (medians, IQRs) are sub millisecond and so should not noticeably affect PingER wide area network results.

Though we have not analyzed the exact reason for the relative differences, we make the following observations:

- The factor of 7 difference in the median RTTs is probably at least partially related to the factor of ten difference in the NIC speeds (1Gbps vs 100Mbps) and the factor of 5 difference in the clock speeds (3GHz vs 600MHz).

- Looking at the minimum RTTs for each set of pings it is seen that pinger has a larger ratio of median RTT / IQR compared to the raspberry-pi (0.33 vs 0.096).

- This may be related to the fact that the pinger host is less dedicated to acting as a PingER MA since in addition it runs lots of cronjobs to gather, archive and analyze the data. This may also account for some of the more pronounced multi-modality of the pinger host's RTT distributions.

- Currently we do not have a rationale for the reduced outliers on pinger vs the raspberry-pi.

| | pinger.slac.stanford.edu | pinger-raspberry.slac.stanford.edu |
|---|---|---|
| Time series | | |
| RTT frequency | | |
| Minimum RTTs for each set of 10 pings | | |
| IPD frequency | | |

This still needs to be demonstrated in the field. We also need to more fully understand the solar power requirements.