...

- PingER makes measurements at regular intervals and thus has results by time of day, day of month, month by month, and year by year going back to 1998.

- The regularity of the measurements also makes it possible to measure the timing and impact of events such as earthquakes, tsunamis, social upheavals, routing mistakes, and changes from geostationary satellite links to terrestrial links.

- PingER has a two-decade history of Internet performance measured with a single, consistent mechanism.

- PingER has measurements by country and by region.

Simplicity of setup

- PingER does not require any software to be installed on the targets.

- PingER measures common targets over the standard Internet path to them, rather than selecting only well-connected targets.

- A fixed source (SLAC) avoids the artificial changes that occur when a new Measurement Agent (MA) is placed closer to, or inside, the country being measured where none existed previously. Such a change could show up as a speed rise even though the speeds experienced by citizens may not have changed.

Validity

Throughput estimated from the ping loss and Round Trip Time (RTT) using the Mathis formula (see https://www.slac.stanford.edu/comp/net/wan-mon/thru-vs-loss.html) agreed well with TCP throughput measured using ttcp (see https://en.wikipedia.org/wiki/Ttcp).
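As a rough illustration of this estimate, the Mathis et al. bound for a single TCP Reno stream is throughput ≈ (MSS/RTT) · C/√p, where p is the packet loss rate. The sketch below is a minimal version under stated assumptions: the MSS defaults to 1460 bytes, and C defaults to √(3/2) ≈ 1.22 (the Mathis et al. value for periodic loss); the constant PingER itself uses is not given here, so treat that default as an assumption. The function name is hypothetical.

```python
import math


def mathis_throughput_bps(rtt_s: float, loss: float,
                          mss_bytes: int = 1460,
                          c: float = math.sqrt(1.5)) -> float:
    """Estimate single-stream TCP Reno throughput in bits/s (Mathis et al.).

    rtt_s:     round trip time in seconds.
    loss:      packet loss rate as a fraction (0.01 means 1%).
    mss_bytes: maximum segment size; 1460 bytes is an assumed default.
    c:         model constant; sqrt(3/2) is an assumption, not PingER's
               documented choice.
    """
    if rtt_s <= 0:
        raise ValueError("RTT must be positive")
    if loss <= 0:
        # Loss sits under a square root in the denominator, so zero loss
        # would give an infinite estimate: the formula cannot be used.
        raise ValueError("Mathis formula is undefined for zero packet loss")
    return (mss_bytes * 8 / rtt_s) * c / math.sqrt(loss)


# Example: 100 ms RTT and 1% loss, with c = 1 to keep the arithmetic simple.
print(f"{mathis_throughput_bps(0.100, 0.01, c=1.0) / 1e6:.2f} Mbit/s")
```

The explicit error for zero loss mirrors the limitation discussed below: a period with no observed loss cannot be turned into a throughput estimate.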

...

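- The formula applies to a single TCP stream.

- Multi-stream TCP is commonly used today to achieve high throughput.

- The formula is based on the TCP Reno implementation.

- Today's default congestion control algorithm on Linux is CUBIC (see https://www.noction.com/blog/tcp-transmission-control-protocol-congestion-control).

- The formula fails when there is no packet loss, since the loss rate appears (under a square root) in the denominator and zero loss gives an infinite throughput.

- PingER sends 480 packets a day from an MA to a target, so a day with no loss only shows that the loss rate is below about 0.2% (1/480). In a 30-day month it sends 14,400 packets, so no loss means a loss rate below about 0.007%; in a year (12 such months) it sends 172,800 packets, so no loss means a loss rate below about 0.00058%.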

- No loss in a month is commonly observed for targets with excellent network connectivity (e.g. Singapore).

- When calculating annual throughputs, we filter out months with no loss.
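The loss-rate limits above come from dividing one lost packet by the number of packets sent. The sketch below reproduces them from the 480 packets per day quoted in the text (a "year" here is twelve 30-day months, i.e. 172,800 packets) and shows one way to skip zero-loss months before averaging monthly Mathis estimates into an annual figure. The data layout (a list of (loss, RTT) tuples per month), the constant C = 1 and the use of a simple mean are assumptions for illustration, not PingER's actual processing code.

```python
import math

PACKETS_PER_DAY = 480  # from the text: packets sent per day from one MA to one target


def loss_resolution_pct(days: int) -> float:
    """Loss rate (%) corresponding to a single lost packet over `days` days.
    A period with no loss only tells us the true loss rate is below this."""
    return 100.0 / (PACKETS_PER_DAY * days)


for label, days in [("day", 1), ("30-day month", 30), ("year of 12 such months", 360)]:
    print(f"{label}: {PACKETS_PER_DAY * days:,} packets, "
          f"no loss => loss < {loss_resolution_pct(days):.5f}%")


def annual_throughput_bps(monthly, mss_bytes=1460):
    """Mean of monthly Mathis estimates (with C = 1), skipping zero-loss months.

    monthly: list of (loss_fraction, rtt_seconds) tuples, one per month
             (a hypothetical layout). Returns None if no month had any loss.
    """
    estimates = [
        mss_bytes * 8 / (rtt * math.sqrt(loss))
        for loss, rtt in monthly
        if loss > 0  # zero loss would make the estimate infinite
    ]
    return sum(estimates) / len(estimates) if estimates else None
```

Returning `None` when every month is loss-free makes the "no estimate possible" case explicit instead of silently reporting an infinite or zero throughput.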

No separate estimates of upload and download speeds


...

- No loss in a month means a loss rate below about 0.007%, and no loss in a year (172,800 packets) means a loss rate below about 0.00058%.


- Bit error rates in fibre are typically between 10^-9 and 10^-12 (e.g. https://www.

...

...

...

...

- bit_error_rate.htm). For 1460-byte packets (11,680 bits) these translate to packet error rates of about 0.0012% and 0.0000012%, respectively.

- Possible accommodations:

- Increase the number of pings per measurement:

- Increase the ping rate from 1 per second to 5 per second (i.e. reduce the inter-ping interval from 1 s to 200 ms, the minimum interval allowed for a non-superuser on Linux) and send 5 times as many pings per measurement, so that each measurement takes about the same time as now.

- Increase the number of pings sent per measurement without reducing the interval. Each measurement then takes longer (e.g. if the number of pings is increased 5-fold, a successful measurement with no losses takes 5 times as long). One may be able to compensate by increasing the number of threads. Currently there are 25 threads, and the SLAC MA takes between 1300 and 1750 seconds to measure about 1000 targets. Increasing the number of threads could cause problems (e.g. see https://stackoverflow.com/questions/344203/maximum-number-of-threads-per-process-in-linux), so it would need to be done carefully and monitored.

- One could identify the targets with good connectivity (e.g. all targets in regions such as North America, Europe, East Asia and Australasia, plus special cases such as Singapore) and increase the number of pings only for them in the configuration database. This could be labor-intensive, or would require some additional automation.

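Three pieces of the reasoning in this list can be checked with a few lines of code: the packet error rate implied by a given bit error rate (BER), the number of threads needed if each target gets 5 times as many pings at the same interval, and the kind of automation that would raise the ping count only for well-connected targets. This is a minimal sketch under simplifying assumptions: bit errors are independent, targets are split evenly across threads, run time grows linearly with the number of pings, and the region names, base ping count and function names are hypothetical rather than PingER's configuration.

```python
import math


def packet_error_rate(ber: float, packet_bytes: int = 1460) -> float:
    """Probability that a packet contains at least one bit error,
    assuming independent bit errors: 1 - (1 - BER) ** bits.
    log1p/expm1 keep the result accurate for very small BERs."""
    bits = packet_bytes * 8
    return -math.expm1(bits * math.log1p(-ber))


for ber in (1e-9, 1e-12):
    print(f"BER {ber:g}: packet error rate ~ {100 * packet_error_rate(ber):.7f}%")


def threads_needed(n_targets: int, per_target_s: float, budget_s: float) -> int:
    """Threads needed to finish a cycle within `budget_s`, assuming targets are
    split evenly across threads and each thread measures its share serially."""
    return math.ceil(n_targets * per_target_s / budget_s)


# Per-target time backed out from the text: 25 threads, ~1000 targets, 1300-1750 s.
for cycle_s in (1300, 1750):
    per_target = cycle_s * 25 / 1000  # seconds a thread spends per target
    print(f"cycle {cycle_s} s: 5x pings needs ~"
          f"{threads_needed(1000, 5 * per_target, cycle_s)} threads")


# Hypothetical automation: more pings only for well-connected targets.
WELL_CONNECTED_REGIONS = {"North America", "Europe", "East Asia", "Australasia"}
SPECIAL_CASES = {"SG"}  # e.g. Singapore


def pings_per_measurement(region: str, country_code: str,
                          base: int = 10, factor: int = 5) -> int:
    """`base` and `factor` are illustrative values, not PingER's configuration."""
    if region in WELL_CONNECTED_REGIONS or country_code in SPECIAL_CASES:
        return base * factor
    return base
```

Under the linear model, a 5-fold increase in pings needs about 125 threads to keep the current cycle time, which is why the thread limits mentioned above matter.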


Limited number of targets in a country

In several countries PingER has only a few targets, which, though carefully chosen, may not be fully representative of the country. For example, for the ASEAN countries (the first number in each entry is the number of targets):

| Country | Code | Count |
|---|---|---|
| Brunei | BN | 3/1 |
| Cambodia | KH | 2/1 |
| Indonesia | ID | 40/1 |
| Laos | LA | 1/1 |
| Malaysia | MY | 24/2 |
| Myanmar | MM | 4/1 |
| Philippines | PH | 7/1 |
| Singapore | SG | 3/1 |
| Thailand | TH | 10/1 |
| Vietnam | VN | 4/1 |
| S.E. Asia (total) | | 98 |

This suggests that the PingER results for Laos (1 target), Cambodia (2), Brunei (3), Singapore (3), Myanmar (4) and Vietnam (4) are less representative.
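The selection above can be reproduced from the table. This sketch assumes, as the text implies, that the first number in each entry is the target count, and it uses a hypothetical cut-off of at most 4 targets to mark a country as less representative; the cut-off is inferred from the list above, not a documented PingER threshold.

```python
# Targets per ASEAN country: the first number in each table entry.
TARGETS = {
    "Brunei": 3, "Cambodia": 2, "Indonesia": 40, "Laos": 1, "Malaysia": 24,
    "Myanmar": 4, "Philippines": 7, "Singapore": 3, "Thailand": 10, "Vietnam": 4,
}


def sparse_countries(targets: dict, max_targets: int = 4) -> list:
    """Countries with at most `max_targets` targets, fewest first (ties alphabetical)."""
    return [name for count, name in sorted(
        (count, name) for name, count in targets.items() if count <= max_targets)]


print(sum(TARGETS.values()))      # 98, the S.E. Asia total
print(sparse_countries(TARGETS))  # ['Laos', 'Cambodia', 'Brunei', 'Singapore', 'Myanmar', 'Vietnam']
```

The sum also checks that the per-country counts add up to the regional total of 98.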

How does PingER differ from speed tests?

PingER's approach is similar to that of monitor-io (see https://www.monitor-io.com/monitor-io-vs-speed-tests.html), which states:

> Internet service providers typically publish upload and download speeds to describe the size of the connection to the home. The test itself is not a real-world measure; actual user traffic rarely uses the connection this way. Thus it is very rare, if ever, to actually get the published speed when using the network.
>
> Speed tests identify a server, typically not far from the source, and send multiple large samples of data to it and then back from it. The data is significant enough to extend beyond the capacity of the connection in order to measure the time it takes to move all the information. It is similar to trying to see how many cars can move on a highway by sending a lot of cars on it until all lanes are full. Clearly, not something that should be done too often because it saturates your network.
>
> Most applications (other than large multi-threaded file uploads and downloads) do not push the boundaries of this measurement and therefore, while useful, are not the only test of internet performance that is relevant. Most video applications, adjust the rate of transmission of packets to a comfortable speed to give the best user experience. As long as there are no other issues, most modern connections have more than sufficient bandwidth (in spite of the attempt by some internet service providers to convince subscribers otherwise).
>
> The monitor-io service sends a small number of packets, in a planned sequence, to different destinations to measure performance. The packets are analyzed in a detailed fashion to extract the precise cause of poor performance. It is the equivalent of sending one car to see if the road is congested, and documenting the issues seen by the car. By sending packets to different points on the globe, monitor-io has a better sense of the performance across different paths and provides a more real-world view of how any end-user would experience network performance.
>
> The device that runs the monitor-io tests is tuned to perform only this one function and is independent of any interference from other applications, thereby guaranteeing accurate measurements. When the device is connected directly to the first router on the premises, it will give the most accurate view of the performance of the internet service provider.
>
> The service keeps track of all this data so that a user can get a historical view of the quality and reliability of their internet service. It also alerts them if there is impaired or degraded performance at any time of the day. In fact, if they do want to do a standard bandwidth speed test, monitor-io allows them to run one from directly within our site as a sanity check...but we believe that our 24x7 lightweight test sequence will be much more useful.

- Some measurement tools (e.g. perfSONAR) measure the capacity of a link between a source and a destination by flooding it with data, for example using multiple streams.

- Others flood a single stream for 10 seconds (e.g. NDT), as is done by Cable. The analogy in this case is putting a big bus carrying many people (data) on the road.

- Many regulatory and governmental bodies around the world use physical equipment to continuously measure the maximum speed available on particular lines over a long period of time, and derive their averages from these measurements.

- Ookla filters out the slowest 30% and the fastest 10% of speed tests.

- Some year-on-year speed changes could potentially be down to factors not connected to speeds in real terms. For example, if new M-Lab test servers were activated closer to or inside of the country being measured (where they had not existed previously), this could show as a speed rise, yet the speeds experienced by citizens may not have subjectively changed.

- Since PingER uses fixed MAs, this is not a problem for PingER.
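The Ookla-style filtering mentioned above amounts to a trimmed mean. This is a minimal sketch, assuming the filter is applied to a list of speed values, that the numbers of values dropped are rounded down, and that the remaining values are combined with a simple mean; Ookla's exact procedure (including how results are aggregated after trimming) is not described here, and the function name is hypothetical.

```python
def trimmed_mean(speeds, drop_slowest_pct=30, drop_fastest_pct=10):
    """Mean of `speeds` after discarding the slowest and fastest percentages.
    The numbers of values dropped are rounded down using integer arithmetic."""
    ordered = sorted(speeds)
    n = len(ordered)
    lo = n * drop_slowest_pct // 100       # how many of the slowest to drop
    hi = n - n * drop_fastest_pct // 100   # index just past the last value kept
    kept = ordered[lo:hi]
    if not kept:
        raise ValueError("no samples left after trimming")
    return sum(kept) / len(kept)


print(trimmed_mean(range(1, 11)))  # keeps 4..9 -> 6.5
```

Dropping more of the slow tail than the fast tail pushes the reported figure towards the upper part of the distribution, which is one reason such speeds are not directly comparable with PingER's estimates.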

Providers of Internet speed data by country

...

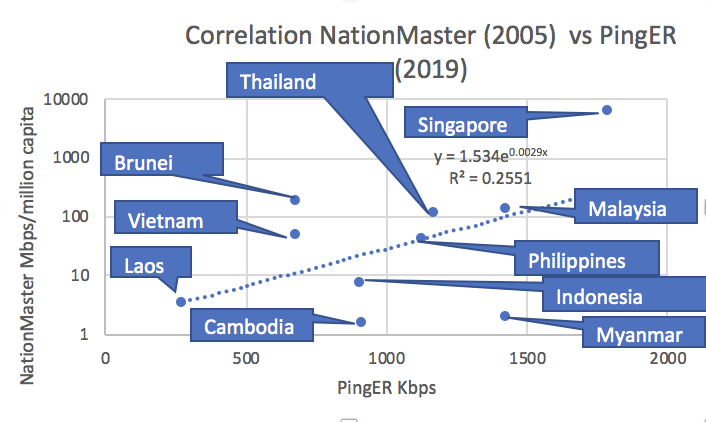

Their most recent data is for 2005, a year for which PingER has data for only four ASEAN nations (Brunei, Indonesia, Malaysia and Singapore). We therefore do not use this data for comparisons.

Akamai

Akamai now focuses its State of the Internet reports on online security threats and DDoS attacks. Its last Internet speed report was published in 2017.

perfSONAR

See PingER and perfSONAR comparison.

...