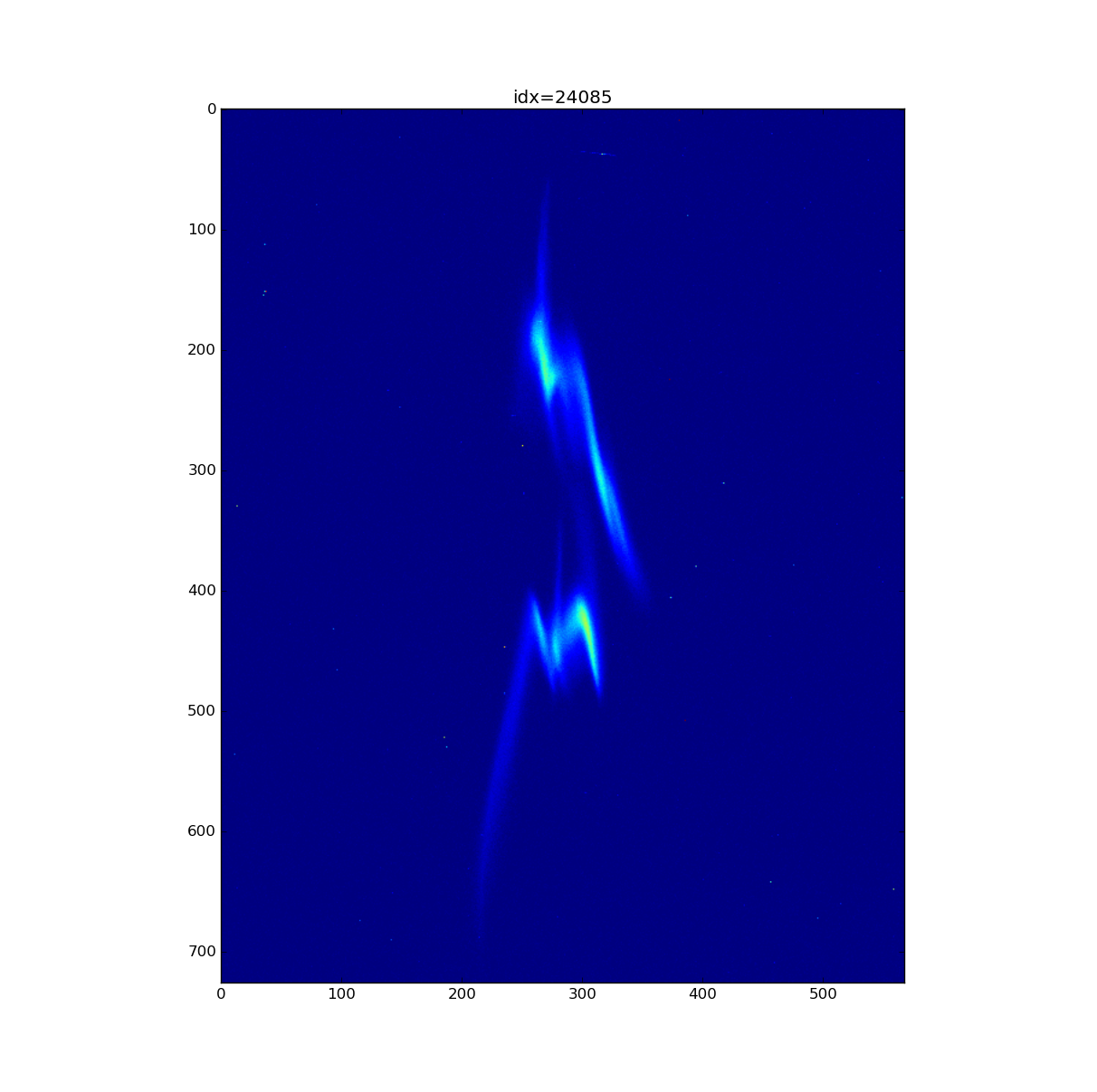

We'll build a simple model in Keras to predict lasing. In the example images, the first shows no lasing (ignore the curves to the right of it) and the second shows lasing in both colors.

This is a binary classification task: each sample gets a label of 0 or 1.

Running

```
python ex01_keras_train.py
...
epoch=0 batch=0 train_loss=0.861 train_step_time=1.54
...
...
```

Code

Data

```
(mlearntut)psanagpu101: ~/github/davidslac/mlearntut $ h5ls -r /reg/d/ana01/temp/davidsch/ImgMLearnSmall/amo86815_mlearn-r069-c0000.h5
/                          Group
/acq.e1.ampl               Dataset {500}
/acq.e1.pos                Dataset {500}
/acq.enPeaksLabel          Dataset {500}
/acq.peaksLabel            Dataset {500}
...
/acq.waveforms             Dataset {500, 16, 250}
/bld.ebeam.ebeamL3Energy   Dataset {500}
...
/evt.fiducials             Dataset {500}
/evt.nanoseconds           Dataset {500}
/evt.seconds               Dataset {500}
/lasing                    Dataset {500}
/run                       Dataset {500}
/run.index                 Dataset {500}
/xtcavimg                  Dataset {500, 363, 284}
```
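A minimal sketch of loading the images and labels from one of these files, assuming `h5py` and NumPy are installed. The dataset names `xtcavimg` and `lasing` come from the listing above; `load_images_and_labels` is a hypothetical helper, not the tutorial's code. Since the real files live on the SLAC filesystem, the usage builds a small stand-in file with fake data in the same layout.

```python
import os
import tempfile

import h5py
import numpy as np


def load_images_and_labels(path):
    """Read the XTCAV images and lasing labels from one h5 file.

    Hypothetical helper: assumes the /xtcavimg and /lasing datasets
    shown in the h5ls listing.
    """
    with h5py.File(path, "r") as h5:
        images = h5["xtcavimg"][:]                  # (n, 363, 284) in the real files
        labels = h5["lasing"][:].astype(np.int64)   # one 0/1 label per image
    return images, labels


if __name__ == "__main__":
    # Tiny stand-in file with the same dataset names (fake data).
    tmpdir = tempfile.mkdtemp()
    path = os.path.join(tmpdir, "fake_mlearn.h5")
    with h5py.File(path, "w") as h5:
        h5["xtcavimg"] = np.zeros((5, 363, 284), dtype=np.int16)
        h5["lasing"] = np.array([0, 1, 1, 0, 1])
    images, labels = load_images_and_labels(path)
    print(images.shape, labels)
```

Reading with `[:]` pulls the whole dataset into memory, which is fine for a 500-event file like this one.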

Code

Discussion

- Use Python 3 semantics: `print(x)` instead of `print x`.

- We use cross-entropy loss; for background see the TensorFlow MNIST tutorial, in particular colah's post on visual information theory.

- The model predicts a probability distribution over the two classes for each sample.

- The truth is a one-hot vector: [0.0, 1.0] or [1.0, 0.0].

- Softmax output combined with cross-entropy loss is a standard choice for classification.

- A utility function takes the 1D vector of labels and returns its one-hot encoding, a 2D array with 2 columns.

- Model: see the code.

- Each convolution output is a sum over all channels of the input and over the kernel rows and columns.

- It is good practice to shuffle the data before each epoch.

- The Keras tutorial uses `fit()`, which expects all the training data in memory; this doesn't scale well to large datasets.
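The one-hot truth, softmax output, and cross-entropy loss from the discussion can be sketched in NumPy. This is an illustration, not the tutorial's utility function: `to_one_hot`, `softmax`, and `cross_entropy` are hypothetical names, and mapping label 0 to [1.0, 0.0] is an assumed convention.

```python
import numpy as np


def to_one_hot(labels, num_classes=2):
    """Turn a 1D vector of integer labels into a 2D one-hot array."""
    labels = np.asarray(labels, dtype=np.int64)
    one_hot = np.zeros((labels.shape[0], num_classes), dtype=np.float32)
    one_hot[np.arange(labels.shape[0]), labels] = 1.0
    return one_hot


def softmax(logits):
    """Row-wise softmax: each row becomes a probability distribution."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def cross_entropy(truth, probs, eps=1e-12):
    """Mean over samples of -sum_k truth[k] * log(probs[k])."""
    return float(np.mean(-np.sum(truth * np.log(probs + eps), axis=1)))


truth = to_one_hot([0, 1, 1])
probs = softmax(np.array([[2.0, 0.0], [0.0, 2.0], [2.0, 0.0]]))
print(truth)
print(cross_entropy(truth, probs))
```

Here the third sample is confidently wrong, so it contributes most of the loss; with a one-hot truth only the log-probability of the correct class counts.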
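To make the convolution point concrete, here is a naive single-filter 2D convolution in NumPy: no padding, stride 1, channels-first layout, and cross-correlation (no kernel flip) as deep-learning libraries compute it. It is a teaching sketch, not how Keras implements its layers; `conv2d_single` is a hypothetical name.

```python
import numpy as np


def conv2d_single(image, kernel):
    """'Valid' 2D convolution producing one output channel.

    image:  (channels, rows, cols)
    kernel: (channels, krows, kcols)
    Each output pixel sums over ALL input channels and over the
    kernel's rows and columns.
    """
    channels, rows, cols = image.shape
    kc, krows, kcols = kernel.shape
    assert kc == channels, "kernel needs one slice per input channel"
    out = np.zeros((rows - krows + 1, cols - kcols + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            patch = image[:, r:r + krows, c:c + kcols]
            out[r, c] = np.sum(patch * kernel)  # sum over channels, rows, cols
    return out


image = np.arange(2 * 4 * 4, dtype=float).reshape(2, 4, 4)
kernel = np.ones((2, 3, 3))
print(conv2d_single(image, kernel).shape)
```

A layer with several filters repeats this once per filter, so its output has one channel per filter.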
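Instead of `fit()`, one can loop over shuffled minibatches by hand, reshuffling each epoch, which matches the style of the `epoch=... batch=... train_loss=...` log lines from the run. A minimal sketch, assuming a `train_step(x, y)` callable that performs one update and returns the loss; with a Keras model compiled without extra metrics, `model.train_on_batch` returns the scalar loss and could play that role. `iterate_minibatches` and `train` are hypothetical names, and the data is random.

```python
import time

import numpy as np


def iterate_minibatches(n_samples, batch_size, rng):
    """Yield index arrays for one epoch, in a fresh random order."""
    order = rng.permutation(n_samples)
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]


def train(train_step, x, y, epochs, batch_size, seed=0):
    """Hypothetical manual training loop; returns every batch loss."""
    rng = np.random.default_rng(seed)
    losses = []
    for epoch in range(epochs):
        # New permutation each epoch, so batches differ between epochs.
        for batch, idx in enumerate(iterate_minibatches(len(x), batch_size, rng)):
            t0 = time.time()
            loss = float(train_step(x[idx], y[idx]))
            step_time = time.time() - t0
            losses.append(loss)
            print("epoch=%d batch=%d train_loss=%.3f train_step_time=%.2f"
                  % (epoch, batch, loss, step_time))
    return losses


if __name__ == "__main__":
    x = np.random.rand(10, 3)
    y = np.random.randint(0, 2, size=10)
    # Stand-in for model.train_on_batch: reports the batch mean label.
    train(lambda xb, yb: yb.mean(), x, y, epochs=2, batch_size=4)
```

Only one minibatch at a time is handed to the model, and the last batch of an epoch may be smaller than `batch_size`.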

Exercises

- Switch the code to use the TensorFlow channel convention. Hint: adjust all the convnet layers.

- See how training time increases or decreases with minibatch size.
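For the first exercise it helps to keep the two layouts straight: Theano-style channels-first arrays are (samples, channels, rows, cols), and TensorFlow-style channels-last arrays are (samples, rows, cols, channels). In Keras the choice is set per layer or globally (`dim_ordering` in Keras 1, `data_format` in Keras 2). A minimal NumPy sketch of the data side, assuming the images get a single channel axis (the raw `xtcavimg` dataset has none); the helper names are hypothetical.

```python
import numpy as np


def add_channel_axis(images, channels_last):
    """(n, rows, cols) -> (n, rows, cols, 1) or (n, 1, rows, cols)."""
    return images[..., np.newaxis] if channels_last else images[:, np.newaxis]


def first_to_last(batch):
    """(n, channels, rows, cols) -> (n, rows, cols, channels)."""
    return np.transpose(batch, (0, 2, 3, 1))


def last_to_first(batch):
    """(n, rows, cols, channels) -> (n, channels, rows, cols)."""
    return np.transpose(batch, (0, 3, 1, 2))


images = np.zeros((8, 363, 284), dtype=np.float32)
batch_first = add_channel_axis(images, channels_last=False)
batch_last = first_to_last(batch_first)
print(batch_first.shape, batch_last.shape)
```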