
...

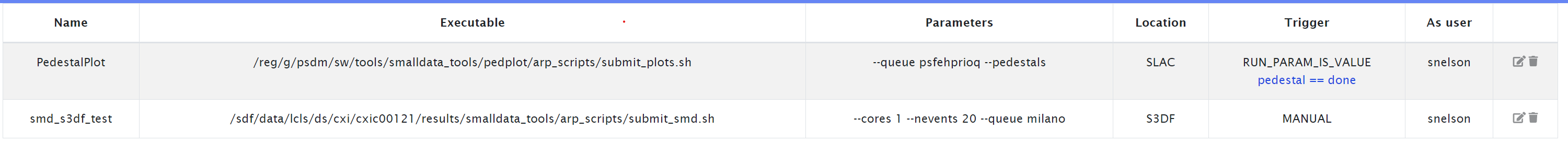

Many MEC user groups rely on tiff files for each detector in each event/shot taken. The necessary jobs are set up in the elog/ARP and are typically triggered automatically. A fairly standard setup looks like this:

This setup now uses smalldata_tools, where the producer is given a few options:

...

--tiff: in addition to the hdf5 files, each dataset with a 2-D shape per event will also be stored as a tiff file in the scratch directory. This means the producer should be run with the --full --image options if you want all detectors as tiff files. If you are only interested in the VISAR tiff files, you can omit --image.

--cores 1: tiff-file production needs to run in single-core mode because the file names use the index of the event in the main event loop. Under the hood, the standard MPI setup passes different events out to separate loops, each over part of the dataset; if each core wrote out tiff files, the files would end up with the same names and overwrite each other.
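To see why --cores 1 matters, here is a toy model of the naming problem. It is not smalldata_tools code: it assumes, for illustration only, that events are dealt out round-robin to the MPI ranks and that each rank names its files by its own local loop index. The function name `tiff_names` and the `evt_NNNN.tiff` pattern are hypothetical.

```python
# Toy model, not smalldata_tools code: why tiff names can collide when
# several MPI ranks each write files. Assumptions (for illustration only):
#   * events are dealt out round-robin to the ranks;
#   * each rank names its files by its own local loop index.

def tiff_names(n_events, n_ranks):
    """Return the file names every rank would write, in rank order."""
    names = []
    for rank in range(n_ranks):
        my_events = range(rank, n_events, n_ranks)  # round-robin share of events
        for local_idx, _event in enumerate(my_events):
            names.append(f"evt_{local_idx:04d}.tiff")
    return names


if __name__ == "__main__":
    for n_ranks in (1, 4):
        names = tiff_names(10, n_ranks)
        # files written vs. distinct names; fewer distinct names means overwrites
        print(n_ranks, len(names), len(set(names)))
```

With one rank, 10 events give 10 distinct names (`1 10 10`); with four ranks, the same 10 events map onto only 3 distinct names (`4 10 3`), so most files would be overwritten. Running on a single core makes the local index identical to the event index, which is what the text relies on.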

To avoid saving separate tiff files for each of the 6015 events of a standard pedestal run, we explicitly limit the number of shots to be translated to 20 by default (most MEC runs have 1 or 10 events). If you are able to work from the hdf5 files directly (i.e. you do not need the --tiff output), you can run the translation on multiple cores to speed up the analysis. For runs with 10 or fewer events, this is usually not necessary.
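The interplay between the 2-D-per-event selection of --tiff and the default 20-shot limit can be illustrated with a small planning function. This is a hypothetical sketch, not the smalldata_tools implementation: it assumes each hdf5 dataset is stacked along events with shape `(n_events, ...)`, so "2-D per event" means a 3-D array, and the dataset names, the function `plan_tiff_files`, and the file-name pattern are all made up for illustration.

```python
# Hypothetical sketch, not the smalldata_tools implementation: plan which tiff
# files a --tiff pass would write. Assumptions (for illustration only):
#   * each hdf5 dataset has shape (n_events, ...), so 2-D data per event
#     means a 3-D array of shape (n_events, rows, cols);
#   * the file-name pattern and dataset names below are made up.

DEFAULT_MAX_EVENTS = 20  # default shot limit described in the text


def plan_tiff_files(shapes, max_events=DEFAULT_MAX_EVENTS):
    """Return tiff file names for all 2-D-per-event datasets,
    translating at most max_events events per dataset."""
    files = []
    for name, shape in sorted(shapes.items()):
        if len(shape) != 3:
            continue  # scalar or 1-D per-event data stays in the hdf5 file only
        n_events = min(shape[0], max_events)
        stem = name.replace("/", "_")
        for event_idx in range(n_events):
            files.append(f"{stem}_evt{event_idx:05d}.tiff")
    return files


if __name__ == "__main__":
    pedestal = {"cam_a/image": (6015, 512, 512)}
    shot_run = {
        "visar_cam/image": (10, 1024, 1024),  # 2-D per event -> tiff
        "cam_a/image": (10, 512, 512),        # 2-D per event -> tiff
        "cam_a/roi_sum": (10,),               # scalar per event -> hdf5 only
    }
    print(len(plan_tiff_files(pedestal)))  # 20: capped at the default limit
    print(len(plan_tiff_files(shot_run)))  # 20: 10 events x 2 image datasets
```

The pedestal run produces 20 files instead of 6015, while a typical 10-shot run is unaffected by the limit and only the image-like datasets become tiff files.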

Reaching S3DF

To reach S3DF, connect to 's3dflogin' via ssh (or from a NoMachine/nx server). To be able to read data or run code, you then need to go to the 'psana' machine pool (ssh psana). Alternatively, you can use JupyterLab in the OnDemand service (request the psana pool of machines).
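The two ssh hops can also be combined into one command with OpenSSH's `-J` (ProxyJump) option, available in OpenSSH 7.3 and later. The sketch below only builds and prints that command; `psana_ssh_command` and the account name `myuser` are placeholders, and from outside SLAC you may need the fully qualified name of the login node rather than the short 's3dflogin' used in the text.

```python
# Sketch: build a one-step ssh command that hops through the S3DF login node
# to the psana pool using OpenSSH's -J (ProxyJump) option (OpenSSH 7.3+).
# Assumptions: "myuser" is a placeholder account; the short host names from
# the text may need to be replaced by fully qualified names from outside SLAC.
import shlex


def psana_ssh_command(user, jump_host="s3dflogin", target="psana"):
    """Return an argv list: ssh to jump_host first, then on to target."""
    return ["ssh", "-J", f"{user}@{jump_host}", f"{user}@{target}"]


if __name__ == "__main__":
    cmd = psana_ssh_command("myuser")
    print(shlex.join(cmd))  # ssh -J myuser@s3dflogin myuser@psana
    # To actually connect, pass cmd to subprocess.run(cmd, check=True).
```

With `-J`, the name 'psana' is resolved by the login node, so it does not need to be reachable directly from your own machine; you still authenticate to both hosts.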

Copy data to your home institution (or laptop):

...